Computing Power 'Hua Shan Debate': Can Open Collaboration Pave the Future for China's Computing Industry?

11/21 2025

On November 20th, the World Computing Conference opened in Changsha and drew wide attention, much as NVIDIA's earnings report had the day before.

Jensen Huang, founder and CEO of NVIDIA, said that GPU products for cloud servers are "already sold out." Global demand for computing power continues to soar. Meanwhile, at the World Computing Conference, a 'Hua Shan Debate' over the direction of China's computing power industry was quietly unfolding.

Sugon and Huawei, two leading forces in China's computing power sector, have both bet on the same future for the industry: intelligent computing clusters. Sugon unveiled scaleX640, billed as the world's first single-cabinet 640-card supernode, while Huawei presented its Ascend 384 supernode solution.

(Image Source: 2025 World Computing Conference)

Training models with hundreds of billions or even trillions of parameters requires a large number of computing cards to work together efficiently at high computing density, and the supernode emerged to meet that need. The crux of the 'debate' is therefore not which company has the most powerful single card, but how computing should be organized in the future: Huawei's strength lies in its fully self-developed ecosystem, whereas Sugon firmly advocates open collaboration.

Capital markets will inevitably read this debate as an indicator of where the AI industry is heading. Where does the future of computing infrastructure lie? What opportunities in the industrial chain remain untapped? The answers to these questions will shape how trillions in capital flow.

The Battle for Computing Power: From 'Internal Strength' to 'Forming Alliances'

The shift in AI competition from single-card performance to the efficiency of clusters with tens of thousands of cards signals that a boom for China's computing power industry is on the horizon. To understand this shift, we first need to step back and look at the bigger picture.

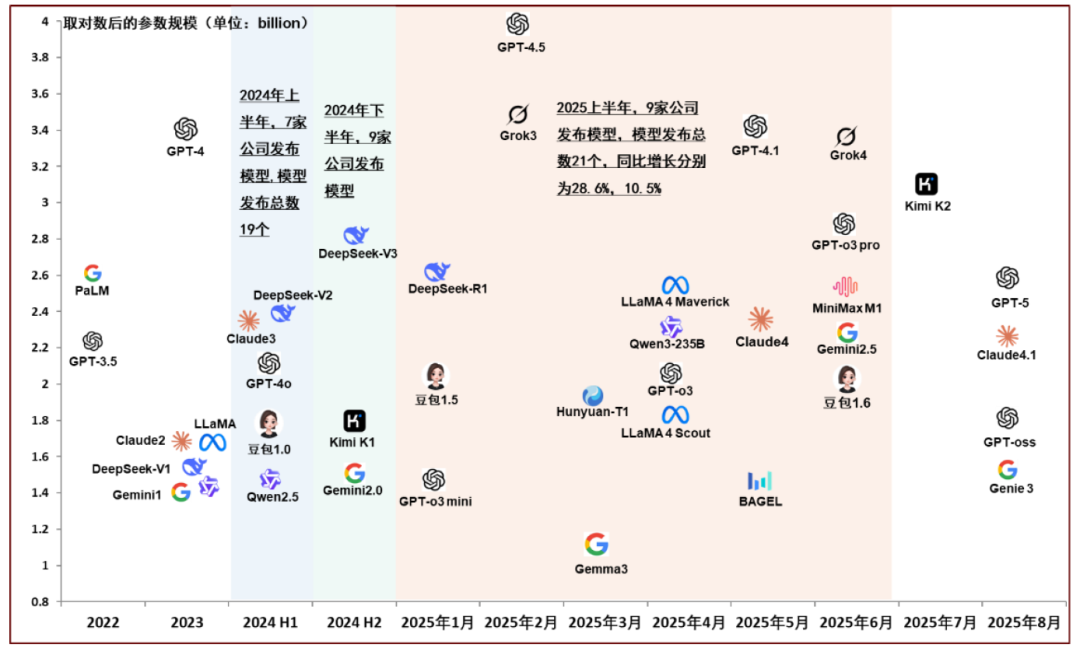

In its report 'Riding the AI Wave, Breaking Through Cyclical Tides', Ernst & Young put the global artificial intelligence market at $196.6 billion in 2023 and projected that it will reach $1.81 trillion by 2030: a roughly ninefold increase in just seven years, implying a compound annual growth rate of 37.3%.
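As a quick sanity check on these figures, the short Python sketch below recomputes the growth multiple and the compound annual growth rate (CAGR) from the two market sizes quoted in the report. The helper name `cagr` is our own illustrative choice; the only inputs are the report's numbers, expressed in billions of US dollars.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes quoted from the Ernst & Young report, in billions of US dollars
market_2023 = 196.6
market_2030 = 1810.0
years = 2030 - 2023  # seven years of growth

multiple = market_2030 / market_2023
rate = cagr(market_2023, market_2030, years)

print(f"growth multiple: {multiple:.1f}x")
print(f"CAGR: {rate:.1%}")
```

The multiple comes out at about 9.2x, consistent with the "ninefold" description, and the CAGR rounds to the 37.3% cited in the report.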

Computing power is the cornerstone of this explosive growth. China's intelligent computing capacity reached 725.3 EFLOPS in 2024, growing more than three times as much as general-purpose computing power.

Initially, everyone perceived this as merely a race to stockpile GPUs, but the trajectory quietly shifted.

As large models grow from hundreds of billions to trillions of parameters, and technologies such as multimodal and embodied intelligence keep pushing the boundaries of application, the need for self-reliance in China's computing power industry becomes ever more pressing. CICC notes that both the expansion of training scale and the complex scheduling required by MoE (Mixture of Experts) models are forcing qualitative changes in computing power systems.

(Image Source: CICC Insights)

In the past, the industry focused on 'internal strength,' and single-card performance was treated as the ultimate benchmark. Only when the industry ran into four 'walls' did new demands on computing power systems emerge.

The first is the 'computing power wall': as Moore's Law slows, each gain in single-chip performance yields diminishing returns. The second is the 'communication wall': bandwidth bottlenecks between cards leave expensive computing power sitting idle. The third is the 'energy consumption wall': enormous heat output has earned data centers a reputation for being 'power-hungry.' The fourth is the 'reliability wall': in a cluster of tens of thousands of cards, a failure in any single node can interrupt training and waste the work done so far.
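The 'reliability wall' is at heart a probability argument. As a rough illustration, assume (hypothetically) that every card fails independently with the same small probability p during a given training window; the chance that at least one of N cards fails is then 1 - (1 - p)^N, which climbs quickly as N grows. The per-card probability of 0.0001 below is an arbitrary placeholder, not a measured figure, and real hardware failures are neither perfectly independent nor uniform.

```python
def prob_any_failure(num_cards: int, per_card_failure_prob: float) -> float:
    """Probability that at least one of num_cards fails in one window.

    Assumes (hypothetically) independent failures with identical probability.
    """
    return 1 - (1 - per_card_failure_prob) ** num_cards


# Hypothetical per-card failure probability for one training window
P_FAIL = 1e-4

for cards in (100, 1_000, 10_000, 100_000):
    print(f"{cards:>7} cards: {prob_any_failure(cards, P_FAIL):.1%}")
```

Under these assumptions, the chance of an interruption rises from about 1% with 100 cards to about 63% with 10,000 cards, which is why reliability becomes a wall rather than a detail at the scale of tens of thousands of cards.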

The challenge is that China's computing power landscape is fragmented. For historical reasons and because of supply chain constraints, it is common for domestic intelligent computing centers to run a mix of heterogeneous systems. AI accelerator cards with different instruction sets and software stacks form isolated 'computing power islands.' Closed vertical ecosystems worsen this fragmentation and waste, forcing enterprises to reinvent the wheel for each type of hardware and driving up collaboration costs.

This explains why the concept of 'supernodes' has drawn so much high-level attention. Since individual cards can only do so much, winning this war depends on 'forming alliances.' Whoever can orchestrate tens of thousands of computing cards like a disciplined army will lift China's computing power industry to new heights.

This trend is rewriting the rules of the AI computing power game, and new opportunities are beginning to break through the soil across the computing power industrial chain.

Breaking Down Barriers: China's Computing Power Has No 'Lonely Champion'

The choice of technological routes often mirrors divergences in industrial philosophy.

The Ernst & Young report notes that after the model explosion and capital frenzy of 2023–2024, the AI industry is entering a more rational growth cycle, with capital shifting its focus from chasing hype to real-world deployment. The two routes represented by Sugon and Huawei offer capital markets two very different 'treasure maps.'

Huawei's full-stack ecosystem follows a logic similar to the 'Apple model' of the smartphone era: vertical integration from chips to systems to applications, pursuing peak performance and a consistent experience within a closed ecosystem. Along this route, capital tends to favor 'chain leader' enterprises with deep positions in core chip design and foundational software.

On the other hand, the route of open collaboration resembles a vibrant 'Android model' or 'open-source world.' It acknowledges the reality of a diverse and heterogeneous landscape in domestic computing power and seeks to establish order amidst this diversity.

This route grows out of a genuine need in the industry: given the generational gap in single-card performance, how can computing power from different brands and architectures be organized efficiently and used to the fullest? The core strategic value of an open architecture is that it offsets weaker single-card performance through the scale of the cluster and the efficiency of system-level collaboration.

Industry practice along these lines is already accelerating. Take Sugon's newly released scaleX640 supernode: it achieves a 20-fold increase in computing density and supports scaling out to clusters of hundreds of thousands of cards. Yet what the market is watching most closely is the architectural philosophy behind it: hardware support for acceleration cards from multiple brands and software compatibility with mainstream AI computing frameworks.

This suggests that computing power infrastructure is becoming a standardized, universally usable public resource, much like water and electricity, and that investment opportunities across the industrial chain are being redistributed accordingly.

Under the 'Apple model,' value is highly concentrated at the top; whereas under the 'Android model,' opportunities permeate every capillary of the industrial chain. Upstream component suppliers adhering to open standards, software developers specializing in middleware optimization, and system integrators capable of flexibly scheduling heterogeneous computing power will all find their ecological niches within a broader ecosystem.

AI investment hotspots have thus shifted from focusing on singular technological breakthroughs to emphasizing overall cluster capabilities and ecological value.

The 'team-up' approach China's computing power industrial chain is taking to meet these challenges is becoming increasingly clear: building systemic competitiveness through architectural innovation, hardware-software collaboration and decoupling, and engineering innovation.

Scenarios are diverse, and demands are fragmented. Only open architectures can adapt to the 'AI+' needs of various industries at the lowest cost and fastest speed.

CICC points out that domestic computing power manufacturers are focusing on interconnect innovation, supernode architecture, and large-scale system solutions, competing for market share through systemic capabilities. Guohai Securities, for its part, believes that as chip localization continues to advance, this open architecture approach should accelerate industrial collaboration and shorten overall R&D cycles, leading to a revaluation of the sector.

The 'Hua Shan Debate' in the supernode domain thus reflects China's valuable exploration of uncharted territory in core technologies. Whether the goal is peak performance or an open and inclusive domestic ecosystem, both routes mark the maturation of China's computing power industry. From individual strength to strategic alliances, the different routes ultimately converge.

Developers, entrepreneurs, and investors alike will find inspiration and direction in this landscape. Through this seemingly fierce 'route debate' and intensive innovation, the capabilities of China's digital foundation are being built up layer by layer. Ultimately, the beneficiaries will be the new quality productive forces rising rapidly on the surge of computing power.

Source: Songguo Finance