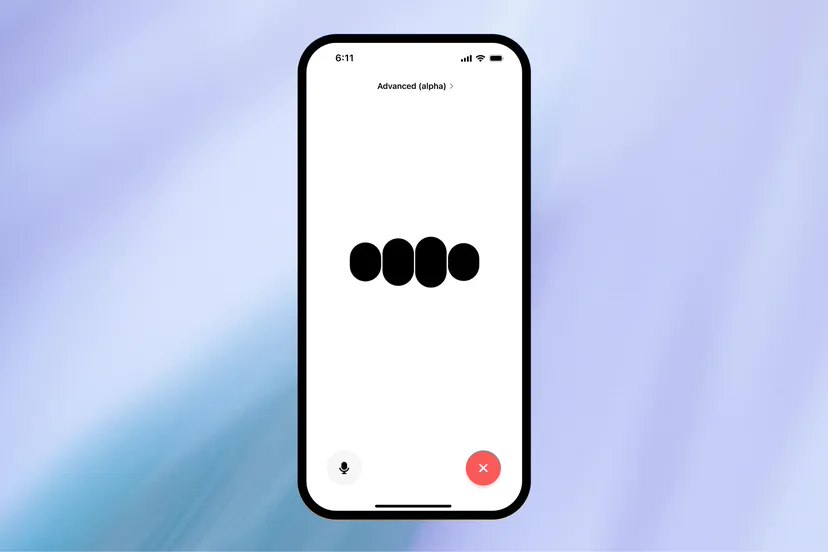

OpenAI opens GPT-4o's speech mode to some paid subscribers, offering more natural real-time conversations

![]() 07/31 2024

07/31 2024

![]() 595

595

OpenAI announced on the 30th (local time) that it would immediately open GPT-4o's speech mode (IT Home note: Alpha version) to some ChatGPT Plus users and gradually expand it to all ChatGPT Plus subscribers this fall.

In May this year, Mira Murati, Chief Technology Officer of OpenAI, mentioned in a speech:

In GPT-4o, we trained a brand-new end-to-end unified model across text, vision, and audio, which means all inputs and outputs are processed by the same neural network.

As GPT-4o is our first model that combines all these modalities, we are still in the early stages of exploring its capabilities and limitations.

OpenAI originally planned to invite a small group of ChatGPT Plus users to test GPT-4o's speech mode at the end of June, but the company announced a delay in June, stating that more time was needed to refine the model and improve its ability to detect and reject certain content.

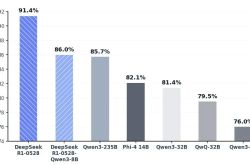

According to previously disclosed information, the average speech feedback delay of the GPT-3.5 model is 2.8 seconds, while the GPT-4 model has a delay of 5.4 seconds, making it less suitable for voice communication. However, the upcoming GPT-4o can significantly reduce latency, enabling nearly seamless conversations.

The GPT-4o speech mode features rapid response and a voice comparable to that of a real person. OpenAI even claims that the GPT-4o speech mode can perceive emotional tones in speech, including sadness, excitement, or singing.

Lindsay McCallum, a spokesperson for OpenAI, said, "ChatGPT will not impersonate the voices of others, including individuals and public figures, and will prevent outputs that differ from preset voices."