Can server manufacturers escape the fate of thin profit margins in the AI era?

09/19 2024

Large AI models are sparking a new round of 'compute hunger.'

Recently, OpenAI's newly released o1 large model has once again pushed the boundaries of large model capabilities. Its reasoning abilities comprehensively surpass those of its predecessor, GPT-4o. This enhanced capability stems from integrating a chain of thought into the reasoning process: o1 breaks a question down and answers it step by step, and the interconnected chain of thought leads to more reliable answers.

This upgrade in reasoning mode also translates into greater demand for compute power. Because the model adds RL (Reinforcement Learning) post-training on top of LLM training, the compute required for both inference and training will increase further.

'The biggest challenge in AI research is a lack of compute power – AI is essentially brute-force computation,' summarized Xu Zhijun, Deputy Chairman and Rotating Chairman of Huawei.

As a result, tech giants have been steadily increasing their investments in AI infrastructure in recent years. Alongside NVIDIA's rising stock prices, AI server manufacturers, who sell the 'AI shovel,' have also experienced double-digit growth in revenue this quarter.

Moreover, as the demand for AI compute power continues to grow and infrastructure decentralizes, server manufacturers stand to earn increasingly more from AI.

The robust performance of manufacturers is a testament to the deep integration between servers and AI.

In the AI training phase, server manufacturers have adopted various methods to speed up the entire training process, turning heterogeneous AI servers into efficient 'distributors' of training tasks. To address the scarcity of compute hardware, they have also drawn on their experience with large server clusters to deploy platforms that enable mixed training of large models using GPUs from NVIDIA, AMD, Huawei Ascend, Intel, and other vendors.

With a deeper understanding of AI, from training to hardware optimization, server manufacturers are evolving beyond their traditional role of hardware assembly to become more valuable players in the AI ecosystem.

In particular, to support the construction of intelligent computing centers, many server manufacturers have adjusted the hardware infrastructure of their AI server clusters to meet AI demands. Furthermore, with the deep integration of domestic compute chips, customized solutions built by AI server manufacturers are being widely deployed.

On the software front, AI-savvy server manufacturers are exploring the productivity attributes of AI within infrastructure. As server manufacturers introduce large AI models and agents, their collaboration with AI application customers is becoming closer, bringing in more revenue from software solutions.

Undoubtedly, the transformation of the AI era has reshaped the industry logic of compute power carriers.

AI server manufacturers are delivering more intensive and efficient compute power to users in various ways. In this era of 'compute hunger,' AI server manufacturers are becoming increasingly important 'water sellers.'

In the AI industry, those who sell the 'shovel' are making money first

The accelerated investments of AI giants are enabling AI server manufacturers, the 'shovel sellers,' to start making money.

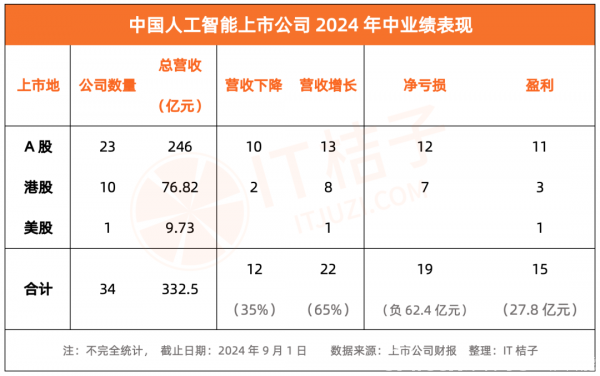

According to ITjuzi data, as of September 1, listed AI companies are still predominantly loss-making. Specifically, the 15 profitable listed AI companies posted a combined net profit of 2.78 billion yuan, while the 19 loss-making companies posted a combined net loss of 6.24 billion yuan.

One reason AI has yet to achieve overall profitability for the industry is that AI giants are still in an accelerated investment phase.

Statistics show that in the first half of this year, China's three AI giants (BAT) spent a total of 50 billion yuan on AI infrastructure, more than double the 23 billion yuan spent in the same period last year. Globally, Amazon's fixed capital expenditures grew by 18% in the previous quarter, marking its return to a cycle of capital expansion. American tech giants such as Microsoft, Amazon, Google, and Meta (all members of the so-called 'Mag7') have also reached a consensus to continue investing heavily in AI.

'The risk of underinvesting in AI far outweighs the risk of overinvesting,' declared Sundar Pichai, CEO of Alphabet, Google's parent company, emphasizing his aggressive stance and dismissing concerns about an investment bubble.

Riding the wave of increased investments, AI server players providing AI infrastructure are raking in profits.

In the AI era, global veteran server manufacturers HP and Dell have experienced a 'second spring.' According to HP's latest earnings report (Q3 2024), its server business grew by 35.1% year-over-year. Dell's previous quarter's financial results (covering May-July 2024) showed an 80% year-over-year increase in revenue from its server and networking business.

Similarly, in China, Lenovo's latest quarterly financial report noted that, driven by growing AI demand, its Infrastructure Solutions Group achieved quarterly revenue exceeding US$3 billion for the first time, a 65% year-over-year increase. Inspur's interim report showed a 90.56% year-over-year increase in net profit attributable to shareholders of the listed company, reaching 597 million yuan. Meanwhile, Digital China achieved a 17.5% year-over-year increase in net profit attributable to shareholders of the listed company, with its Shenzhou Kuntai AI server business generating revenue of 560 million yuan, up 273.3% year-over-year.

Revenue growth exceeding 50% is a testament to the large-scale deployment of AI servers.

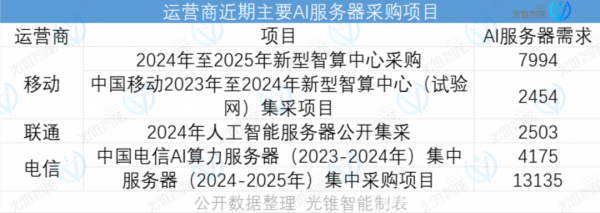

Besides cloud providers, telecom operators are another major source of demand for AI servers. Since 2023, operators have increased their investments in AI compute power, with demand for AI servers from telecom and mobile operators more than doubling.

Concurrently, demand for intelligent computing centers is rapidly driving the adoption of AI servers. Speaking at the 2024 Global AI Chip Summit, Yu Mingyang, head of Habana China (an Intel subsidiary focused on AI chips), said that over 50 government-led intelligent computing centers have been established in the past three years, with more than 60 projects currently in planning or under construction.

The robust demand for AI servers is rewriting the growth structure of the entire server industry.

According to a recent report by TrendForce, with large CSPs (Cloud Service Providers) increasing their procurement of AI servers, the output value of AI servers is projected to reach US$187 billion in 2024, a growth rate of 69%. In contrast, annual shipments of general-purpose servers are expected to grow by only 1.9%.

Going forward, as CSPs gradually complete the construction of intelligent computing centers, AI server growth will further accelerate with the broader demand for edge computing. The sales of AI servers will also shift from bulk procurement by CSPs to smaller-scale purchases for enterprise edge computing.

In other words, the bargaining power and profitability of AI server manufacturers will further improve as procurement models evolve.

Server manufacturers will continue to earn more from AI. This trend stands in stark contrast to the lengthy payback periods facing AI server customers.

Take the compute leasing business model as a reference, for which the industry has long since run the numbers. Including the supporting equipment of an intelligent computing center (storage, networking), and ignoring the annual decline in compute prices, the payback period for NVIDIA's H100 GPU as a compute card can stretch to five years, while even the most cost-effective option, the NVIDIA GeForce RTX 4090 GPU, has a payback period of over two years.

Given this, helping customers make the most of AI servers has become the core competitive focus for the entire server industry.
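
The payback arithmetic behind such estimates can be sketched in a few lines. This is only an illustration: the function names and every input figure below are hypothetical placeholders in arbitrary currency units, not actual H100 or RTX 4090 prices or leasing rates. The second function shows why ignoring the annual decline in compute prices makes the estimate optimistic.

```python
from typing import Optional


def simple_payback_years(capex: float, annual_revenue: float, annual_opex: float) -> float:
    """Undiscounted payback period: upfront cost divided by net annual cash flow."""
    net = annual_revenue - annual_opex
    if net <= 0:
        raise ValueError("net annual cash flow must be positive")
    return capex / net


def payback_years_with_price_decline(
    capex: float,
    first_year_net: float,
    annual_decline: float,
    max_years: int = 50,
) -> Optional[int]:
    """Whole years needed to recover capex when net income shrinks each year.

    annual_decline is the assumed yearly fall in leasing income (0.10 means 10%).
    Returns None if the investment is not recovered within max_years.
    """
    recovered = 0.0
    net = first_year_net
    for year in range(1, max_years + 1):
        recovered += net
        if recovered >= capex:
            return year
        net *= 1.0 - annual_decline
    return None


# Hypothetical inputs: 100 units of capex, 30 of leasing revenue, 10 of operating cost.
print(simple_payback_years(100.0, 30.0, 10.0))
print(payback_years_with_price_decline(100.0, 20.0, 0.10))
```

With these placeholder inputs, a constant net income gives a five-year payback, while a 10% yearly decline in income pushes recovery out to the seventh year.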

Accelerating and stabilizing: AI server manufacturers showcase their prowess

'The deployment of large models is complex, involving advanced technologies and processes such as distributed parallel computing, compute power scheduling, storage allocation, and large-scale networking,' summarized Feng Lianglei, Senior Product Manager of H3C's Smart Computing Product Line, addressing the challenges in AI server deployment.

These challenges correspond to two major issues in AI server deployment: compute optimization and large-scale usage.

A sales representative told Guangzhui Intelligence that common customer demands include hardware specifications, support for AI training, and the capability for large-scale clusters.

Compute optimization mainly addresses heterogeneous computing in AI servers. Current industry solutions focus on two areas: optimizing compute allocation and enabling heterogeneous chip collaboration.

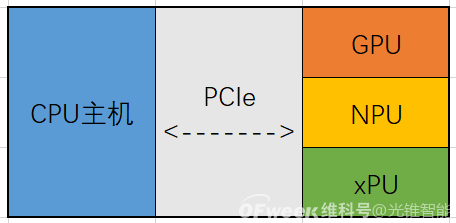

Since AI servers no longer rely solely on CPUs for task processing but instead collaborate with compute hardware (GPUs, NPUs, TPUs, etc.), the industry's mainstream solution involves using CPUs to distribute computing tasks to dedicated compute hardware.

This compute allocation model is similar in principle to NVIDIA's CUDA. The more compute hardware the CPU 'drives' simultaneously, the greater the overall compute power.

Principle of heterogeneous compute allocation
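
As a rough illustration of the allocation principle described above, the sketch below models a host (the CPU) handing tasks to whichever accelerator frees up first. The `Device` class, the `dispatch` function, the device names, and the throughput figures are all hypothetical; this is a toy greedy scheduler, not any vendor's actual runtime.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Device:
    # Only busy_until takes part in ordering, so the heap yields the earliest-free device.
    name: str = field(compare=False)
    throughput: float = field(compare=False)  # work units per second (hypothetical)
    busy_until: float = 0.0


def dispatch(tasks, devices):
    """Greedy host-side dispatch: each task goes to the device that frees up first.

    tasks is a list of (task_id, work_units) pairs.
    Returns (assignment, makespan), where makespan is when the last device finishes.
    """
    heap = list(devices)
    heapq.heapify(heap)
    assignment = {}
    for task_id, work in tasks:
        device = heapq.heappop(heap)
        device.busy_until += work / device.throughput
        assignment[task_id] = device.name
        heapq.heappush(heap, device)
    makespan = max(d.busy_until for d in heap)
    return assignment, makespan


tasks = [(f"task{i}", 10.0) for i in range(4)]
_, one_device = dispatch(tasks, [Device("gpu0", 10.0)])
_, two_devices = dispatch(tasks, [Device("gpu0", 10.0), Device("gpu1", 10.0)])
print(one_device, two_devices)
```

In this toy setup, doubling the number of identical devices the host drives halves the time needed to finish the same batch of tasks.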

At the hardware level, this translates to AI servers becoming 'building blocks' that can stack compute hardware. AI servers are becoming larger and thicker, evolving from the standard 1U (unit of server height) to the more common 4U and 7U configurations.

To further optimize compute power, many server manufacturers have proposed their own solutions. For instance, H3C's Aofei Compute Platform supports fine-grained allocation, with compute assigned in increments as small as 1% and memory in increments as small as 1 MB, and schedules both on demand. Lenovo's Wanquan Heterogeneous Smart Computing Platform, for its part, uses a knowledge base to automatically identify AI scenarios, algorithms, and compute clusters. Customers simply input scenarios and data, and the platform automatically loads the optimal algorithm and schedules the best cluster configuration.

In terms of heterogeneous chip collaboration, the primary challenge lies in synchronizing servers built on different compute hardware.

Given the ongoing shortage of NVIDIA GPUs, many intelligent computing centers mix GPUs from vendors such as NVIDIA, AMD, Huawei Ascend, and Intel, or even train a single large AI model on a mix of them. This approach raises problems of communication efficiency, interoperability, and coordinated scheduling throughout the AI training process.

AI Server Equipped with AI Chips from Different Vendors (Source: TrendForce)

'The process of training AI using server clusters can be simplified as "rounds" of training. A task is first split across all the compute hardware, and the results are aggregated and used to update the model before the next round of computation. If the process is not well coordinated, for example if some GPUs are slower or communication is poor, the rest of the compute hardware has to "wait." Over many rounds, the entire AI training process can be significantly delayed,' a technical expert explained to Guangzhui Intelligence, using a vivid analogy to illustrate the practical problem that heterogeneous hardware collaboration addresses.
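
The 'waiting' effect the expert describes can be made concrete with a small model. This is a simplified sketch that assumes each round ends only when the slowest worker finishes and ignores communication time entirely; the per-round step times are hypothetical.

```python
def synchronous_training_time(step_times, rounds):
    """Total time when every round is gated by the slowest worker."""
    return rounds * max(step_times)


def idle_fraction(step_times):
    """Share of total worker time spent waiting for the slowest worker in a round."""
    slowest = max(step_times)
    waiting = sum(slowest - t for t in step_times)
    return waiting / (slowest * len(step_times))


# Hypothetical per-round compute times in seconds: three fast cards and one slow one.
mixed = [1.0, 1.0, 1.0, 2.0]
uniform = [1.0, 1.0, 1.0, 1.0]

print(synchronous_training_time(uniform, rounds=100))
print(synchronous_training_time(mixed, rounds=100))
print(idle_fraction(mixed))
```

In this example a single card that is twice as slow doubles the total training time, and the three faster cards sit idle for half of every round.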

Currently, the mainstream solution to this problem involves leveraging cloud management systems (including scheduling, PaaS, and MaaS platforms) to finely partition the entire AI training (and neural network) process.

For example, H3C's solution involves building a heterogeneous resource management platform that uses a unified collective communication library to manage GPUs from different vendors, thereby bridging the gaps between them. The multi-chip mixed training solution on Baidu's Baige Heterogeneous Computing Platform fuses various chips into one large cluster to support the entire training task.

Despite slight variations, the goal of these solutions, as summarized by Xia Lixue, Co-founder and CEO of Wuwenxinqiong, is that 'before turning on the faucet, we don't need to know which river the water comes from.'
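
The 'faucet' idea can be illustrated with a vendor-agnostic averaging step, the kind of operation a collective communication library provides as all-reduce. The sketch below is a pure-Python stand-in under simplifying assumptions: the `Worker` class and `all_reduce_mean` function are hypothetical names, the vendor labels are illustrative, and no real communication library is called.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    vendor: str                    # label only; the reduce step never branches on it
    gradient: list                 # local gradient computed in this round
    received: list = field(default_factory=list)


def all_reduce_mean(workers):
    """Average gradients element-wise and hand the same result back to every worker."""
    if not workers:
        raise ValueError("need at least one worker")
    length = len(workers[0].gradient)
    if any(len(w.gradient) != length for w in workers):
        raise ValueError("all gradients must have the same length")
    mean = [sum(w.gradient[i] for w in workers) / len(workers) for i in range(length)]
    for w in workers:
        w.received = list(mean)
    return mean


cluster = [
    Worker("vendor_a", [1.0, 2.0]),
    Worker("vendor_b", [3.0, 4.0]),
    Worker("vendor_c", [5.0, 6.0]),
]
print(all_reduce_mean(cluster))
```

The training loop only calls `all_reduce_mean`; which vendor's chip produced each gradient is invisible to it, which is the property a unified communication layer aims for.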

Resolving heterogeneous computing issues means that a broader range of hardware options becomes available for intelligent computing clusters. Server, compute chip, and AI infrastructure manufacturers are also working together to maintain the stability of large-scale compute clusters built with AI servers.

Meta's experience with compute clusters shows that large AI model training is not always smooth sailing. Statistics show that during synchronous training on Meta's 16K H100 cluster, there were 466 job anomalies within 54 days, or roughly one every 2.8 hours on average. To quickly restore server clusters to operational status after issues arise, a mainstream solution involves adding a 'firewall' during the training process.

For instance, Lenovo's solution employs 'magic to defeat magic.' By predicting AI training failures using AI models, Lenovo's solution optimizes backups before breakpoints occur. Huawei Ascend and Ultra Fusion take a more straightforward approach. Upon detecting a node failure, they automatically isolate the faulty node and resume training from the nearest checkpoint.
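
The isolate-and-resume approach can be sketched as a small simulation. It is a toy model under stated assumptions, not any vendor's implementation: the `run_training` function, the node names, the checkpoint interval, and the failure schedule are all hypothetical.

```python
def run_training(total_steps, checkpoint_every, nodes, failures):
    """Simulate training that isolates a failed node and resumes from the last checkpoint.

    failures maps a step number to the node that fails while that step is running.
    Returns (healthy_nodes, attempts), where attempts counts every step attempt,
    including the failed one and any steps redone after rolling back.
    """
    healthy = set(nodes)
    pending = dict(failures)       # each scheduled failure fires only once
    last_checkpoint = 0
    step = 0
    attempts = 0
    while step < total_steps:
        attempts += 1
        failed_node = pending.pop(step + 1, None)
        if failed_node is not None:
            healthy.discard(failed_node)      # isolate the faulty node
            if not healthy:
                raise RuntimeError("no healthy nodes left")
            step = last_checkpoint            # roll back to the nearest checkpoint
            continue
        step += 1
        if step % checkpoint_every == 0:
            last_checkpoint = step            # save progress
    return healthy, attempts


healthy, attempts = run_training(
    total_steps=10, checkpoint_every=4, nodes=["a", "b", "c"], failures={7: "b"}
)
print(sorted(healthy), attempts)
```

Here node `b` fails during step 7, so training rolls back to the checkpoint at step 4 and needs 13 attempts to complete 10 steps. More frequent checkpoints reduce the redone work at the cost of more time spent saving state.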

Overall, AI server manufacturers are enhancing their value proposition by understanding AI, optimizing compute power, and upgrading stability.

Leveraging AI's transformation of the industry, AI server players are rejuvenating the classic ToB server industry with a vertically integrated approach.

Is AI making server manufacturers more valuable?

Looking back, server manufacturers have long been 'trapped' in the middle of the smile curve.

After the Third Industrial Revolution, the server market expanded significantly, giving rise to numerous server manufacturers.

In the PC era, the Wintel alliance's X86 architecture nurtured international server giants like Dell and HP. In the cloud computing era, the surge in digital demand fueled the emergence of OEM manufacturers like Inspur and Foxconn.

However, despite revenues in the tens to hundreds of billions annually, server manufacturers' net profit margins have consistently hovered around single digits. Under Inspur's JDM (Joint Design Manufacturing) model, which prioritizes manufacturing efficiency, net profit margins are often just 1-2%.

'The smile curve forms not because of problems inherent in the manufacturing process but because manufacturers have failed to grasp core technologies and patents within the industry chain, leading to standardized production and a lack of irreplaceability,' an electronics analyst at Guotai Junan Securities explained to Guangzhui Intelligence, addressing the challenges faced by server manufacturers.

In the AI era, the value of server manufacturers is evolving as AI redefines compute power applications. Vertical integration capabilities for AI have become the focal point of competition among server manufacturers.

Focusing on the hardware level, many server manufacturers have delved into the construction of intelligent computing centers.

For instance, to improve PUE (Power Usage Effectiveness), manufacturers like H3C, Inspur, Ultra Fusion, and Lenovo have introduced liquid-cooled rack solutions. H3C, in particular, has not only introduced silicon photonics switches (CPO) to reduce overall data center energy consumption but has also AI-optimized its entire networking product line. Meanwhile, to overcome the limitations of NVIDIA's compute chips, manufacturers like Digital China and Lenovo are actively promoting the adoption of domestic compute chips, collectively contributing to the leapfrog development of China's chip industry.

At the software level, server manufacturers are actively tapping into the productivity attributes of AI to expand their businesses beyond selling hardware.

The most common approach is for server manufacturers to launch AI enablement platforms. Digital China, for example, has integrated model compute management, enterprise private-domain knowledge, and AI application engineering modules into its Digital China Learning platform. By building Agent capabilities into the server usage process through its native AI platform, Digital China has made the user experience "better and better with each use".

Li Gang, Vice President of Digital China, commented on this, saying, "We need such a platform to embed the Agent knowledge framework that has been verified by the enterprise environment, and at the same time, we can continuously accumulate new Agent frameworks. This is the value of the Digital China Learning AI application engineering platform."

H3C has fully leveraged its existing advantages in network products and used AIGC to achieve anomaly detection, trend prediction, fault diagnosis, and intelligent tuning in the field of communications. In addition to the operation and maintenance segment, H3C has also launched the Baiye Lingxi AI large model, attempting to enter the business segments of different industry customers and expand the original ToB hardware business scope by using a general-purpose large model to "drive" industry-specific large models.

Through continuous technological innovation and product refinement, we seek new breakthroughs in the AI trend and unleash the new momentum of AI infrastructure.

As Chen Zhenkuan, Vice President of Lenovo Group and General Manager of Lenovo Infrastructure Solutions Group China, summarized, server manufacturers are reaping the rewards of significantly increased profit margins by continuously deepening the process of AI vertical integration.

Server manufacturers are embarking on their own AI era as they leap out of manufacturing.