Will future large models all run on AMD cards?

10/21 2024

Preface:

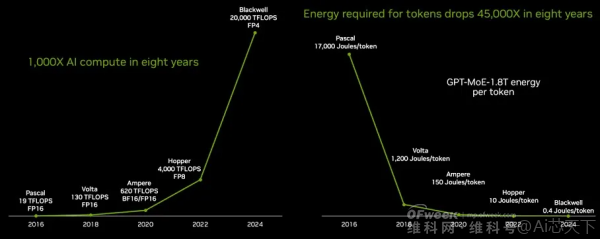

Computing centers are currently seeing an important shift: some large-model developers are gradually reducing their reliance on pre-training, which has significantly increased demand for inference and model fine-tuning.

This shift has profoundly affected downstream large-model and application developers and has prompted upstream chipmakers to adjust their strategies quickly.

However, competing on the strengths of a single chip alone is no longer enough to meet market demand.

Author | Fang Wensan

Image Source | Internet

AMD's new products directly target NVIDIA's Blackwell series of chips

At the Advancing AI 2024 conference in San Francisco, AMD CEO Lisa Su unveiled a new generation of Ryzen processors, Instinct AI accelerators, and EPYC server CPUs.

These products span the full range of AI computing and clearly target NVIDIA's Blackwell series of chips.

According to international media reports, NVIDIA has dominated the data center GPU market in recent years, approaching a monopoly position, while AMD has consistently ranked second in market share.

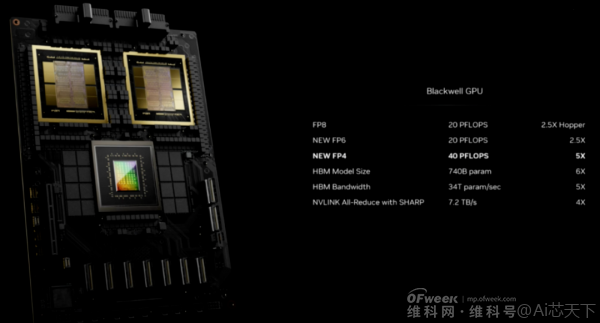

Earlier this year, NVIDIA unveiled its flagship B200; NVIDIA says Blackwell-based systems deliver up to 30 times the large-model inference performance of its previous-generation Hopper GPUs.

The B200 is slated to enter mass production and ship in the fourth quarter of this year, which would further widen NVIDIA's lead over its competitors.

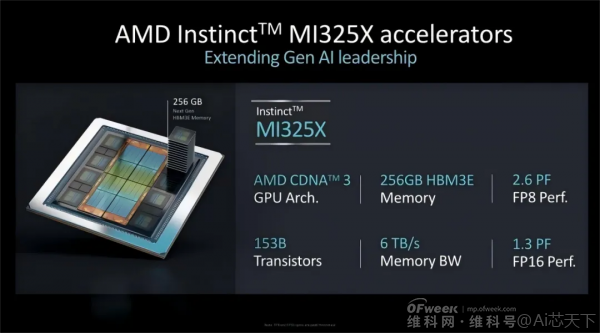

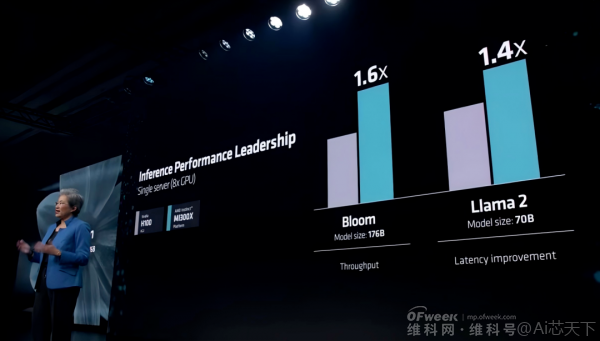

However, with the release of the MI325X, AMD aims to compete vigorously with NVIDIA's Blackwell chips.

AMD's Instinct MI325X platform integrates eight MI325X GPUs and supports up to 2TB of HBM3E memory.

Its theoretical peak performance reaches 20.8 PFLOPs at FP8 precision and 10.4 PFLOPs at FP16 precision.

At the system level, the GPUs are linked by AMD's Infinity Fabric interconnect at up to 896 GB/s, and the platform's aggregate memory bandwidth reaches 48 TB/s.

Additionally, power consumption per GPU rises from 750W on the MI300X to 1000W.
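The platform totals above can be broken down per GPU. The minimal sketch below simply divides each total by eight, assuming identical GPUs and figures that add linearly; `PLATFORM_TOTALS` and `per_gpu` are illustrative names, not part of any AMD tool.

```python
# Platform totals for an 8-GPU Instinct MI325X system, as quoted in the article.
PLATFORM_TOTALS = {
    "hbm3e_tb": 2.0,       # total HBM3E capacity in TB (rounded figure)
    "fp8_pflops": 20.8,    # theoretical peak at FP8
    "fp16_pflops": 10.4,   # theoretical peak at FP16
    "mem_bw_tbps": 48.0,   # aggregate memory bandwidth in TB/s
}
NUM_GPUS = 8


def per_gpu(totals: dict, num_gpus: int) -> dict:
    """Split each platform total evenly across identical GPUs (assumption)."""
    return {key: value / num_gpus for key, value in totals.items()}


per_device = per_gpu(PLATFORM_TOTALS, NUM_GPUS)
for key, value in per_device.items():
    print(f"{key}: {value:g}")
```

The result is about 0.25 TB of HBM3E, 2.6 PFLOPs at FP8, 1.3 PFLOPs at FP16 and 6 TB/s of memory bandwidth per GPU. AMD lists 256 GB of HBM3E per MI325X, so the 2TB total is a rounded 2,048 GB.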

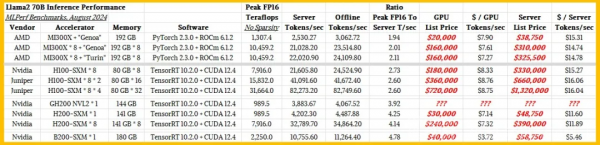

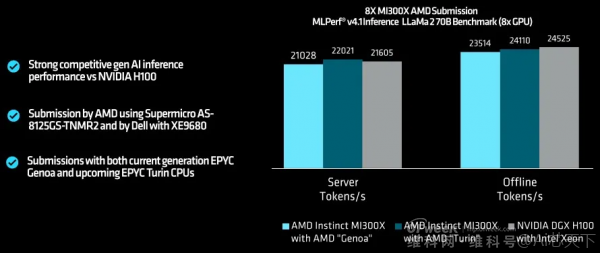

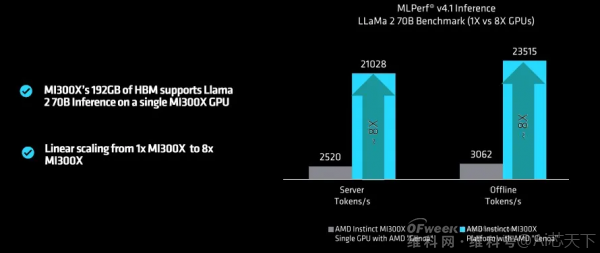

Compared with NVIDIA's H200 HGX, the Instinct MI325X platform offers 1.8 times the memory capacity and 1.3 times the memory bandwidth and computing power.

In terms of inference performance, it also achieves approximately 1.4 times the performance of the H200 HGX.

When training mainstream models such as Meta's Llama 2, a single MI325X outperforms a single H200, while the two are roughly on par at the cluster level.

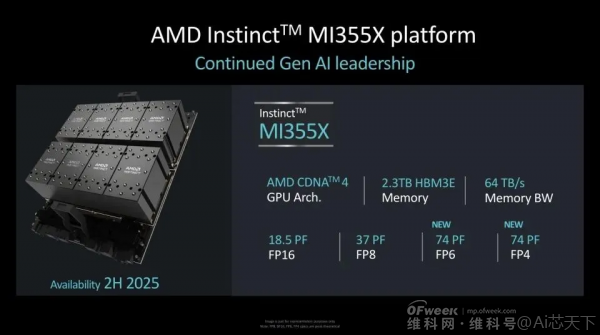

Furthermore, AMD highlighted its next-generation Instinct GPU accelerator, the MI355X, part of the MI350 series.

Built on the next-generation CDNA 4 architecture using a 3nm process, the MI355X raises memory capacity to 288GB of HBM3E.

The MI355X adds support for the FP4 and FP6 data types, which are intended to raise AI training and inference performance while keeping accuracy at acceptable levels.
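What a 4-bit floating-point format gives up can be made concrete with a small sketch. It assumes the common FP4 E2M1 element layout from the Open Compute Project (OCP) Microscaling (MX) specification, whose non-negative values are 0, 0.5, 1, 1.5, 2, 3, 4 and 6; the article does not say which encoding AMD uses, and the helper names here are hypothetical. A block of values shares one scale factor, and each value is rounded to the nearest representable magnitude.

```python
# Non-negative magnitudes of an FP4 E2M1 element (OCP MX layout, assumed here);
# the sign bit mirrors them to negative values.
E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_fp4_block(values: list[float]) -> tuple[float, list[float]]:
    """Quantize a block of values to FP4 (E2M1) with one shared scale.

    Simplification: the scale is absmax / 6 (any float), whereas MX formats
    restrict the shared scale to a power of two.
    """
    absmax = max(abs(v) for v in values)
    scale = absmax / E2M1_MAGNITUDES[-1] if absmax > 0 else 1.0
    quantized = []
    for v in values:
        mag = abs(v) / scale
        # Round to the nearest representable magnitude, then restore the sign.
        nearest = min(E2M1_MAGNITUDES, key=lambda q: abs(q - mag))
        quantized.append(nearest if v >= 0 else -nearest)
    return scale, quantized


def dequantize(scale: float, quantized: list[float]) -> list[float]:
    """Map FP4 codes back to real values using the shared scale."""
    return [scale * q for q in quantized]


block = [0.12, -0.5, 0.9, 3.0]
scale, q = quantize_fp4_block(block)
print(scale, q)
print(dequantize(scale, q))
```

Here the block `[0.12, -0.5, 0.9, 3.0]` comes back as `[0.0, -0.5, 1.0, 3.0]`: the largest values survive, 0.9 is rounded to 1.0, and 0.12 collapses to zero. Halving the bits per value relative to FP8 is what lets hardware move and compute more values per second, at the cost of this kind of rounding.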

AMD expects the next-generation CDNA 4 architecture to deliver up to a 35-fold improvement in AI inference performance and a 7-fold increase in AI computing power over CDNA 3, along with roughly 50% more memory capacity and bandwidth.

Therefore, next year's Instinct MI355X AI GPU is projected to achieve significant performance gains.

Based on AMD's currently published data, the Instinct MI355X AI GPU boasts 2.3 PF of computing power at FP16, a 1.8-fold improvement over the MI325X and on par with NVIDIA's B200.

In FP6 and FP4 formats, its computing power reaches 9.2 PF, nearly double that of B200 in FP6 format and comparable in FP4 format.
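The generation-over-generation figures can be cross-checked with the numbers quoted in this article. The sketch assumes that the MI355X figures are per GPU and that the MI325X per-GPU FP16 figure is the 8-GPU platform's 10.4 PF divided by eight; the variable names are illustrative.

```python
MI325X_PLATFORM_FP16_PF = 10.4  # 8-GPU MI325X platform, peak at FP16
MI325X_GPUS = 8
MI355X_FP16_PF = 2.3            # single MI355X at FP16 (assumed per GPU)
MI355X_FP4_FP6_PF = 9.2         # single MI355X at FP4/FP6 (assumed per GPU)

# Per-GPU FP16 throughput of the MI325X, derived from the platform total.
mi325x_fp16_pf = MI325X_PLATFORM_FP16_PF / MI325X_GPUS
# Generational FP16 uplift, MI355X over MI325X.
fp16_uplift = MI355X_FP16_PF / mi325x_fp16_pf
# How much more throughput the MI355X quotes at FP4/FP6 than at FP16.
low_precision_multiplier = MI355X_FP4_FP6_PF / MI355X_FP16_PF

print(f"MI325X per-GPU FP16: {mi325x_fp16_pf:.2f} PF")
print(f"MI355X vs MI325X at FP16: {fp16_uplift:.2f}x")
print(f"MI355X FP4/FP6 vs FP16: {low_precision_multiplier:.1f}x")
```

This gives 1.3 PF per MI325X at FP16 and a ratio of about 1.77, consistent with the quoted 1.8-fold improvement; the FP4/FP6 figure is four times the FP16 figure.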

As such, the MI355X is regarded as AMD's true competitor to NVIDIA's B200 GPU chip.

Production issues with Blackwell GPUs have now been resolved

Previous reports indicated that the Blackwell architecture products encountered production issues, specifically low yield rates, which affected shipments.

As the first products to adopt TSMC's CoWoS-L packaging technology, NVIDIA's Blackwell GPUs use local silicon interconnect (LSI) bridges to form high-density interconnects between dies, an approach designed to accommodate a variety of high-performance chips.

However, differences in the coefficients of thermal expansion (CTE) between GPU chips, RDL interposers, LSI bridges, and substrates posed challenges during production.
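To give a sense of scale for the CTE problem, the sketch below estimates the free differential expansion between a silicon die and an organic substrate. Every number in it is an illustrative assumption, not a Blackwell or CoWoS-L specification: about 2.6 ppm/K for silicon, 15 ppm/K for the substrate, a 235 K temperature swing (cooling from roughly 260 °C reflow to 25 °C) and a 50 mm span. It ignores bonding constraints and warpage.

```python
# Illustrative (assumed) coefficients of thermal expansion, in ppm/K.
CTE_SILICON_PPM = 2.6             # typical value for a silicon die
CTE_ORGANIC_SUBSTRATE_PPM = 15.0  # hypothetical organic substrate value


def mismatch_displacement_um(cte_a_ppm: float, cte_b_ppm: float,
                             delta_t_k: float, span_mm: float) -> float:
    """Free-expansion mismatch between two materials over a span.

    strain = |alpha_a - alpha_b| * delta_T; displacement = strain * span.
    Ignores bonding constraints, warpage and stress relaxation.
    """
    strain = abs(cte_a_ppm - cte_b_ppm) * 1e-6 * delta_t_k
    return strain * span_mm * 1000.0  # convert mm to micrometres


# Hypothetical: cooling by 235 K over a 50 mm package span.
d = mismatch_displacement_um(CTE_SILICON_PPM, CTE_ORGANIC_SUBSTRATE_PPM, 235.0, 50.0)
print(f"{d:.0f} um of differential expansion")
```

Under these assumptions the two materials would try to move about 146 µm relative to each other across the span. In an assembled package that movement is constrained, so it appears instead as warpage and stress on the interconnects.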

According to Morgan Stanley, the decline in yield rates during the post-packaging stage of NVIDIA's Blackwell GPUs exacerbated the already tight supply of CoWoS packaging and HBM3e memory.

Nonetheless, the firm believes NVIDIA has internally resolved these issues.

Morgan Stanley predicts that NVIDIA will ship up to 450,000 Blackwell GPUs in the fourth quarter of this year, potentially generating revenues of $5 billion to $10 billion.
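The Morgan Stanley forecast can be translated into an implied average revenue per GPU. This sketch assumes the whole $5 billion to $10 billion range is attributable to the 450,000 Blackwell GPUs, which the article does not state explicitly; because 450,000 is described as an upper bound, the results are best read as rough floors rather than prices.

```python
def implied_revenue_per_unit(revenue_usd: float, units: int) -> float:
    """Average revenue per unit, assuming all revenue comes from these units."""
    return revenue_usd / units


UNITS = 450_000  # upper-bound shipment forecast quoted above
for revenue in (5e9, 10e9):
    per_unit = implied_revenue_per_unit(revenue, UNITS)
    print(f"${revenue / 1e9:.0f}B / {UNITS:,} GPUs = ${per_unit:,.0f} per GPU")
```

This works out to roughly $11,100 to $22,200 per GPU.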

As Blackwell GPU orders are already backlogged into the second half of next year, unfulfilled orders are driving demand for Hopper GPUs.

NVIDIA's Blackwell GPU production issues were initially seen as an opportunity for AMD, but this opportunity failed to materialize as anticipated.

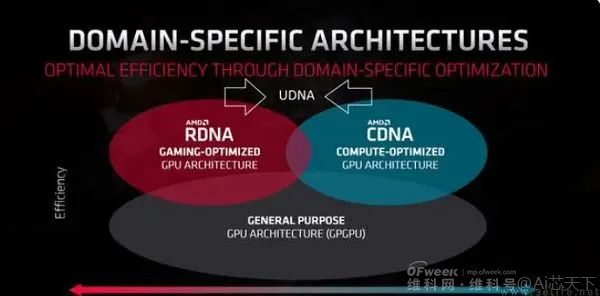

AMD's UDNA competes with NVIDIA's CUDA ecosystem

At Advancing AI 2024, AMD further outlined its AI chip roadmap, announcing that the MI350 series based on the CDNA 4 architecture will launch next year, while the MI400 series will adopt an even more advanced CDNA architecture.

However, challenging NVIDIA's leading CUDA ecosystem will require significant long-term efforts from AMD.

UDNA, AMD's long-term plan to merge its RDNA (consumer) and CDNA (data center) GPU architectures into a single unified architecture, will need time to demonstrate its potential.

AMD's primary obstacle to expanding its market share is the strong barrier NVIDIA has built in AI software development with its CUDA platform, which attracts developers and binds them to NVIDIA's ecosystem.

CUDA, NVIDIA's proprietary parallel computing platform and programming model, has become the de facto standard for AI and high-performance computing workloads.

AMD's challenges extend beyond hardware performance to building a software ecosystem that attracts developers and data scientists.

To this end, AMD has increased investments in its ROCm (Radeon Open Compute) software stack and recently announced doubling the inference and training performance of AMD Instinct MI300X accelerators in widely used AI models.

Additionally, the company noted that over a million models can now run seamlessly on the AMD Instinct platform, triple the number at the launch of MI300X.

Exiting the high-end graphics card market to focus on AI

For gamers, the fact that mainstream game developers optimize specifically for NVIDIA products makes NVIDIA cards hard to resist.

Thus, AMD's temporary exit from the high-end graphics card market is viewed as a strategic business decision.

Notably, this is not AMD's first concession to NVIDIA in the high-end market.

Between 2017 and 2019, AMD effectively stepped back from the high-end graphics card space: the Vega 64 and Radeon VII faded from the market, and the company relied on the RX 580 and RX 590 to compete in the mid-to-low-end segment.

AMD's current strategic focus is on the mid-to-low-end market, where it aims to grow its user base and use that large community to attract developers.

Moreover, AMD's strategic retreat could signal its commitment to AI.

With Dr. Lisa Su, AMD's Chairman and CEO, asserting that the AI supercycle has just begun, AMD views AI as a critical direction for future growth and is investing heavily.

Faced with the rapidly growing AI chip market, AMD is determined not to miss out.

While its CPU business thrives thanks to the success of the Zen architecture, its discrete graphics cards, particularly high-end gaming cards, have underperformed.

Prioritizing AI product development, and so playing to its strengths while avoiding its weaknesses, is therefore a rational choice for AMD.

While NVIDIA maintains a significant advantage in AI training performance, the real profit center in AI lies in inference workloads.

In essence, training means "educating" an AI model with datasets, while inference is the process by which a trained model makes predictions on new data.
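The distinction between training and inference can be illustrated with a toy model rather than a neural network. In this hypothetical sketch, training fits a single weight `w` in `y = w * x` by gradient descent, looping over the dataset many times and updating `w` on each pass; inference simply applies the learned `w` to new inputs, one computation per input.

```python
def train(xs: list[float], ys: list[float], lr: float = 0.01, epochs: int = 500) -> float:
    """Training: repeatedly adjust w to reduce mean squared error on the data."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return w


def infer(w: float, new_xs: list[float]) -> list[float]:
    """Inference: apply the already-trained weight to unseen inputs."""
    return [w * x for x in new_xs]


train_x = [1.0, 2.0, 3.0, 4.0]
train_y = [2.0, 4.0, 6.0, 8.0]  # generated by y = 2x
w = train(train_x, train_y)
print(round(w, 3))
print([round(p, 2) for p in infer(w, [5.0, 10.0])])
```

With data generated by y = 2x, the learned weight converges to 2.0, so inference on 5 and 10 returns 10 and 20. Training is a one-off, iterative cost, whereas inference runs every time the model is queried.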

Notably, AMD's aggressive pricing for its accelerated server products contrasts sharply with that of NVIDIA, whose net profit margin exceeds 50%.

This strategy, similar to the approach AMD used to weaken Intel's position in the CPU market, may lure some cost-conscious customers away from NVIDIA.

AMD's top priority is securing more market orders

According to TechInsights, NVIDIA shipped approximately 3.76 million data center GPUs in 2023, capturing 97.7% of the market share, similar to 2022 levels.

In contrast, AMD and Intel shipped 500,000 and 400,000 units, respectively, accounting for 1.3% and 1% of the market.

For AMD, its current goal is not to surpass NVIDIA but to actively secure more market orders.

As one of NVIDIA's few competitors, AMD is viewed by tech giants as an alternative supplier that lets them diversify.

Currently, AI chips contribute a growing proportion of AMD's business.

AMD's second-quarter financial report revealed that the AMD Instinct MI300X GPU contributed over $1 billion in revenue during the quarter, with annual sales projected to exceed $4.5 billion, accounting for approximately 15% of the company's overall sales.

Notably, companies including Microsoft, OpenAI, Meta, Cohere, Stability AI, Lepton AI, and World Labs have adopted AMD Instinct MI300X GPUs in their generative AI solutions.

In addition to support from tech giants, AMD's cost-effectiveness is a critical factor in market capture.

Due to its technological leadership and high market share, NVIDIA's products command a premium price.

In contrast, AMD's MI300 series outperforms NVIDIA's H100 while carrying a more affordable price tag.

This cost-effectiveness gives AMD huge development potential in the AI chip market.

Based on these analyses, Dr. Su raised AMD's AI chip revenue forecast for 2024 to over $4.5 billion, up from her April estimate of $4 billion.

This underscores AMD's robust growth momentum and promising prospects in the AI chip market.

Similar to NVIDIA, AMD plans to introduce new AI chip products annually.

Conclusion:

Although NVIDIA's recovering production capacity may bring renewed competitive pressure, AMD has gradually found its competitive rhythm.

Looking ahead to 2025, the two chip giants will face off again in the GPU field, marking a crucial year for testing their comprehensive strength.

As technology advances and GPUs find wider applications, both companies will continue to optimize GPU architectures, enhance computing efficiency, and jointly propel the advent of the intelligently connected era.

References: Chaowaiyin: "AMD Unveils Its Most Powerful AI Chip, Targeting NVIDIA's Blackwell for 2025 Launch"; Zhiding Toutiao: "AMD Steps Up Efforts in GPU Computing, Pressuring NVIDIA"; Jiqizhixin: "Is AMD's GPU Running AI Models Finally a Yes? Fearlessly Challenging NVIDIA's H100"; Dianzifeishouyouwang: "AMD's Most Powerful AI Chip Outperforms NVIDIA's H200, But Market Remains Unconvinced: Ecosystem is the Biggest Weakness?"; Meiguyanjiushe: "AMD Poised to Seize NVIDIA's AI Leadership"; Baogaocaijing: "OORT Founder: AMD's Challenge to NVIDIA in the AI Chip Market"; NO Yimo: "AMD's Most Powerful AI Chip Debuts, Potentially Outperforming NVIDIA Completely"; Sanyishenghuo: "Abandoning High-End Graphics Card Market, AMD May Have Chosen to 'Go All In' on AI"; Saiboche: "Challenging NVIDIA, AMD Becomes an AI Chip Company"; Dashengtangdianzi: "NVIDIA and AMD: The History and Future of GPU Competition"