Benz goes end-to-end too: is it rushing into the first tier of intelligent driving?

11/06 2024

Before 2023, Mercedes-Benz's advanced driver assistance systems (ADAS) offered only conventional functions such as adaptive cruise control and lane centering. Then the new long-wheelbase E-Class introduced a highway pilot assistance system, which took Mercedes-Benz 12 months to develop. In early November 2024, Mercedes-Benz launched its mapless L2++ intelligent driving system, which took 14 months to develop and will go into mass production with the CLA EV in April next year. If all goes well, in about half a year Mercedes-Benz will likely become the first luxury brand to adopt end-to-end intelligent driving, and the fourth automaker in China to adopt a camera + multi-sensor vision solution, after XPeng, Geely's Ji Yue brand, and Huawei. What interests us more is how effective Benz's end-to-end system will be, and how it compares with the more mainstream solutions: Huawei's Qiankun ADS 3.0, Li Auto's and Xiaomi's E2E+VLM, and XPeng's AI Eagle Eye.

The takeover rate is lower than Xiaomi's, but comfort details still need optimization?

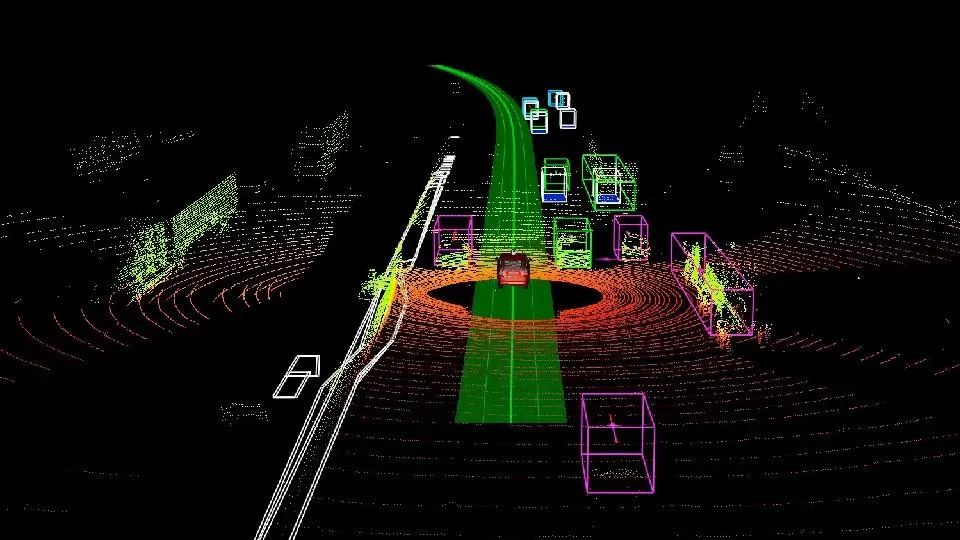

Benz's mapless L2++ full-scenario advanced intelligent driving integrates the BEV+Transformer architecture into an end-to-end AI model. The main change is a much greater reliance on deep learning. Because end-to-end merges the previously separate perception, decision-making, and execution modules, data no longer has to be handed from one module to the next, so information flows faster. At the same time, an end-to-end system needs extensive data analysis and learning to keep optimizing its decisions. In theory, the more it is used, the closer it gets to human driving habits and styles.
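
To make the modular-versus-end-to-end distinction concrete, here is a deliberately tiny Python sketch. It is not Mercedes-Benz's or Momenta's architecture: the three-value "frame", the hand-written `perceive()`/`decide()` stages, and the linear "model" are all hypothetical stand-ins. The modular path chains hand-written stages through a fixed interface, while the end-to-end path is one function from raw input to steering, fitted by gradient descent to a synthetic human driver's steering.

```python
from typing import List, Sequence

Frame = Sequence[float]  # toy "raw sensor frame": three numbers in [0, 1]

# --- Modular pipeline: hand-written stages joined by a fixed interface ---
def perceive(frame: Frame) -> float:
    # Hypothetical engineered feature; note that it ignores frame[1] entirely.
    return frame[0] - frame[2]

def decide(feature: float) -> float:
    # Hypothetical hand-tuned rule turning the feature into a steering command.
    return -0.3 * feature

def modular_drive(frame: Frame) -> float:
    return decide(perceive(frame))

# --- End-to-end: one learned mapping from raw input to steering ---
def train_end_to_end(frames: List[Frame], human_steering: List[float],
                     lr: float = 0.1, epochs: int = 2000) -> List[float]:
    """Fit linear weights by full-batch gradient descent on the mean squared
    error between the model's steering and the human driver's steering."""
    n, dim = len(frames), len(frames[0])
    w = [0.0] * dim
    for _ in range(epochs):
        grad = [0.0] * dim
        for x, y in zip(frames, human_steering):
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for i in range(dim):
                grad[i] += 2.0 * err * x[i] / n
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

def end_to_end_drive(w: List[float], frame: Frame) -> float:
    return sum(wi * xi for wi, xi in zip(w, frame))

# Synthetic "human" demonstrations that also react to frame[1].
frames = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0], [0, 1, 1]]
human = [-0.4 * a + 0.2 * b + 0.1 * c for a, b, c in frames]
weights = train_end_to_end(frames, human)
print([round(w, 3) for w in weights])  # approximately [-0.4, 0.2, 0.1]
```

Because the hand-picked feature in `perceive()` discards `frame[1]`, the modular chain can never reproduce the driver's response to it, whereas the end-to-end fit recovers all three weights from the demonstrations. Real systems replace the linear map with large neural networks and vast amounts of driving data, but the toy mirrors the idea of learning driving behavior directly from what human drivers did.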

The core of this system is that it drops LiDAR and does not rely on high-definition maps, using a camera + multi-sensor solution instead. How should we understand Benz's intelligent driving system? The end-to-end concept is now fairly clear: multi-sensor (including LiDAR) and pure-vision solutions are simply different hardware choices automakers make for perceiving the environment. Whether it's Huawei, Li Auto, XPeng, or Xiaomi, the next stage of their autonomous driving is a One Model form similar to Tesla's Full Self-Driving (FSD), in which a single model handles perception, decision-making, and execution. In essence, end-to-end uses large amounts of real-world data to teach a large model how to drive safely.

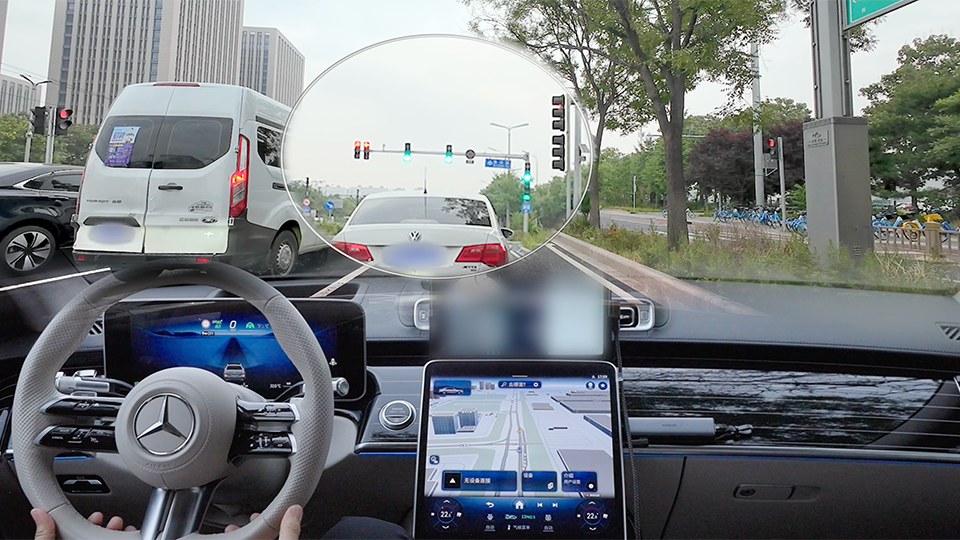

So, after the upgrade from BEV+Transformer to end-to-end, is Benz's L2++ system easy to use? About three months have passed since the first road tests in China began in early August this year. Basic urban Navigate on Autopilot (NOA) functions already work: activation as soon as the car sets off, switching between main and auxiliary roads, entering and exiting roundabouts, U-turns, traffic light recognition, pedestrian and cyclist avoidance, unprotected turns, and interaction with vehicles alongside. This basically covers the intelligent driving functions of Huawei Qiankun ADS 3.0.

With urban NOA activated, the system's driving style on city roads is deliberately neither conservative nor aggressive. For example, when it meets non-motorized vehicles traveling in the same direction on a narrow road, or motor vehicles temporarily parked on the road, it makes reasonable evasive maneuvers, keeping a safe distance without unnecessary swerving and passing quite close to the obstacle. By contrast, after OTA 1.4.0 Xiaomi's SU7 recently showed problems in its same-direction avoidance logic, such as stopping to wait or actively downgrading. Compared with that, Benz's system is significantly more aggressive. However, at misaligned intersections, especially those whose ground markings are chaotic because of construction, its decisions become more conservative and it prioritizes yielding, while still maintaining reasonable traffic efficiency.

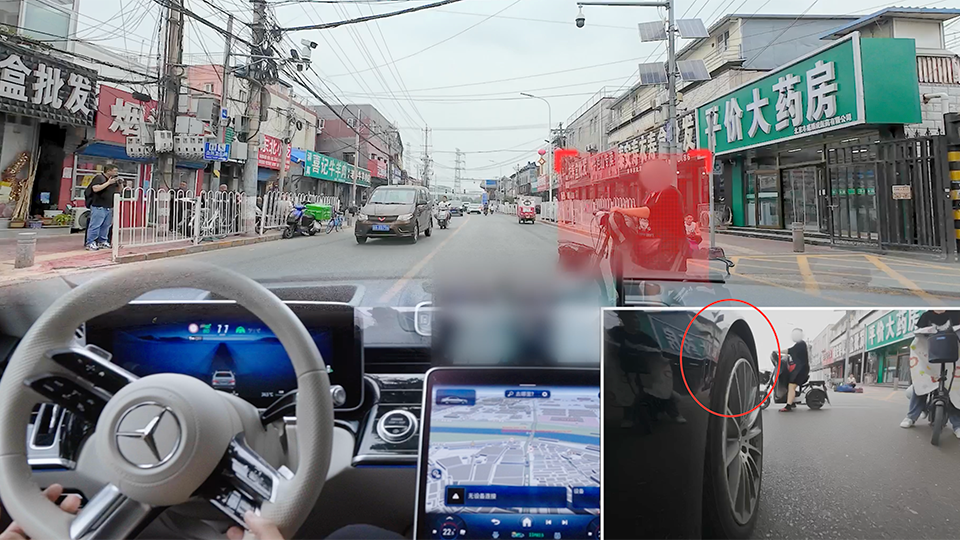

It is worth mentioning that Benz's test vehicle needed almost no takeovers throughout the drive. By comparison, when Lei Jun personally tested the Xiaomi SU7's urban NOA in Beijing, there were four takeovers over nearly 50 kilometers. The only takeover in Benz's case came when another vehicle suddenly accelerated and cut in, and the driver took over for safety, turning the steering wheel half a turn to avoid a collision. Such relatively extreme scenarios test two things: whether the system fights the driver for control of the steering wheel, and whether it downgrades once the safety distance is breached. In practice, when the driver intervenes with significant force, the system noticeably reduces its own steering effort, and the handover between human and system is fairly smooth.
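
The handover described above, where the system backs off as the driver's steering input grows, is commonly implemented as torque-based authority blending. The Python sketch below is a hypothetical illustration of that general idea, not Mercedes-Benz's logic: the 1 Nm and 3 Nm thresholds and the linear fade between them are assumed values chosen for readability.

```python
from dataclasses import dataclass

# Hypothetical thresholds (assumptions, not published Mercedes-Benz values).
SOFT_THRESHOLD_NM = 1.0   # below this, driver torque is treated as a light touch on the wheel
HARD_THRESHOLD_NM = 3.0   # at or above this, the system hands lateral control to the driver

@dataclass
class OverrideState:
    system_authority: float  # 1.0 = system fully steering, 0.0 = driver fully steering
    handed_over: bool        # True once the system has released lateral control

def steering_authority(driver_torque_nm: float,
                       soft_nm: float = SOFT_THRESHOLD_NM,
                       hard_nm: float = HARD_THRESHOLD_NM) -> OverrideState:
    """Fade the system's steering authority linearly as driver torque rises."""
    torque = abs(driver_torque_nm)  # direction does not matter for the override decision
    if torque <= soft_nm:
        return OverrideState(1.0, False)
    if torque >= hard_nm:
        return OverrideState(0.0, True)
    fraction = (torque - soft_nm) / (hard_nm - soft_nm)
    return OverrideState(1.0 - fraction, False)

def blended_torque(system_torque_nm: float, driver_torque_nm: float) -> float:
    """Net steering torque: the driver's input plus the system's scaled-down request."""
    state = steering_authority(driver_torque_nm)
    return state.system_authority * system_torque_nm + driver_torque_nm

for driver in (0.5, 2.0, 4.0):
    state = steering_authority(driver)
    print(driver, state.system_authority, state.handed_over)
# 0.5 1.0 False
# 2.0 0.5 False
# 4.0 0.0 True
```

A gradual fade avoids the tug-of-war the test scenario probes for: the harder the driver steers, the less the system pushes back, and above the upper threshold it releases lateral control entirely.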

However, the system is not yet human-like and comfortable in every operating condition. For example, when it detects that the intersection light is changing from green to flashing yellow, it weighs the distance to the vehicles ahead and behind, the distance to the intersection, and ride comfort during acceleration and deceleration, and it prioritizes not running the yellow light to avoid the accident risk of rushing through the intersection. In practice, though, there is a special case in which the vehicle does not brake gently: when the green light is about to end and the vehicle in front brakes suddenly, the system bases its judgment on both the following distance and the traffic light. As a result, even if the vehicle in front brakes before the yellow light appears, the system also brakes hard, which noticeably hurts ride comfort.
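
The heavy-braking case follows from basic kinematics: the constant deceleration needed to stop from speed v within distance d is v^2 / (2d), and a planner that honors both the stop line and the gap to a braking lead vehicle has to apply whichever demand is larger. The sketch below illustrates that reasoning only. The comfort and maximum-deceleration limits, the 2 m standstill gap, and the assumption that a braking lead car brakes all the way to a stop are hypothetical simplifications (reaction time is ignored), not Mercedes-Benz parameters.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

COMFORT_DECEL = 2.0   # m/s^2, hypothetical limit for "gentle" braking
MAX_STOP_DECEL = 4.0  # m/s^2, hypothetical: stopping for a yellow harder than this is not attempted

@dataclass
class LeadVehicle:
    gap_m: float       # current gap to the vehicle in front
    speed_mps: float   # its current speed
    decel_mps2: float  # its current braking, as a positive number (0 = not braking)

def decel_to_stop(speed_mps: float, distance_m: float) -> float:
    """Constant deceleration needed to stop within distance_m: v^2 / (2d)."""
    if distance_m <= 0:
        return float("inf")
    return speed_mps ** 2 / (2.0 * distance_m)

def braking_command(ego_speed_mps: float, dist_to_stop_line_m: float, light: str,
                    lead: Optional[LeadVehicle] = None,
                    standstill_gap_m: float = 2.0) -> Tuple[float, str]:
    demands = [0.0]
    # Rule 1: on yellow, stop at the line if that is possible within the hard limit.
    if light == "yellow":
        stop_line = decel_to_stop(ego_speed_mps, dist_to_stop_line_m)
        if stop_line <= MAX_STOP_DECEL:
            demands.append(stop_line)
    # Rule 2: if the lead car is braking, assume it stops and keep a standstill gap behind it.
    if lead is not None and lead.decel_mps2 > 0:
        lead_stop_m = lead.gap_m + lead.speed_mps ** 2 / (2.0 * lead.decel_mps2)
        demands.append(decel_to_stop(ego_speed_mps, lead_stop_m - standstill_gap_m))
    decel = max(demands)  # the most demanding constraint wins, regardless of comfort
    if decel == 0.0:
        label = "none"
    elif decel <= COMFORT_DECEL:
        label = "gentle"
    else:
        label = "hard"
    return decel, label

# 54 km/h (15 m/s), 60 m from the stop line, light turns yellow, nobody ahead.
print(braking_command(15.0, 60.0, "yellow"))  # (1.875, 'gentle')
# Still green, but the car 20 m ahead brakes at 6 m/s^2.
print(braking_command(15.0, 60.0, "green", LeadVehicle(20.0, 15.0, 6.0)))  # about 3.06, 'hard'
```

In the second call the light is still green, yet the command is hard braking, because the lead-vehicle constraint dominates; that is the same pattern the test drive exposed.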

Similarly, in "ghost probe" situations (a pedestrian or vehicle suddenly emerging from behind an obstacle), the system does not slow down and steer around the hazard; it mainly applies emergency braking. The test footage clearly shows the front suspension compressing significantly under braking, with pronounced nose-dive. So we can draw some basic conclusions about Benz's end-to-end intelligent driving: at this stage its takeover rate is lower than Xiaomi's and it maintains traffic efficiency at complex urban intersections, but its comfort details still need work. This should not be hard to fix, since end-to-end data iteration can already deliver weekly updates, so we can look forward to better ride comfort in subsequent versions.
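
Why a sudden "ghost probe" tends to trigger hard braking rather than a gentle slowdown can be seen from time-to-collision (TTC): the distance to the hazard divided by the closing speed. The sketch below maps TTC to a response band and reports the deceleration needed to stop short of the hazard. The 1.5 s and 3.0 s thresholds are hypothetical, and the logic is a generic illustration rather than the Mercedes-Benz system's actual rules.

```python
import math

# Hypothetical TTC thresholds (seconds), chosen for illustration only.
EMERGENCY_TTC_S = 1.5
SLOW_DOWN_TTC_S = 3.0

def time_to_collision(distance_m: float, closing_speed_mps: float) -> float:
    """Seconds until contact if nothing changes; infinite if the gap is not closing."""
    if closing_speed_mps <= 0:
        return math.inf
    return distance_m / closing_speed_mps

def respond_to_hazard(distance_m: float, ego_speed_mps: float):
    """Pick a response band from TTC and report the deceleration needed to stop short."""
    # For a pedestrian stepping across the lane, closing speed is roughly the ego speed.
    ttc = time_to_collision(distance_m, ego_speed_mps)
    needed = ego_speed_mps ** 2 / (2.0 * distance_m) if distance_m > 0 else math.inf
    if ttc <= EMERGENCY_TTC_S:
        action = "emergency_brake"
    elif ttc <= SLOW_DOWN_TTC_S:
        action = "slow_down"
    else:
        action = "monitor"
    return action, ttc, needed

# A pedestrian steps out 10 m ahead of a car doing 36 km/h (10 m/s).
print(respond_to_hazard(10.0, 10.0))  # ('emergency_brake', 1.0, 5.0)
print(respond_to_hazard(40.0, 10.0))  # ('monitor', 4.0, 1.25)
```

At 5 m/s^2 the car is decelerating at roughly half a g, the kind of braking that produces visible nose-dive; braking more gently would require predicting the hazard before it appears.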

Can it rank in the first tier of intelligent driving after getting rid of high-definition maps?

The end-to-end system Benz uses is actually based on a technical solution provided by Momenta, although the development was completed by Benz's own team. On the hardware side, apart from dropping LiDAR, it uses the NVIDIA DRIVE Orin chip, rated at 254 TOPS per chip, the same chip used by Xiaomi and Li Auto. There may, however, be differences in external perception hardware. For example, XPeng's AI Eagle Eye is equipped with LOFIC cameras that can handle high-contrast scenes, while Xiaomi uses BEV zoom technology to better control detection accuracy. Still, given the overall performance described earlier, Benz's end-to-end system can achieve most of the functions of Huawei Qiankun ADS 3.0, so to some extent it already sits close to the first tier of intelligent driving.

Currently, the mainstream intelligent driving solutions are Huawei's Qiankun ADS 3.0, Xiaomi's and Li Auto's E2E+VLM, and XPeng's XNGP. Since XNGP switched fully to the AI pure-vision route, it still needs a lot of data training, so the more useful references are the two approaches adopted by Huawei and Li Auto. How does Benz's end-to-end system compare with them?

First, a brief review of these two technical solutions. In the first two versions of ADS, Huawei kept the BEV network separate. In version 3.0, however, the BEV network was merged into the GOD network, and a PDP network responsible for decision-making and planning was added, together forming a single large network. This logic is similar to Tesla's One Model approach mentioned earlier. On the perception hardware side, however, the system relies on a 192-beam LiDAR for real-time mapping, which is its foundation, while 4D millimeter-wave radar builds three-dimensional data from strong echoes. At the data level, these two sets of hardware are therefore very unlikely to produce misjudgments or omissions, and even small, irregular obstacles can be captured accurately. The PDP network serves as a fallback safety mechanism for countless unknown scenarios. In short, this system is already quite close to future L3-level technology.

Second, Xiaomi and Li Auto use E2E+VLM, which still essentially relies on BEV to provide three-dimensional coordinates of obstacles, with the trained large model then deciding how to respond. VLM is mainly used to analyze complex scenarios; in other words, E2E acts as the fast system and VLM as the slow system. Slightly differently from Xiaomi, Li Auto also uses a model called Cloud World, which is essentially a database that records and analyzes complex black-box scenarios. After constructing and analyzing countless unknown scenarios, it feeds the autonomously trained data back to the large model. To some extent this resembles Huawei's PDP network, as both serve as fallback safety mechanisms.
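
The fast/slow split can be pictured as two loops running at different rates. The Python sketch below is a hypothetical illustration, not Xiaomi's or Li Auto's design: a "fast" E2E policy produces a target speed every frame, and a "slow" VLM is consulted only when a scene's complexity score crosses a threshold, returning a speed cap. The complexity score, the speed-cap interface, and the refresh period are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Scene = dict  # hypothetical scene summary, e.g. {"complexity": float in [0, 1]}

@dataclass
class DualSystemPlanner:
    fast_policy: Callable[[Scene], float]    # E2E "fast system": target speed every frame
    slow_reasoner: Callable[[Scene], float]  # VLM "slow system": speed cap for hard scenes
    complexity_threshold: float = 0.7        # hypothetical trigger for the slow system
    slow_period: int = 10                    # refresh slow advice at most every N frames
    slow_calls: int = field(default=0, init=False)
    _advice: Optional[float] = field(default=None, init=False, repr=False)
    _frames_since_slow: int = field(default=0, init=False, repr=False)

    def step(self, scene: Scene) -> float:
        target = self.fast_policy(scene)  # the fast path always runs
        if scene["complexity"] >= self.complexity_threshold:
            self._frames_since_slow += 1
            # Consult the slow system on entering a hard scene, then only periodically.
            if self._advice is None or self._frames_since_slow >= self.slow_period:
                self._advice = self.slow_reasoner(scene)
                self._frames_since_slow = 0
                self.slow_calls += 1
        else:
            self._advice = None  # simple scene: drop stale advice
            self._frames_since_slow = 0
        if self._advice is not None:
            target = min(target, self._advice)  # slow advice can only make the plan more cautious
        return target

planner = DualSystemPlanner(fast_policy=lambda s: 15.0, slow_reasoner=lambda s: 5.0)
speeds = [planner.step({"complexity": 0.2})]
speeds += [planner.step({"complexity": 0.9}) for _ in range(20)]
speeds.append(planner.step({"complexity": 0.2}))
print(speeds[0], speeds[1], speeds[-1], planner.slow_calls)  # 15.0 5.0 15.0 2
```

Letting the slow system only tighten the fast system's plan keeps the frame-rate path in charge of moment-to-moment control, while the expensive reasoning runs only when and as often as the scene demands.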

By comparison, Benz's end-to-end system has neither the PDP network nor a Cloud World model; its fallback safety mechanism is a traditional rule-based algorithm. How should we understand this? To take a simple example: in films about intelligent robots, robots go through extensive data analysis and self-learning and may even evolve human-like "consciousness," yet underneath sits a rule-based instruction that they "must not harm humans." Likewise, when Benz's system meets unknown, complex scenarios it has never seen, it is likely to behave like earlier BEV+Transformer+occupancy-network systems: putting safety first, it may actively downgrade or sacrifice traffic efficiency. The only remedy is to accumulate enough data and training. For unknown complex scenarios, therefore, solutions backed by cloud databases, like those of Huawei and Li Auto, may iterate more effectively and on faster cycles.
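
A rule-based fallback can be pictured as a thin layer of fixed rules wrapped around whatever the learned model proposes. The Python below is a hypothetical illustration, not Mercedes-Benz code: the time-gap rule, the "confidence" score used to flag unfamiliar scenes, and every threshold are assumptions. It shows why such a fallback tends to produce the behavior described above: when the model is unsure, the rules do not improve the plan, they only make it more cautious.

```python
from dataclasses import dataclass

# Hypothetical rule parameters, for illustration only.
MIN_TIME_GAP_S = 1.5        # never close to within 1.5 s of an obstacle
MIN_CONFIDENCE = 0.6        # below this, treat the scene as "never seen before"
DEGRADED_SPEED_CAP = 8.0    # m/s allowed while degraded

@dataclass
class Proposal:
    target_speed_mps: float
    confidence: float  # hypothetical model self-assessment of scene familiarity, 0..1

@dataclass
class Command:
    target_speed_mps: float
    mode: str  # "model", "degraded" or "minimum_risk"

def rule_based_fallback(proposal: Proposal, gap_to_obstacle_m: float,
                        ego_speed_mps: float) -> Command:
    # Hard rule first, like the robot's "must not harm humans": it overrides the model.
    if ego_speed_mps > 0 and gap_to_obstacle_m / ego_speed_mps < MIN_TIME_GAP_S:
        return Command(0.0, "minimum_risk")
    # Unfamiliar scene: keep the model's plan but cap speed (active degradation).
    if proposal.confidence < MIN_CONFIDENCE:
        return Command(min(proposal.target_speed_mps, DEGRADED_SPEED_CAP), "degraded")
    return Command(proposal.target_speed_mps, "model")

print(rule_based_fallback(Proposal(15.0, 0.9), 50.0, 10.0))  # model's 15.0 passes through
print(rule_based_fallback(Proposal(15.0, 0.3), 50.0, 10.0))  # capped to 8.0, degraded
print(rule_based_fallback(Proposal(15.0, 0.9), 10.0, 10.0))  # 1.0 s time gap, so stop
```

Because the rules never learn, the "degraded" branch fires every time the same unfamiliar scene recurs until the model itself is retrained on it, which is the data-and-training bottleneck the article points to.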