Is AI deployment difficult? It's time to use open source to lower the barriers to AI adoption

11/06 2024

Over the past three decades, from Linux to KVM and from OpenStack to Kubernetes, many key IT technologies have come from open source. Open source technology has not only significantly reduced IT costs but also lowered the barrier to enterprise technological innovation.

So, what can open source bring to AI in the era of generative AI?

Red Hat's answer is: Open source technology will drive the faster and wider application of AI across various industries.

Since its founding in 1993, Red Hat has been a steadfast advocate and builder of open source technology. At the recent 2024 Red Hat Forum, "The Theory of Intelligent Evolution" identified three key phrases that describe the convergence of open source technology and AI: simple AI, open source AI, and hybrid AI.

01

Simple AI: A journey of enterprise AI applications starting with a laptop

In September 2024, the AI model hosting platform Hugging Face announced that it now hosts more than 1 million AI models, a sign of how popular generative AI and large models have become.

However, there is still a significant gap between general-purpose foundational models and solving actual business problems for different enterprises. Computing power, talent, model training platforms and tools, and technical experience are common pain points in the deployment of large models. For example:

"Can we have foundational models use our enterprise data and fine-tune them in an environment of our choice, with only a relatively small investment?"

"We want to develop small models tailored to our business based on foundational models, but our team has no experience in AI development, no development platform, and even insufficient GPU computing resources. How do we start?"

Just as Red Hat accelerated the adoption of Linux and container technology with RHEL and OpenShift, bringing AI to enterprises through open source is Red Hat's vision for the AI era.

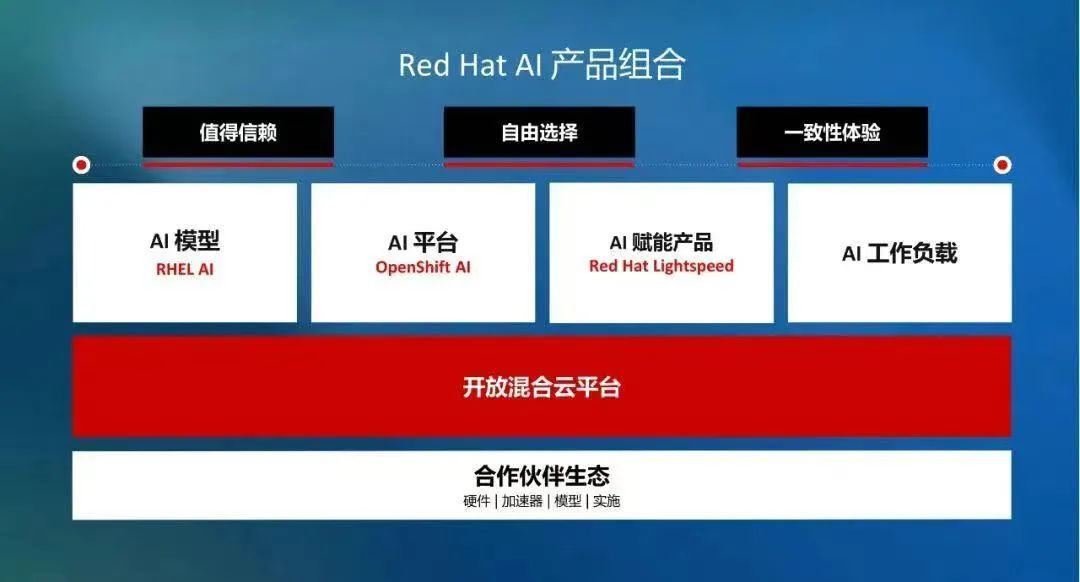

To this end, Red Hat has launched a series of AI platforms and products, forming a three-step process for enterprise AI applications:

Step 1: With Podman Desktop and InstructLab, users can try out open source AI models and tools with minimal resources, for example by running AI models on a laptop's CPU without a dedicated GPU.

In the past, many believed that AI model training could not be done on a single PC and required large data centers equipped with GPUs. Red Hat's approach changes this, allowing both data scientists and business professionals without IT development experience to take part in AI model training.

With the Podman AI Lab extension, Podman Desktop allows users to build, test, and run foundational models in a local environment. Setting up an experimental environment for trying out different foundational models only takes a few steps.
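To make "build, test, and run locally" more concrete: once Podman AI Lab has started a model service, applications usually talk to it over HTTP. The following is a minimal sketch, assuming the service exposes an OpenAI-compatible chat completions endpoint on localhost. The port (35000) and the model name are hypothetical placeholders, so substitute the values Podman AI Lab shows for your service. It uses only the Python standard library and keeps building the request separate from sending it.

```python
import json
import urllib.request

# Hypothetical endpoint: Podman AI Lab displays the real port for each model
# service it starts; 35000 is only a placeholder for this sketch.
BASE_URL = "http://localhost:35000/v1"


def build_chat_request(prompt: str, model: str = "local-model",
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request.

    The model name is a hypothetical placeholder; use the one your service expects.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, **kwargs) -> str:
    """Send the request to the running local service and return the reply text."""
    req = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Only builds the request, so this runs even without a model service.
    req = build_chat_request("Summarize our returns policy in one sentence.")
    print(req.get_method(), req.full_url)
```

With a model service actually running, calling `ask("...")` sends the prompt and returns the first reply. Because the sketch only assumes an OpenAI-style API, the same code could point at a different local or remote endpoint by changing `base_url`.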

InstructLab is an open source tool for foundational model alignment. It lets you download foundational models from the open source community and train them locally, significantly lowering the technical barriers to model fine-tuning and reducing the data preparation it requires.

Step 2: Further train models on cloud servers through Red Hat Enterprise Linux AI (RHEL AI).

If the results of the first step are satisfactory, users can proceed to production-level model training on cloud servers.

RHEL AI is a foundational model platform that makes it easier for users to develop, test, and deploy generative AI models.

RHEL AI combines IBM Research's open source-licensed Granite large models, the InstructLab model alignment tool, and support for GPU accelerators from NVIDIA, Intel, and AMD. The solution is packaged as an optimized, bootable RHEL image for deploying individual servers across hybrid cloud environments, and it is also integrated into OpenShift AI.

Step 3: Conduct production-level model training and deployment in a larger-scale distributed cluster through OpenShift AI.

If the model performs well in the previous two steps, it can be deployed to production on a larger-scale distributed cluster.

OpenShift AI is Red Hat's hybrid machine learning operations (MLOps) platform. It can run models and InstructLab at scale in distributed clusters, helping large teams carry out MLOps workflows. OpenShift AI also supports hybrid deployment across environments, including on-premises data centers, private clouds, public clouds, and hybrid clouds.

Beyond the three-step process, Red Hat has also introduced a range of AI-enhanced products, such as Red Hat Lightspeed, which uses generative AI to give both beginners and experts a smoother working experience. Applied to RHEL AI and OpenShift AI, Red Hat Lightspeed lets users manage operating systems, container platforms, and even clusters using natural language.

02

Open source AI: Driving large model iteration through the open source community

"You can choose to run AI anywhere, and it will be based on open source," Red Hat CEO Matt Hicks said at Red Hat Summit in May 2024.

The principle of openness and collaboration runs through all of Red Hat's AI products and strategies.

InstructLab is a typical example. It is both a model alignment tool and an open source community, pioneering a new approach in which the community continuously improves open source models.

"Red Hat designed InstructLab with two main goals. First, to let customers use InstructLab and their own data to train models that meet their needs, based on the Granite foundational model. Second, to invite users to go further by contributing their knowledge and skills back to the upstream open source community, where they can be integrated into the community version of the Granite model. InstructLab therefore serves as a bridge between the community and customers," said Wang Huihui, Senior Director of Solution Architecture, Red Hat Greater China.

Red Hat applies the same principle of openness and collaboration when promoting AI adoption.

"For AI application adoption, Red Hat has introduced the concept of 'Open Labs', in which we work with customers through our consulting teams to identify the most effective application scenarios across the enterprise, such as research and development, production, marketing, and customer support. We start with one successful small application and gradually expand to larger scenarios," said Cao Hengkang, Red Hat's Vice President and President of Greater China.

"For the last mile of AI adoption, Red Hat has stepped up cooperation with local ISVs and solution developers this year to meet the specific needs of different industries and enterprises," said Zhao Wenbin, Senior Marketing Director, Red Hat Greater China.

Since Red Hat launched its full-stack AI products in May this year, adoption of its AI portfolio in the Chinese market has accelerated. One of the first customers, a Chinese insurance company, significantly improved the accuracy of code merging and review after introducing Red Hat's AI products, which led to notable gains in development efficiency and customer satisfaction.

"Last year, Red Hat Greater China achieved record business results, and this year it continues to grow at a double-digit rate. Our growth comes from the increasing number of enterprises that choose open source technology and recognize its advantages," said Cao Hengkang.

Cao Hengkang, Red Hat's Vice President and President of Greater China

03

Hybrid AI: The inevitable choice for enterprises developing their AI capabilities

In the era of cloud computing, enterprises can flexibly choose various deployment methods such as bare metal, public clouds, private clouds, hybrid clouds, and dedicated clouds based on different business loads.

Just as the cloud is hybrid, so is AI.

As generative AI technology matures, more and more enterprises are realizing that no single foundational model will dominate the market. Choosing the most suitable model for each business is becoming the trend, and using multiple models across multiple business scenarios will become the norm.
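The multi-model pattern described above can be pictured as a simple routing table. This Python sketch is purely illustrative: the scenario names, model names, environments, and endpoints are all hypothetical, and a real platform would also need authentication, health checks, and monitoring. It only shows how each business scenario can map to its own model, possibly running in a different part of a hybrid cloud.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTarget:
    """Where a model runs and how to reach it (all values are hypothetical)."""
    model: str
    environment: str  # e.g. "on-premises", "private-cloud", "public-cloud"
    endpoint: str


# Hypothetical routing table: each business scenario gets its own model,
# and different models may live in different deployment environments.
ROUTES = {
    "code-review": ModelTarget("code-model-8b", "on-premises",
                               "https://onprem.example.com/v1"),
    "customer-support": ModelTarget("chat-model-7b", "public-cloud",
                                    "https://inference.example.com/v1"),
    "marketing-copy": ModelTarget("general-model-13b", "private-cloud",
                                  "https://ai.private.example.com/v1"),
}

# Scenario used when a request does not match any known scenario.
DEFAULT_SCENARIO = "customer-support"


def route(scenario: str) -> ModelTarget:
    """Return the model target for a scenario, falling back to the default."""
    return ROUTES.get(scenario, ROUTES[DEFAULT_SCENARIO])


if __name__ == "__main__":
    for name in ("code-review", "unknown-task"):
        target = route(name)
        print(f"{name}: {target.model} on {target.environment}")
```

Keeping the mapping as data rather than code is one way to let teams swap a model or move it between environments without changing the applications that call `route`.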

From this perspective, the era of generative AI is also the era of hybrid AI. Red Hat announced its open hybrid cloud strategy in 2013, and its ability to span open hybrid cloud environments remains an advantage that carries over into the AI era.

Tong Yizhou, Product Line Manager of Red Hat OpenShift, described a case from a financial industry customer. Even before the advent of large models, this enterprise had strong capabilities in developing small machine learning models. However, when it came to building a platform for generative AI research and development, production, and implementation, the enterprise decisively chose Red Hat.

There were two reasons. First, building an AI platform in the era of large models is far more difficult and complex than it was in the earlier era of small ML models. Second, in a multi-model environment, enterprises need a neutral AI platform partner to avoid the risk of being locked into a single vendor.

"Many customers want an AI platform vendor that can reliably provide services for the next ten years, and many of today's underlying technologies come from open source. Red Hat's 30 years of open source experience is a key advantage that many customers value," said Tong Yizhou.

Conclusion

In the generative AI era, open source technology is paving a solid path for the widespread application of AI.

The convergence of open source and AI not only lowers the barriers to AI adoption but also gives enterprises more autonomy and choice.

The images in this article are from Shutterstock

END

This article is an original work of "The Theory of Intelligent Evolution".