NVIDIA Unveils H200 NVL and GB200 NVL4

![]() 11/19 2024

11/19 2024

![]() 626

626

Compiled/Front Smart

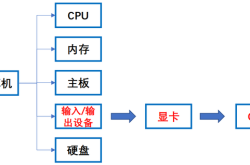

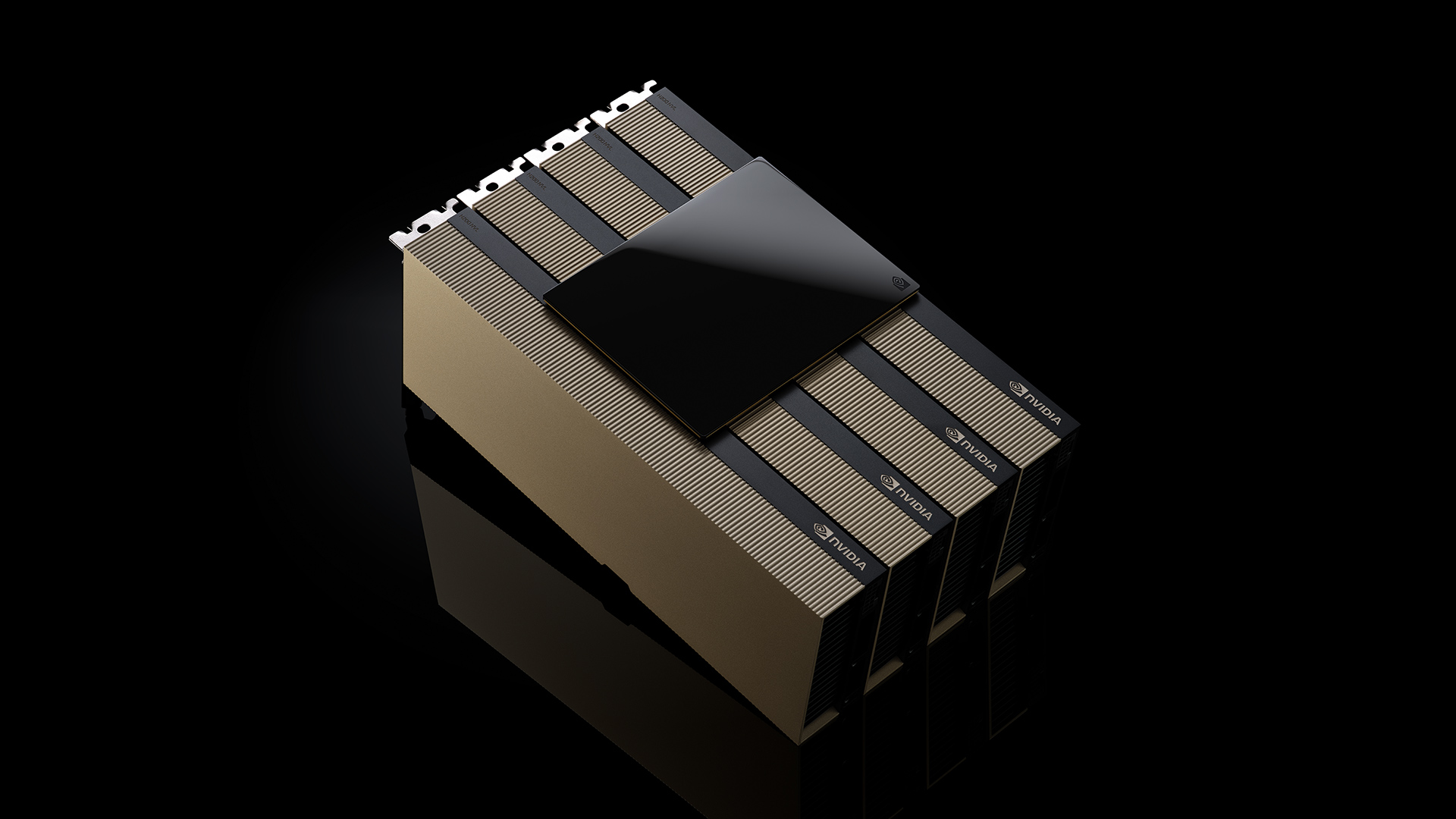

NVIDIA announced the launch of its new data center-grade GPUs, the H200 NVL, and the more powerful four-GPU product, the GB200 NVL4 Superchip, at the SC24 High Performance Computing Conference held in Atlanta on November 18, 2024, further extending its dominance in AI and high-performance computing.

Image Source: NVIDIA

The H200 NVL is the latest addition to NVIDIA's Hopper series, designed specifically for data centers with low-power, air-cooled racks. According to surveys, approximately 70% of enterprise racks operate at less than 20 kilowatts and use air-cooling solutions. The H200 NVL features a PCIe interface design, allowing data centers to flexibly configure the number of GPUs, choosing between one, two, four, or eight GPUs, thereby achieving more powerful computing capabilities within limited space.

Compared to its predecessor, the H100 NVL, the H200 NVL shows significant performance improvements: a 1.5x increase in memory efficiency and a 1.2x boost in bandwidth. For large language model inference, performance can increase by up to 1.7x; for high-performance computing workloads, it offers a 1.3x improvement over the H100 NVL and a 2.5x improvement over Ampere architecture products.

The new product also comes equipped with the latest generation of NVLink technology, providing 7x faster communication speed between GPUs compared to the fifth-generation PCIe. Each H200 NVL is equipped with 141GB of high-bandwidth memory, achieving a memory bandwidth of 4.8TB/s and a maximum thermal design power of 600 watts. Notably, the H200 NVL also includes a five-year subscription to the NVIDIA AI Enterprise software platform.

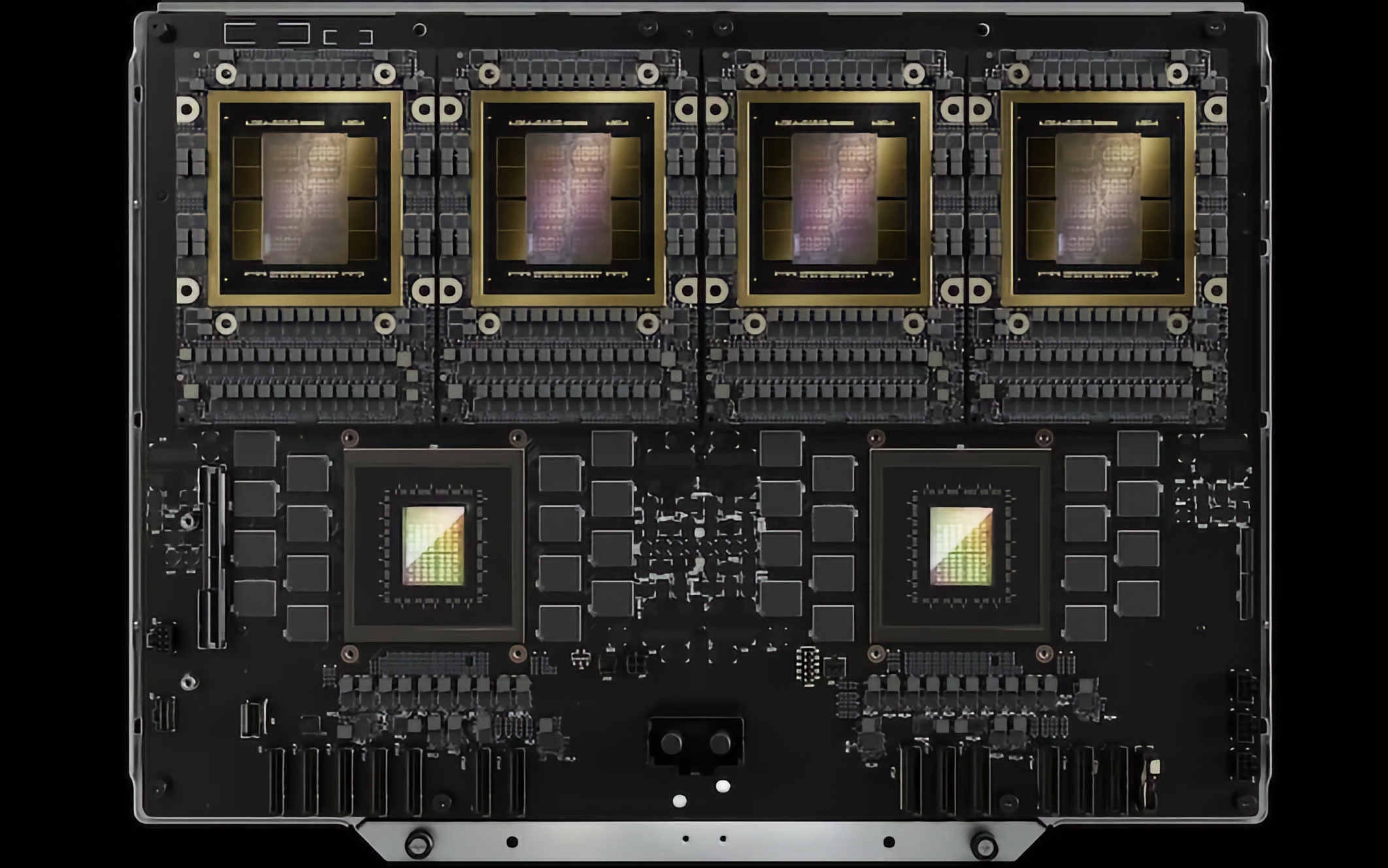

Image Source: NVIDIA

The simultaneously launched GB200 NVL4 Superchip is an even more powerful product, integrating two Arm-based Grace CPUs and four B200 GPUs utilizing the Blackwell architecture. This product boasts 1.3TB of coherent memory, shared across the four B200 GPUs via NVLink. Compared to the previous-generation GH200 NVL4, it offers a 2.2x speedup on MILC code simulation workloads, an 80% faster training time for the GraphCast weather forecasting AI model with 37 million parameters, and similarly, an 80% faster inference speed for the 7 billion-parameter Llama 2 model running at 16-bit floating-point precision.