What changes may the development of artificial intelligence bring to the chip industry?

11/19 2024

Before discussing the potential changes that AI may bring to the chip industry, let's first talk about the impact of AI on engineers from the perspective of a practitioner.

My email signature (with some information redacted) includes a section called 'Ask my assistant', which is the entry point to a digital twin that I created.

By clicking on this link, you will be redirected to a web page for conversation, where you can interact with a virtual engineer. You can ask it questions and request assistance in solving work-related issues. I particularly hope that everyone who emails me will first contact my digital twin.

My digital twin has learned from my accumulated technical knowledge and problem-solving experience, and it is constantly being updated. I built it because I found that a considerable portion of my working time, roughly 30% to 50%, goes to helping others solve problems. For an employee, making money essentially means selling one's time, so saving time matters as much as selling more of it. AI helps me do that. Most people still email me their questions directly, but I am gradually introducing my digital twin to them.

Chips are the underlying hardware foundation of AI, and AI is, in turn, enhancing the design and manufacturing of chips.

The first transformative impact of AI on the chip industry is making chip design itself more intelligent.

In recent years, chip industry forums have hardly been able to avoid the topic of AI. This is especially true of EDA companies, which promote products such as Synopsys' DSO.ai and Cadence's Cerebrus.

AI can accelerate the chip design process through machine learning and deep learning algorithms. For example, steps such as automatic placement and routing (P&R) and logic synthesis can be optimized using AI, reducing the design cycle and improving efficiency. AI predictive models can identify potential problems at an early stage of the design process, avoiding costly modifications later.

Among AI approaches to chip design, AlphaChip has attracted much attention. AlphaChip is a system developed by Google that uses Deep Reinforcement Learning (DRL) to automate chip floorplan design.

In fact, as early as 2020, Google researchers had already published a preprint titled "Chip Placement with Deep Reinforcement Learning," introducing a new reinforcement learning method for designing chip layouts, which I also covered in the chapter on AI and chip design in "Remarkable Chips."

AlphaChip learns from existing chip layout designs and optimizes the layout process, thereby improving design efficiency. It optimizes for metrics such as power, performance, and area (PPA), and at each step its policy outputs a probability distribution over candidate positions for the next block. The result is a significantly shorter design cycle. AI is well suited to tedious tasks such as the placement and routing of ultra-large-scale chips: a task that takes engineers weeks may take AI only a few hours.
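The idea of placing blocks one at a time from a probability distribution, then scoring the result, can be illustrated with a toy example. This is a minimal sketch, not AlphaChip's implementation: the grid, the function names (`hpwl`, `masked_softmax`, `place_sequentially`, `uniform_scores`), and the uniform stand-in policy are all assumptions made for illustration. Half-perimeter wirelength (HPWL) is used here as a simple proxy cost; a real system would also account for congestion, density, and other PPA-related terms.

```python
import math
import random

def hpwl(placement, nets):
    """Half-perimeter wirelength: for each net, add the width plus height
    of the bounding box around the blocks that the net connects."""
    total = 0
    for net in nets:
        xs = [placement[block][0] for block in net]
        ys = [placement[block][1] for block in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total

def masked_softmax(scores, free):
    """Turn per-cell scores into a probability distribution that assigns
    zero probability to occupied cells."""
    top = max(s for s, f in zip(scores, free) if f)
    exps = [math.exp(s - top) if f else 0.0 for s, f in zip(scores, free)]
    norm = sum(exps)
    return [e / norm for e in exps]

def uniform_scores(block, placement, grid_w, grid_h):
    """Hypothetical stand-in for a trained policy network:
    every grid cell gets the same score."""
    return [0.0] * (grid_w * grid_h)

def place_sequentially(blocks, grid_w, grid_h, score_fn, rng):
    """Place blocks one by one, sampling each position from the policy."""
    free = [True] * (grid_w * grid_h)
    placement = {}
    for block in blocks:
        scores = score_fn(block, placement, grid_w, grid_h)
        probs = masked_softmax(scores, free)
        idx = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        free[idx] = False
        placement[block] = (idx % grid_w, idx // grid_w)
    return placement

# Toy netlist: four blocks connected by three nets (made-up data).
blocks = ["cpu", "cache", "dma", "io"]
nets = [("cpu", "cache"), ("cpu", "dma", "io"), ("cache", "io")]

rng = random.Random(0)
placement = place_sequentially(blocks, 4, 4, uniform_scores, rng)
reward = -hpwl(placement, nets)  # lower wirelength means higher reward
print(placement, reward)
```

In a reinforcement learning setup, the reward would be fed back to adjust the scoring function so that placements with lower cost become more likely. The uniform policy above never learns; it only shows where a learned policy would plug in.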

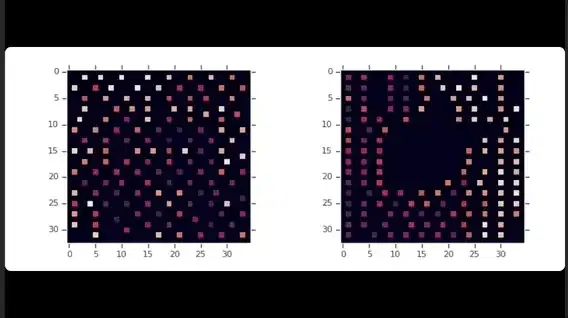

The image on the left shows AlphaChip's zero-shot placement of the open-source Ariane RISC-V CPU, and the image on the right shows the result of fine-tuning a policy pre-trained on 20 TPU designs.

To my knowledge, AI is currently used for auxiliary tasks throughout the design cycle of ultra-large-scale chips.

This raises the question: will AI take away engineers' jobs? My answer is that there is no need to worry about this in the short term (the next five years or so). AI does not yet possess the ability to design and globally optimize ultra-large-scale chips, and leading companies in the industry will not provide their chip design data for training open-source models. For engineers and industry practitioners, understanding and making good use of AI tools will become an indispensable workplace skill. Using AI for design not only significantly improves work efficiency but also demonstrates one's abilities. I strongly recommend that the vast majority of professionals actively learn and apply these advanced productivity tools. Use AI as a creative assistant to enhance creativity and design efficiency, and become a workplace expert at the forefront of technology.

Another transformative impact of AI on the chip industry is its promotion of innovation in chip architecture.

Unlike traditional CPUs, the various xPU AI chips have architectures adapted to AI workloads. To execute machine learning tasks more efficiently, especially the large-scale matrix operations in deep neural networks, the industry has developed hardware optimized for them. For example, NVIDIA's GPU (Graphics Processing Unit) was originally designed for graphics processing, but its parallel computing capabilities made it an ideal choice for training deep learning models. As demand grew, NVIDIA introduced Tensor Core GPUs specifically designed for AI. Another example is Google's TPU, designed to accelerate operations in machine learning frameworks such as TensorFlow. TPUs are more efficient than traditional CPUs or GPUs when handling specific types of AI workloads.
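To see why matrix operations dominate these workloads, consider a single fully connected layer, which is essentially one matrix multiplication. The sketch below is a plain-Python illustration under assumed layer sizes; the function names `matmul` and `mac_count` are hypothetical, and real frameworks dispatch this work to optimized kernels rather than Python loops.

```python
def matmul(a, b):
    """Naive matrix multiply: (m x k) times (k x n) gives (m x n).
    Every output element is an independent dot product, which is why
    the work parallelizes so well across many hardware units."""
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
        for i in range(m)
    ]

def mac_count(m, k, n):
    """Number of multiply-accumulate operations in an (m x k) by (k x n) product."""
    return m * k * n

# Tiny example: 2 input rows through a layer with 2 inputs and 2 outputs.
activations = [[1, 2], [3, 4]]
weights = [[5, 6], [7, 8]]
print(matmul(activations, weights))  # [[19, 22], [43, 50]]

# Assumed sizes for illustration: a batch of 32 through a 4096 x 4096 layer.
print(mac_count(32, 4096, 4096))  # 536870912
```

Even this single assumed layer needs more than half a billion multiply-accumulates per batch, and none of the output elements depends on another. Hardware that performs many of these operations at once therefore has a large advantage over a processor that executes them a few at a time.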

From a business perspective, AI has also rejuvenated the chip industry, which many considered a 'sunset' industry.

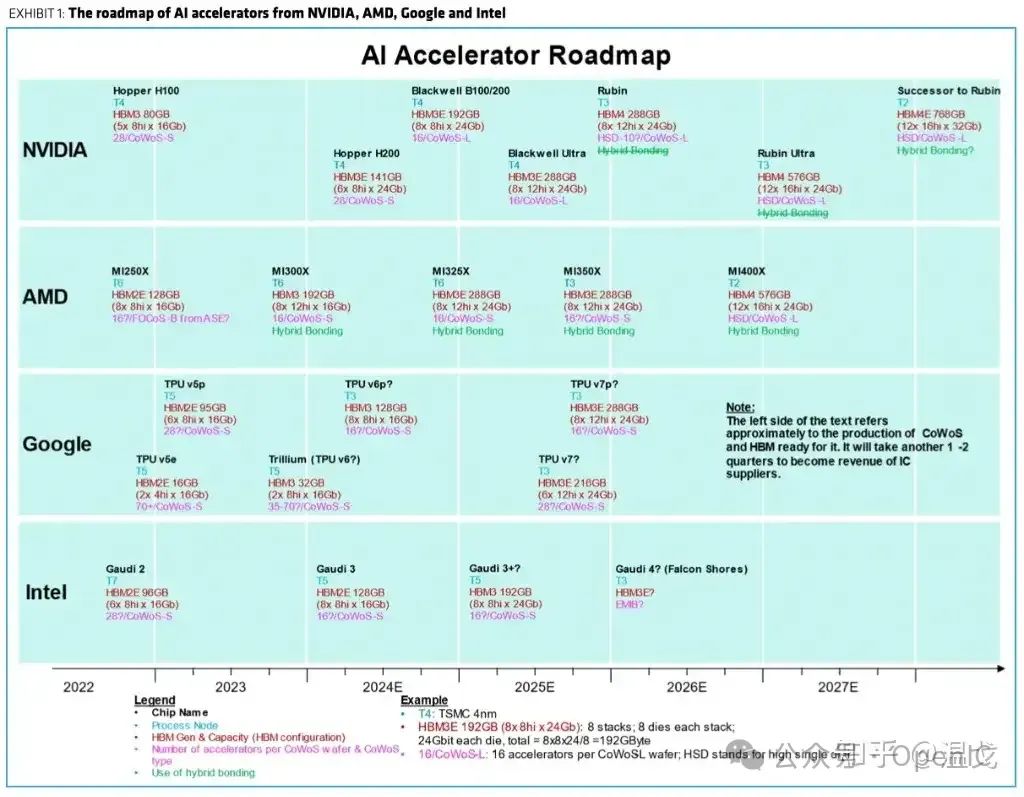

AI-related demand from AI PCs, the iPhone 16, large model training, and autonomous driving has brought strong results to chip companies, and many of them are targeting the large AI market. Publicly available roadmaps from the major chip companies show that their investment in and focus on AI chips are only increasing.

Powerful as AI is, it still has limitations in the highly technology-intensive chip industry, such as:

Data quality: For AI to learn well, it requires a large amount of high-quality data. However, in chip design, it is challenging to find datasets that accurately reflect various complex situations. If the data is insufficient or inaccurate, AI may make errors, and its design recommendations may not be reliable, potentially leading to issues in the final chip.

Complex algorithms: Developing powerful AI algorithms that can handle various complex design scenarios is a significant challenge. AI needs to adapt to constantly changing design environments and handle unexpected problems. Sometimes, facing particularly complex or changing situations, these algorithms may struggle to provide accurate predictions or recommendations.

Explainability: AI often acts like a 'black box,' making it difficult to understand its thinking process. It is crucial to know why AI recommends a particular design approach to validate and accept its suggestions. If the explanation is unclear, people may not trust AI's decisions, and engineers may find it difficult to understand and verify AI's choices.

Tool integration: Integrating AI technology into existing EDA tools is also challenging. Compatibility issues and the need for collaborative development of new tools can complicate the process. Difficulties in tool integration may slow down the application of AI in chip design and limit its effectiveness in improving existing processes.

The fields of artificial intelligence and chips have become deeply intertwined: chips accelerate AI development, and AI in turn drives chip advancements. In summary: Train the best chip design algorithm → Use it to design better AI chips → Use these chips to train better models → Design even better chips → ... Even Hassabis, CEO of Google DeepMind, joked on Twitter: Now the logic is closed-loop.