What exactly is SLAM, often mentioned in autonomous driving?

11/21 2024

With the rapid development of autonomous driving technology, the demand for vehicle positioning and navigation in various environments is becoming increasingly urgent. The core task of autonomous driving is to enable vehicles to drive safely and intelligently in unknown or dynamically changing environments. This requires the system to accurately answer two questions: 1) Where am I? 2) Where should I go?

Currently, autonomous driving technology primarily relies on high-precision maps and RTK (Real-Time Kinematic) systems for high-precision positioning. However, this method is costly, relies on well-developed infrastructure, and lacks adaptability in dynamic environments.

To address this, autonomous driving engineers have proposed another more flexible and economical technology—SLAM (Simultaneous Localization and Mapping). SLAM technology allows vehicles to perceive their surroundings through sensors in unknown or dynamic environments, achieving self-positioning while building maps. The emergence of SLAM has injected new possibilities into the field of autonomous driving, not only reducing costs but also providing technical support for vehicles' autonomous decision-making capabilities in complex environments.

Basic Concepts of SLAM

1.1 What is SLAM?

SLAM, short for Simultaneous Localization and Mapping, is a technology that utilizes sensor information to achieve self-positioning and map construction of the surrounding environment in unknown conditions. Its core lies in enabling robots or vehicles to navigate autonomously in unknown or partially known environments. Specifically, SLAM needs to solve two core problems:

• Positioning: The vehicle needs to determine its position and orientation in the environment.

• Mapping: The vehicle needs to gradually construct a map of the environment during its movement, ensuring the consistency and reliability of map information.

Taking a robotic vacuum cleaner as an example, when it enters an unexplored room, it will use sensors to perceive the locations of walls, furniture, and other obstacles, gradually mapping out the room while planning a path to cover uncleaned areas. The realization of this ability is a typical application of SLAM technology.

1.2 Core Components of SLAM

The implementation of SLAM systems relies on the following key modules:

1. Sensor Module

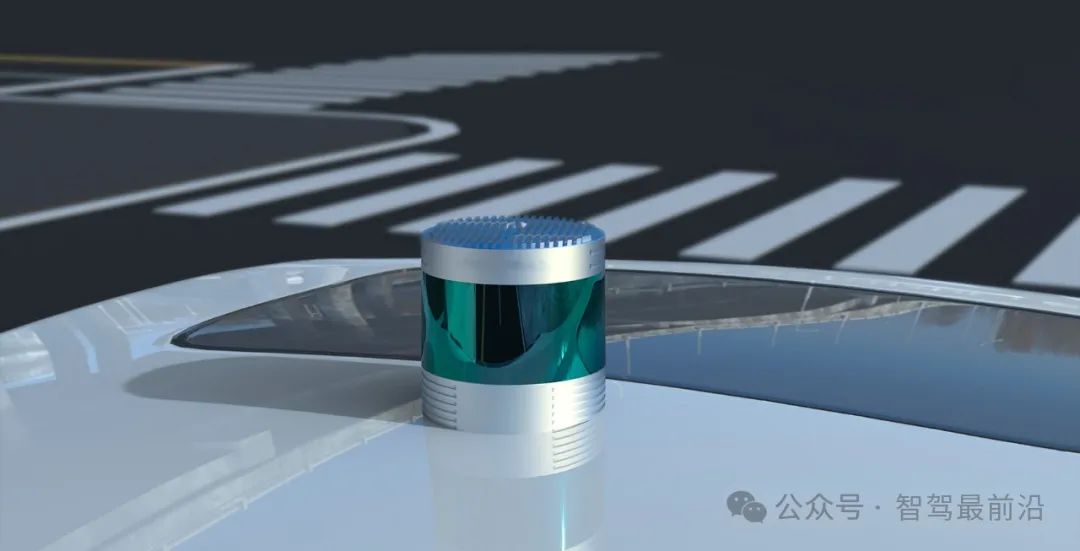

SLAM technology relies on various sensors to perceive environmental information, including LiDAR, cameras, Inertial Measurement Units (IMU), and GPS. Each sensor has its unique advantages. For instance, LiDAR can generate high-precision point cloud data, while cameras can capture rich visual features.

2. Data Processing Module

The raw data collected by sensors often contains noise and redundancy, requiring processing through filtering and noise reduction algorithms. This process aims to extract key feature points, providing foundational data for subsequent positioning and mapping.
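As an illustration of the noise-reduction step described above, here is a minimal sketch of a sliding median filter applied to a short list of range readings. The function name `median_filter`, the window size, and the sample values are assumptions chosen for illustration; real SLAM front ends typically use more elaborate filtering.

```python
from statistics import median


def median_filter(ranges, window=3):
    """Replace each reading with the median of its neighbourhood.

    The window is truncated at both ends of the list, so the output
    has the same length as the input.
    """
    half = window // 2
    filtered = []
    for i in range(len(ranges)):
        lo = max(0, i - half)
        hi = min(len(ranges), i + half + 1)
        filtered.append(median(ranges[lo:hi]))
    return filtered


# Hypothetical scan: four plausible readings (metres) and one spike.
scan = [2.0, 2.1, 9.9, 2.2, 2.3]
filtered = median_filter(scan)
print(filtered)
```

A median is used here rather than a mean because a single outlier cannot pull the result far from its neighbours.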

3. Positioning and Mapping Module

In SLAM systems, positioning and mapping are performed in parallel. By matching current sensor data with feature points in the existing map, SLAM can adjust the vehicle's position in real-time while updating map information.
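The matching step above can be sketched for the simplest case: 2D landmarks whose correspondences with the map are already known. The sketch below uses the closed-form least-squares rigid alignment for that case. The function name `estimate_pose`, the landmark coordinates, and the "true" pose are all hypothetical, and real systems must also solve the correspondence problem, which is skipped here.

```python
import math


def estimate_pose(observed, mapped):
    """Estimate the vehicle pose (x, y, heading) in the map frame.

    observed: landmark positions measured in the vehicle frame.
    mapped:   the same landmarks, in the same order, in the map frame.
    Finds the rotation and translation that best map observed onto mapped.
    """
    n = len(observed)
    ox = sum(p[0] for p in observed) / n
    oy = sum(p[1] for p in observed) / n
    mx = sum(q[0] for q in mapped) / n
    my = sum(q[1] for q in mapped) / n

    s_cross = 0.0
    s_dot = 0.0
    for (px, py), (qx, qy) in zip(observed, mapped):
        ax, ay = px - ox, py - oy  # centred observation
        bx, by = qx - mx, qy - my  # centred map point
        s_cross += ax * by - ay * bx
        s_dot += ax * bx + ay * by

    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    tx = mx - (c * ox - s * oy)
    ty = my - (s * ox + c * oy)
    return tx, ty, theta


# Hypothetical map landmarks and a hypothetical true pose used to
# synthesise noise-free observations for the demonstration.
landmarks = [(5.0, 5.0), (8.0, 2.0), (3.0, 7.0)]
true_x, true_y, true_theta = 1.0, 2.0, 0.3
c, s = math.cos(true_theta), math.sin(true_theta)
observed = [
    (c * (qx - true_x) + s * (qy - true_y),
     -s * (qx - true_x) + c * (qy - true_y))
    for qx, qy in landmarks
]

est = estimate_pose(observed, landmarks)
print(est)
```

With noisy observations the same formula returns the least-squares best fit rather than an exact pose.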

4. Optimization Module

Due to inevitable errors in sensor data, SLAM systems need to employ methods such as graph optimization or Kalman filtering to reduce accumulated errors in positioning and mapping, ensuring map consistency and accuracy.
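To make the Kalman filtering idea concrete, here is a minimal one-dimensional sketch, assuming a vehicle moving along a line with an odometry increment `u` and a direct position measurement `z`. The function name `kalman_step` and the noise variances `q` and `r` are illustrative assumptions, not values from any particular system.

```python
def kalman_step(x, p, u, z, q, r):
    """One predict/update cycle of a 1D Kalman filter.

    x, p: previous position estimate and its variance
    u:    odometry displacement since the last step
    z:    position measurement
    q, r: process and measurement noise variances (assumed known)
    """
    # Predict: move by the odometry increment; uncertainty grows.
    x_pred = x + u
    p_pred = p + q

    # Update: blend prediction and measurement by their uncertainty.
    k = p_pred / (p_pred + r)  # Kalman gain
    x_new = x_pred + k * (z - x_pred)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new


# Hypothetical numbers: start at 0 m, drive 1 m, measure 1.2 m.
x1, p1 = kalman_step(x=0.0, p=1.0, u=1.0, z=1.2, q=0.1, r=0.5)
print(x1, p1)
```

The updated variance is always smaller than the predicted one, which is how the filter limits the growth of accumulated error.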

1.3 Classification of SLAM Technology

Based on sensor types and algorithm characteristics, SLAM technology can be classified as follows:

1. Visual SLAM

Visual SLAM (Visual Simultaneous Localization and Mapping) uses visual sensors (e.g., monocular, stereo, or RGB-D cameras) to construct environmental maps and achieve self-positioning. Unlike traditional LiDAR-based SLAM, visual SLAM captures image information from cameras, extracts key feature points or depth information, gradually constructs a 3D map of the environment, and determines the pose of the camera (or robot).

Visual SLAM, with its low cost, lightweight, and flexible characteristics, has become one of the essential technologies in autonomous driving, robotics, AR/VR, and other fields. While cameras provide rich visual information, giving visual SLAM strong advantages in dynamic environment perception and feature extraction, it also faces challenges from lighting changes and dynamic obstacles.

2. LiDAR SLAM

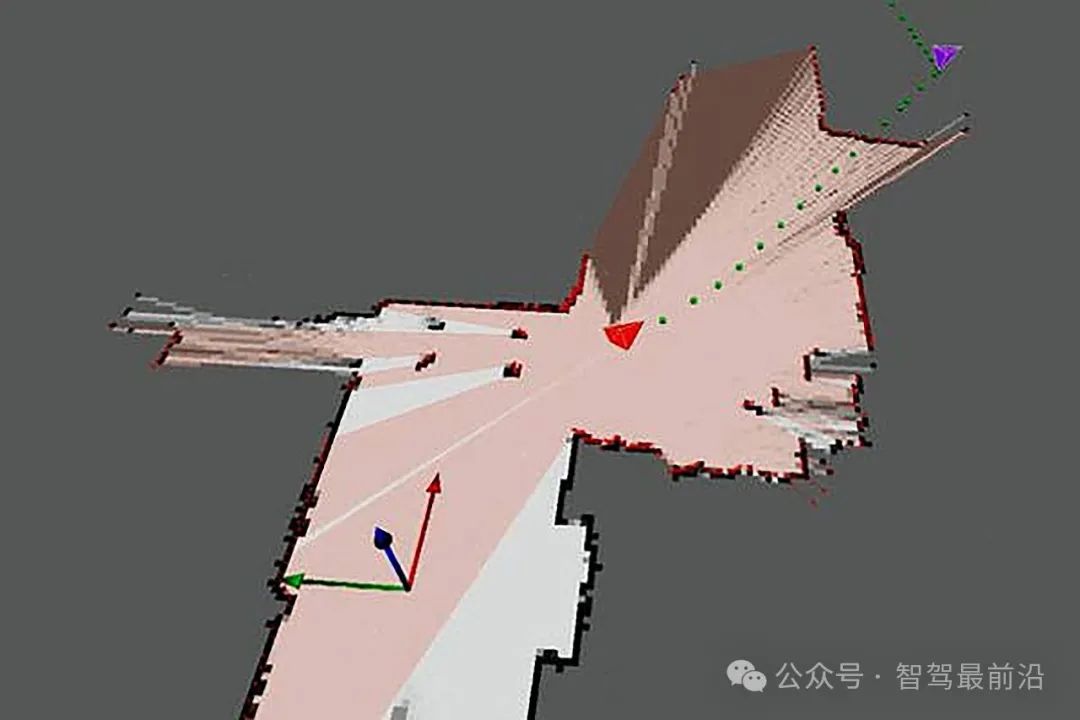

LiDAR SLAM (Laser Simultaneous Localization and Mapping) uses LiDAR (Light Detection and Ranging) for simultaneous positioning and mapping. Unlike visual SLAM, which relies on cameras to capture image information, LiDAR SLAM emits laser beams and measures echo times to obtain distance information between the target object and the sensor, creating an accurate map of the environment.
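The echo-time measurement described above reduces to a one-line formula: the beam travels to the target and back, so the range is half the round-trip time multiplied by the speed of light. A minimal sketch follows; the function names and the sample echo time are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second, in vacuum


def range_from_echo(round_trip_s):
    """Distance to the target given the round-trip echo time in seconds."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def polar_to_point(distance, beam_angle_rad):
    """Convert one range reading into an (x, y) point in the sensor frame."""
    return (distance * math.cos(beam_angle_rad),
            distance * math.sin(beam_angle_rad))


# Hypothetical echo received 200 nanoseconds after emission,
# from a beam pointing 90 degrees to the left of the sensor axis.
d = range_from_echo(200e-9)
point = polar_to_point(d, math.pi / 2)
print(d, point)
```

Repeating this conversion for every beam in a sweep yields the point cloud that LiDAR SLAM works with.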

LiDAR SLAM provides high-precision positioning and mapping in complex environments and is widely used in autonomous driving, robotics, and drones. Its core idea is similar to that of visual SLAM: both perceive the surrounding environment through sensors and use algorithms to estimate position in real time while gradually updating the map. However, because LiDAR measures distance directly and does not depend on ambient light, it tends to perform more stably in complex and challenging environments.

3. Fusion SLAM

Fusion SLAM refers to the technology that integrates data from multiple sensors (e.g., LiDAR, cameras, IMU, GPS) for simultaneous positioning and mapping. Compared to traditional single-sensor SLAM (relying solely on LiDAR or cameras), Fusion SLAM combines complementary information from different sensors, providing higher precision and more robust positioning and mapping results in complex and dynamic environments.

The basic idea of Fusion SLAM is to fuse information from different sensors through data fusion algorithms, compensating for the limitations of single sensors in certain situations. For instance, in low-light or highly reflective environments, visual SLAM might be affected, while LiDAR SLAM performs better. IMU provides acceleration and angular velocity information, helping address positioning drift over short periods. By fusing data from these sensors, the system can more accurately estimate the robot's position and update environmental maps in real-time.

The technical advantages of Fusion SLAM include:

1. Improved Precision and Robustness:

By integrating data from multiple sensors, the limitations of any single sensor can be compensated for, enhancing overall performance.

2. Adaptability to Complex Environments:

For scenarios with significant environmental changes, Fusion SLAM adapts better, improving positioning and mapping capabilities in complex settings.

3. Reduced Error Accumulation:

The synergistic effect of multi-source data effectively inhibits error accumulation and drift, enhancing system stability.
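One simple way to see why fusion improves precision is inverse-variance weighting of two independent estimates of the same quantity. The sketch below is a generic textbook technique, not the method of any specific Fusion SLAM system; the function name `fuse` and the numbers are hypothetical.

```python
def fuse(est_a, var_a, est_b, var_b):
    """Fuse two independent estimates by inverse-variance weighting.

    Returns the fused estimate and its variance. The fused variance is
    never larger than the smaller of the two input variances.
    """
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused = (w_a * est_a + w_b * est_b) / (w_a + w_b)
    fused_var = 1.0 / (w_a + w_b)
    return fused, fused_var


# Hypothetical position along a road (metres): a coarse camera-based
# estimate (variance 4.0) and a tighter LiDAR-based one (variance 1.0).
position, variance = fuse(10.0, 4.0, 12.0, 1.0)
print(position, variance)
```

The result sits closer to the more certain sensor, and its variance is lower than either input.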

Application of SLAM Technology in Autonomous Driving

The application of SLAM technology in autonomous driving holds significant strategic importance, particularly in vehicle positioning, environment perception, and map construction. Traditional autonomous driving systems often rely on high-precision maps and external positioning systems (e.g., RTK-GPS) for assistance, but these methods are costly and highly dependent on the environment. SLAM technology, by constructing environmental maps in real-time and estimating the vehicle's current position, enables autonomous driving systems to perform environmental perception and positioning tasks independently without relying on high-precision maps or GPS signals.

1. Real-time Positioning and Mapping

One of the core functions of SLAM technology is real-time positioning and mapping. In autonomous driving, SLAM enables vehicles to construct real-time maps of their surroundings without pre-loaded maps, positioning themselves by comparing newly acquired environmental information with existing map data. This is crucial for adapting to dynamically changing environments (e.g., road construction, obstacles). By continuously updating maps, autonomous driving systems can maintain precise positioning in new or changing environments.
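Continuous map updating is often illustrated with an occupancy grid, where each cell stores the log-odds of being occupied and every new observation nudges that value. The sketch below shows the update for a single cell; the sensor model probabilities (0.7 for a hit, 0.3 for a miss) and the function names are assumptions for illustration.

```python
import math

# Assumed inverse sensor model: a "hit" suggests 70% occupancy,
# a "miss" suggests 30% occupancy.
L_OCC = math.log(0.7 / 0.3)
L_FREE = math.log(0.3 / 0.7)


def update_cell(log_odds, hit):
    """Add the evidence from one observation to a cell's log-odds."""
    return log_odds + (L_OCC if hit else L_FREE)


def probability(log_odds):
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


cell = 0.0  # log-odds 0 means "unknown" (probability 0.5)
cell = update_cell(cell, hit=True)
after_one_hit = probability(cell)
cell = update_cell(cell, hit=False)
after_hit_and_miss = probability(cell)
print(after_one_hit, after_hit_and_miss)
```

Because evidence is additive, a newly appeared obstacle such as a construction barrier gradually overrides the older "free" belief as observations accumulate.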

2. Enhanced Autonomous Navigation Capabilities

Autonomous driving systems need to navigate autonomously without human intervention. SLAM technology provides real-time, precise positioning data, enabling vehicles to plan routes independently based on maps and current positions. By combining LiDAR SLAM and visual SLAM, autonomous driving systems can handle complex road changes, plan optimal paths, avoid obstacles, and make dynamic maneuvers.

3. Loop Closure Detection and Path Optimization

In autonomous driving, vehicles often face long driving distances or repetitive routes, making loop closure detection particularly important. SLAM technology effectively detects whether the vehicle has returned to a previously visited location, correcting positioning drift caused by accumulated errors and improving path planning and map accuracy. Additionally, loop closure detection optimizes vehicle trajectories, making paths more precise and efficient.
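Loop closure detection is commonly framed as a place-recognition problem: each keyframe is summarised by a descriptor vector, and the current descriptor is compared against older ones. The following is a minimal sketch under that assumption. The descriptors, the `min_gap` and `threshold` parameters, and the function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def detect_loop(descriptors, current_idx, min_gap=3, threshold=0.95):
    """Return the index of the best-matching earlier keyframe, or None.

    Keyframes closer than min_gap to the current one are skipped so that
    consecutive, naturally similar frames are not reported as loops.
    """
    best_idx, best_sim = None, threshold
    for i in range(current_idx - min_gap + 1):
        sim = cosine_similarity(descriptors[i], descriptors[current_idx])
        if sim >= best_sim:
            best_idx, best_sim = i, sim
    return best_idx


# Hypothetical keyframe descriptors; the last one resembles the first.
keyframes = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.5, 0.5, 0.0],
    [0.99, 0.05, 0.0],
]
match = detect_loop(keyframes, current_idx=4)
no_match = detect_loop(keyframes, current_idx=3)
print(match, no_match)
```

A detected match is then passed to the optimization stage as an extra constraint between the two poses.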

4. Multi-sensor Fusion and Environmental Perception

Autonomous driving vehicles are typically equipped with various sensors, such as LiDAR, cameras, IMUs (Inertial Measurement Units), and ultrasonic sensors, providing multi-source data for SLAM. By fusing information from different sensors, SLAM technology overcomes the limitations of single sensors. For example, in low-light or adverse weather conditions, visual SLAM might be affected, while LiDAR provides stable distance information. Combining IMU acceleration and angular velocity data can address short-term positioning drift. Through data fusion, SLAM technology enhances the accuracy and robustness of environmental perception.

5. Indoor and Underground Navigation

SLAM technology is crucial in environments where GPS signals are unavailable, such as indoors or underground. Autonomous driving vehicles in subways, tunnels, or dense urban areas may be unable to rely on external positioning systems. Here, SLAM technology constructs real-time environmental maps and estimates positions using onboard sensors. For instance, LiDAR SLAM and visual SLAM operate effectively in these environments, supporting autonomous driving positioning and navigation and preventing system failures due to signal loss.

6. Improved Fault Tolerance of Autonomous Driving Systems

SLAM technology enhances the fault tolerance of autonomous driving systems. Even if certain sensors fail, SLAM can maintain positioning and mapping functions using data from other sensors. This is crucial for system robustness, as autonomous driving vehicles must maintain high reliability to ensure safety. Through real-time corrections and map updates, SLAM keeps errors low and ensures continuous operation.

7. Long-distance Driving and Map Optimization

During extended autonomous driving, vehicles accumulate errors. Here, the map optimization and global optimization capabilities of SLAM are essential. Through loop closure detection and graph optimization algorithms, SLAM corrects errors during long drives, ensuring accurate vehicle positioning. Additionally, SLAM continuously updates maps during driving, improving road representation and generating more precise environmental models, enhancing autonomous driving system accuracy.
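The effect of a loop closure on accumulated drift can be sketched with a deliberately simplified correction: once the vehicle is known to be back at an earlier position, the end-point error is spread evenly along the trajectory. This is only a stand-in for graph optimization, which solves a least-squares problem over positions and headings together; the function name and the trajectory are hypothetical.

```python
def distribute_loop_error(poses, loop_target):
    """Spread the loop-closure error linearly over a 2D trajectory.

    poses:       estimated (x, y) positions, first to last
    loop_target: where the last pose should be according to the
                 loop closure (for example, the first pose)
    """
    n = len(poses) - 1
    if n <= 0:
        return list(poses)
    err_x = loop_target[0] - poses[-1][0]
    err_y = loop_target[1] - poses[-1][1]
    return [
        (x + err_x * i / n, y + err_y * i / n)
        for i, (x, y) in enumerate(poses)
    ]


# Hypothetical square route: the vehicle returns to its start, but
# drift places the final estimate at (0.8, -0.4) instead of (0, 0).
drifted = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0), (0.8, -0.4)]
corrected = distribute_loop_error(drifted, loop_target=drifted[0])
print(corrected)
```

The first pose is left unchanged, the last pose lands on the loop target, and intermediate poses receive a proportional share of the correction.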

8. Assisted Driving and Automatic Parking

SLAM technology is widely applicable in assisted driving and automatic parking systems. In complex environments like parking lots and narrow roads, autonomous driving systems require high-precision positioning and environmental mapping to assist in parking and obstacle avoidance. SLAM technology provides precise environmental maps, aiding in planning optimal parking paths, avoiding collisions with obstacles, and improving parking efficiency and safety.
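Once SLAM has produced a map, planning a parking path becomes a search problem over it. Below is a minimal sketch using breadth-first search on a small occupancy grid, where 0 marks a free cell and 1 an obstacle. The grid layout and the function name `plan_path` are hypothetical, and production planners also account for vehicle size and turning radius.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Shortest 4-connected path from start to goal, or None if blocked.

    grid is a list of rows; cells equal to 0 are free, 1 are obstacles.
    Cells are addressed as (row, column).
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# Hypothetical parking lot: a row of parked cars with a single gap.
lot = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
path = plan_path(lot, start=(0, 0), goal=(2, 0))
print(path)
```

Breadth-first search is chosen here because, on a grid with uniform step cost, it returns a shortest path without needing a heuristic.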

9. Road Changes and Real-time Updates

Autonomous driving systems must adapt quickly to road condition changes. SLAM technology reflects real-time road conditions and environmental changes by continuously updating maps. For example, autonomous vehicles can adjust routes and make timely decisions based on the latest road construction information, traffic signs, and obstacle locations. Through real-time environmental perception and map updates, SLAM technology helps vehicles effectively respond to emergencies and dynamic changes.

10. Support for Autonomous Testing and Simulation

SLAM technology also plays a vital role in the development and testing phases of autonomous driving. Using high-precision maps constructed by SLAM, developers can test autonomous driving system performance in simulation environments. Additionally, SLAM technology supports autonomous driving testing by continuously collecting new data and updating maps in real-time to validate system performance under different road and environmental conditions.

Challenges of SLAM Technology

While SLAM technology provides robust support for autonomous vehicle positioning and navigation, it also faces several challenges, primarily stemming from the complexity and variability of autonomous driving environments and high system performance requirements.

1. Environmental Complexity and Dynamic Changes

Autonomous vehicles operate in complex and dynamically changing environments, including traffic flow, pedestrians, obstacles, and weather conditions. SLAM technology must function effectively in these environments, but factors like moving objects, vehicle speed changes, and intense light fluctuations can affect sensor data acquisition, influencing map construction and positioning accuracy. For instance, the movement of pedestrians and other vehicles may invalidate parts of a previously constructed map, requiring SLAM systems to incorporate these changes in real time to keep both positioning and the map accurate.

2. Sensor Noise and Data Quality

SLAM technology relies on various sensors (such as lidars, cameras, IMUs, GPS, etc.) to acquire environmental information, but sensor noise, data quality, and accuracy differences may affect the performance of SLAM systems. For example, the measurement accuracy of lidars may be disrupted in rainy or snowy weather, visual sensors may not perform as expected in low-light environments, and errors accumulated in IMUs during prolonged operation may lead to positioning drift. These sensor limitations require SLAM systems to possess strong fault tolerance and robustness to handle data inconsistencies from different sensors.

3. Computational Resource Consumption

SLAM technology, especially Fusion SLAM (e.g., the combination of visual SLAM and LiDAR SLAM), has high computational resource requirements. Real-time processing of large amounts of data from multiple sensors and generating high-precision maps and positioning results require powerful computing capabilities. Especially in highly dynamic autonomous driving scenarios, SLAM systems must quickly process real-time data and update environmental models. Therefore, achieving efficient, low-latency SLAM computations with limited computational resources is a significant challenge. Computational platforms in autonomous vehicles need sufficient processing power while also balancing power consumption and heat management.

4. Long-term Operation and Error Accumulation

A challenge faced by SLAM technology is that errors may gradually accumulate over time, especially during prolonged operations. These errors can affect positioning accuracy and map quality. Error accumulation stems not only from sensor noise but also from environmental uncertainty, positioning drift, and map inconsistencies. For instance, during long-distance driving, errors gradually amplify as the vehicle travels further, ultimately degrading positioning accuracy. Although techniques like loop closure detection and graph optimization can effectively mitigate error accumulation, further reducing errors and ensuring accuracy in complex environments remains a challenge.

5. Loop Closure Detection and Loop Closure Optimization

Loop closure detection is a crucial part of SLAM systems, especially in autonomous driving applications. It identifies when a vehicle returns to a previously visited location, enabling optimization and correction of the constructed map. However, loop closure detection often faces challenges in real-world environments. Due to the highly structured nature of urban environments (e.g., long straight roads, repetitive buildings) or specific environmental constraints (e.g., underground passages), SLAM systems may mistakenly identify two distinct locations as loop closure points. The accuracy of loop closure detection directly impacts the effectiveness of loop closure optimization. Therefore, improving the accuracy of loop closure detection and avoiding false positives is a technical challenge.

6. Coordination of Multi-sensor Fusion

To enhance the accuracy and robustness of SLAM systems, autonomous vehicles typically use multiple sensors (e.g., lidars, cameras, IMUs, GPS) for data acquisition. Effectively fusing data from different sensors to complement each other and improve overall system performance is another challenge. Different sensor types vary in performance, sampling frequency, field of view, etc. Coordinating data from these sensors and addressing conflicts and inconsistencies between them to ensure data accuracy and real-time performance is a complex task. Maintaining sensor data consistency and reliability, especially in special conditions like low light, high-speed motion, or severe weather, poses a significant challenge for SLAM technology.
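Differences in sampling frequency are usually handled by bringing measurements onto a common time base before fusing them. The sketch below linearly interpolates a high-rate signal (for example an IMU yaw rate) at a camera frame's timestamp. The function name, timestamps, and values are hypothetical, and clock offsets between sensors are ignored.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t):
    """Linearly interpolate a sampled signal at time t.

    timestamps must be sorted in increasing order and t must lie
    within the sampled interval.
    """
    if not timestamps[0] <= t <= timestamps[-1]:
        raise ValueError("t is outside the sampled interval")
    i = bisect_left(timestamps, t)
    if timestamps[i] == t:
        return values[i]
    t0, t1 = timestamps[i - 1], timestamps[i]
    w = (t - t0) / (t1 - t0)
    return values[i - 1] + w * (values[i] - values[i - 1])


# Hypothetical 100 Hz IMU yaw-rate samples (rad/s) and a camera frame
# captured between two of them.
imu_t = [0.00, 0.01, 0.02, 0.03]
imu_yaw_rate = [0.0, 0.1, 0.2, 0.3]
camera_t = 0.015
yaw_rate_at_frame = interpolate_at(imu_t, imu_yaw_rate, camera_t)
print(yaw_rate_at_frame)
```

Aligning samples this way lets the fusion stage treat readings from different sensors as if they were taken at the same instant.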

7. High-precision Map Construction and Update

High-precision maps are crucial for autonomous vehicles to navigate efficiently and safely, but ensuring map accuracy and update speed during real-time map construction with SLAM technology remains challenging. While SLAM aids autonomous systems in constructing environmental maps in real-time, these maps often lack the precision and detail required for autonomous driving. In autonomous driving, especially in complex scenarios (e.g., urban roads, intersections), real-time updating and maintaining high-precision maps present a significant challenge for SLAM technology.

8. Integration of Global Positioning System (GPS) and Environmental Perception

Although SLAM technology enables high-precision positioning and map construction, its accuracy is still limited when autonomous vehicles operate in GPS-denied areas (e.g., tunnels, underground parking lots), where no external position reference is available to correct drift. In such cases, leveraging sensors like cameras, lidars, and IMUs to compensate for lost GPS signals and maintain high-precision positioning is an urgent issue. Especially at high speeds, SLAM systems require higher real-time performance and accuracy. Ensuring accurate positioning and navigation without GPS signals is a challenge for SLAM technology in autonomous driving.

9. System Robustness and Safety

SLAM technology must maintain robustness and stability in various extreme environments. For instance, in low-visibility conditions like rain, snow, or fog, autonomous vehicles still rely on SLAM for positioning and map construction, yet sensors like cameras and lidars may perform poorly, leading to inaccurate data. Enhancing SLAM system robustness to handle extreme environments and ensure safety is a major challenge in autonomous driving.

10. Real-time and Efficiency Issues

SLAM technology in autonomous driving requires not only high accuracy but also real-time updates and processing of environmental data. Since autonomous systems must make decisions at high speeds, SLAM must process sensor data and respond within milliseconds. This demands significant algorithmic optimization and computational power. Improving system real-time performance and efficiency while ensuring accuracy is a pressing issue.

Future Development Directions

SLAM technology has a promising future in autonomous driving. With technological advancements and changing market demands, SLAM applications in autonomous driving will continue to innovate and deepen.

1. Higher Precision and Reliability

Although current SLAM technology provides relatively accurate positioning and mapping for autonomous driving, challenges remain in precision and reliability in complex scenarios (e.g., urban intersections, tunnels, mountainous areas). Future SLAM technology will aim for higher accuracy, focusing on more refined algorithm optimization, sensor fusion techniques, and higher-quality map construction. For example, combining deep learning with traditional SLAM algorithms can use neural networks for deeper environmental understanding, reducing errors and enhancing system robustness.

2. Efficient Sensor Fusion

As autonomous driving technology evolves, future SLAM systems will increasingly rely on data fusion from multiple sensors. Lidars, cameras, millimeter-wave radars, IMUs, ultrasonic sensors, etc., will become essential components of autonomous SLAM systems. Sensor fusion needs to address data consistency, real-time performance, and complementarity. Future SLAM technology will further enhance processing capabilities for multi-sensor data, leveraging the strengths and compensating for the weaknesses of different sensors to improve system stability, accuracy, and robustness, especially in harsh weather or low-visibility environments.

3. Real-time and Efficiency

Real-time performance and efficiency are critical challenges for SLAM technology in autonomous driving. Autonomous vehicles must process large amounts of sensor data in real-time at high speeds, updating maps quickly and positioning accurately. With advancements in computing hardware (e.g., edge computing, dedicated AI chips), SLAM technology will achieve breakthroughs in hardware acceleration, data compression, and algorithm optimization. More efficient algorithms and stronger computing platforms will enable SLAM to complete map construction and updates faster, meeting autonomous driving requirements for real-time performance and efficiency.

4. Long-term and Large-scale Map Construction

Currently, SLAM technology typically handles positioning and mapping in relatively local environments. However, as autonomous driving systems expand to broader scenarios, SLAM's ability to handle long-term and large-scale map construction will be a key focus for future development. Future SLAM systems must manage thousands or even tens of thousands of kilometers of road maps while ensuring efficient long-term stable operation. Key issues include avoiding error accumulation, improving map update efficiency, and ensuring global consistency.

5. Adaptability and Intelligence

Future SLAM technology will no longer be static but adaptive, automatically adjusting strategies based on environmental changes. For example, in different road environments like urban streets and highways, SLAM technology will intelligently select the best map construction and positioning methods based on road characteristics, traffic conditions, weather changes, etc. Combining deep learning with traditional algorithms, SLAM systems will recognize and adapt to environments, dynamically adjusting data processing modes and positioning accuracy, enhancing system flexibility and intelligence.

6. Distributed SLAM Based on Cloud Platforms

With advancements in 5G, cloud computing, and edge computing, SLAM systems may shift from standalone in-vehicle computing platforms to distributed cloud-based architectures. Through cloud platforms, multiple vehicles can share environmental information, map data, and real-time positioning data, collaborating to provide broader and more comprehensive environmental perception and positioning support. Distributed SLAM systems will enable large-scale collaborative map updates, cross-vehicle cooperative positioning, and more precise environmental perception, reducing the burden on individual vehicles' local sensors and improving global positioning accuracy and stability.

7. End-to-end SLAM Combined with Deep Learning

The development of deep learning (especially convolutional neural networks and generative adversarial networks) presents new opportunities for SLAM technology. Future SLAM technology is expected to integrate with deep learning, particularly in visual SLAM, enhancing environmental recognition and feature extraction capabilities. By training deep learning models, SLAM systems can more accurately identify and distinguish different objects, scenes, and environmental features, improving positioning and mapping accuracy. Additionally, deep learning can enhance sensor data processing under adverse conditions (e.g., low light, rain, snow) to further improve system robustness and accuracy.

8. Precision in Loop Closure Detection and Loop Closure Optimization

Loop closure detection is a critical technology in SLAM systems, especially in autonomous driving, where accurately identifying loop closure points and optimizing loops significantly improves positioning accuracy. Future SLAM technology will further enhance the accuracy and reliability of loop closure detection algorithms, particularly in complex and repetitive environments, using intelligent algorithms to avoid false loop closures and detection errors. Additionally, loop closure optimization techniques will become more efficient, with optimization algorithms capable of real-time processing of large-scale map data, reducing error accumulation, and ensuring long-term positioning accuracy.

9. Enhancing SLAM Safety and Robustness

Autonomous driving demands high safety standards, and the stability and robustness of SLAM technology are fundamental to ensuring autonomous system safety. Future SLAM technology will further strengthen its ability to adapt to environmental changes, particularly in complex environments like extreme weather, low light, and night driving, through algorithmic and system design improvements. Techniques like multi-sensor data fusion, fault detection and recovery mechanisms, and redundant systems will enhance SLAM system robustness in uncertain environments. Additionally, SLAM systems must have self-diagnosis capabilities to identify and correct errors, ensuring safety and stability.

10. Cross-industry Applications and Standardization

As autonomous driving technology matures, SLAM technology applications will extend beyond the automotive industry to other sectors like logistics, robotic navigation, and drones. Cross-industry SLAM technology will promote interconnectivity between different technology platforms and devices, driving standardization of SLAM systems. Standardization will improve SLAM technology compatibility and reliability, fostering the global adoption of autonomous driving technology.

Conclusion

As a groundbreaking positioning and navigation solution, SLAM technology offers new perspectives for autonomous driving. Its autonomy and flexibility enable vehicles to efficiently position and navigate in complex dynamic environments, reducing autonomous driving technology costs and implementation difficulties. Despite current challenges, with advancements in deep learning, multi-sensor fusion, and cloud collaboration, the application prospects for SLAM in autonomous driving will broaden.