NVIDIA's Revenue Doubles, but Stock Price Plummets - What Went Wrong in Q3?

11/21 2024

Author|Cora Xu, Editor|Evan

The next step for generative AI lies within NVIDIA's financial report.

NVIDIA has had a remarkable 2024. Founded in 1993, the company took 30 years to reach the trillion-dollar club. In 2024, riding the wave of large models, its market value doubled, passing the USD 2 trillion mark in March. In June, it became the world's most valuable company by market capitalization. By November, NVIDIA's market value surpassed Apple's peak of USD 3.54 trillion, reaching USD 3.65 trillion.

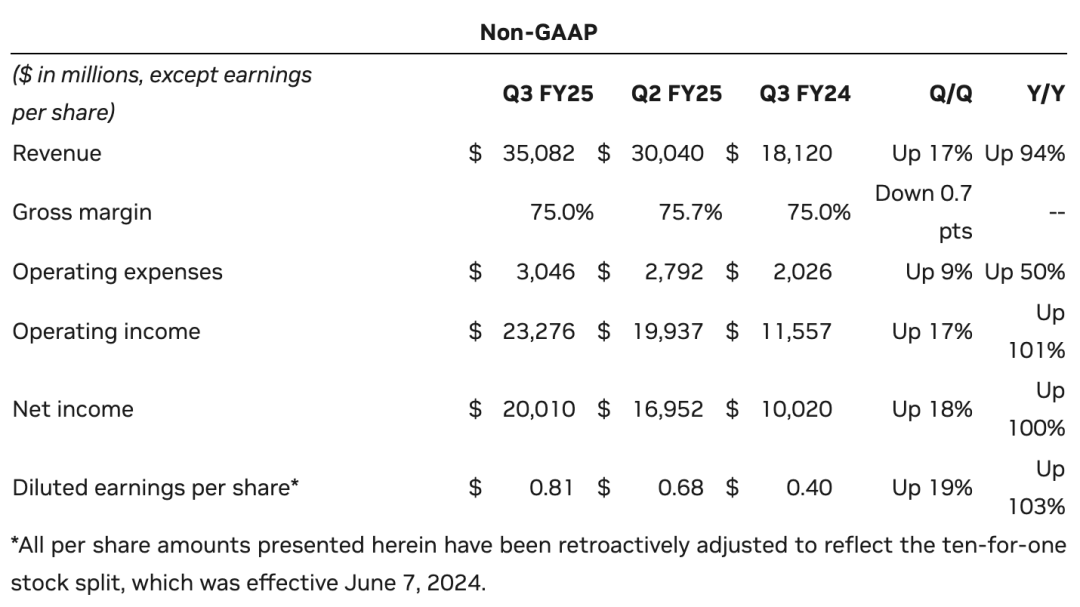

After reaching the pinnacle, the next chapter in NVIDIA's technological legend is hidden within its latest financial report and earnings call. On November 21, NVIDIA released its earnings report for the third quarter of fiscal year 2025, which ended October 27, 2024. Q3 revenue hit a record high of USD 35.1 billion (approximately RMB 254.2 billion), a quarter-on-quarter increase of 17% and a year-on-year increase of 94%. Adjusted net income was USD 20 billion (approximately RMB 144.9 billion), a quarter-on-quarter increase of 18% and a year-on-year increase of 100%.

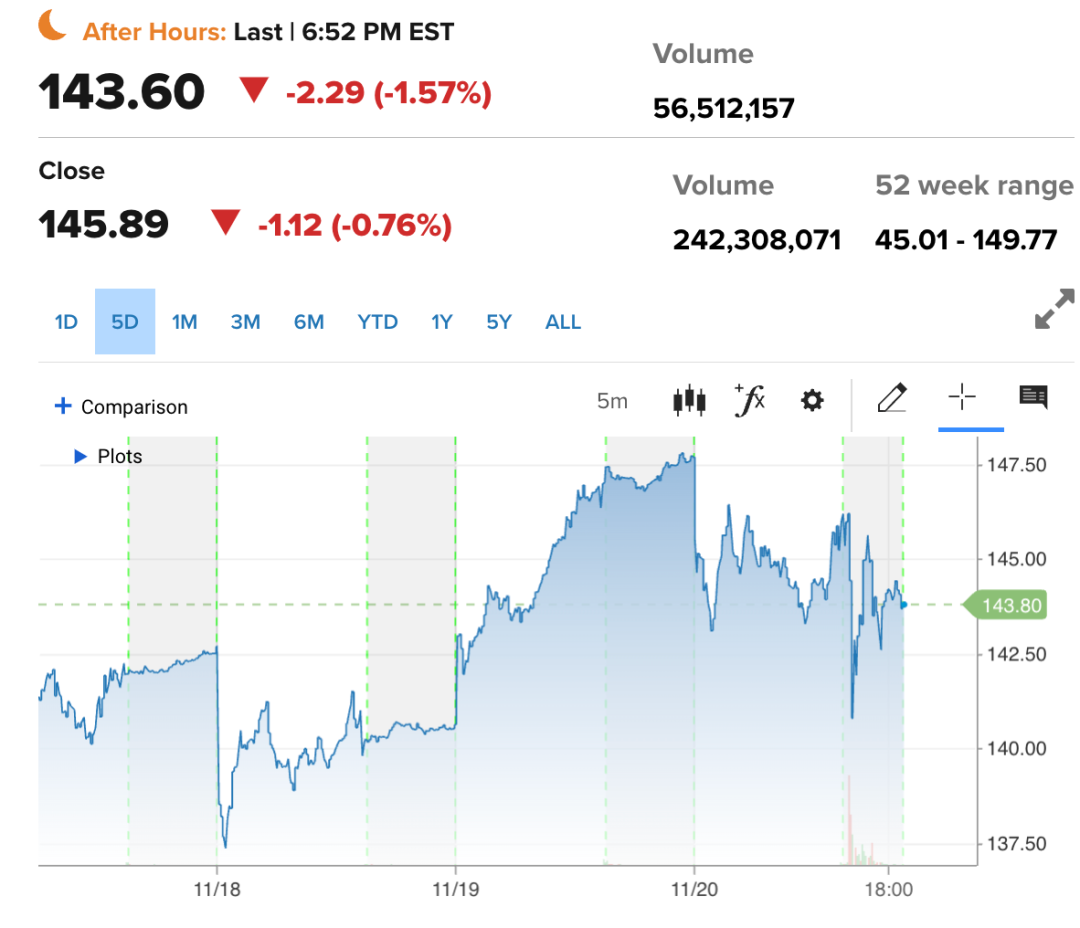

Most of NVIDIA's revenue comes from its datacenter business, which brought in a record USD 30.8 billion (approximately RMB 223.1 billion). Of this, networking revenue was approximately USD 3.1 billion, or 10.1%.

NVIDIA CFO Colette Kress said that the new Blackwell series of processors has been widely welcomed by NVIDIA's customers. Blackwell sales in the fourth quarter will exceed the company's initial estimate of several billion dollars, and its gross margin will gradually increase from below 70% initially to around 70% as production increases. NVIDIA's founder and CEO, Jen-Hsun Huang, stated, "As foundational model creators scale up pre-training, post-training, and inference, demand for Hopper and anticipation for the fully ramped Blackwell are incredible."

After the earnings report was released, NVIDIA's stock fell by as much as about 5% in after-hours trading before the decline narrowed to approximately 2.29%. Investors are primarily concerned about NVIDIA's slowing chip sales growth. NVIDIA's adjusted Q3 gross margin fell to 75.0%, a quarter-on-quarter decrease of 0.7 percentage points.

NVIDIA went from crossing the trillion-dollar mark to becoming the world's most valuable company in a short period. Its rapid stock price growth reflects not only how it seized the generative AI opportunity but also investors' recognition, through generative AI, of the company's long-term accumulation in technology, ecosystem, and applications, which together form a moat that is hard to breach.

Can NVIDIA's myth continue? Will the highly anticipated Blackwell be delivered on schedule? What will be NVIDIA's next legendary story? Standing at the end of the year, we interviewed multiple senior chip professionals to look back at how NVIDIA seized its opportunities and look forward to a new future.

#01 Datacenter Revenue Sets New Record, Doubling Year-on-Year

NVIDIA's Q3 revenue performance is as follows:

Datacenter: Q3 revenue reached a record high of USD 30.8 billion, a 17% increase from the previous quarter and a 112% increase from the same period last year. The growth came from increased GPU demand due to the construction of supercomputing centers and expanded partnerships with companies such as AWS, Lenovo, and Ericsson.

Gaming and AI PCs: Q3 gaming revenue was USD 3.3 billion, a 14% increase from the previous quarter and a 15% increase from the same period last year. This quarter's growth mainly came from increased demand for GPUs in desktops and laptops, as well as increased revenue from gaming console chips. This quarter, NVIDIA also launched 20 GeForce RTX and DLSS games, and ASUS and MSI began selling new RTX AI PCs with performance reaching 321 trillion AI operations per second.

Professional Visualization: Q3 revenue was USD 486 million, a 7% increase from the previous quarter and a 17% increase from the same period last year.

Meanwhile, NVIDIA announced that Foxconn is using NVIDIA Omniverse-based digital twins and industrial AI to bring three factories producing NVIDIA GB200 Grace Blackwell superchips online faster.

Automotive and Robotics: Q3 automotive revenue was USD 449 million, a 30% increase from the previous quarter and a 72% increase from the same period last year. This quarter's growth mainly came from autonomous vehicle chips and chips sold by NVIDIA for robotics.

NVIDIA revealed that Volvo will launch a new electric SUV powered by NVIDIA accelerated computing. Japanese and Indian companies, including Toyota and Ola Motors, are using NVIDIA Isaac and Omniverse to build the next wave of physical AI.
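To put the segment figures above in proportion, the short Python sketch below computes each segment's share of total Q3 revenue. It uses only the rounded numbers quoted in this article; the names `SEGMENTS`, `TOTAL_REVENUE`, and `segment_shares` are illustrative, and the small remainder it reports is simply whatever the four listed segments do not cover, plus rounding.

```python
# Q3 FY2025 figures as quoted in this article, in USD billions (rounded).
TOTAL_REVENUE = 35.1
SEGMENTS = {
    "Datacenter": 30.8,
    "Gaming and AI PCs": 3.3,
    "Professional Visualization": 0.486,
    "Automotive and Robotics": 0.449,
}


def segment_shares(segments, total):
    """Return each segment's share of total revenue, in percent."""
    return {name: revenue / total * 100 for name, revenue in segments.items()}


shares = segment_shares(SEGMENTS, TOTAL_REVENUE)
for name, share in shares.items():
    print(f"{name}: {share:.1f}% of total revenue")

# Revenue not covered by the four listed segments (other revenue plus rounding).
unlisted = TOTAL_REVENUE - sum(SEGMENTS.values())
print(f"Not covered by the four segments: USD {unlisted:.3f} billion")
```

On these rounded inputs, datacenter works out to roughly 88% of total revenue, which is why the market reads NVIDIA's results almost entirely through that one segment.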

This quarter, GAAP diluted earnings per share were USD 0.78, a 16% increase from the previous quarter and a 111% increase from the same period last year. Non-GAAP diluted earnings per share were USD 0.81, a 19% increase from the previous quarter and a 103% increase from the same period last year.

NVIDIA expects revenue of USD 37.5 billion, plus or minus 2%, for the fourth quarter of fiscal year 2025. This implies that year-on-year revenue growth will slow from 94% in Q3 to approximately 69.5%.

The company expects Q4 GAAP and Non-GAAP gross margins to be 73.0% and 73.5%, respectively, with a fluctuation of ±50 basis points. GAAP and Non-GAAP operating expenses are expected to be approximately USD 4.8 billion and USD 3.4 billion, respectively.
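The guidance above is quoted as midpoints with tolerances, so it may help to spell out the implied ranges. The following Python sketch does that using only the figures in this section; the function names `revenue_range` and `margin_range` are illustrative, not part of any NVIDIA disclosure.

```python
def revenue_range(midpoint, tolerance_pct):
    """Low and high ends of a figure quoted as midpoint +/- tolerance_pct percent."""
    delta = midpoint * tolerance_pct / 100
    return midpoint - delta, midpoint + delta


def margin_range(midpoint_pct, tolerance_bps):
    """Low and high ends of a margin quoted as midpoint +/- tolerance_bps basis points."""
    delta = tolerance_bps / 100  # 100 basis points = 1 percentage point
    return midpoint_pct - delta, midpoint_pct + delta


# Q4 FY2025 guidance as quoted in this article.
rev_low, rev_high = revenue_range(37.5, 2)            # USD billions
gaap_low, gaap_high = margin_range(73.0, 50)          # percent
non_gaap_low, non_gaap_high = margin_range(73.5, 50)  # percent

print(f"Q4 revenue: USD {rev_low:.2f} to {rev_high:.2f} billion")
print(f"GAAP gross margin: {gaap_low:.1f}% to {gaap_high:.1f}%")
print(f"Non-GAAP gross margin: {non_gaap_low:.1f}% to {non_gaap_high:.1f}%")
```

In other words, the revenue guidance spans USD 36.75 billion to USD 38.25 billion, and a tolerance of 50 basis points means half a percentage point on either side of each gross margin midpoint.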

#02 Blackwell Faces Further Delays; 13,000 Samples Already Sent to Customers

The key to NVIDIA's continued success lies in its AI graphics processing unit (GPU), Blackwell, launched this year.

During the latest earnings call, NVIDIA provided an update on the chip's progress. "Our Blackwell production is better than expected, with higher yields than anticipated," said Jen-Hsun Huang during the investor call. He also mentioned that Microsoft, Oracle, and OpenAI have already started receiving the company's next-generation AI chip, Blackwell. "Almost every company in the world seems to be part of our supply chain. Every customer is scrambling to capture market share."

"Blackwell is now being adopted by all our major partners, who are working to build their own datacenters," added NVIDIA CFO Colette Kress, noting that 13,000 chip samples have already been sent to customers.

Manufactured using TSMC's 4NP process (a chip process specifically tailored for NVIDIA, offering better performance than 5nm chips), this GPU features a brand-new architecture with 208 billion transistors, accelerating inference and training for large language models (LLMs) and mixture-of-experts (MoE) models. Compared to the previous-generation H100, Blackwell can complete the same training tasks in less time with fewer GPUs.

This is a cornerstone for NVIDIA in the generative AI market. After all, most vendors currently use the H100, which NVIDIA announced in March 2022. Tech giants such as AWS, CoreWeave, Google, Meta, Microsoft, and Oracle have already made significant purchases of Blackwell GPUs. Even before shipments began, NVIDIA CEO Jen-Hsun Huang declared, "Blackwell is sold out."

However, the more impressive a chip's performance, the more complex its manufacturing process, and the harder it is to bring into full production. Blackwell GPUs face the same challenge. Originally scheduled for shipment in Q2 2024, Blackwell was delayed to Q4 2024 due to design and process defects. Huang later responded that with the help of long-time partner TSMC, the Blackwell design process had been improved.

Shortly before the earnings report was released, rumors of further Blackwell delays surfaced, this time due to issues with the server racks housing the chips rather than the chips themselves. The Information reported that in the latest tests, the complex design of the Blackwell racks made it difficult to dissipate heat, requiring liquid cooling in datacenters to maximize performance.

The repeated delays in bringing Blackwell into use have sparked dissatisfaction among many investors. NVIDIA's stock price plunged 8% overnight after its Q2 earnings report was released because Blackwell failed to meet its expected shipment schedule. However, NVIDIA is now a gold-standard customer for TSMC, accounting for most of TSMC's advanced capacity. It's only a matter of time before Blackwell shipments begin, and by 2026, the situation will ease.

NVIDIA CFO Colette Kress stated, "There are supply constraints for both Hopper and Blackwell chips, and demand for Blackwell is expected to exceed supply for several quarters in fiscal year 2026." She believes the company is on track to generate "billions of dollars" in Blackwell revenue in Q4. She also mentioned that demand for the previous-generation Hopper chip remains strong.

During the earnings call, Huang was also asked whether the Trump administration's tariff increases would affect NVIDIA. In response, Huang said, "Regardless of the decisions made by the new administration, we will, of course, support the orders of the government and the highest authorities," and added that the company would "fully comply with any resulting regulations."

#03 Will Corporate Self-Developed Chips Threaten NVIDIA?

NVIDIA's repeated delays in Blackwell shipments have frustrated tech giants eager to build their AI factories.

Musk built a 100,000-GPU datacenter for his startup xAI in just 122 days, after which Meta, OpenAI, and Google announced increased investments in datacenters. OpenAI CEO Sam Altman has openly stated that existing operations are severely limited by GPU resources, leading to the postponement of many short-term plans. xAI's GPU advantage poses a significant threat to its rivals.

Many tech giants have found a new path forward by developing their own AI chips.

In fact, corporate self-developed chips are nothing new. In 2015, Google began developing its custom chip, the TPU (Tensor Processing Unit). In 2019, Intel acquired Israeli AI chip company Habana Labs for USD 2 billion. In 2020, Amazon launched its custom cloud AI training chip, AWS Trainium.

The desire for self-developed chips has surged among major companies since the emergence of generative AI.

Amazon launched Trainium2 in 2023, designed specifically for machine learning training, offering up to four times the training speed of the first-generation Trainium chip.

Meta showcased its next-generation inference chip, MTIA, at the 2024 Hot Chips Conference. Three times more powerful than the first-generation MTIA, it uses TSMC's 5nm process and has a thermal design power of 90W.

Google announced its sixth-generation TPU, Trillium (TPU v6), on May 14, 2024. The Trillium chip offers 67% higher energy efficiency than the TPU v5e. Google also introduced its first CPU, Axion, to be used in conjunction with the Trillium chip.

OpenAI, which spearheaded the generative AI large model wave, is also investing heavily in self-developed chips. OpenAI is collaborating with Broadcom to develop its first custom AI inference chip, manufactured by TSMC, with production set to begin in 2026. OpenAI CEO Sam Altman has also personally invested in AI chip startup Rain AI.

The increasing investment in self-developed chips by tech giants is driven by their soaring costs.

A few years ago, a chip industry investor walked Silicon Bunny through the math. If a company spends USD 40 billion annually on NVIDIA products, and those products require an additional USD 50-60 billion annually in electricity and operating costs, the total annual expenditure would be USD 90-100 billion. As the demand for computing power from large models increases exponentially, this cost will continue to rise. Large companies can only cut these expenses in two ways: developing chips themselves or supporting startups.
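The investor's back-of-the-envelope math can be written out as a short Python sketch. It uses only the figures quoted above (USD 40 billion in hardware, USD 50-60 billion in electricity and operating costs); the constant and function names are illustrative.

```python
# Annual figures from the investor's example, in USD billions.
HARDWARE_SPEND = 40.0
OPERATING_COST_LOW = 50.0
OPERATING_COST_HIGH = 60.0


def total_annual_cost(hardware, operating):
    """Hardware purchases plus electricity and operating costs for one year."""
    return hardware + operating


total_low = total_annual_cost(HARDWARE_SPEND, OPERATING_COST_LOW)
total_high = total_annual_cost(HARDWARE_SPEND, OPERATING_COST_HIGH)

# Share of the yearly bill that goes to the chips themselves, in percent.
hardware_share_min = HARDWARE_SPEND / total_high * 100
hardware_share_max = HARDWARE_SPEND / total_low * 100

print(f"Total annual cost: USD {total_low:.0f} to {total_high:.0f} billion")
print(f"Hardware share of total: {hardware_share_min:.1f}% to {hardware_share_max:.1f}%")
```

On these numbers the total comes to USD 90 billion to USD 100 billion a year, and the chips account for less than half of the bill, which is why both cheaper hardware and lower running costs matter to large buyers.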

Will the self-developed chips of many tech companies pose a competitive threat to NVIDIA?

A chip industry insider told Silicon Bunny that this does not pose a significant threat to NVIDIA. If tech companies use chips primarily for cloud services that are then provided to other customers, convenience far outweighs chip costs. NVIDIA's mature ecosystem, software, and hardware are ready-made infrastructure for customers, with no additional learning costs. Corporate self-developed chips are therefore mostly for internal use, and tech giants cannot fully escape NVIDIA in the short term.

In addition to large companies, startups are also advancing further down the path of self-developed chips, with many even claiming during their chip launches that their products surpass NVIDIA in certain performance aspects.

"This is like the difference between a specialist athlete and an all-round athlete," said a chip entrepreneur.

Is there still an opportunity for chip companies? The chip entrepreneur said that because chip research and development is so expensive, a company that does not reach a volume of 1 million chips cannot recover its research and development costs, which makes it a big gamble for startups.

Setting aside the potential threat from self-developed chips, can NVIDIA hold on to the top spot globally?

As we all know, companies that rise with market trends are more or less caught up in financial bubbles, and NVIDIA is no exception. Generative AI has given NVIDIA its highest market valuation and also its darkest day.

On September 4, 2024, NVIDIA experienced its worst day in history, with a single-day market value loss of USD 279 billion, breaking the record for the largest one-day decline in the tech industry.

After the release of this financial report, NVIDIA's stock also experienced minor fluctuations, mainly due to concerns about the development of generative AI.

Some people also believe that as companies like OpenAI and Google shift their research and development focus from the training side to the inference side for large models, NVIDIA's advantage, which lies in the training side, will weaken, and its growth rate will gradually slow down.

However, the chip entrepreneur said that inference and training for large models have different purposes and starting points. Training is analogous to university education: its goal is to cultivate an excellent graduate, so performance matters most.

Inference, on the other hand, is analogous to the large model going to work, and for employers the core goal is to reduce labor costs and increase profits.

With computing power growing exponentially and Moore's Law nearing its end, blindly pursuing ever larger gains in computing power and performance is unlikely to continue. Some companies will instead pursue lower costs for the same computing power, which is consistent with the industry's current focus on inference. Training pursues performance, and inference pursues cost.

#04 Where Else Is NVIDIA's Room for Imagination?

For now, as long as artificial intelligence continues to develop along the path of GPUs and high-precision algorithms, NVIDIA's future will be immeasurable.

Intel and AMD, once in the same league, have long been left behind by NVIDIA. Intel's share price has fallen by nearly 55% this year. AMD's share price has fallen by more than 17% over the past month and by 5% this year. NVIDIA's share price, by contrast, has risen by nearly 200% this year, making it the biggest winner in the generative AI market.

NVIDIA has always built a solid foundation with chips as its core and ecosystem as its moat. The core of NVIDIA's success comes from its technological and ecological advantages built over years of steady progress.

First is its core business, GPUs, which is also the core reason why NVIDIA can rise in the generative AI era. NVIDIA has always been the leader in the GPU market, with a market share of 88% in the first quarter of 2024 for PC GPUs. Most AI factories currently use NVIDIA's H100 chip, which was released in 2022. This indicates that NVIDIA has laid the groundwork for dominating the generative AI market.

NVIDIA's rapid progress in advanced process technology has given it an advantage in securing TSMC's advanced process capacity. This advantage translates into bargaining power in the supply chain and rich IP accumulation.

The so-called ecosystem refers to the entire software stack above the chip, which supports AI frameworks, and AI applications are developed based on these frameworks. The software stack includes drivers, compilers, function libraries, algorithm libraries, toolkits, and more.

Jen-Hsun Huang has said on many occasions that NVIDIA is a software company. The obvious advantage of building an ecosystem is that once developers or even companies enter NVIDIA's ecosystem, they prefer to choose products within it, resulting in better hardware and software integration, increased brand recognition, and higher user stickiness.

"Chips are NVIDIA's moat. In terms of software, where it really makes money, NVIDIA may not be much stronger than Microsoft or Google, but once customers enter its ecosystem, it's difficult for them to leave." Therefore, over the years, NVIDIA has mainly earned money by providing software to weaker companies.

As early as 2006, NVIDIA planned to extend the advantages of its graphics processor hardware into other areas and build its own CUDA ecosystem.

Through the CUDA developer platform, NVIDIA integrates its hardware, software, algorithms, and libraries, greatly improving hardware and software integration and accelerating computation.

According to the chip industry insider, NVIDIA's lead in hardware technology, the advantages of the CUDA ecosystem, and its dominant hold on advanced process capacity together make it difficult for other companies to surpass NVIDIA now.

In addition, the reason why NVIDIA can stay ahead of many companies is not due to opportunity or coincidence but because it can more keenly observe the progress and changes in cutting-edge technology.

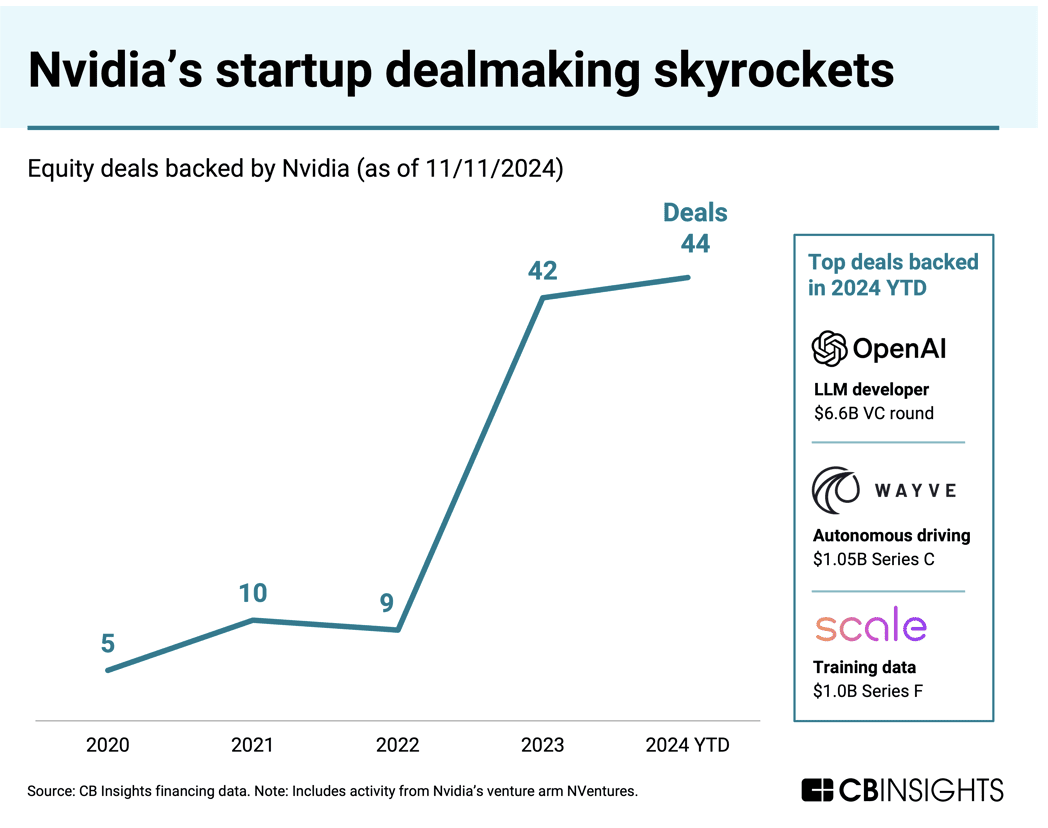

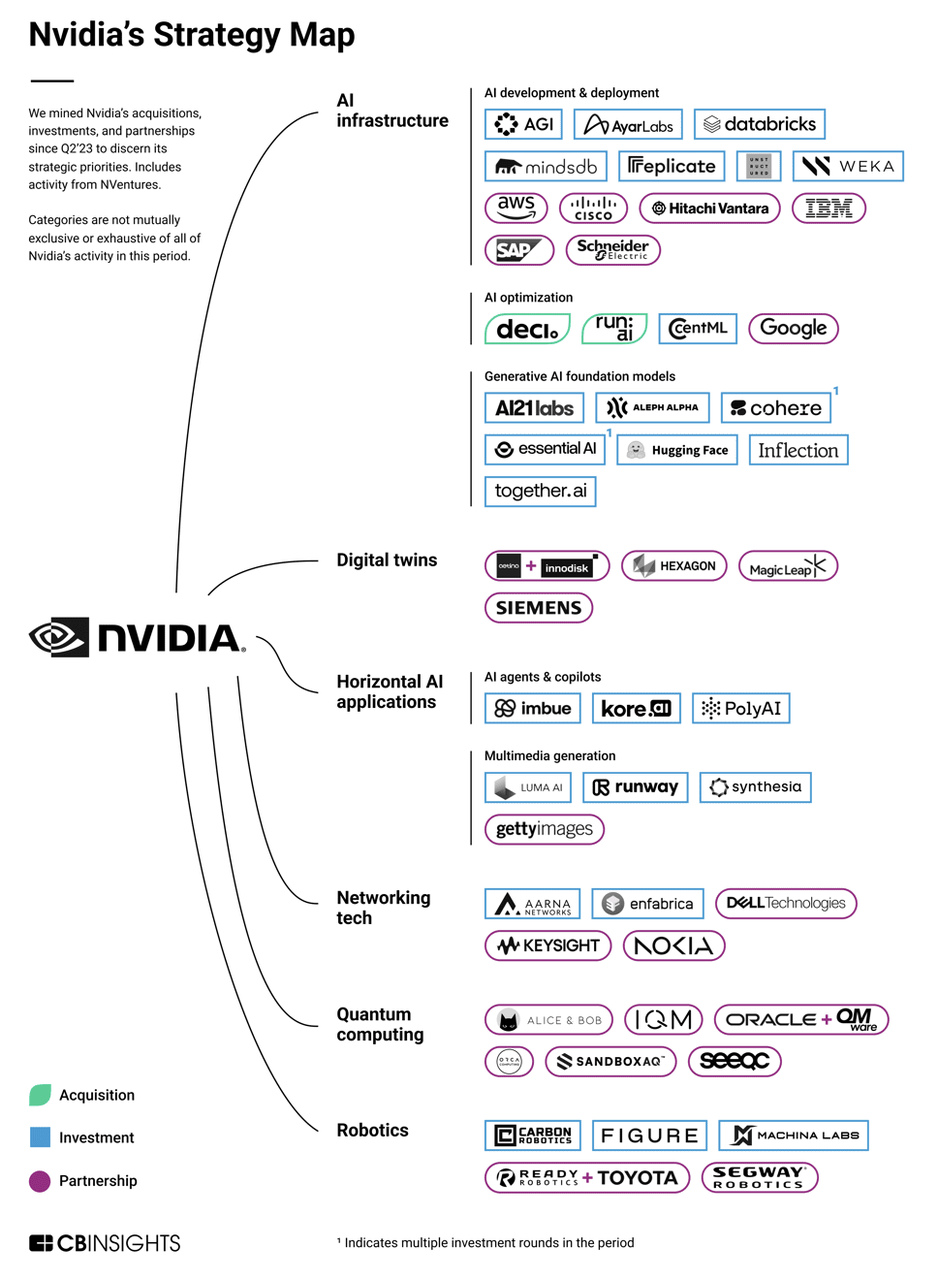

Since the beginning of this year, NVIDIA has acquired multiple startups. According to research firm CB Insights, NVIDIA has invested in at least 44 companies this year, a record high.

In recent years, NVIDIA has positioned itself across areas such as humanoid robots, digital twins, generative AI, and quantum computing to enhance its competitiveness.

It took Microsoft 49 years to become the world's most valuable company by market capitalization, and it took Apple 35 years. NVIDIA achieved this feat in just 30 years, faster and more aggressively than the first two.

From the perspective of chip practitioners, investors, and entrepreneurs, without a significant change in technological paradigms, such as a qualitative leap in quantum computing or optical computing that achieves commercialization, or the emergence of new algorithmic frameworks, NVIDIA's position is difficult to shake in the next 10 years.

For NVIDIA, its golden age will continue.
