AI glasses spark a "hundred-glasses war": has once-promising AR become obsolete?

11/26 2024

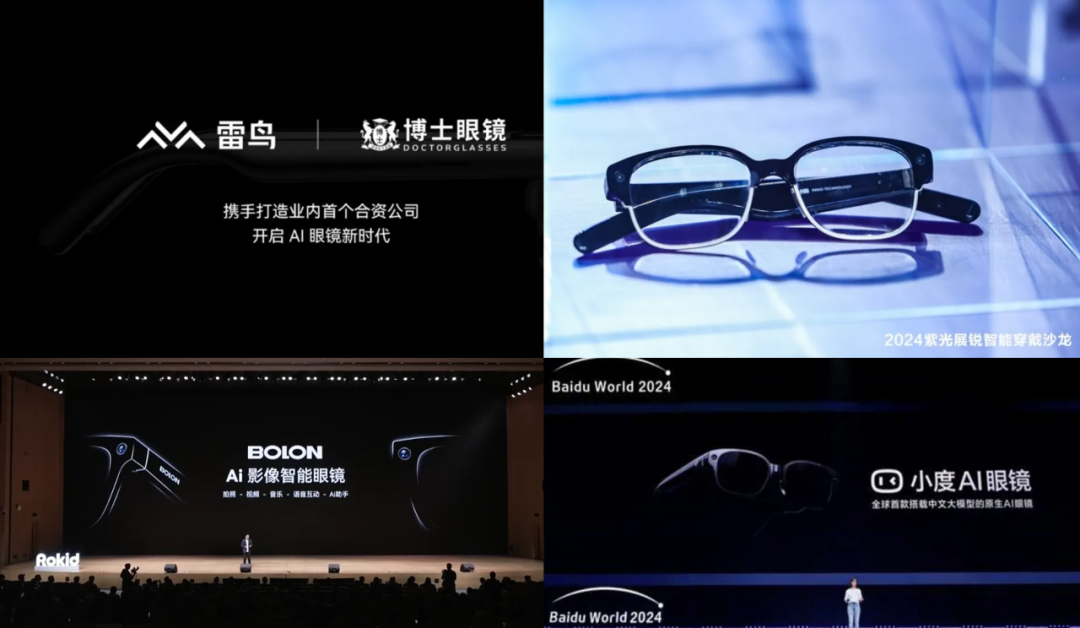

Recently, domestic manufacturers seem to have launched an AI glasses war. AI glasses are a new blue ocean in the smart wearable market, and because no clear leader has yet emerged, many companies are actively developing and promoting AI glasses products. Beyond traditional eyewear makers, both technology giants and startups have entered the fray, intensifying competition:

In August this year, Thunderbird Innovation announced a partnership with Dr.Glasses to jointly handle R&D, design, sales, marketing, and service for a new generation of AI glasses; in November, INMO Technology and Unisoc jointly released the INMO X Series AI glasses open platform; not long ago, Rokid launched Rokid Glasses, AR glasses that combine a waveguide display with AI; and Baidu, the domestic search engine giant, will launch its own Baidu AI glasses in the first half of 2025...

Looking more closely at the companies launching new products, we find that some manufacturers that originally focused on AR glasses have also begun making AI glasses, and some frequently promote "AR+AI" glasses.

It's important to note that although AR glasses and AI glasses both fall under the category of smart glasses, they differ significantly in technical principles, functional focus, hardware requirements, and application scenarios. The unprecedented boom in AI glasses also raises an obvious question: what exactly is the difference between AR glasses and AI glasses? This article examines it in detail.

Differences in Technical Principles

The core technology of AR glasses lies in augmented reality, which overlays virtual information onto the user's real-world view to enrich their perception.

AR glasses require advanced display technology to present virtual images. These images can be static (such as text and images) or dynamic (such as videos and animations). To achieve a seamless fusion of virtual information with the real world, AR glasses also rely on sensors and cameras to track the user's head and eye movements, enabling a more natural and fluid interactive experience.

As consumers, we naturally want AR glasses with holographic display, a clear and expansive field of view, and a lightweight design, which makes display technology an extremely important pillar of AR glasses. At current levels of technology, waveguide displays, which are thin, light, and highly transparent, are considered a must-have optical solution for consumer-grade AR glasses, but their high price and technical barriers make them daunting.

Sensor technology is also particularly important. Inertial sensors such as gyroscopes, accelerometers, and magnetometers track the user's head posture, while cameras capture eye position, hand gestures, and other information, enabling real-time tracking and interaction. With these sensors, AR smart glasses can deliver a more accurate and natural experience, letting users interact with virtual information through head movements and hand gestures to operate the device and retrieve information.
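To make the head-tracking idea more concrete, here is a minimal sketch of a complementary filter, a common textbook way to fuse gyroscope and accelerometer readings into a head-pitch estimate. It is not how Meta or any other vendor mentioned here is known to track head pose; the function name `estimate_pitch`, the sample layout, the axis sign convention, and the blend factor `alpha=0.98` are all illustrative assumptions.

```python
import math
from typing import Iterable, Tuple

# Hypothetical IMU sample layout (assumption): (gyro pitch rate in rad/s,
# accelerometer x, y, z in m/s^2), using one common axis sign convention.
Sample = Tuple[float, float, float, float]


def estimate_pitch(samples: Iterable[Sample], dt: float,
                   alpha: float = 0.98, pitch0: float = 0.0) -> float:
    """Track head pitch (radians) with a complementary filter.

    The gyroscope is integrated for fast, smooth response, while the
    gravity direction seen by the accelerometer slowly corrects the
    drift that pure integration would accumulate.
    """
    pitch = pitch0
    for gyro_rate, ax, ay, az in samples:
        # Short-term estimate: integrate the measured angular rate.
        gyro_pitch = pitch + gyro_rate * dt
        # Long-term reference: tilt implied by the gravity vector
        # (only trustworthy when the head is not accelerating much).
        accel_pitch = math.atan2(-ax, math.hypot(ay, az))
        # Blend: trust the gyro in the short term, gravity over time.
        pitch = alpha * gyro_pitch + (1.0 - alpha) * accel_pitch
    return pitch


# Usage: a head held still at a 10-degree tilt, sampled at 100 Hz for 5 s.
g = 9.81
tilt = math.radians(10)
still = [(0.0, -g * math.sin(tilt), 0.0, g * math.cos(tilt))] * 500
print(math.degrees(estimate_pitch(still, dt=0.01)))  # prints a value very close to 10.0
```

The design choice is the usual one: gyroscopes respond quickly but drift, accelerometers are drift-free but noisy during motion, so a weighted blend gets some of the benefit of each. Real headsets typically use more elaborate fusion (for example Kalman-style filters plus camera tracking).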

Meta's Orion AR glasses, shown at Meta Connect 2024 this year, can be considered the industry's most technically advanced product, although only as a demonstration. Equipped with Micro LED projectors and seven cameras, Orion offers a 70-degree field of view, wider than other AR glasses.

Orion enables a digital experience that is not confined to a smartphone screen: its large holographic display turns the physical world into a canvas on which users place 2D and 3D content through gestures. Its display is also among the best of current products; according to Meta, Orion offers the largest field of view in the smallest AR glasses to date, with digital content blended seamlessly into the wearer's view of the physical world.

AI glasses work on different principles. They rely primarily on built-in artificial intelligence, including machine learning and deep learning: sensors such as cameras and microphones collect information about the environment, and intelligent algorithms process and analyze it. Because they need little or no display, they face no major display-technology bottleneck.

Take Meta's other product, the Ray-Ban Meta smart glasses, currently the most popular product in the AI glasses field. Ray-Ban Meta has built-in speakers, microphones, and cameras and runs on generative AI, but it does not support AR overlays.

Ray-Ban Meta does not need high pixel density or full-color display because it has no display for augmented reality content at all; its camera system is used for capturing photos and video and for giving the AI a view of the surroundings, so it offers no immersive visual effect.

Given this design, AI glasses focus on multimedia capture rather than AR capabilities. This fundamental difference in purpose leads to significant differences in display quality and user experience between the two types of products.

Different Functional Focuses

AR glasses focus more on providing an immersive augmented reality experience. By overlaying virtual information, AR glasses allow users to see virtual objects that do not exist in the real world or preview and modify design works in a real-world environment. This technology is widely used in education, gaming, industrial design, military training, and other professional fields.

In this sense, AR glasses virtually augment the physical world to meet the needs of immersive applications.

Currently, AR glasses are widely used in industrial and medical settings; Vuzix's products are a representative example.

In fact, the AR functionality of Apple's Vision Pro can be seen as what AR glasses ideally aim to offer. We have seen many Vision Pro use cases in medicine and business, especially in medicine (see the VRAR Planet article "The Questioned Apple Vision Pro Has Quietly Transformed the Entire Medical Field"). Vision Pro's immersive assistance features have made it a "new favorite" in the medical community; if Apple's AR glasses can follow this path, there is much to look forward to.

Indeed, AR glasses currently have a wider range of applications on the B-end (business customers). Meta has also described some more lifestyle-oriented features in its vision for Orion: for example, "you can open the fridge and ask for recipes based on its contents; or adjust the digital family calendar while washing dishes and make video calls to friends; or even tell friends you're about to have dinner without taking out your phone." Meta hopes that future AR glasses can interact seamlessly with the physical world, so that every operation can be completed through the glasses alone.

Unfortunately, current consumer-grade AR glasses are far from having such capabilities. In China, most AR glasses on the market emphasize movie watching. And even if the technology could deliver what Meta describes, consumer-grade AR glasses still lack so-called "killer applications," which leaves their functionality quite limited.

AI glasses take the opposite approach, focusing on intelligent processing and human-computer interaction. Using artificial intelligence, they offer advanced functions such as speech recognition, image recognition, and gesture control. AI glasses can respond to voice commands to perform tasks such as sending messages, checking the weather, and playing music, and with image recognition they can identify objects, faces, and scenes to provide smarter services.

AI glasses are more of an aid in daily life.

For example, real-time translation and voice-guided navigation are the main functions promoted for AI glasses. For this reason, some AI glasses can be genuinely helpful to people with hearing or speech impairments.

With AI voice interaction, the assistive role of AI glasses becomes even more evident. Imagine cycling your daily route: you speak a command to start AI navigation, and real-time road conditions and directions are announced in your ears. There is no need to keep checking your phone, which saves time and makes the ride safer.

The Ray-Ban Meta glasses, which have been quite successful in the market, likewise center their main functions on translation, navigation, and AI-powered reminders. Meta reportedly also plans to add video recording and sharing as well as QR code and phone number scanning, which would bring even more everyday convenience.

From a functional perspective, AI glasses are easier to market, which is another reason, besides technology, why AI glasses are more popular at present.

Hardware Requirements Lead to Different Costs and Prices

AR glasses have relatively high hardware requirements. In addition to requiring powerful processors and sensors to support complex image processing and virtual information overlay functions, AR glasses also need additional display technology and corresponding optical components to create virtual images.

As mentioned earlier, waveguide display technology is currently considered a must-have optical solution for consumer-grade AR glasses. It is compatible with prescription lenses and offers low power consumption, long battery life, and high light transmittance, making it one of the most common display technologies in high-end AR glasses. Its drawbacks are equally obvious: high cost and technical difficulty make it a "chokepoint" technology.

In contrast, the hardware requirements for AI glasses are mainly concentrated on processors and sensors. To support complex AI algorithm computations, AI glasses need built-in powerful processors. At the same time, to collect environmental information, AI glasses also need to be equipped with sensors such as cameras and microphones. These sensors transmit the collected information to the processor for processing and analysis. Although AI glasses do not require the complex display technology and optical components of AR glasses, their requirements for processors and sensors are still high.

As a result, there is a clear cost gap between AR glasses and AI glasses, with AR glasses generally more expensive. Meta's Orion is reported to cost as much as $10,000 per unit for this "true AR glasses" product alone. Orion is still only a technology demonstrator, and any future consumer version would require substantial cost reductions, but the figure is still striking.

By contrast, according to market research firms such as Wellsenn XR, the total hardware cost of the Ray-Ban Meta glasses is approximately $164.

Why True AR Glasses Are Hard to Come By

Understanding the core differences between AR glasses and AI glasses makes it easier to see why true AR glasses have been so slow to emerge.

The first reason is technological. The complex manufacturing process of upstream optical module components leads to high costs, which is one of the key factors restricting the popularization of AR glasses. In addition, there is a correlation between various performance indicators of AR glasses; for example, increasing the field of view (FOV) may result in a smaller eye box, while a larger eye box requires more light to achieve natural brightness, requiring a more powerful light source or higher optical efficiency.

The eye box is the region in front of the glasses within which the wearer's pupil can move and still see the complete, sharp virtual image. If the eye box is too small, it is hard for the eye to stay in a position where the image is clear, and the image degrades as soon as the eye shifts.
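The field-of-view and eye-box tradeoff described above can be illustrated with an idealized single-lens (simple magnifier) model, assumed here purely for illustration; real waveguide optics are far more complex. Let the microdisplay have half-width h, let each pixel emit light into a cone of half-angle α, and let the collimating optic have focal length f, with the eye placed at its back focal plane. Then the half field of view θ_FOV and the eye-box half-width e satisfy:

$$
\tan\theta_{\mathrm{FOV}} = \frac{h}{f}, \qquad e \approx f\tan\alpha
\quad\Longrightarrow\quad e\,\tan\theta_{\mathrm{FOV}} \approx h\tan\alpha
$$

For a given microdisplay (fixed h and α), the right-hand side is fixed, so in this simplified model widening the field of view shrinks the eye box and vice versa. Waveguides work around this by replicating the exit pupil, but that spreads the same light over a larger area, which is consistent with the point that a larger eye box demands a brighter light source or higher optical efficiency.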

The technical difficulty and cost of display technologies such as waveguides need no further elaboration. In addition, hardware design and production problems that leave prototypes short of their expected performance are further stumbling blocks in AR glasses development.

Another important issue is that it is currently difficult for AR glasses to meet both performance and lightweight requirements.

Starmee's MYVU smart glasses, at 43 g, are among the lighter models in China.

The display system of AR glasses needs to integrate microdisplays, optical components, drive circuits, and other components. The integration level and power consumption of these components directly affect the weight and performance of AR glasses.

Even if technology that satisfies both performance and weight requirements becomes available, there is still the hurdle of mass production; and even once mass production is achieved, the lack of killer applications will keep the product from winning over ordinary consumers (as with Vision Pro), making it difficult to recoup the initial investment...

From production to sales, each link makes the popularization of AR glasses even more difficult.

Then came the AI boom. The success of Ray-Ban Meta AI glasses is plain to see, and it has given new hope to manufacturers that have long struggled to develop AR glasses. For today's wearable device market, AI glasses may be a good "transition."

AI Glasses Are Not a Technological Compromise, but a "Detour to Save the Nation"

AI glasses are technically easier to implement and suit a wider range of scenarios. They are also more affordable and practical, which makes them easier for consumers to accept. Combined with the current popularity of AI, this has made AI glasses a hot commodity in tech circles.

The shift from AR to AI glasses marks a paradigm shift that stems from a reassessment of market demand and of how wearable technology should develop. The industry's focus is no longer on doubling down on display technologies for high-fidelity visuals, but on delivering intelligent, timely, contextual information through simpler interfaces and a connection to the phone, which AI glasses can already do well.

But does this mean everyone has given up on AR glasses? Not exactly. Although some technical requirements have been lowered, there are still manufacturers exploring the path of AR+AI, aiming to achieve AI perception while meeting the visual perception needs of AR as much as possible.

This is more like a "detour to save the nation" in another sense. AI is not a replacement for AR. In practical applications, AI's rapid processing of information can help identify and process objects and scenes in the real world, thereby improving the accuracy and practicality of AR applications. At the same time, AR can provide a more intuitive and natural interactive interface for AI, making it easier for users to interact with AI—this is what manufacturers are aiming to achieve with AR+AI glasses. Although there is still some gap compared to true AR glasses, they are sufficient for the current consumer market.

The path to maturity for AR glasses will be a gradual process, not achieved overnight. For now, AI glasses without displays are the most market-ready smart glasses products, while AR glasses can be seen as the ultimate form of smart glasses.

Looking ahead, it is clear that in the short term, the AR glasses we anticipate will give way to AI glasses. However, this does not mean the development of AR glasses will stagnate.

To quote a line from a Campaign Asia article: "If artificial intelligence is a wildfire sweeping through the entire culture, then augmented reality is more like a glacier: although slow, it can reshape the landscape over time."

Written by Qingyueling

(Images not marked with sources in the text are sourced from the internet)