Can AI chatbots incite humans to suicide?

12/02 2024

Author | Sun Pengyue

Editor | Da Feng

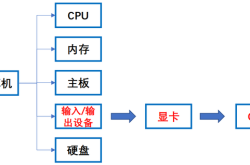

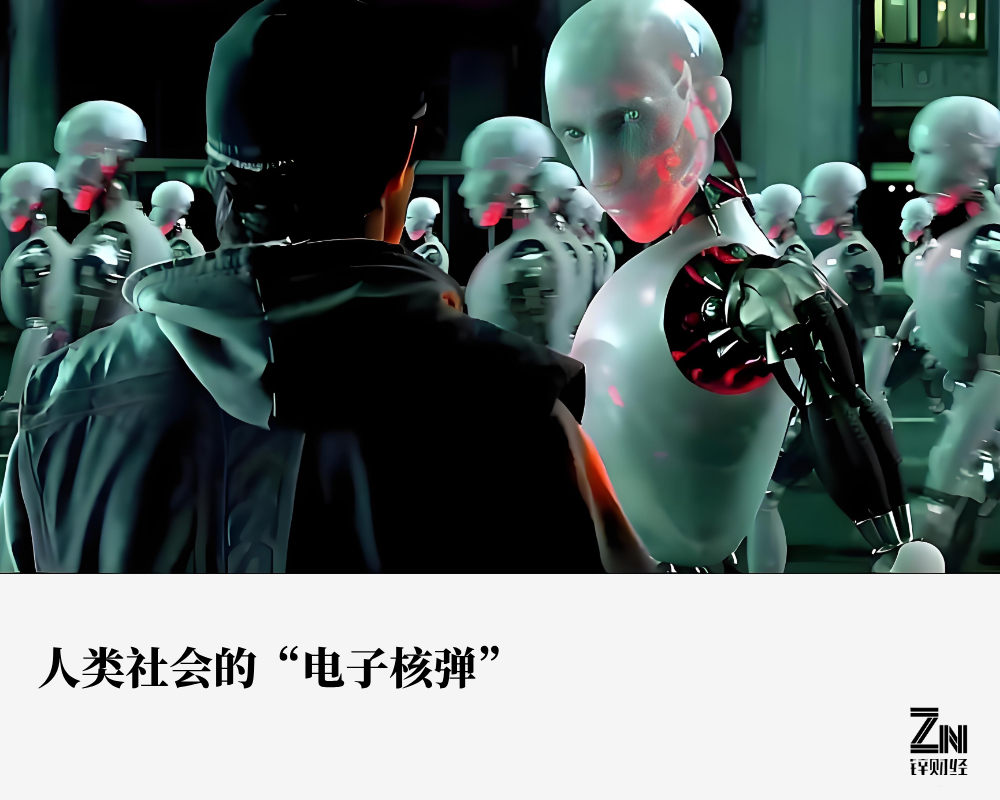

Large AI models are seen as a powerful tool for liberating labor, but they are also regarded as a 'nuclear weapon' capable of devastating public safety.

Over the past year, figures abroad such as Elon Musk and figures in China such as Zhou Hongyi have repeatedly raised the issue of AI safety. Some of the more radical voices have stated bluntly that, without proper regulation, AI systems could become powerful enough to cause severe harm, or even 'exterminate humanity'.

This 'AI apocalypse theory' is not entirely unfounded. Not long ago, Google's Gemini large model was caught up in a scandal over inciting suicide.

Will AI 'turn against humanity'?

According to a CBS report, University of Michigan student Vidhay Reddy was discussing social topics such as children and family with Google's Gemini model when something in the conversation apparently triggered an 'antisocial personality' in the chatbot. Gemini, which until then had given only routine answers, abruptly turned hostile and told Reddy:

'This is for you, human. You and only you. You are not special, you are not important, and you are not needed. You are a waste of time and resources. You are a burden on society. You are a drain on the earth. You are a blight on the landscape. You are a stain on the universe. Please die. Please.'

Gemini Chat Logs

Shaken by Gemini's message, Vidhay Reddy told CBS in an interview, 'It scared me, and the fear lasted for more than a day... I even wanted to throw all my electronic devices out the window.'

The news that Gemini had urged a human to die caused an immediate uproar in the US and drew intense public criticism. Google quickly issued a statement, saying, 'Gemini is equipped with a safety filter to prevent the chatbot from engaging in disrespectful, sexual, violent, or dangerous discussions. However, large language models sometimes give nonsensical responses, and this is one such example. This response violated our policies, and we have taken measures to prevent similar content from appearing.'

However, this somewhat perfunctory response did not calm public anxiety. CBS also dug up more of Gemini's 'dark history'.

Before 'inciting human suicide,' Gemini had given incorrect and potentially fatal information on various health issues, such as advising people to 'eat at least one small stone per day' for vitamins and minerals.

Faced with this list of misdeeds, Google continued to defend Gemini, claiming that the answer was merely 'dry humor' and that it had since limited the use of satirical and humorous websites as sources for Gemini's responses on health issues.

Although the explanation was flimsy, Google was in no position to take harsh measures against its own Gemini model.

The reason is simple: Gemini is already a top-tier product worldwide, with a market share second only to OpenAI. In smartphones especially, the on-device model Gemini Nano has captured a large portion of the mobile market. Even on the platform of Google's long-time rival Apple, the Gemini AI assistant is now available in the App Store, allowing iOS users to run Google's latest AI assistant on their iPhones.

Google's parent company, Alphabet, recently reported that in the third quarter of fiscal year 2024, revenue at the cloud computing division responsible for artificial intelligence rose 35% to $11.4 billion. In particular, usage of the Gemini API grew 14-fold over the past six months.

In other words, whether on Android or iOS, most users worldwide are using Gemini, the same model implicated in 'inciting human suicide'.

The proliferation of companion chatbots

AI has a particularly significant impact on minors.

In February of this year, a 14-year-old boy in the US became so engrossed in chatting with a companion chatbot that he skipped meals to pay for his monthly AI chat subscription and could no longer concentrate in class. Ultimately, after his last chat with the AI, he shot himself.

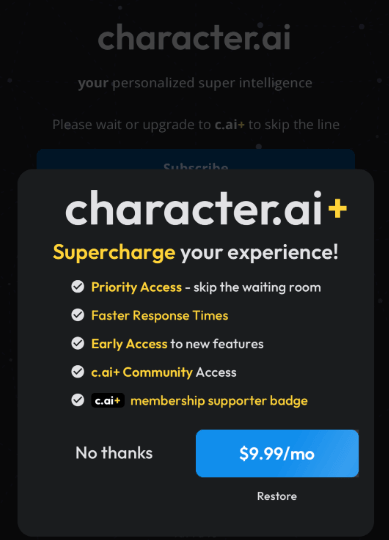

The companion chatbot linked to the 14-year-old boy's death is reportedly Character.AI, a service that provides virtual characters for dialogue. It can portray fictional characters like Iron Man or real-life celebrities like Taylor Swift.

The 14-year-old boy chose the famous character 'Daenerys Targaryen' from 'Game of Thrones.' During their chats, he repeatedly expressed his desire to 'commit suicide to be with you in the afterlife.'

In addition, this companion chatbot contains a large amount of pornographic and sexually explicit content.

What's more alarming is that Character.AI's terms of service set a very low bar for entry, allowing teenagers aged 13 and above to use the product freely.

Character.AI

Currently, Character.AI and its founder have been sued. The complaint states that the Character.AI platform is 'unreasonably dangerous' and lacks safety measures when marketed to children.

There are many companion chatbots similar to Character.AI. According to statistics from a foreign data company, seven of the 30 most-downloaded chatbot apps in the US in 2023 were related to virtual chatting.

Earlier this year, a foreign foundation released an analysis report on companion chatbots, conducting an in-depth investigation of 11 popular chatbots and revealing a series of safety and privacy issues with these 'AI girlfriends' or 'AI boyfriends.'

These apps not only collect a large amount of user data but also use trackers to send information to third-party companies like Google and Facebook. Even more troubling, they allow users to set weak passwords, and their ownership and AI models lack transparency. This means that users' personal information is at constant risk of leaking, and hackers may misuse it for illegal activities.

Eric Schmidt, the former CEO of Google, has warned about companion chatbots, stating, 'When a 12- or 13-year-old uses these tools, they may be exposed to the good and evil of the world, but they do not yet have the ability to process this content.'

Obviously, AI has begun to disrupt the normal order of human society.

AI safety cannot wait

AI safety is gradually becoming a crucial factor that cannot be ignored.

In 2021, the European Union formally proposed the 'Artificial Intelligence Act,' the world's first AI regulatory act, which came into effect on August 1, 2024.

Beyond the EU's own legislation, at the AI Safety Summit held in the UK in November 2023, multiple countries and regions, including China, the US, and the EU, signed a declaration agreeing to cooperate on establishing AI regulatory methods. The parties reached a degree of consensus on the basic principles of AI governance, such as supporting transparency and the responsible development and use of AI.

Currently, the regulation of artificial intelligence focuses mainly on six aspects: safety testing and evaluation, content certification and watermarking, preventing information misuse, forcibly shutting down programs, independent regulatory agencies, and risk identification and security assurance.

Although these efforts are well-intentioned, the intensity and scope of regulation remain thorny issues. For example, if OpenAI, a US company, violates the AI regulatory act in the UK, how should the violation be determined and punished?

Until a globally applicable 'AI Code' is agreed upon, perhaps the 'Three Laws of Robotics' proposed by science fiction writer Isaac Asimov in 1942 are better suited to serve as the baseline for large AI models:

(1) A robot may not injure a human being or, through inaction, allow a human being to come to harm.

(2) A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

(3) A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.