Algorithms and Users: The Landlord and the Serf

12/05 2024

Author|Sun Pengyue

Editor|Da Feng

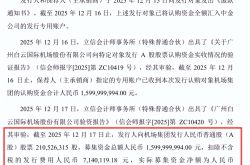

Recently, the Cyberspace Administration of China and three other departments jointly issued the "Notice on Carrying Out the 'Clear and Bright' Special Action on Governing Typical Problems of Online Platform Algorithms." One of its core tasks is to build a mechanism that prevents "information cocoons" and to increase the diversity and richness of pushed content. The "information cocoon" is a concept proposed by Harvard professor Cass R. Sunstein in his book "Infotopia." He describes the cocoon as a warm and friendly place, because everything in it is what one likes to see and hear, and every discussion of viewpoints and topics is merely an echo of one's own voice. In other words, the platform algorithm uses a user's clicks, browsing, and search history to allocate certain information to that user while reducing or ignoring their exposure to everything else. The result is a closed informational "black box": what you see is what the algorithm wants you to see, and your thoughts are subtly shaped by the algorithm as well. So, inside this "black box," are you afraid?

Algorithms are constantly evolving

In the era of the Internet information explosion, knowledge has become an easily accessible stream of information. The challenge for users is no longer "where to find knowledge" but "how to judge whether knowledge is authentic," and this shift also gives algorithms room to maneuver. The "echo chamber effect" created by the information cocoon means that even if you have niche hobbies or absurd ideas, the algorithm can match you with like-minded people. The images and short videos you like are all your own "echoes." Everyone sees what they like to see, and everyone can keep their "freedom" and "happiness" inside a small circle, even though this "freedom" and "happiness" are merely the fence within which the algorithm confines you.

Even more frightening is that algorithms evolve. In Algorithm 1.0, users actively select and collect information, and the algorithm uses collaborative filtering to mine their behavior history, discover their preferences, and predict and recommend products they may like. This is the familiar "guess what you like" feature on shopping platforms. In Algorithm 2.0, backed by big data, users no longer need to select or collect anything themselves; the machine pushes content directly based on their preferences. Regardless of your race, class, group, or viewpoint, the algorithm shows you only what you want to see. Everyone comes to believe that their own views are the most correct in the world, and the algorithm fully satisfies and soothes their spiritual needs. Of course, Algorithm 2.0 will never admit to creating an "information cocoon." It will only claim that this is the result of "customized reach/vertical recommendation/precise delivery," a legal and legitimate business tool.

Source: Internet
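To make the "Algorithm 1.0" idea above more concrete, here is a minimal sketch of user-based collaborative filtering in Python. It is an illustration under stated assumptions, not how Douyin, Kuaishou, or any shopping platform actually works. The click data, the user and topic names, and the helper functions `cosine` and `recommend` are all hypothetical. Cosine similarity is just one common way to measure how alike two users' histories are.

```python
from math import sqrt

# Hypothetical click history: user -> {item: 1 if clicked}
history = {
    "alice": {"pets": 1, "travel": 1, "gadgets": 1},
    "bob": {"pets": 1, "travel": 1, "cooking": 1},
    "carol": {"gadgets": 1, "fitness": 1},
}


def cosine(a, b):
    """Cosine similarity between two sparse click vectors (dicts)."""
    shared = set(a) & set(b)
    dot = sum(a[i] * b[i] for i in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(user, history, top_n=2):
    """Score items the user has not seen by similarity-weighted clicks of other users."""
    seen = history[user]
    scores = {}
    for other, items in history.items():
        if other == user:
            continue
        sim = cosine(seen, items)
        if sim <= 0:
            # Users with no overlapping clicks contribute nothing.
            continue
        for item, weight in items.items():
            if item not in seen:
                scores[item] = scores.get(item, 0.0) + sim * weight
    # Highest score first; ties broken alphabetically for a stable order.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:top_n]]


print(recommend("alice", history))
```

In this toy data, `recommend("alice", history)` returns `['cooking', 'fitness']`. Every suggestion comes from users whose past clicks overlap with Alice's, and a user with no overlap is ignored entirely. This is why such a system tends to keep surfacing content that resembles what a user has already clicked.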

Interest-based recommendations on Douyin/Kuaishou may seem neutral, but they affect different groups in very different ways.

For most users with "diverse interests," the pushed content may include pets, travel, and digital products, as well as "women in black stockings" and "men with six-pack abs." At least in the current platform environment, the "black stockings and six-pack abs" content makes up a much larger share than the pets, travel, and digital products.

For users whose daily lives are relatively monotonous and repetitive, who have weak self-control, and who rely on a single channel for information, such algorithmic recommendations can have extremely negative effects.

This type of user is widespread, including blue-collar workers, middle-aged and elderly people, and primary and secondary school students.

Steered by the algorithm, they can easily become immersed in a "women in black stockings and men with six-pack abs" cocoon and may even receive "inappropriate" content.

For example, the silver-haired generation becomes obsessed with health-preservation content and short dramas, believing that rich men really do fall in love with cleaning ladies;

After a day of physical labor, blue-collar workers lie in their rented rooms, immersed in borderline livestreams, spending their entire wages to become the top donor;

Some primary and secondary school students are even exposed too early to content that is not appropriate for their age.

If these large populations are fed by algorithms that care only about interest and not about right or wrong, a whole series of social problems will follow.

Red pill or blue pill?

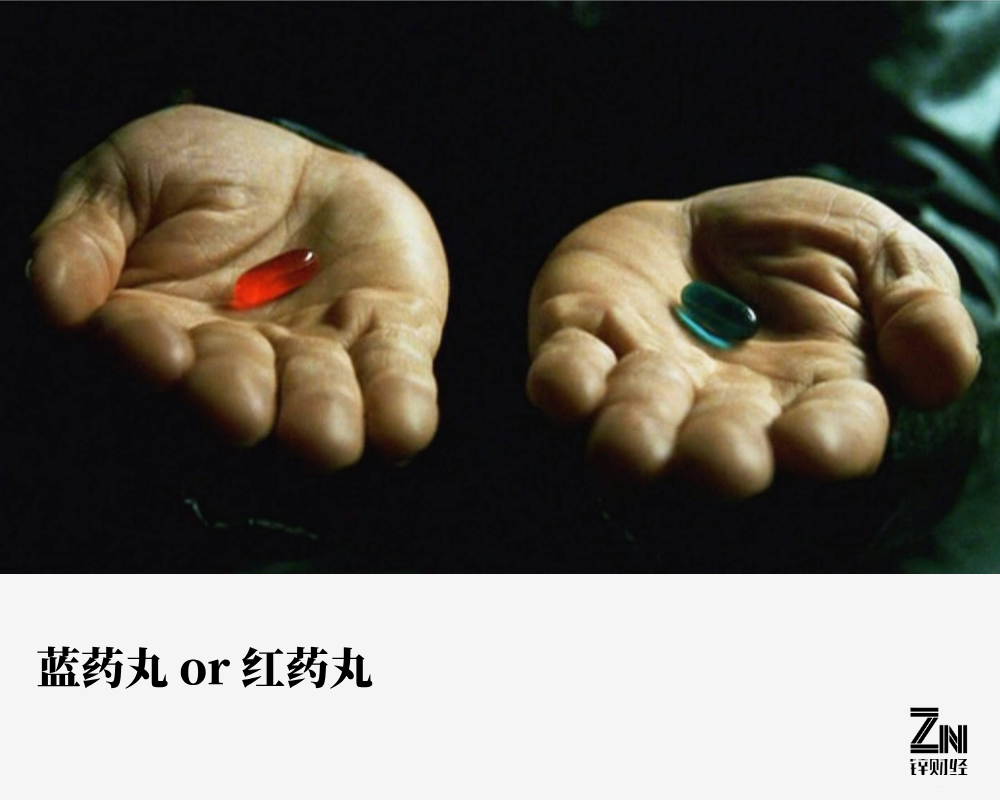

If you could live only in the world you want, many people would say it doesn't matter: isn't it just "comforting entertainment"? I just want to relax and escape brutal reality. But what if the algorithm affects your cognition? Cognition essentially consists of the amount of information you take in plus your ability to process it. The more information you take in, the stronger the knowledge system supporting your cognition becomes. But what if the information you take in is full of "misleading" content? For example, open Douyin, Kuaishou, or another short-video app right now and scroll through 10 videos. If 9 of the 10 are related to the LGBT movement, will you conclude that LGBT identities are already the mainstream in society, and that heterosexual people are actually the sexual minority? If you absorb this information and form a belief from it, congratulations: you have entered the "second half" of the algorithm, where rejecting that belief would mean overturning your entire understanding of the world.

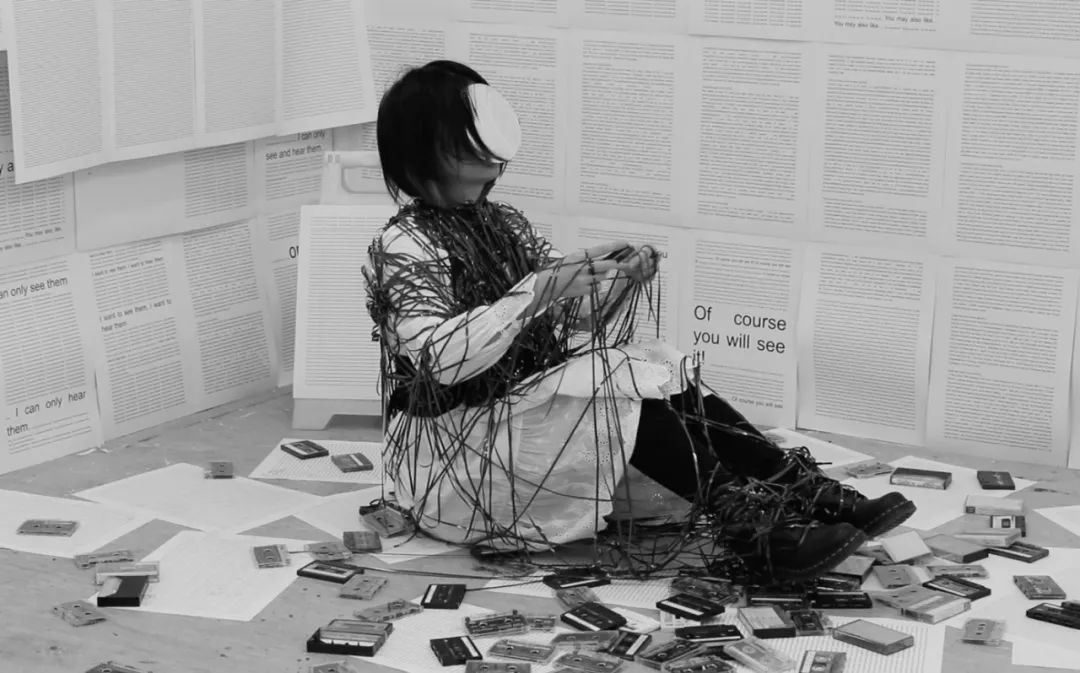

Source: Experimental short film from University of the Arts London (UAL)

There is an ironic joke that perfectly describes today's algorithms.

"When I was in elementary school, everyone loved watching 'Journey to the West.' Everyone said that Tang Sanzang's cassock was black. One classmate strongly disagreed and insisted it was red. The other classmates got angry and beat him up. Later, crying, he took us to his house, and we were shocked to discover that color televisions actually existed."

Yes, when you believe that the "black cassock" is the truth, the "red cassock" becomes heretical, deviant, and something to be burned at the stake. Different voices meet exclusion and even hostility. If you want the "black cassock" believers to learn the truth, you have to shatter their cognition and crack their minds wide open before they can truly see what is real. As in "The Matrix," faced with a comfortable algorithmic world on one side and a cruel, indifferent real world on the other, will you choose the blue pill or the red pill? I believe most people will choose the algorithmic world that caters to their every wish, even if it controls all the information they receive.

Landlord or serf?

The French economist Cédric Durand wrote a book called "Technological Feudalism," in which he regards the enterprises behind the algorithms as "landlords" and users as "serfs." Durand argues that algorithms and digital technology turn users into digital serfs. By analyzing user data, algorithms predict user behavior and convert it into profit. Under the rule of algorithms, users lose autonomy and control and act according to the algorithm's logic. This algorithmic rule resembles the way peasants were bound to the land in feudal society: users are bound to the "territory" of a digital platform and cannot escape the algorithm's control.

The most typical case of "landlord and serf" is food delivery riders and ride-hailing drivers. They feel that their work is free: no boss supervises them, they can work whenever they want, and everything depends on themselves, as if they had gained some autonomy. In reality, their master has merely changed from a "corporate boss" into a "corporate tool." They have no choice but to follow the orders, routes, and prices set by the platform algorithm. Social tension is also cleverly deflected into conflict between "passengers and drivers" or between "customers and delivery riders," as if the orders, routes, and prices were not generated autonomously by the algorithm. Everything comes back to the core theme of Durand's "Technological Feudalism": the data we generate through social interaction constantly becomes "nourishment" for algorithmic optimization and decision-making, while our social interactions are in turn "manipulated" by the algorithm's results.

As for how to break free from the confinement of algorithms, the only way is to give users the right to choose, letting them actively select content instead of passively accepting so-called "customized reach/vertical recommendation/precise delivery" and other commercial practices. And so I ask once more: "Do you choose the blue pill or the red pill?"