2024: Large Models Enter the "Finals"

12/18 2024

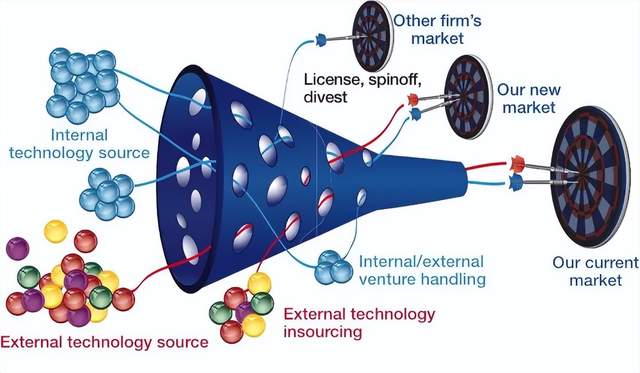

In his book "Open Innovation: The New Imperative for Creating and Profiting from Technology," Henry Chesbrough introduced the "funnel model" of innovation. Open innovation initially encourages a wide range of approaches, but ultimately only 10% of technologies make it through the funnel, reach the target market, and progress to the next stage of commercialization and industrialization, while the remaining 90% gradually fade away.

For large models, 2024 was a brutal test of this funnel logic.

At the start of 2023, the industry buzzed with the question, "Can China nurture top-tier large models?" Over the following year, the number of domestic large models skyrocketed, with more than 100 registered and launched as online services.

By early 2024, the focus had shifted to, "With so many large models, how do we digest and utilize them?"

After the hundred-model war, basic large models were "reduced to one out of ten." Only about 10% of large models, boasting market vitality and high user activity, emerged victorious, advancing to the finals. The commercial market for large models has converged into two main forces:

The first is the tech giants, represented by internet and cloud computing companies, with products such as Baidu's ERNIE Bot, Alibaba's Tongyi, Tencent's Hunyuan, ByteDance's Doubao, and Huawei's Pangu. The second is the leading AI startups, exemplified by the "AI Six Tigers," with products including Zhipu Qingyan from Zhipu AI and Yi from 01.AI.

In essence, 2024 saw large models pass through a complete "funnel model." However, the asset-heavy nature of the large model industry makes the competition far more brutal than it is for general technologies. We predict that 99% of large models will eventually lose their place in the industry, so this elimination round is far from over. The innovation funnel for basic models will narrow further, ultimately leaving only three or four products as AI infrastructure.

It is essential to reflect on the large model elimination round in 2024 and identify the remaining contenders.

In 2024, both the domestic and international large model landscapes exhibited a pronounced Matthew Effect. Overseas, giants like OpenAI, Google, and Microsoft remained unchallenged, while numerous large model startups, such as Stability AI, Adept, Humane, and Reka AI, lined up to seek acquirers.

The domestic scenario mirrored this trend. Tech giants represented by internet and cloud vendors (Baidu, Alibaba, Tencent, Huawei, JD.com, ByteDance), along with AI startups boasting outstanding financing capabilities (the "AI Six Tigers"), emerged as dynamic competitors in the large model commercial market.

As the tide receded and enthusiasm for developing large models waned in academia and industry, a clearer business model for large models emerged in 2024. Specifically, to pass through the funnel successfully, a large model requires three driving forces:

1. Sustained Resource Investment: The AI large model industry is asset-intensive. In 2024, the Scaling Law remained valid, and as models continued to grow, the amount of high-quality data and computation required for training new models also increased. This is akin to climbing a mountain; every step forward is a challenge. Leading enterprises' advantages in funding, technology, and data became increasingly prominent, with ByteDance serving as a prime example.

ByteDance fully committed to large models only in 2024, yet the Doubao large model, launched in May, quickly gained industry recognition, with daily token usage surging from 120 billion in May to over 1.3 trillion in September. Leveraging its existing Volcano Engine cloud infrastructure and talent team, coupled with aggressive hiring and increased investment throughout the year, ByteDance established a competitive barrier within months.
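To put the token figures quoted above in perspective, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the two usage figures come from the article, while the four-month window (May to September) and the assumption of smooth compound growth are simplifications introduced here, and the function names are hypothetical.

```python
# Figures quoted in the article for Doubao's daily token usage.
MAY_TOKENS = 120e9    # about 120 billion tokens per day in May 2024
SEP_TOKENS = 1.3e12   # over 1.3 trillion tokens per day in September 2024
MONTHS = 4            # assumption: May -> September treated as four monthly steps


def growth_multiple(start: float, end: float) -> float:
    """Return how many times larger `end` is than `start`."""
    return end / start


def compound_monthly_rate(start: float, end: float, periods: int) -> float:
    """Return the constant per-period growth rate that turns `start` into `end`."""
    return (end / start) ** (1 / periods) - 1


multiple = growth_multiple(MAY_TOKENS, SEP_TOKENS)
monthly_rate = compound_monthly_rate(MAY_TOKENS, SEP_TOKENS, MONTHS)

print(f"Growth multiple: {multiple:.1f}x")
print(f"Implied compound monthly growth: {monthly_rate:.0%}")
```

Under these assumptions, usage grew roughly 10.8 times in four months, which corresponds to about 81% compound growth per month. Actual growth was almost certainly uneven from month to month.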

2. Rapid Iteration of Model Capabilities: ByteDance's rapid rise and overtaking also demonstrate that AI large models lack a secure moat. Model capabilities are constantly depreciating; with new, more advanced model versions, older models become obsolete. Open-source models render similar closed-source models obsolete. This necessitates model factories continuously developing more powerful new models and iterating on older ones.

For instance, ERNIE Bot benefits from Baidu's full-stack layout in chips, frameworks, models, and applications, as well as the joint optimization of the PaddlePaddle deep learning platform and ERNIE Bot. The iteration speed of ERNIE Bot has always been at the forefront of the industry. In 2024, Baidu successively launched ERNIE Bot 4.0 Tool Edition and ERNIE Bot 4.0 Turbo based on ERNIE Bot 4.0, further enhancing inference speed and effectiveness. Faster model iteration bolsters user and developer confidence, increasing stickiness and willingness to pay.

3. Viable Commercial Channels: Competition among model factories is not merely reflected in the development of basic models but also in subsequent commercial promotion.

In 2024, large models transitioned from a "price war" to a "free war." In May, ByteDance ushered domestic large model prices into the "cent era," followed by ERNIE Bot announcing that its two main models, ERNIE Speed and ERNIE Lite, would be completely free. As models enter the free era, model factories must explore alternative commercial channels to generate revenue and recoup their initial large model investments.

Tech giants typically have direct access to user data, application products, and channel resources, enabling their AI large models to reach end users and generate value. For example, the Baidu Wenku app has integrated a series of AI functions based on ERNIE Bot, such as intelligent PPT generation and drawing, leading to rapid growth in paid users, with tens of millions of monthly active AI users.

AI startups, by contrast, are expected to stand out in the commercial market with cutting-edge technologies and product solutions. Among the "Six Tigers," 01.AI explicitly stated that it would not abandon pre-trained models. Building on the standardized capabilities of the Yi series base models, 01.AI is now going deep into vertical, fine-grained business scenarios, launching digital human solutions like "Ruyi" and short-video marketing solutions like "Wanshi."

In summary, the large model industry in 2024 witnessed numerous models being pushed into the market, each facing a narrow "funnel" exit and undergoing a grueling elimination round. Internet and cloud computing giants, alongside a handful of AI unicorns, successfully navigated the funnel and reached the next stage.

The 2024 elimination round rationalized the industry structure, leaving only about 10% of large models in the finals.

Judging by the outcome, large models exhibit a "the rich get richer" Matthew Effect. So how did these formidable players emerge victorious? If 2023 was the pivotal battle for infrastructure, with model factories sparing no effort to build the computing clusters and high-end hardware needed to train large models, then 2024 was the crucial battle for the commercial market.

To compete for active users, the commercial market for large models in 2024 revolved around two themes:

Theme 1: Money-Burning Marketing.

Generative AI (AIGC) products based on large models can achieve commercial conversion by providing services directly to users, making them the most direct and fastest commercialization path for large models. In 2024, AIGC products exploded, with 309 generative AI products registered in China as of November 2024, according to the "Registered Information on Generative AI Services." With so many overlapping AIGC products, model factories had to run large-scale, high-frequency marketing and promotional campaigns to compete for active users and expand their user bases.

Companies like Moonshot AI and Zhipu AI reportedly invested heavily in marketing, with the average customer acquisition cost for the Kimi intelligent assistant reaching 30 yuan per user.

These money-burning AIGC products effectively increased brand awareness and grew their user bases, but how much commercial value they have actually unlocked remains uncertain.

Theme 2: Moving Towards Application.

Can large models generate revenue without buying traffic or losing money on promotions? Yes, by moving towards applications: reaching out to industries, users, and developers, and commercializing through value-based and project-based payments. In 2024, the "application of large models" was already a reality.

Firstly, intelligent agents make large models more useful. Applications of large models shifted from AI assistants to intelligent agents, such as Doubao, Kimi, and Wenxiaoyan, which can automatically decompose instructions and perform simple operations, offering better "autonomous driving" capabilities and significantly improving the technology's usability.

Secondly, toolchains make large models easier to use. Platforms like Baidu's ERNIE Bot intelligent agent platform, ByteDance's Coze (Kouzi), and Alibaba's Tongyi Qianwen provide intelligent agent technology and toolchains, enabling ordinary people to create their own intelligent agents quickly and cost-effectively. Among them, Baidu, which is betting on "AI application," has the most comprehensive layout in the intelligent agent ecosystem, launching development platforms like AppBuilder and AgentBuilder, along with hardware such as all-in-one local deployment devices, to support consumer and industry users in developing dedicated intelligent agents. ByteDance's Coze is also extremely user-friendly, allowing users to copy official high-quality templates, swiftly complete intelligent agent development with private data, and publish the result for use in ByteDance products.

"Buying traffic with money" and "trading usage for traffic" were the two intertwined themes in the 2024 large model commercialization battle. A model factory might employ a combination of these two approaches to ensure the user base and market vitality of large models, solidifying its leading position at this stage.

Consumer-level technology adheres to a basic rule: simplify complex technology to unlock breakthrough applications. We send emails without delving into the SMTP protocol, and we use mobile payments without understanding the underlying encryption technology. This "hidden code" simplification makes technology more user-friendly, fostering faster adoption and expansion.

From this, we can predict some potential changes in the "finals" for underlying models:

Fewer Models: The large models spearheaded by tech giants and AI startups will continue to shuffle, ultimately leaving only 3-4 basic models as infrastructure to support a diverse array of downstream applications. In this process, the sustainability of investment, iteration speed, and commercialization capabilities will continue to play crucial roles, with internet companies and cloud vendors having a higher chance of success.

Further Simplification of Use: Currently, there is still room to simplify the use of large model technology. For example, intelligent agent development has not yet achieved low-code or no-code status. Once personalized scenario-specific plugins, knowledge bases, data processing, etc., are involved, the complexity of the development process increases, deterring industry experts from developing more specialized intelligent agents, which limits the explosion of large models on the B-end. Therefore, in 2025, intelligent agent development and exclusive model training should become simpler and more user-friendly. AI development novices may want to stay tuned.

Expanding Ecosystem: Once everyone can engage in AI development, which involves training on and analyzing private, sensitive data and meeting diverse personalized functional requirements, basic model factories can no longer offer just a thin wrapper around an underlying model. Instead, they must support local training and deployment, the invocation and combination of multiple models, and more diverse distribution channels. These requirements mean that basic model factories must bring AI hardware, AI terminals, vertical model factories, channel partners, and others into their ecosystems to jointly meet users' customized needs. The size of this "circle of friends" will also be a critical point of competition for large models in 2025.

In 2024, the mid-field battle for foundational models came to a close, ushering in the finals. As the funnel for large models narrows to its tightest point, the funnel for AI applications is only beginning to open up. The year 2025, when "AI for all" becomes a reality, is drawing ever closer.