Introducing the Affordable Doubao Vision Understanding Model: A Sign of Intensifying Price Competition Among Large AI Models?

12/21 2024

ByteDance has once again driven down the cost of large AI models!

At the Volcano Engine Force Conference on December 18, ByteDance officially launched the Doubao Vision Understanding Model, offering enterprises cost-effective multimodal capabilities.

According to figures presented at the conference, the Doubao Vision Understanding Model costs just 0.003 yuan per 1,000 tokens, so a single yuan is enough to process 284 images at 720P resolution. That is 85% cheaper than the prevailing industry price. A cut this steep should make it considerably easier for AI technology to be widely adopted and developed.
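The two quoted figures, 0.003 yuan per 1,000 tokens and 284 images per yuan, together imply how many tokens a 720P image consumes, and the "85% cheaper" claim implies a reference industry price. Neither number was stated at the conference; the sketch below simply derives them, assuming the whole yuan is spent on image input tokens and that "85% cheaper" means the new price is 15% of the reference price. The constant and function names are illustrative, not part of any Volcano Engine API.

```python
# Figures quoted for the Doubao Vision Understanding Model.
PRICE_PER_1K_TOKENS = 0.003  # yuan per 1,000 tokens
IMAGES_PER_YUAN = 284        # 720P images processed for 1 yuan
DISCOUNT = 0.85              # "85% cheaper" than the industry price


def tokens_per_yuan(price_per_1k: float) -> float:
    """Number of tokens one yuan buys at a given price per 1,000 tokens."""
    return 1000 / price_per_1k


def implied_tokens_per_image(price_per_1k: float, images_per_yuan: int) -> float:
    """Average tokens per image implied by the two quoted figures.

    Assumes the entire yuan goes to image input tokens (no output tokens).
    """
    return tokens_per_yuan(price_per_1k) / images_per_yuan


def implied_industry_price(price_per_1k: float, discount: float) -> float:
    """Reference price that price_per_1k undercuts by the given fraction."""
    return price_per_1k / (1 - discount)


if __name__ == "__main__":
    print(f"tokens per yuan: {tokens_per_yuan(PRICE_PER_1K_TOKENS):,.0f}")
    print(f"tokens per 720P image: "
          f"{implied_tokens_per_image(PRICE_PER_1K_TOKENS, IMAGES_PER_YUAN):.0f}")
    print(f"implied industry price: "
          f"{implied_industry_price(PRICE_PER_1K_TOKENS, DISCOUNT):.3f} yuan per 1,000 tokens")
```

Run as a script, this prints about 333,333 tokens per yuan, roughly 1,174 tokens per 720P image, and an implied reference price of about 0.020 yuan per 1,000 tokens.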

On the same day, OpenAI also joined the price reduction trend.

The official o1 model's API incurs 60% lower thinking (reasoning) costs than the preview version, and GPT-4o's audio prices have been cut by 60%. GPT-4o mini saw the largest reduction: its audio price is now one-tenth of what it was.

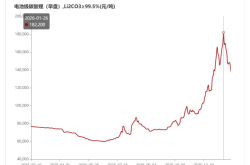

Various indicators suggest that the fierce competition in the large model industry has escalated into a price war. As vendors cut prices one after another, large model pricing is moving from the "fen" era, with prices counted in hundredths of a yuan per thousand tokens, to the "li" era, with prices counted in thousandths of a yuan per thousand tokens.

AI Large Models Embark on a "Price War"

Since mid-year, the "price war" among AI large models has intensified. As large models proliferate, competition among industry players is no longer about technology alone; price and concrete application scenarios have become decisive.

As early as mid-May this year, ByteDance unveiled the Doubao large model, kicking off the price war among major companies. Its main model was priced at 0.0008 yuan per thousand tokens, which ByteDance claimed was 99.3% cheaper than the industry average at the time.

In the same month, Alibaba Cloud announced sweeping price cuts for nine of its main Tongyi Qianwen (Qwen) models, including Qwen-Turbo, Qwen-Plus, and other models it positions at the GPT-4 level. The largest cut applied to Qwen-Long, whose API input price fell from 0.02 yuan to 0.0005 yuan per thousand tokens, a reduction of more than 97%.
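The percentages quoted for the May price cuts can be checked with two lines of arithmetic: a percentage reduction from an old price to a new one, and the reference price implied by a "X% cheaper" claim. The sketch below applies them to the Qwen-Long, Hunyuan-lite, and Doubao figures given in the article; the function names are hypothetical helpers, and the implied Doubao industry average is a derived estimate rather than a figure ByteDance published.

```python
def percent_reduction(old_price: float, new_price: float) -> float:
    """Percentage drop from old_price to new_price."""
    if old_price <= 0:
        raise ValueError("old_price must be positive")
    return (old_price - new_price) / old_price * 100


def implied_reference_price(new_price: float, percent_cheaper: float) -> float:
    """Price that new_price undercuts by percent_cheaper percent."""
    return new_price / (1 - percent_cheaper / 100)


if __name__ == "__main__":
    # Qwen-Long input price: 0.02 -> 0.0005 yuan per 1,000 tokens.
    print(f"Qwen-Long cut: {percent_reduction(0.02, 0.0005):.1f}%")
    # Hunyuan-lite: 0.008 yuan per 1,000 tokens -> free.
    print(f"Hunyuan-lite cut: {percent_reduction(0.008, 0.0):.1f}%")
    # Doubao main model: 0.0008 yuan per 1,000 tokens, "99.3% cheaper".
    print(f"Implied industry average: "
          f"{implied_reference_price(0.0008, 99.3):.3f} yuan per 1,000 tokens")
```

The Qwen-Long cut works out to 97.5%, which the article reports as 97%; Hunyuan-lite's move to free is a 100% cut; and ByteDance's 99.3% claim implies an industry average of roughly 0.114 yuan per thousand tokens at the time.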

Tencent followed with across-the-board price cuts for its large models. Its main model, Hunyuan-lite, went from 0.008 yuan per thousand tokens to completely free, and Tencent planned to raise the model's total API input and output length from 4k to 256k tokens.

In July, Baidu announced significant price reductions for its Wenxin flagship models ERNIE 4.0 and ERNIE 3.5, with ERNIE Speed and ERNIE Lite remaining free.

Wenxin's latest flagship large model, ERNIE 4.0 Turbo, is fully open to enterprise customers at a highly competitive price, with input and output prices as low as 0.03 yuan and 0.06 yuan per thousand tokens, respectively.
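Because ERNIE 4.0 Turbo bills input and output tokens at different rates, the cost of a call depends on how a request splits between prompt and reply. The sketch below computes that cost from the two quoted prices for a hypothetical request of 2,000 input tokens and 500 output tokens; the request size is invented for illustration, and any billing details not given in the article (rounding, minimum charges, volume discounts) are deliberately not modeled.

```python
# ERNIE 4.0 Turbo prices quoted in the article, in yuan per 1,000 tokens.
INPUT_PRICE_PER_1K = 0.03
OUTPUT_PRICE_PER_1K = 0.06


def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_1k: float = INPUT_PRICE_PER_1K,
                 output_price_per_1k: float = OUTPUT_PRICE_PER_1K) -> float:
    """Cost in yuan of one call, billing input and output tokens separately."""
    input_cost = input_tokens / 1000 * input_price_per_1k
    output_cost = output_tokens / 1000 * output_price_per_1k
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical request: a 2,000-token prompt and a 500-token reply.
    print(f"{request_cost(2000, 500):.3f} yuan")
```

For this example the prompt costs 0.06 yuan and the reply 0.03 yuan, for a total of about 0.09 yuan.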

In fact, the price war among large models is not limited to China but is also unfolding in overseas markets.

In May, OpenAI officially launched its new-generation flagship AI model, GPT-4o, at its spring event. The model not only significantly surpassed GPT-4 Turbo in capability but also came with a pricing surprise: according to OpenAI, GPT-4o's features are available to ChatGPT users free of charge.

Before this, free ChatGPT users could only use GPT-3.5. After the update, they can use GPT-4o at no cost for data analysis, image analysis, web searches, the GPT Store, and other features.

Notably, amid the flood of price-cut announcements, some large model players are in no hurry to follow suit.

Wang Xiaochuan, CEO of Baichuan Intelligence, believes that free pricing can be an advantage without necessarily amounting to real competitiveness. "Baichuan will not participate in the price war. To B is not the company's main business model, so a price war would have limited impact on us. The company will focus more on super apps."

Commenting on the price war, Kai-Fu Lee, Chairman of Sinovation Ventures and CEO of 01.AI, said that 01.AI currently has no plans to cut the API prices of its Yi series models. He believes the performance and cost-effectiveness 01.AI already offers are very high, and that a reckless price war would be a lose-lose situation.

Behind the "Price War", the Logic of Competition Has Changed

Large AI models are notoriously capital-intensive. Why, then, are prices falling while commercialization is still at an exploratory stage?

On the one hand, competition in the large model market is intensifying. By incomplete counts, there are more than 200 large models in China, and companies are using price as a key competitive lever, cutting prices to strengthen their position and grow market share.

According to research firm Canalys, as vertical large models emerge in clusters across many fields, leading domestic vendors must enter the market at low prices and win on volume to secure their position in the industry. This lowers the barrier to adopting large models and lets vendors rapidly build an ecosystem through a "land grab".

The price cuts have had a marked effect. At Alibaba Cloud, for example, the number of paying customers on the Bailian platform grew by more than 200% quarter over quarter after the cuts, as more enterprises chose to use AI large models through Bailian rather than deploy them privately.

On the other hand, the commercial application of large models requires a substantial user base and data support. A high volume of traffic helps enterprises further enhance their model service capabilities and amortize costs through economies of scale.

A research report from Hualong Securities states that as competition among domestic and foreign large model vendors intensifies, the signal for a price war has become very clear.

By lowering the price barrier, large model vendors aim to attract a broader range of enterprise users and better balance revenue against costs. At the same time, more consumer (C-end) users are expected to use basic AI applications for free, and the resulting traffic helps vendors improve their model services, completing a virtuous cycle.

In summary, the "price war" among major model vendors is only just beginning. Leading vendors will keep working to accelerate the deployment of large models and, as they compete, push the technology into a new stage of development.