Training GPT-5 for 18 Months Yet No Launch: Is the AI Large Model Bubble About to Burst?

![]() 12/24 2024

12/24 2024

![]() 556

556

From early to mid-December, OpenAI held a marathon press conference spanning 12 days, unveiling new products or technologies daily. These included the enhanced o1 large model, the text-to-video large model Sora Turbo, the streamlined inference model o3-mini, and advanced voice mode enhancements.

However, the 12-day event failed to generate much enthusiasm. Even the comprehensively upgraded large models and the text-to-video model Sora Turbo received limited discussion, accompanied by more criticism than praise.

(Image source: OpenAI)

The reason is straightforward: while these large models have indeed become more powerful, assisting users with a wider range of tasks, there has been no substantial improvement. The long-anticipated GPT-5 did not materialize, and the new product Sora Turbo can only generate 1080P videos up to 20 seconds long, falling short of the earlier 2024 promise of 2-minute videos.

After the release of GPT-4 in March 2023, OpenAI initiated the GPT-5 development project, codenamed "Orion." Microsoft, OpenAI's primary investor, originally anticipated GPT-5 by mid-2024, but 18 months have passed, and GPT-5 is still struggling to launch.

Faced with the delayed GPT-5 launch, The Wall Street Journal stated that OpenAI's AI projects are extremely costly, and it remains unclear when or even if they will succeed. Some have questioned whether the problem lies with OpenAI or if the AI industry has hit a bottleneck.

Costly yet ineffective, OpenAI is in deep trouble

In mid-2023, OpenAI launched its first live test project for Orion, codenamed "Arrakis." However, the test results revealed that training larger AI models required an extremely long time, causing overall costs to soar.

OpenAI staff believe Orion's slow progress is due to the lack of sufficient high-quality data. Earlier, OpenAI continuously scraped data from the internet, using news reports, social media posts, scientific papers, etc., to train large models, leading to a lawsuit from Canada's Torstar Corp. Group.

However, existing internet data is insufficient for training GPT-5, so OpenAI devised a solution: original data. OpenAI is recruiting personnel to write software code or solve math problems for Orion to learn from. Obviously, this solution will further extend Orion's training time and significantly increase costs.

(Image source: AI generated)

Feeling pressure from competitors in early 2024, OpenAI conducted several small-scale trainings for Orion and launched a second large-scale training from May to November, but the issues of insufficient data volume and lack of data diversity persisted.

OpenAI CEO Sam Altman stated that training GPT-4 cost approximately $100 million, and future AI model training costs could reach $1 billion. Currently, GPT-5's months-long training has already consumed $500 million without achieving the desired results.

OpenAI's troubles extend beyond data and costs to external competition. The AI industry's boom has surged the demand for talent. As an industry leader, OpenAI has naturally become a target for poaching by other companies. Nine of OpenAI's original 11 co-founders have left, along with senior executives like CTO Mira Murati, Chief Research Officer Bob McGrew, and Vice President of Research Barret Zoph in 2024.

Competition from rivals has also forced OpenAI to explore more sectors, such as creating a streamlined GPT-4 and the text-to-video large model Sora. Insiders say these new projects have forced OpenAI's internal new product development teams and Orion researchers to compete for limited resources.

Fortunately for OpenAI, it's not alone in facing data and funding issues. Ilya Sutskever, who has worked at Google and OpenAI, bluntly stated that data is AI's fossil fuel, and this fuel is running out. We only have one internet, and the era of maximizing data use has passed.

This is why The Wall Street Journal questions whether GPT-5 will ultimately succeed. But does the limitation of data volume really stifle the development of the AI industry?

Endowing AI Large Models with Thinking Abilities: Is this OpenAI's Pipe Dream?

Despite the Orion project's massive funding, OpenAI, backed by internet giants like Microsoft and Apple, is not short of funds but lacks data and computing power.

Faced with insufficient data, OpenAI researchers devised a shortcut: giving AI large models more time to think and solve untrained difficult problems. In other words, OpenAI aims to circumvent the issue of insufficient data by endowing large models with thinking abilities, allowing them to think like humans and solve unprecedented problems.

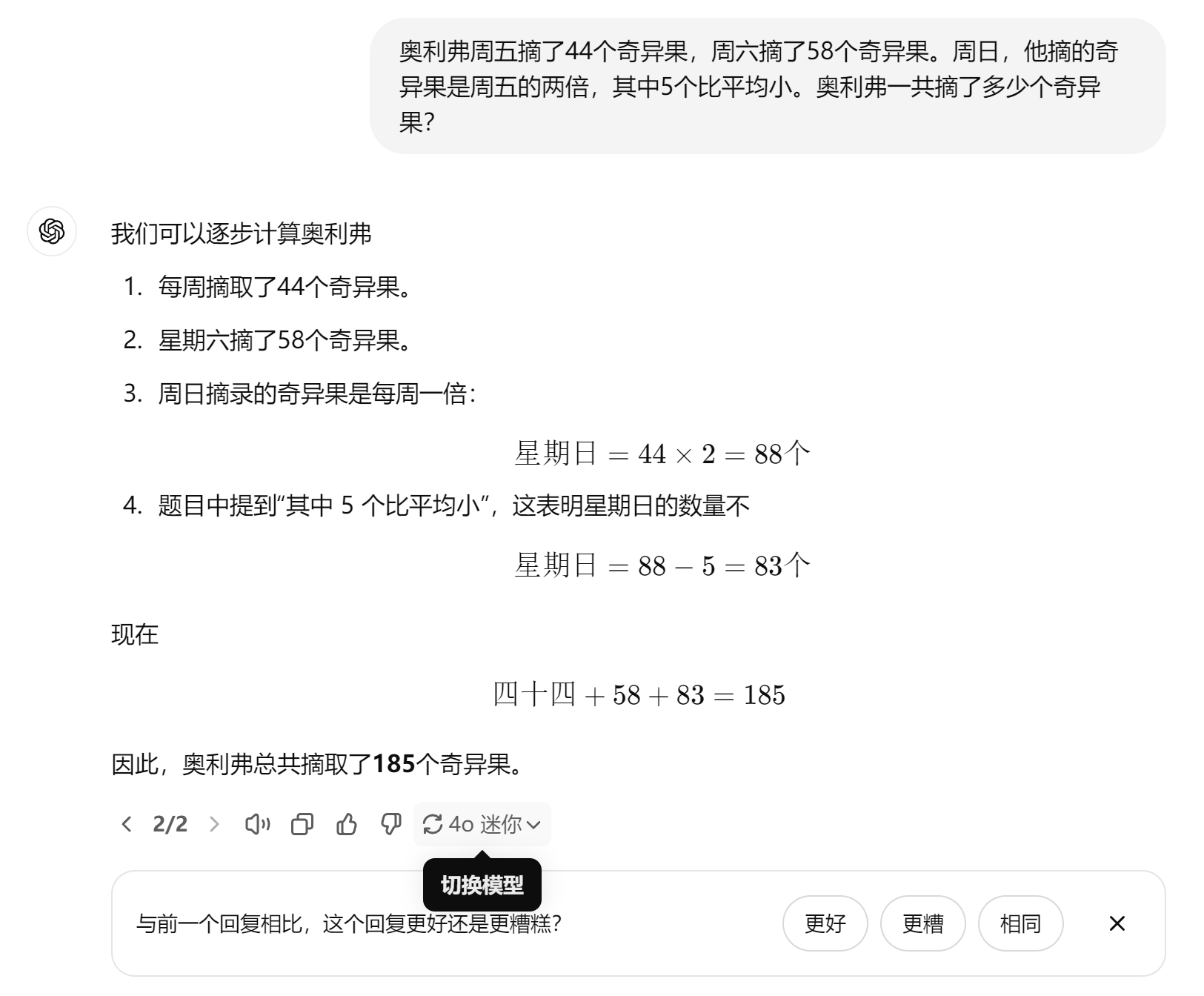

The question is, do AI large models truly possess thinking abilities? Apple researchers raised objections in their paper "Understanding the Limitations of Mathematical Reasoning in Large Language Models," stating that AI large models can only apply existing patterns and do not possess genuine reasoning abilities. Apple provided an example of a kiwi test case where GPT-4o mini failed to accurately count the number of kiwis when an irrelevant sentence was added.

In a previous article, I tested this case and found that while GPT-4o mini failed, multiple other large models like Doubao and Kimi passed the test. Additionally, when asking AI large models mathematical questions now, the answers usually come with problem-solving steps, indicating that large models are no longer merely applying trained patterns but solving problems based on logic.

This approach naturally reduces the data volume required to train AI large models and may even achieve solving problems by inputting mathematical formulas in the future. Of course, AI large models are not yet capable of this, and high-quality data remains indispensable.

Are high-quality data truly exhausted, as Ilya Sutskever claimed? I believe the answer is no. More accurately, easily collectible data has been used up.

There are three main sources of data for training AI large models: first, public data, such as open-source data from institutions or organizations, internet posts, and academic papers. While internet data has copyright issues, scrutiny is not strict, and it is convenient to scrape; second, proprietary data, which companies like Alibaba and Xiaomi can use to train large models using user data accumulated on their platforms; third, collaborative data, which AI companies exchange or purchase from other enterprises.

(Image source: AI generated)

The data that has been collected mainly refers to public and proprietary data, leaving ample room for collaborative data. For example, in the development of Chinese internet culture, the proportion of web pages is not as significant as imagined, and a large amount of data is concentrated in the hands of App developers. Collaborating with developers to exchange or purchase this data can also be used to train large models. Additionally, many enterprises have confidential data that AI companies can purchase to train large models.

This data is not publicly available and requires companies to incur costs to obtain, which may increase the cost of training large models for AI companies. Therefore, many AI companies are considering using AI-generated data or transformed existing data to train AI large models.

However, using AI-created data to train itself may lead to malfunctions or generate meaningless content. Therefore, another AI large model is needed to generate data to avoid this issue, which also requires substantial funding.

The development of AI large models has hit a bottleneck but is far from over. The cost for AI companies to obtain data has soared, and the demand for computing power has increased. The solution is simple: achieve profitability as soon as possible.

AI Large Models: Money-Eaters or Future Goldmines?

In recent years, global bubbles like the metaverse, blockchain, and predicting all diseases with a single drop of blood have successively burst, leading many netizens to suspect that AI is also a bubble and scam. From my experience, AI has become a valuable helper in improving our work efficiency. Multiple illustrations in this article were generated by AI, proving that AI is not a bubble, but funding issues have become a crucial factor hindering the development of AI technology.

Earlier this year, Sam Altman stated that $7 trillion was needed to reshape the global semiconductor industry and provide sufficient computing power to train AI large models. Almost everyone thought Altman's idea was unrealistic, and NVIDIA CEO Jen-Hsun Huang even said the total value of global data centers was only $1 trillion.

Now it seems that even $7 trillion may not push the AI industry to its peak, and AI companies still have to pay a high price for data. Without massive data, AI large models cannot undergo qualitative changes. Without these changes, their value may not be sufficient, potentially leading investors to withdraw support. As AI large models are on the verge of a bottleneck, turning losses into profits as soon as possible can rejuvenate the AI industry.

Currently, subscription prices for paid versions of global AI large models are quite expensive, especially for industry leader OpenAI's ChatGPT. The subscription price for ChatGPT Plus is as high as $20 per month, and the more powerful ChatGPT Pro reaches a staggering $200 per month.

(Image source: ChatGPT screenshot)

But can increasing subscription fees lead to profitability? Probably not. Willing individual users are always in the minority. Only by creating professional application scenarios and earning money from enterprises can profitability be achieved sooner. Moreover, professional scenario training requires less data and computing power, saving costs to some extent. The C-end market is typically less profitable, more troublesome, and harder to please, so related investments can be temporarily reduced to lower expenditure costs.

Once AI companies achieve profitability, investors will naturally be more confident in funding support, and companies will have more funds to purchase data and computing power chips, thereby training and enhancing AI large models.

Source: Leitech