Apple Eyes Humanoid Robots Amidst Industry Surge

01/13 2025

Author|Lingxiao

As Google, NVIDIA, and OpenAI spearhead the humanoid robot revolution, Apple has stealthily joined the fray.

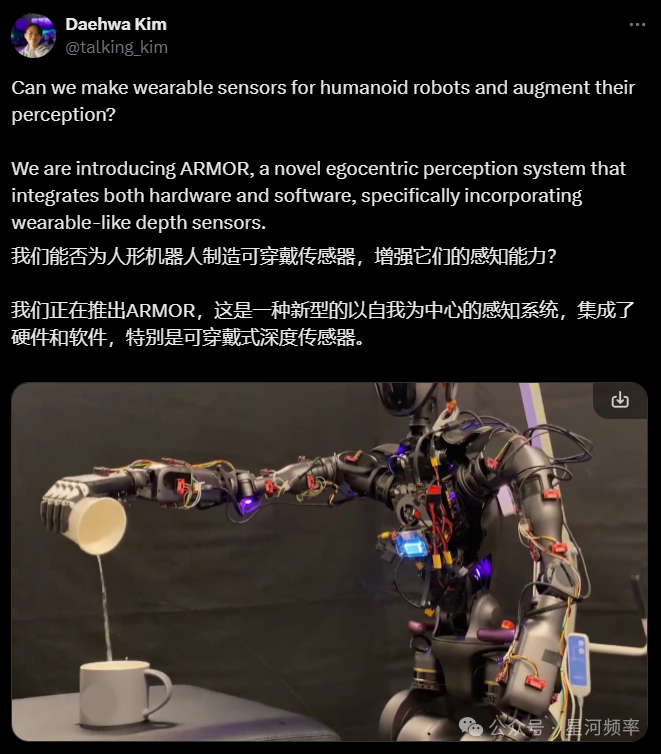

The news broke not from an official announcement but from Daehwa Kim, a former Apple intern and current Ph.D. student at Carnegie Mellon University.

Kim revealed that Apple and Carnegie Mellon University have collaboratively developed ARMOR, a robot perception system designed to mitigate arm blind spots, enhance spatial awareness, and aid in motion planning.

Equipped with ARMOR, robots can navigate complex, crowded environments more flexibly, avoiding collisions and ensuring heightened safety.

Apple is the world's most valuable company, but technology keeps racing ahead. In such a fiercely competitive landscape, even a high market value cannot dispel the sense of crisis Apple feels as a technology enterprise.

Recent years have seen Apple stumble in its pursuit of new growth avenues: its automotive ambitions fell through, and the much-anticipated Vision Pro headset faced weak sales and rumors of production halts.

While products like the iPhone and Mac sustain Apple's prosperity, the company must seize the next "iPhone moment" in tech to ensure its dominance.

Thus, humanoid robots have emerged as one of Apple's strategic bets.

ARMOR tackles blind spots and boasts a 26x improvement in computational efficiency.

This system can be seen as the humanoid robot's intelligent skin, enhancing perception and facilitating precise motion control.

ARMOR is a hardware-software integrated system comprising multiple small LiDAR sensors and the ARMOR-Policy motion planning strategy.

In essence, ARMOR combines the robot's "eyes" with a learning motion algorithm.

Traditionally, cameras or LiDAR are mounted on the head and torso to give robots environmental perception. However, the robot's own arms often obstruct the view of these sensors, creating blind spots and complicating motion planning in crowded settings.

Apple's ARMOR system aims to resolve this issue.

Tactile sensors, commonly used in robotic hands, are challenging and costly to integrate into humanoid robot arms.

Hence, ARMOR employs the SparkFun VL53L5CX time-of-flight (ToF) LiDAR, which, despite its lower detection accuracy, is lightweight, low-cost, low-power, and easy to scale.

Its wearable design means it can, in principle, be fitted to any humanoid robot.

The research team deployed 40 LiDAR sensors on the robot's arms, 20 on each, providing an almost unobstructed view of the environment.

Fourier Intelligence's GR-1 robot served as the experimental subject.

Perception data is transmitted as follows:

The sensors are wired in groups of four to an XIAO ESP microcontroller, which reads them over the I2C bus and forwards the data via USB to the robot's onboard computer (a Jetson Xavier NX). From there, the data goes to a Linux host equipped with an NVIDIA GeForce RTX 4090 GPU for processing.

This pipeline runs at 15 Hz, so the robot refreshes its view of the environment every 1/15 of a second (about 67 ms).
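The figures above imply some simple bookkeeping, sketched here in Python. The sensor count, the grouping of four, and the 15 Hz rate come from the article. The 8x8 zone count per sensor is the VL53L5CX's maximum resolution and is only an assumption about how ARMOR configures it. All variable names are illustrative.

```python
# Figures taken from the article.
SENSORS_PER_ARM = 20
ARMS = 2
SENSORS_PER_MCU = 4      # sensors grouped on one microcontroller
UPDATE_RATE_HZ = 15      # system update rate

# Assumption: each VL53L5CX runs in its 8x8 multizone mode (64 distance readings).
ZONES_PER_SENSOR = 8 * 8

total_sensors = SENSORS_PER_ARM * ARMS
mcu_count = total_sensors // SENSORS_PER_MCU
update_period_ms = 1000 / UPDATE_RATE_HZ
readings_per_frame = total_sensors * ZONES_PER_SENSOR
readings_per_second = readings_per_frame * UPDATE_RATE_HZ

print(f"sensors: {total_sensors}, microcontrollers: {mcu_count}")
print(f"update period: {update_period_ms:.1f} ms")
print(f"distance readings per frame: {readings_per_frame}")
print(f"distance readings per second: {readings_per_second}")
```

Under these assumptions, ten microcontrollers would serve the 40 sensors, and the downstream computer would receive a few thousand distance readings per frame.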

With perception hardware in place, the next step is enhancing motion planning.

The research team developed ARMOR-Policy, which is based on a Transformer encoder-decoder architecture similar to that of the imitation learning algorithm Action Chunking with Transformers (ACT).

ARMOR-Policy learns from collision-free human motion demonstrations and was trained on approximately 86 hours of data from the AMASS dataset.

It functions like a generative model, predicting the robot's next move based on sensor data and target joint positions.
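To make the inputs and outputs concrete, here is a minimal interface sketch in Python. It is not ARMOR-Policy: the learned Transformer is replaced by a hand-written stand-in rule that steps toward the target joints and slows down when any distance reading is small. The function name, the 0.2 m safety distance, the 0.05 rad step limit, and the 7-joint arm are all hypothetical values chosen for illustration.

```python
def stand_in_policy(depths_m, current_joints, target_joints,
                    safe_distance_m=0.2, max_step_rad=0.05):
    """Hypothetical placeholder with the same inputs as described in the article:
    sensor data plus target joint positions in, next joint positions out."""
    nearest = min(depths_m)
    # Speed factor in [0, 1]: full speed when nothing is within the safety distance.
    speed = max(0.0, min(1.0, nearest / safe_distance_m))
    next_joints = []
    for q, q_target in zip(current_joints, target_joints):
        # Limit the per-update change of each joint, then scale it by the speed factor.
        step = max(-max_step_rad, min(max_step_rad, q_target - q))
        next_joints.append(q + speed * step)
    return next_joints


# One control update with no obstacle nearby, and one with an obstacle at 0.1 m.
current = [0.0] * 7                  # hypothetical 7-joint arm
target = [0.5] * 7
clear_view = [1.0] * 2560            # every reading is 1 m away
blocked_view = [1.0] * 2559 + [0.1]  # one reading reports an object at 0.1 m

free_move = stand_in_policy(clear_view, current, target)
cautious_move = stand_in_policy(blocked_view, current, target)
print(free_move[0], cautious_move[0])
```

A learned policy would replace the body of `stand_in_policy`, while the surrounding loop (read sensors, query the policy, command the joints) would keep the same shape.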

Under identical hardware conditions, ARMOR-Policy outperformed NVIDIA's cuRobo, reducing collisions by 31.6%, increasing precise control success rates by 16.9%, and enhancing computational efficiency by 26x.

ARMOR also surpasses traditional perception systems in effectiveness and reliability.

Compared to robots with four depth cameras, those using ARMOR experienced 63.7% fewer collisions and a 78.7% increase in success rates.
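As a rough guide to reading these relative figures, the short Python sketch below applies them to made-up baselines. The 1,000 baseline collisions and the 260 ms baseline planning time are hypothetical numbers chosen only to make the arithmetic easy to follow. Only the percentages and the 26x factor come from the article.

```python
def after_relative_reduction(baseline, percent_fewer):
    """Value remaining after reducing `baseline` by `percent_fewer` percent."""
    return baseline * (1 - percent_fewer / 100)


def time_after_speedup(baseline_ms, speedup):
    """Time per computation after an N-fold efficiency gain."""
    return baseline_ms / speedup


BASELINE_COLLISIONS = 1000     # hypothetical
BASELINE_PLANNING_MS = 260.0   # hypothetical

vs_curobo = after_relative_reduction(BASELINE_COLLISIONS, 31.6)
vs_depth_cameras = after_relative_reduction(BASELINE_COLLISIONS, 63.7)
planning_ms = time_after_speedup(BASELINE_PLANNING_MS, 26)

print(round(vs_curobo), round(vs_depth_cameras), planning_ms)
```

In other words, a 31.6% reduction leaves roughly two-thirds of the baseline collisions, a 63.7% reduction leaves roughly one-third, and a 26x gain cuts the time per computation to about 4% of the baseline.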

The technology landscape for humanoid robots is vast, encompassing mechanical design, power and drive systems, control systems, AI, and more.

Apple's focus on the perception system likely stems from its critical role as a bridge between the virtual and real worlds for robots.

While AI excels in digital worlds, transitioning to physical realms remains challenging due to limited training data and deficiencies in perception and motion planning. Apple aims to build this bridge.

Apple Seeks New Growth Horizons

Attempted Acquisition of Boston Dynamics

Based solely on the ARMOR perception system, Apple's investment in humanoid robots appears modest. The project team comprises just four individuals: Daehwa Kim from Carnegie Mellon University, Mario Srouji (senior machine learning engineer at Apple), Chen Chen (machine learning engineer at Apple), and Jian Zhang (director of AIML robotics research at Apple).

The development of ARMOR represents a small-scale trial for Apple in humanoid robotics.

Currently, Apple's approach mirrors that of NVIDIA and Google, aiming to enter the humanoid robot ecosystem by providing key development tools.

However, unlike NVIDIA and Google, which have aggressively pursued humanoid robots, Apple adopts a more low-key, cautious stance, perhaps due to the failure of its automotive venture.

Since the "iPhone moment," Apple has been eager to create another industry-disrupting innovation. However, because of its unclear positioning in new businesses and its conservative product iterations, Apple has long been criticized for lacking innovation and new growth points.

For instance, Apple's "Titan" automotive project, launched in 2014, ran for 10 years and consumed over $10 billion in investment. Frequent leadership changes and indecision over whether to build a full vehicle or focus on autonomous driving technology meant it never produced a commercially viable result, and it was ultimately deemed a failure in February 2024.

Moreover, the Vision Pro, touted as a groundbreaking Apple product, sold poorly. Priced at $3,499, it lacked a robust content ecosystem. Expected to sell 1 million units in 2024, it fell short with fewer than 500,000, amid rumors of production halts.

Revenue growth in Apple's core businesses is also decelerating.

The iPhone, Apple's flagship product, has faced criticism for incremental updates, often described as "squeezing the toothpaste tube," that is, releasing improvements a little at a time.

From Q4 2022 to Q4 2023, Apple's revenue declined for five consecutive quarters year-on-year, only improving after significant investments in generative AI were announced.

Meanwhile, the global smartphone market is fiercely competitive and increasingly saturated, necessitating Apple's proactive search for change and new breakthroughs.

While Musk envisions Optimus lifting Tesla to a $25 trillion market value, Apple, with a market value of $3.6 trillion, is also eyeing this colossal pie.

Robots have emerged as a key potential area for Apple to expand its business horizons.

Apple's robotics exploration began around 2020.

Adrian Perica, Apple's mergers and acquisitions director, approached Boston Dynamics to discuss an acquisition, but the latter was acquired by South Korea's Hyundai Motor Group in 2021.

After several explorations, Apple chose to focus on home service robots.

In 2022, CEO Tim Cook defied opposition and launched a desktop robot project codenamed J595.

This iPad-equipped robotic arm, featuring Siri and Apple Intelligence, will serve as a smart home control center and video conferencing assistant, with an anticipated launch in 2026 or 2027.

Apple Intelligence represents a significant AI bet for Apple this year.

This personal intelligence system provides various AI functions across hardware products like the iPhone, iPad, and Mac, including understanding device data, processing documents, speech, video, and other files, perceiving user needs, and offering appropriate assistance.

According to Bloomberg, the J595 desktop robot project team comprises hundreds of individuals, many from the "Titan" project.

Kevin Lynch, Apple's Vice President of Technology, will oversee this project. He previously contributed to the launch of the Apple Watch and oversaw the automotive project's progress.

Apple's robotics R&D falls under its Hardware Engineering and Artificial Intelligence and Machine Learning departments, led by AI Director John Giannandrea.

Bloomberg adds that Apple might introduce mobile or even humanoid robots within the next decade. This year, Apple has hired top robotics experts from institutions like the Technion-Israel Institute of Technology.

Apple possesses notable advantages in entering the humanoid robot market, including expertise in sensors, advanced chips, hardware engineering, batteries, and environmental mapping. This year, it also launched Apple Intelligence, a major AI product.

However, with most global humanoid robot companies targeting 2025 for mass production, Apple's J595 desktop robot project looks late. Additionally, its AI research lags behind that of NVIDIA and Microsoft by two years. It remains to be seen how much more an iPad-equipped robotic arm can offer than ordinary smart home devices.

Apple may need to adopt a more decisive and bold approach to humanoid robots.