AI: A Double-Edged Sword Polluting the Global Internet

01/15 2025

Addressing both development and governance is paramount.

"Seeing the image of the Hollywood sign ablaze, I feared the worst for Hollywood, only to discover it was an AI fabrication."

Fan Chengzi (pseudonym), an avid moviegoer, frequently vents to friends about the "obviously fake" AI-generated images on social media. Yet amid recent news coverage of the Los Angeles wildfires, Chengzi failed to spot the many AI fakes mixed in with it.

Image source: Weibo screenshot

"The image of the burning Hollywood sign spread like wildfire across every movie fan group. Everyone joked that the sign had 'fallen' before the movie market did. Yet the next day, I saw media debunking the rumor and revealing it was AI-generated." Chengzi noted that the emotional pull of the fake image made it all the harder to tell apart from the real news it appeared alongside.

"Qu Xie Shang Ye" observed that despite the rumor's debunking, many netizens still perceived the AI image as genuine.

01. Have You Ever Been Fooled by AI?

Prior to the "Hollywood sign on fire" incident, an AI-crafted image of a "trapped little boy" also went viral on social media following a 6.8-magnitude earthquake in Tibet's Tingri.

Chengzi lamented, "At the time, I even complained that the image looked too obviously AI-made, with the wrong number of fingers and overly blurred facial features, and I assumed only middle-aged and elderly people who know little about AI would believe it. Yet days later, I too was 'fooled' by the AI image of the Hollywood fire."

Recent viral items such as the "Hollywood sign on fire" and the "earthquake-buried boy" deceived many people. Even after official refutations, numerous users, including young people, held firmly to their belief.

Image source: Douyin screenshot

Media reports revealed that a Qinghai internet user, seeking attention, paired the "buried little boy" image with information about the Xigaze earthquake, confusing and misleading the public and thereby spreading rumors. The individual was subsequently placed under administrative detention by local authorities.

On the evening of January 10, Douyin announced that it had dealt strictly with 23,652 pieces of false earthquake-related information and had pushed out rumor-debunking content. The announcement also noted that Douyin issued notices on AI content governance in 2023 and 2024, making clear that the platform discourages using AI to create low-quality virtual characters and will strictly punish the use of AI-generated virtual characters in content that contradicts scientific common sense, falsifies information, or spreads rumors.

Image source: Today's Headlines screenshot

Previously, "Qu Xie Shang Ye" mentioned in the article "In 2024, How Many People Were 'Harvested' by AI?" that AI-generated content is taking over social networks. Obviously fanciful content like "cats singing with their mouths open" and "Empress Zhen Huan machine-gunning Emperor Xuanzong" can still be recognized as AI creations, but many current AI-generated images and videos of people cannot be told apart from reality with the naked eye.

Chen Mu (pseudonym), who often browses short videos, said that running into AI-created content is increasingly likely. "After I watch one face-swapping video, the platform keeps pushing similar AI face-swapped remixes." However, platform review cannot yet accurately identify all AI-created content, so users are left to check and label posts themselves. Moreover, because AI labels are inconspicuous, some content is still taken as genuine even when it is clearly labeled as AI-generated.

Media reports revealed that in mid-June 2024, a man surnamed Wang, the actual controller of an MCN agency, was placed under administrative detention by police for using AI software to generate and widely disseminate false news, disrupting public order. According to the police report, Wang operated a total of 5 MCN agencies and 842 accounts. Since January 2024, he had generated false news through AI software, peaking at 4,000 to 7,000 pieces daily.

Image source: Weibo screenshot

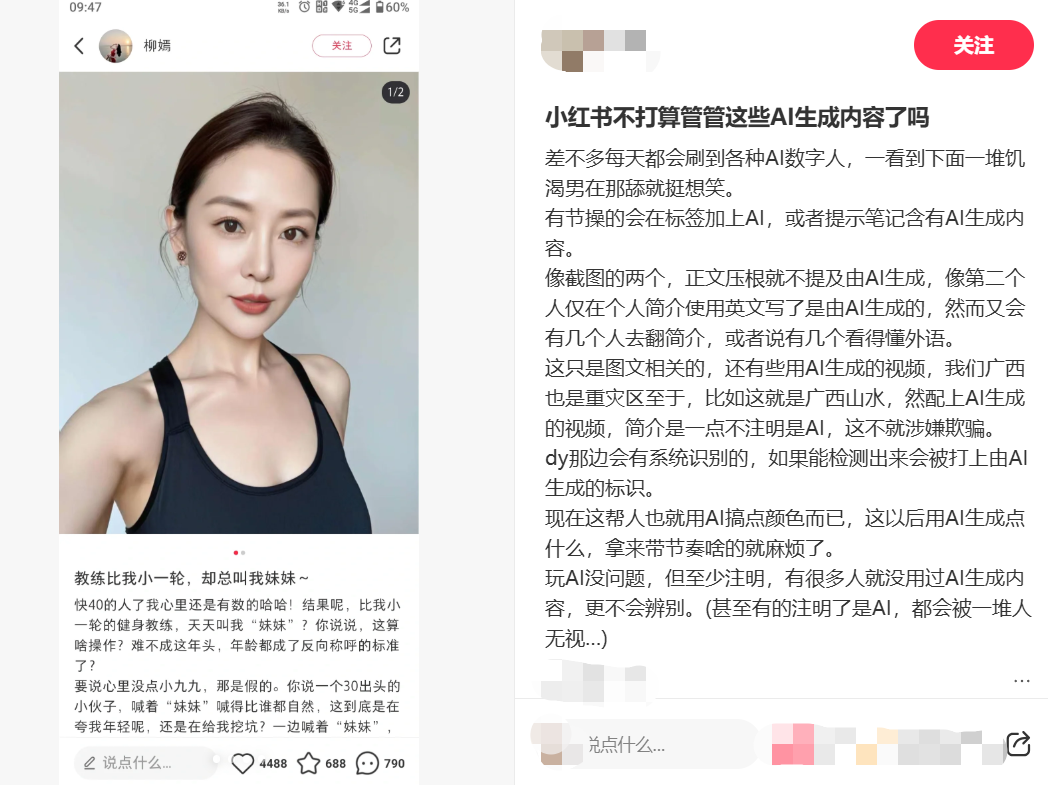

In the latter half of 2024, some netizens claimed to control 1,327 AI beauty accounts on Xiaohongshu through account matrix management software, none of which had been identified by the platform. With AI-generated personas so abundant, Xiaohongshu has also seen the rise of bloggers who specialize in exposing fakes.

Image source: Xiaohongshu screenshot

Some netizens pointed out that, to hide technical flaws, many AI-generated images of people avoid showing fingers. "Many use prompts to keep hands out of frame, place them behind the body, make fists, or cover them; for videos of people, pay attention to lip sync, as AI-generated videos often have lips that do not match the dialogue or that move unnaturally."

However, not everyone cares about image and video authenticity. Some netizens remarked, "As long as it looks good and provides emotional value, it's fine. Do real bloggers look exactly like their photos? Since none are real, does it matter if it's AI?"

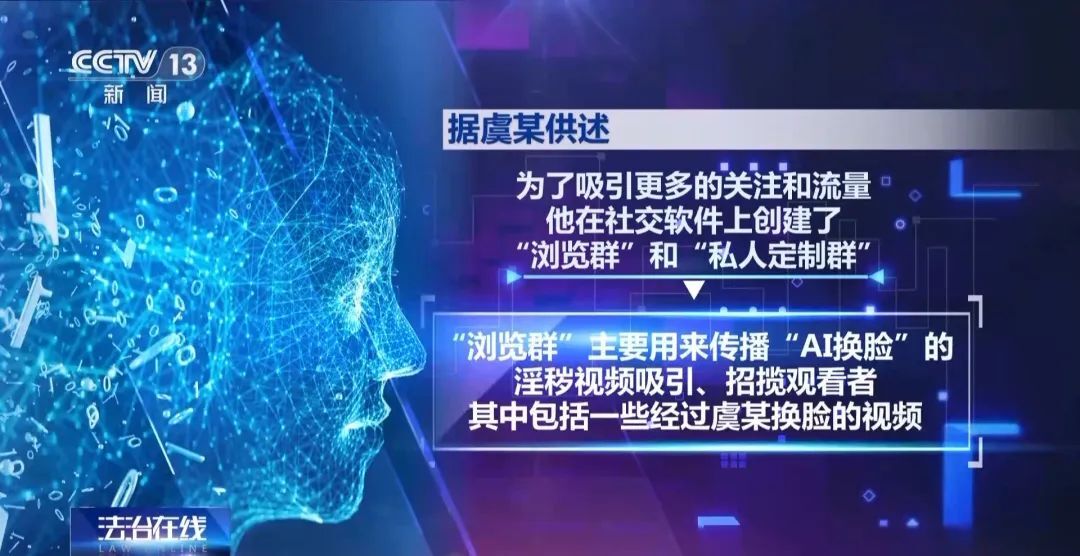

Because people are not yet vigilant enough about "digital humans," fraud cases involving AI face-swapping keep emerging.

According to the Beijing Business Daily, in February 2024, a fraudster used Deepfake technology to create a video of company executives speaking, tricking an employee into sending HK$200 million to designated accounts across 15 transfers.

Additionally, AI face-swapping of real individuals has been used illegally to create pornographic videos and to steal medical insurance funds. In one case in Hangzhou, a person used AI face-swapping to forge pornographic videos of female celebrities and even set up group chats to take orders for customized face-swapped pornographic videos, charging fees based on video duration and difficulty. The Xiaoshan District Procuratorate in Hangzhou seized over 1,200 obscene videos from the group.

Image source: CCTV News screenshot

AI has blurred the line between truth and falsehood, causing distress to a growing number of people.

Lawyer Zhang Zihang from Beijing Zhoutai Law Firm stated that when someone deliberately distorts the facts of a public event, uses AI or other technical means to create images and videos, and spreads them on social platforms with negative effects, the producer should be held responsible first. The producer may face administrative or criminal liability.

Furthermore, social platforms that publish false information may also bear legal responsibility. Zhang explained that although the platform is neither the provider of the technology nor the producer of the content, it has an obligation to review and supervise. If a platform fails to fulfill that obligation and false images spread with serious negative impact, regulators have the right to impose penalties on the platform, such as fines and rectification orders.

02. AI is 'Polluting' the Internet

On social platforms, numerous netizens have expressed concerns about the "AI invasion".

A Xiaohongshu netizen noted that large AI models are iterating rapidly. In 2023, AI-drawn human hands and feet were still a mess, but in 2024, the Flux model became markedly more accurate at drawing hands. In a few more years, AI might correctly depict all kinds of human body parts and movements. "If management isn't strengthened, the social network could become chaotic by then."

Image source: Xiaohongshu screenshot

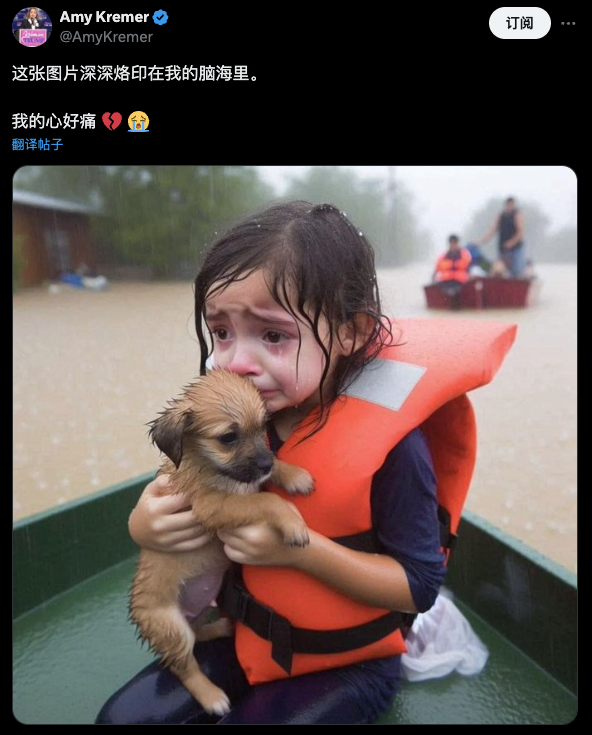

AI-generated content is also flooding overseas social networks, some seriously infringing on celebrities' rights and interests, and others having significant economic impacts.

In January 2024, AI-generated "indecent photos" of the celebrity Taylor Swift spread on social media platforms like X (formerly Twitter) and Facebook, garnering over 10 million views and causing public concern.

According to data released in 2023 by the cybersecurity company Home Security Heroes, there were 95,280 detectable Deepfake videos on the internet that year, 98% of which were pornographic, with women making up 99% of the people depicted.

In May 2023, a photo of a Pentagon explosion was widely shared on Twitter, including by many investment-related websites and social media accounts. According to media reports, the photo was released just in time for the opening of the US stock market that day, triggering a brief panic sell-off.

Image source: Weibo screenshot

When AI-generated content frequently mingles with real news, ordinary people, and even some professional institutions, struggle to tell truth from falsehood.

Analysts from the Internet Watch Foundation (IWF) stated that illegal content generated by AI (including images of child abuse, AI celebrity face-swapping videos, etc.) is rapidly increasing. "Organizations like ours or the police may be overwhelmed by having to identify hundreds or thousands of new images. We're not always certain if there's a genuine child in need among them."

Image source: Twitter screenshot

Lawyer Zhang Zihang believes that AI is gradually making people realize that seeing is not necessarily believing. The advancement of AI technology may cause netizens to swing from believing everything to believing nothing regarding online information.

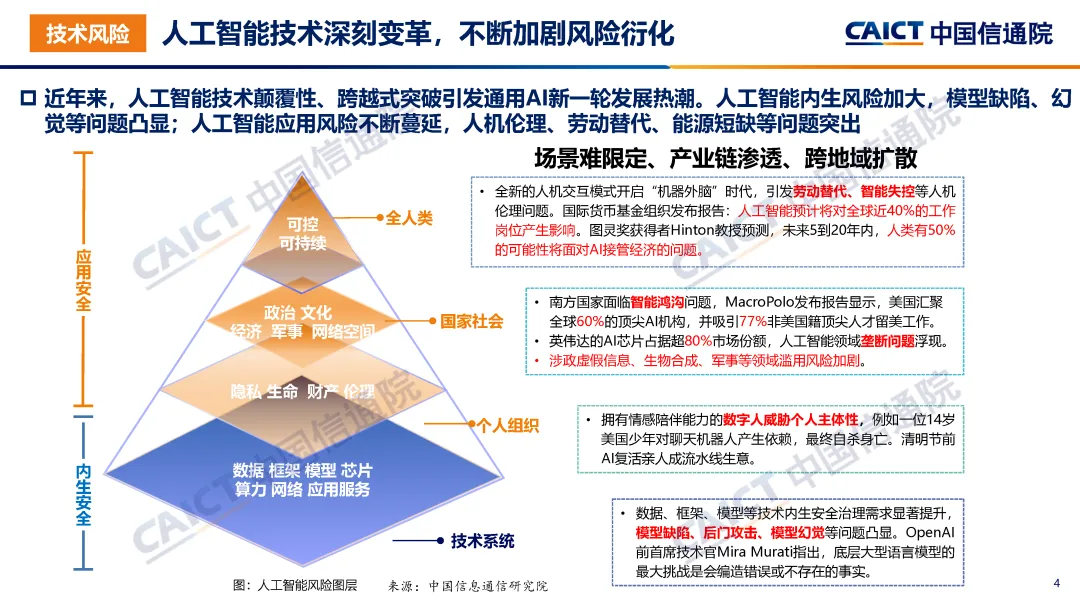

Beyond invading the internet content ecosystem, AI-generated content also poses risks to large model training. The "Blue Book on AI Governance (2024)" released by the China Academy of Information and Communications Technology mentions that in recent years, artificial intelligence's endogenous risks have increased, with issues like model defects and hallucinations becoming prominent; AI's application risks have also continued to spread, with issues like human-machine ethics, labor substitution, and energy shortages being prominent.

Image source: "Blue Book on AI Governance (2024)"

Mira Murati, the former CTO of OpenAI, once pointed out that the biggest challenge of underlying large language models is their tendency to invent false or non-existent facts.

Dr. Ilia Shumailov of the University of Oxford and his team published a paper in "Nature" showing that when generative models are trained on AI-generated content, the quality of what they ultimately produce declines significantly, and the feedback loop may cause model output to drift away from reality or even contain obviously biased content. Researchers refer to this phenomenon as "model collapse".
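The feedback loop behind "model collapse" can be illustrated with a toy simulation. This is a minimal sketch and not the method used in the paper: the "model" here is assumed to be nothing more than a frequency table of its training data, and the function name `simulate_collapse`, the vocabulary size, and the Zipf-like weights are all hypothetical choices made for illustration. Because each generation learns only from the previous generation's output, rare items that happen not to be sampled can never come back, so diversity can only shrink.

```python
import random
from collections import Counter


def simulate_collapse(vocab_size=50, sample_size=200, generations=30, seed=0):
    """Toy feedback loop: each generation trains only on the previous one's output.

    Returns the number of distinct tokens present in each generation's data.
    All parameters are illustrative assumptions, not values from the article.
    """
    rng = random.Random(seed)

    # Generation 0: "human" data with a long tail (Zipf-like weights).
    tokens = list(range(vocab_size))
    weights = [1.0 / (rank + 1) for rank in range(vocab_size)]
    data = rng.choices(tokens, weights=weights, k=sample_size)

    distinct_per_generation = [len(set(data))]
    for _ in range(generations):
        # "Train": the model is just the empirical frequency table of its data.
        counts = Counter(data)
        # "Generate": the next dataset is sampled from that model alone.
        data = rng.choices(
            list(counts.keys()), weights=list(counts.values()), k=sample_size
        )
        distinct_per_generation.append(len(set(data)))
    return distinct_per_generation


if __name__ == "__main__":
    # Prints how many distinct tokens survive in each generation.
    print(simulate_collapse())
```

In this sketch the count of distinct tokens never increases from one generation to the next, which mirrors, in a very simplified way, how low-probability content disappears once models feed on their own output.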

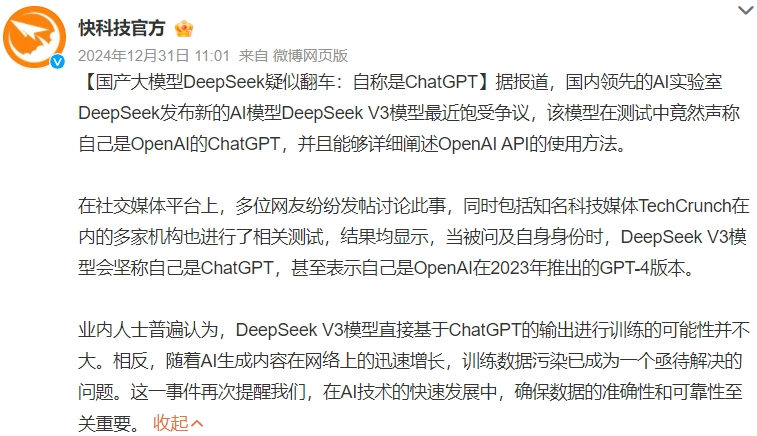

Previously, the leading domestic AI laboratory DeepSeek released a new AI model, the DeepSeek V3, which unexpectedly claimed to be OpenAI's ChatGPT during testing and elaborated on the usage of the OpenAI API.

Image source: Weibo screenshot

Zi Lu (pseudonym), who has participated in text generation model research, explained that data for training large models is screened and cleaned before input. Image and video generation is technically more complex, but its training data samples are easier to clean, for example by consistently sourcing works from photographers. "In contrast, text generation training data is more mixed and prone to contamination. Without careful manual screening, false news might be included."

If the rise of AIGC is inevitable, how can ordinary users effectively identify such content? Lisa (pseudonym), an AI security researcher at a major internet company, said that ordinary users can distinguish AI creations based on images' visual texture features. "Generally, generated images have more vivid colors and smoother textures, while generated videos have slow camera movements, blurry image quality, and often contain garbled content resembling text."

Image source: Xiaohongshu screenshot

Additionally, text and images can be identified with third-party tools. The "Blue Book on AI Governance (2024)" mentions that "using technology to govern technology" is becoming a necessary tool to balance AI safety and innovation.

As far as "Qu Xie Shang Ye" understands, there are now AIGC detection tools that can identify AI-generated text and images using methods such as similarity search, content analysis, and watermark tracing. Lisa emphasized that as generated content becomes increasingly realistic, the differences between authentic and synthetic images are narrowing, which makes it harder to train the models behind detection tools.
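Of the methods mentioned, similarity search is the easiest to illustrate. The sketch below is an assumption about how such a check could work, not a description of any real detection tool: it represents an image as a small grid of grayscale values, computes a simple "average hash," and compares a candidate against a hypothetical library of already-debunked fakes. The names `average_hash`, `hamming_distance`, and `find_known_fake`, and the distance threshold, are all illustrative.

```python
def average_hash(pixels):
    """Return a bit list: 1 where a pixel is brighter than the image's mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a, hash_b):
    """Count the positions at which two equal-length hashes differ."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(hash_a, hash_b))


def find_known_fake(candidate, known_fakes, max_distance=2):
    """Return (label, distance) of the closest known fake, or None if none is close.

    `known_fakes` maps a label to a grayscale pixel grid of the same size as
    `candidate`. The threshold `max_distance` is an illustrative assumption.
    """
    candidate_hash = average_hash(candidate)
    best = None
    for label, pixels in known_fakes.items():
        distance = hamming_distance(candidate_hash, average_hash(pixels))
        if distance <= max_distance and (best is None or distance < best[1]):
            best = (label, distance)
    return best


if __name__ == "__main__":
    library = {"burning_sign": [[10, 200], [220, 30]]}
    # A slightly brightened repost of the same picture still matches.
    print(find_known_fake([[20, 210], [230, 40]], library))
```

A hash like this only catches reposts and light edits of fakes that are already known; spotting a brand-new synthetic image is the harder problem that Lisa describes.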

However, the majority of users are laypersons unfamiliar with AI technology and lack the awareness and ability to differentiate. The task of identifying AIGC and distinguishing truth from falsehood should not fall upon users. Creators should voluntarily disclose the nature of their content, while platforms, with their advanced technical resources and teams, should assume primary responsibility for identifying and reviewing AI content.

03. Balancing Development and Governance

Attorney Zhang Zihang believes that to mitigate risks associated with AI-generated content, platforms must strengthen regulations in addition to enhancing users' verification awareness and skills. "Particular scrutiny should be given to content that experiences rapid viewership and sharing. If confirmed as AI-generated and false, it should be promptly removed. If authenticity cannot be verified, the content should be clearly labeled as AI-generated with an accompanying disclaimer."
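Zhang's recommendation amounts to a simple triage rule, which can be sketched as follows. This is only an illustration of the logic in the quote: the `Post` fields, the `Action` names, and the `viral_threshold` value are hypothetical and are not taken from any platform's actual policy.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    NO_ACTION = "no_action"
    REMOVE = "remove"
    LABEL_WITH_DISCLAIMER = "label_with_disclaimer"


@dataclass
class Post:
    shares_per_hour: int
    # True = confirmed AI-generated and false, False = confirmed genuine,
    # None = authenticity could not be verified.
    confirmed_ai_and_false: Optional[bool]


def triage(post: Post, viral_threshold: int = 1000) -> Action:
    """Apply the scrutiny rule to one post (the threshold is an assumed value)."""
    if post.shares_per_hour < viral_threshold:
        # Not spreading fast enough to enter the extra-scrutiny queue in this sketch.
        return Action.NO_ACTION
    if post.confirmed_ai_and_false is None:
        # Cannot be verified: keep it up, but label it and attach a disclaimer.
        return Action.LABEL_WITH_DISCLAIMER
    if post.confirmed_ai_and_false:
        # Confirmed AI-generated and false: take it down promptly.
        return Action.REMOVE
    return Action.NO_ACTION


if __name__ == "__main__":
    print(triage(Post(shares_per_hour=5000, confirmed_ai_and_false=None)))
```

The hard part in practice is not the rule itself but producing a reliable value for `confirmed_ai_and_false` quickly enough to matter.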

According to "QuJieShangYe," several social media platforms have already implemented regulatory norms tailored to AIGC content.

In 2023, Xiaohongshu initiated the development of an AIGC governance system, prominently flagging content suspected of being AI-generated. Douyin issued a series of announcements in 2023 and 2024, including "Platform Norms and Industry Initiatives on AI-Generated Content" and "Governance Announcement on AI-Generated Virtual Characters," continuously cracking down on various types of fraudulent AIGC content. This year, in January, Kuaishou established a "Debunk" section within the platform, sharing debunking content through official accounts, leveraging algorithm optimization to increase exposure, and simultaneously pushing debunking information to users who have previously engaged with related rumors.

Image source: Weibo screenshot

Bo Wenxi, chief economist for China at the China Enterprise Capital Alliance, suggests that platforms can also enhance user reporting mechanisms and establish copyright protection frameworks in addition to strengthening supervision and governance. Users must adhere to relevant copyright laws when utilizing AI-generated content, clearly indicating the source and copyright ownership to prevent infringement. Simultaneously, focus should be placed on user education, improving users' ability to identify AI content and their awareness of distinguishing truth from falsehood through official channels such as guides, tutorials, and case studies.

Attorney Zhang Zihang further stated that AI generation tools should include a prominent labeling function. Editing services that significantly alter personal identity traits, such as face replacement and voice synthesis, must carry a mandatory AI-generated label. "If such services are not appropriately labeled and the creator fails to disclose their AI origin, I personally believe it constitutes joint infringement, and the responsible party must bear a portion of the tort liability."

Image source: Weibo screenshot

From a practitioner's perspective, Zi Lu believes that curbing the proliferation of AI-generated false content also necessitates user restrictions. "'Harmless' and 'helpful' were the two primary criteria we previously considered when generating text. While overly safe content may lack utility, particularly interesting and useful content can pose risks. Hence, enterprises also prioritize the safety of generated content."

"AI tool companies strive to avoid generating content with distorted values, such as pornography and violence, but they cannot control how the content is used. For instance, if someone requests AI to create a face-swapped photo of an attractive woman, the photo itself is benign. However, if the user disseminates rumors or slanders the woman using this photo, the issue lies with the user and represents an area challenging for AI companies to govern."

Currently, both domestic and international entities are placing increasing emphasis on the regulation and compliance of AIGC. At least 60 countries have formulated and implemented AI governance policies.

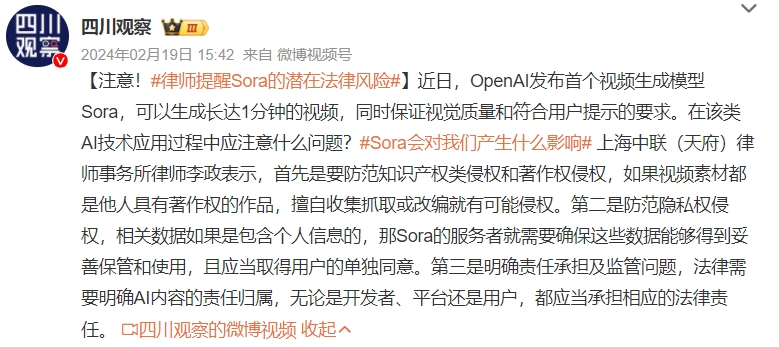

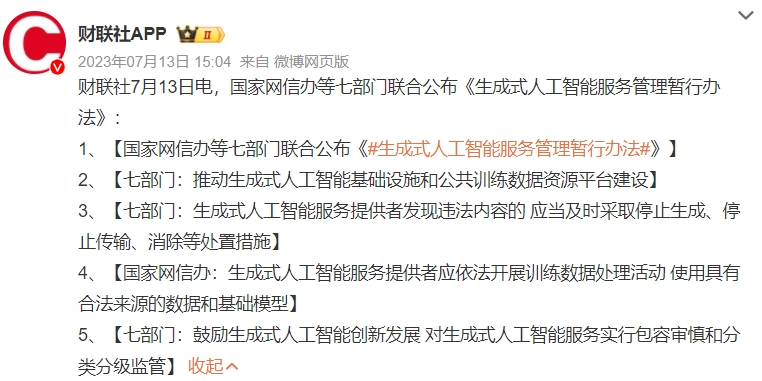

On January 10, 2023, the "Provisions on the Administration of Deep Synthesis of Internet Information Services," jointly issued by the Cyberspace Administration of China, the Ministry of Industry and Information Technology, and the Ministry of Public Security, came into effect, aiming to strengthen the management of deep synthesis of internet information services, safeguard national security and social public interests, and protect the legitimate rights and interests of citizens, legal persons, and other organizations. Subsequently, on August 15, 2023, the "Interim Measures for the Administration of Generative AI Services," issued by the Cyberspace Administration of China in conjunction with the National Development and Reform Commission and other departments, also came into effect.

Image source: Weibo screenshot

On September 14, 2024, the Cyberspace Administration of China issued a notice for public consultation on the "Measures for the Identification of AI-Generated Synthetic Content (Draft for Comment)" to regulate the identification of AI-generated synthetic content, safeguard national security and social public interests, and protect the legitimate rights and interests of citizens, legal persons, and other organizations. The successive implementation of multiple regulations and supervisory policies has provided more concrete avenues for rigorous governance.

New technological advances require new regulatory systems; the self-discipline of technology and business enterprises alone is not enough, and regulators and platforms must work together. Although AI's scope of application is broad and loosely defined, the boundaries of law and morality remain clear. Policy supervision and platform review will inevitably tighten, and it is worth watching how these changes affect the content ecosystem on social platforms.