Reviewing 2024: The Prevalence of Cost-Effective AI Commercialization

01/16 2025

The past year witnessed AI emerge as a dominant investment theme in global capital markets, with commercialization becoming the linchpin for large AI models.

In summary, 2024 was a mixed year for large model commercialization. On the positive side, paid AI use cases abounded, from $9.9 AIGC pet portraits to $200-a-month ChatGPT Pro subscriptions and even large-model all-in-one appliances priced at hundreds of thousands per unit. Both the consumer (C-end) and business (B-end) markets appeared to be thriving.

However, on the revenue side, both domestic and international model makers faced significant pressure. In 2024, rumors circulated that several overseas AI startups and members of China's "AI Six Tigers" had halted pre-training and were seeking acquirers. Models such as Sora and o1 demanded heavy compute, had limited commercial applications, and came with steep price points. While actively exploring new technical avenues, many model makers struggled continuously with commercialization.

The ups and downs of AI generated a steady stream of headlines and unprecedented buzz. As the year closes, let's take stock by revisiting three industry narratives that capture the main commercialization thread for large models in 2024.

GPT-5 never arrived in 2024, yet hardly anyone seemed anxious about it. Technical staff may still have cared, but users and enterprises were no longer rushing to keep pace with OpenAI's latest releases, a stark contrast to the earlier fervor surrounding Sora.

The new darling of the model world was the domestic startup DeepSeek. Some overseas institutions even translated Chinese reports on DeepSeek into English to learn more about the company. Renowned for its ultra-high cost-effectiveness, it has been dubbed the "Pinduoduo of the AI world." In May 2024, DeepSeek released V2, which pushed training and inference costs to new lows and ignited a domestic token price war. By year's end, DeepSeek V3 was reported to outperform GPT-4o and ranked seventh on the Chatbot Arena leaderboard, the only open-source model in the top 10, with a reported training cost of just $6 million.

In 2024, the API market's focus shifted from top-ranked models to cost-effectiveness.

Public acceptance of AI subscription fees increased in 2024, evidenced by the proliferation of increasingly expensive AI subscription memberships.

For instance, OpenAI's ChatGPT Pro, launched during its December product event, charges $200 per month, yet many tech bloggers are willing to pay. The AI programming platform Devin went further still, pricing its monthly plan at $500.

While it may be argued that programmers earn high salaries and are willing to pay for AI, many ordinary individuals also shell out for AI applications.

The head of a major Chinese app with a nationwide user base remarked, "People say Chinese consumers aren't keen on spending now, but that hasn't been my experience. After we integrated AI into our products, our payment rate rose by dozens of times. Smart picture books for parents, AI PPT generation, intensive report reading, mind map creation, and other workplace productivity tools are high-frequency payment scenarios."

As users spend more on AI, they no longer rely exclusively on the top models from OpenAI and Google; instead, they assemble a tailored "combination punch" of products.

For example, a programmer might subscribe to Cursor and Anthropic's Claude (including its Sonnet model) at the same time and use them together. A media professional doing creative work might command several "AI employees," such as Kimi, Wenxin Yiyan, Doubao, and DeepL. Personally, I also use Tencent Yuanbao for intensive paper reading and mind map generation.

In short, subscribers are not priced out of top models; they simply find that combining multiple models offers better cost-effectiveness.

In 2024, I interviewed a data company that shared, "In 2023, to embrace AI as quickly as possible, we spent a fortune when everything was at its most expensive. We invested heavily in GPUs and then paid model vendors for training, spending even more. This year, we're determined not to upgrade our large models and will stick with the old ones."

An AI application entrepreneur paid for a ChatGPT Pro membership to use o1 pro mode, only to find it wasn't significantly better than o1 or GPT-4o, and decided against renewing.

Another AI startup tested GPT-4 Turbo for non-coding scenarios and found the costs unexpectedly high. Unable to afford these training expenses, and without a viable business model for passing the costs on to customers, the company quickly switched back to the older GPT-3.5, turning to GPT-4 only when absolutely necessary.

"Older models may be less capable, but combining several solutions around them performs better than newer models, which come with higher latency." Moreover, many scenarios can't be handled by advanced models alone.

After a year of trial and error, the first wave of enterprise users in the large model market placed greater emphasis in 2024 on balancing costs and benefits, no longer paying blindly for larger parameter counts and higher compute overhead. Enterprises that entered the market in 2024 were naturally more cautious about AI, focusing on risk control and aiming to extract the most intelligence from the smallest investment.

Consequently, in the B2B government and enterprise market, large models began to depreciate more slowly. When a new model launched, the old one was no longer readily discarded or completely overhauled; engineering work kept it in business use. This shift implies that large model algorithms, as software, won't become obsolete as quickly. As enterprise digital assets, they have a longer effective service life and therefore better cost-effectiveness.

The three narratives above make it clear that cost-effectiveness was the primary commercialization thread for large models in 2024. Why is that?

At the model level, commercial utilization no longer prioritizes "scale above all else."

The notion that scaling laws might have hit a wall gained traction. New models' performance gains were no longer proportional to their compute consumption, and returns on investment steadily declined, fostering skepticism in both academia and industry. Meanwhile, other technical pathways began to bear fruit.

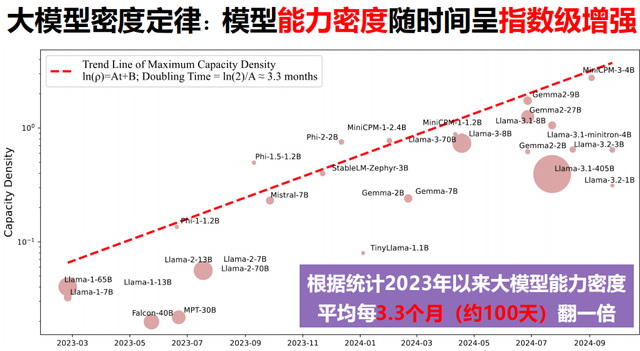

For instance, DeepSeek innovated across its model architecture, maintaining performance while cutting inference costs. Researchers from Tsinghua University and ModelBest introduced the concept of "capability density" to achieve greater capabilities with fewer resources, enabling more sustainable AI development. OpenAI also offered the o1-mini model, which underwent reinforcement fine-tuning and delivers faster inference and lower compute consumption than the standard o1.

Beyond model scale, commercial users began weighing other dimensions, such as cost-effectiveness, ease of use, service level, and ecosystem support.

At the market level, the focus shifted from "delivering large models" to "delivering intelligence."

Across the three stories, API buyers weighed rankings against cost-effectiveness, subscribers combined products to get the best results, and project-based deployments sought to balance overall returns. This shows that large model commercialization has moved beyond its initial stage of "delivering large models" into a second stage of "delivering intelligence." Users and enterprises are no longer deploying models for novelty's sake; they are using large models to deliver practical benefits in a growing number of specific task scenarios. As a result, how buyers choose large models, and how much they are willing to pay, began to diverge.

For example, in straightforward tasks, older or free models paired with well-crafted prompts are sufficient. In complex tasks, even GPT-5 might falter, making cost-effective models combined with engineering a more worthwhile investment.
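The "good enough" selection logic described here, like the startup that fell back to GPT-3.5 and reserved GPT-4 for when it was truly needed, can be sketched as a simple cost-aware router: each task goes to the cheapest model tier whose capability covers it. This is a minimal illustration, not any vendor's method; the tier names, per-token prices, and the 1-5 complexity score are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                   # hypothetical label, not a real product
    price_per_1k_tokens: float  # made-up illustrative price, not real pricing
    max_complexity: int         # hardest task (1-5 scale, assumed) this tier is trusted with


# Hypothetical tiers, from a free small model up to a frontier model.
TIERS = [
    ModelTier("free-small", 0.0, 2),
    ModelTier("mid-tier", 0.5, 4),
    ModelTier("frontier", 5.0, 5),
]


def pick_model(complexity: int, tiers: list[ModelTier] = TIERS) -> ModelTier:
    """Return the cheapest tier whose capability covers the task."""
    if not 1 <= complexity <= 5:
        raise ValueError("complexity must be between 1 and 5")
    candidates = [t for t in tiers if t.max_complexity >= complexity]
    return min(candidates, key=lambda t: t.price_per_1k_tokens)


def estimate_cost(tasks: list[tuple[int, int]]) -> float:
    """Total cost of (complexity, tokens) tasks under cheapest-sufficient routing."""
    total = 0.0
    for complexity, tokens in tasks:
        tier = pick_model(complexity)
        total += tier.price_per_1k_tokens * tokens / 1000
    return total


if __name__ == "__main__":
    tasks = [(1, 2000), (3, 4000), (5, 1000)]  # made-up workload: (complexity, tokens)
    routed = estimate_cost(tasks)
    # Baseline: send every task to the most expensive tier regardless of difficulty.
    frontier_only = sum(TIERS[-1].price_per_1k_tokens * tok / 1000 for _, tok in tasks)
    print(f"routed: {routed}, frontier only: {frontier_only}")  # routed: 7.0, frontier only: 35.0
```

Under these invented prices, routing cuts the bill from 35.0 to 7.0 while the hardest task still reaches the frontier tier. Real deployments would need a way to estimate task complexity, which is where the "engineering" half of the combination comes in.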

For large government and enterprise clients in particular, a model that depreciates before it is even deployed is difficult to value as an asset. Vendors therefore either bundle models with hardware into a "box-selling" business model or extend a model's effective service life to improve its cost-effectiveness.

As emphasized by Mike Krieger, Chief Product Officer of Anthropic, "You're not just delivering a product; you're delivering intelligence." If a large model is a plant on the soil of computing power, intelligence is the fruit, and what commercial users are actually purchasing is the fruit.

Commercial users' purchasing decisions depend not only on whether the plant is the tallest and most beautiful in the orchard but also on factors such as convenience of purchase, delivery logistics, timeliness, and palatability. For example, when ChatGPT debuted, a gray market sprang up around proxy account registration and overseas credit cards; this cumbersome workaround quickly faded once domestic large models became plentiful. Judged by the overall experience, "cost-effectiveness" better reflects the core value of large models.

Cost-effectiveness can be deemed the primary commercialization thread for large models in 2024.

From "delivering large models" to "delivering intelligence," 2024 marked a turning point for large model manufacturers.

Large models that delivered intelligence more fully and effectively gained significant development opportunities. Notably, large model families backed by cloud infrastructure thrived, benefiting from full-stack support that spans underlying computing power, development suites, AI applications, and business ecosystems. Examples include Baidu Intelligent Cloud + Wenxin Yiyan, Huawei Cloud + Pangu large model, Volcano Engine + Doubao large model, Alibaba Cloud + Tongyi Qianwen, and Tencent Cloud + Hunyuan large model.

Among AI startups, Zhipu AI and DeepSeek, which offer a better ratio of model performance to cost, showed stronger momentum. By contrast, 01.AI, which stayed out of the price war, offered weaker cost-effectiveness than its competitors and lacked the underlying technical innovation that would have let it cut prices without "bleeding" money. It struggled to gain a foothold in both the C-end and B-end markets and ran into operational setbacks.

2024 was the first year in which cost-effectiveness defined "delivering intelligence." So what changes can we expect in the large model market in 2025?

First, the large model "orchard" will grow steadily. The boundaries of intelligence will expand: lower-performance models will keep improving, while high-performance models will face no major setbacks, producing a stable landscape. Advanced and older models, flagship models from the tech giants, and signature models from small and mid-sized vendors will each excel in different task scenarios, all winning a share of commercial opportunities and growing together. For small and mid-sized vendors, this could be good news, as competitive pressure eases.

Second, multimodal large models will rise as "banyan trees." In 2024, model makers launched complex model families tailored to different scenarios, such as OpenAI's GPT, 4o, and o series, which could be confusing. Different tasks required switching between and coordinating multiple models, and the narrow capability boundaries of any single model limited AI's potential to boost productivity. A more cost-effective solution will therefore inevitably gravitate toward multimodal models that let users rely on one model for text, image, audio, and video input and output. Such a model becomes a "banyan tree" for users to dwell in, and it is most likely to thrive on the fertile soil of cloud vendors' computing power.

Third, the continued growth of super individuals (small AI startups and individual developers) will be pivotal. As we enter an era defined by the intelligence-to-price ratio, individuals will receive more intelligence support from platforms, lowering barriers to entry. It is therefore foreseeable that AI application innovation will increasingly be driven by these super individuals. This trend will spark a substantial surge in personalized custom AI software and AIGC content creation. Like hummingbirds, these super individuals will carry the seeds of intelligence across many industries, particularly those in the long tail.

From this vantage point, domestic AI ecosystems that offer a higher intelligence-to-price ratio are poised to make AI technology widely accessible and to advance intelligence across industries at lower cost. Consequently, large model commercialization will unfold faster than anticipated.

Having navigated 2024, the first year of the intelligence-to-price era, large models are now embarking on a prosperous 2025.