Lenovo Unveils NVIDIA's N1 Chip: Are AI PCs Poised for a "Qualitative Leap"?

01/22 2025

The future of AI PCs is set to pivot around enhancing edge computing power.

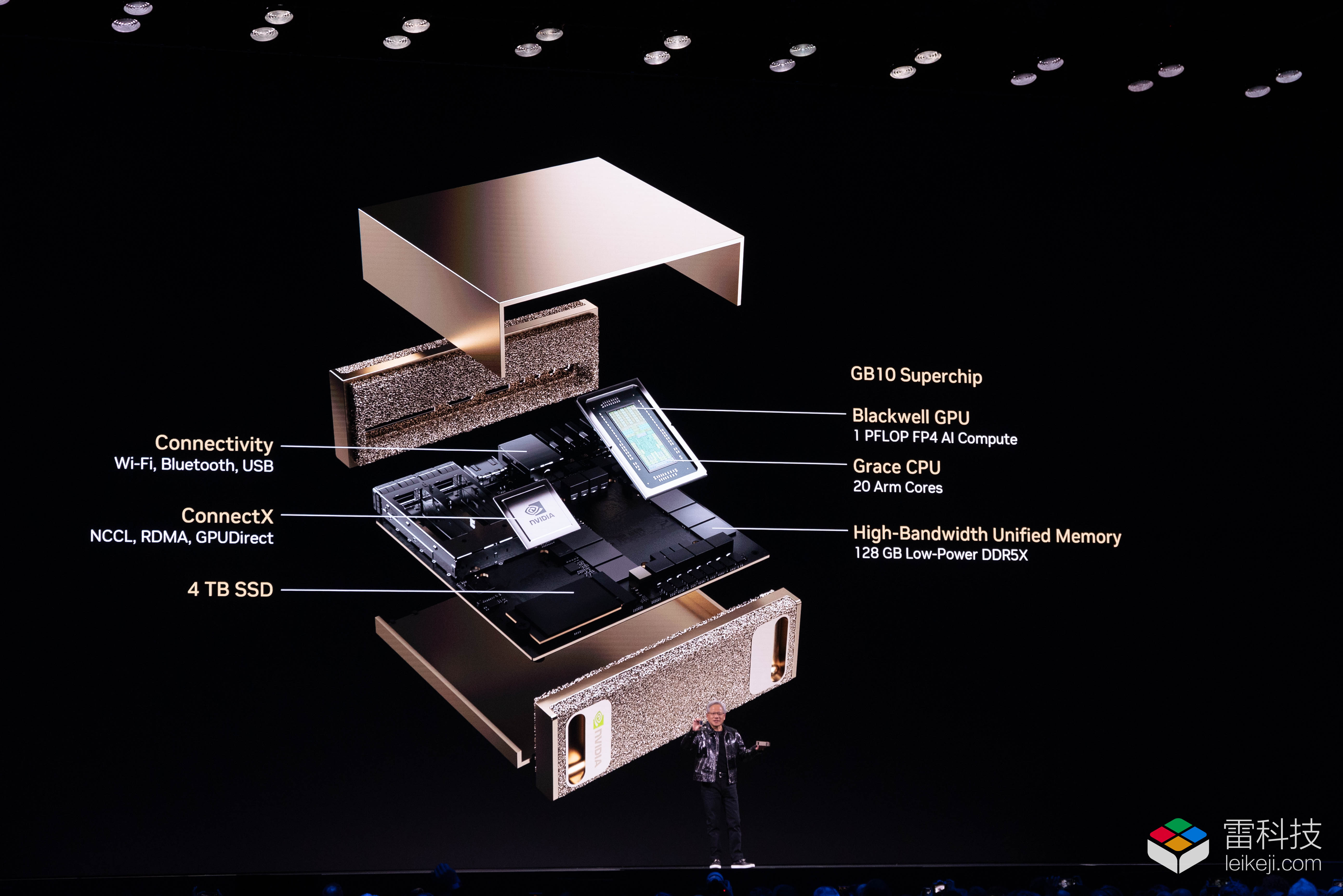

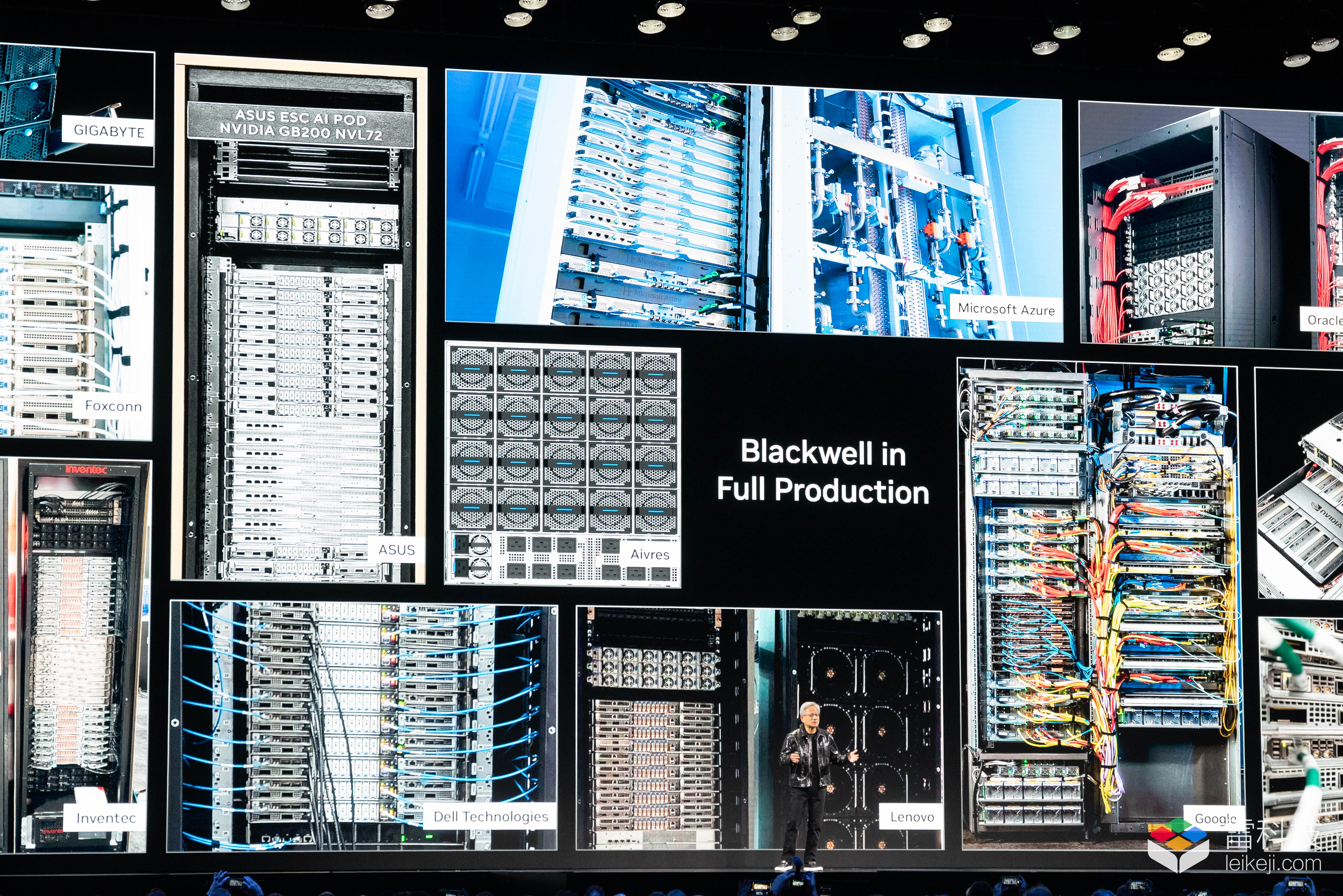

At CES 2025, NVIDIA redefined desktop AI supercomputing with Project DIGITS, showcasing the potential of the GB10 superchip, which combines a Blackwell GPU with a Grace CPU. Following the success of Intel's NUC, many brands have set out to turn compact desktop mini PCs into high-performance mobile workstations. NVIDIA has also announced plans to work with third-party partners to extend Project DIGITS, potentially bringing GB10 to a wider range of product forms.

Image source: Lei Technology

However, the 'future' arrived sooner than anticipated.

A recent Lenovo job posting drew attention online, not for the position itself but for its description: the role involved hardware design and development for the company's new-generation SoC, the "NV N1x". In this way, NVIDIA's new chip was unexpectedly revealed.

So, how powerful is the NVIDIA N1 chip?

Although neither NVIDIA nor Lenovo has disclosed much about the N1 chip, eager internet users have pieced together its general specifications. The "N1" is an ARM-based chip series, divided into the high-end N1x and the mid-range N1. Additional lower-tier models within the series cannot be ruled out. Reportedly built on TSMC's 3nm process, the N1 series is a collaboration between NVIDIA and MediaTek and is based on the Blackwell architecture.

From this, it appears that the N1 series is closely related to NVIDIA's GB10 superchip. However, because laptops operate under tighter power and thermal limits than desktop mini PCs like Project DIGITS, the N1 is likely not a full 20-core version of the GB10, which would explain its reported AI performance of 180-200 TOPS.

Image source: NVIDIA

Compared with the computing power of mainstream AI PCs on the market, the N1 chip stands out as a formidable player: most AI laptops that use a CPU+NPU+iGPU design deliver no more than 50 TOPS. Numerically, the N1's performance rivals that of earlier laptops with dedicated RTX GPUs.

As for hardware, the N1 is reported to debut in a new Lenovo 2-in-1 laptop, expected to be unveiled at Computex Taipei in mid-2025 and to ship in Q4 2025. Given the high price of NVIDIA's Project DIGITS, which starts at $3,000, laptops equipped with the N1 chip are expected to be priced above $10,000.

Are AI PCs finally poised for a "qualitative leap"?

Once commercialized, the N1 chip's high price means it will not be aimed at "most users." According to Runto, the average selling price in mainland China's online laptop market in Q3 2024 was 6,472 RMB, and the average selling price of the top 10 best-selling laptops on Amazon in 2024 was only $653 (about 4,776 RMB). Consumers willing to spend over $10,000 on a high-performance laptop remain a minority.

Image source: Lei Technology

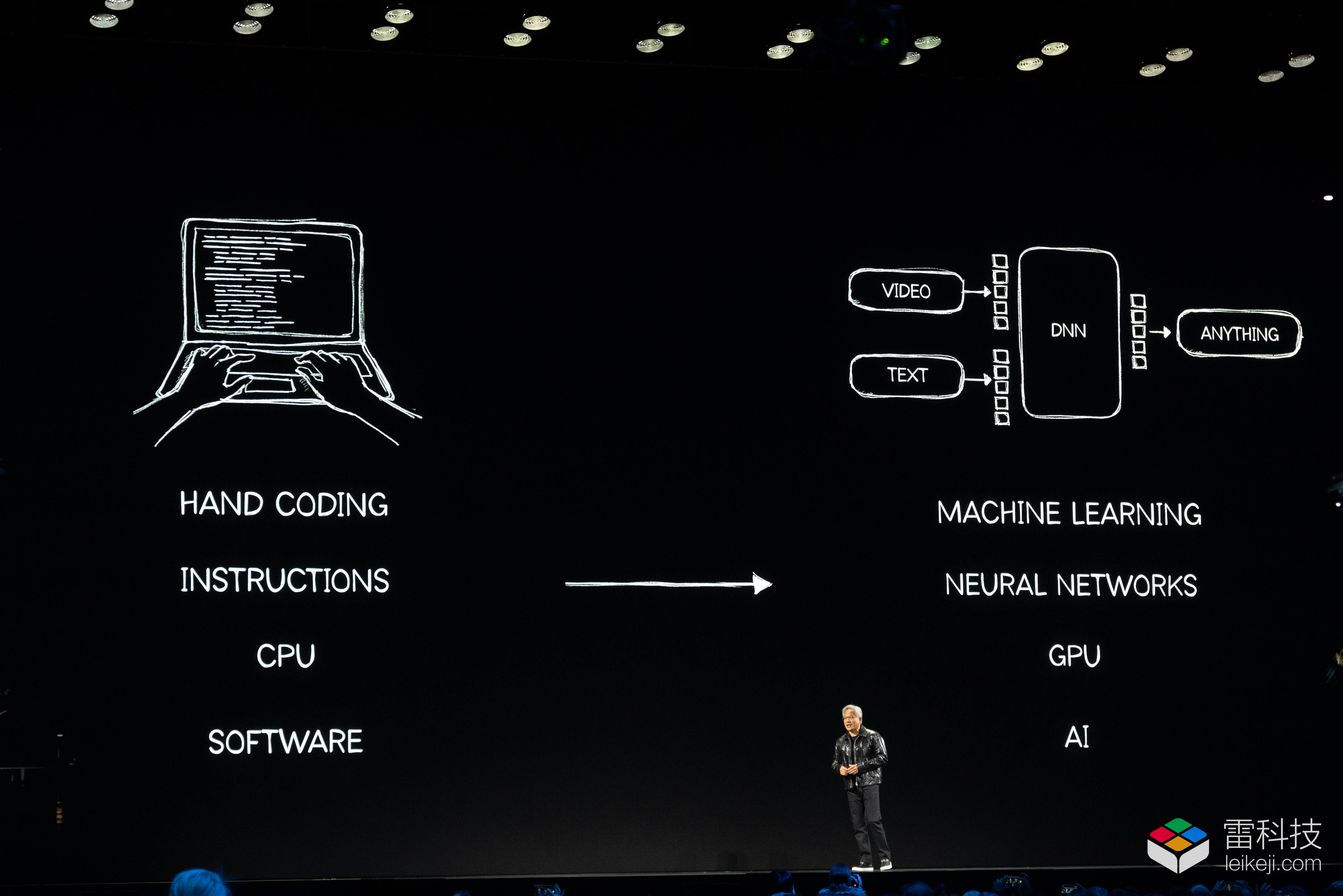

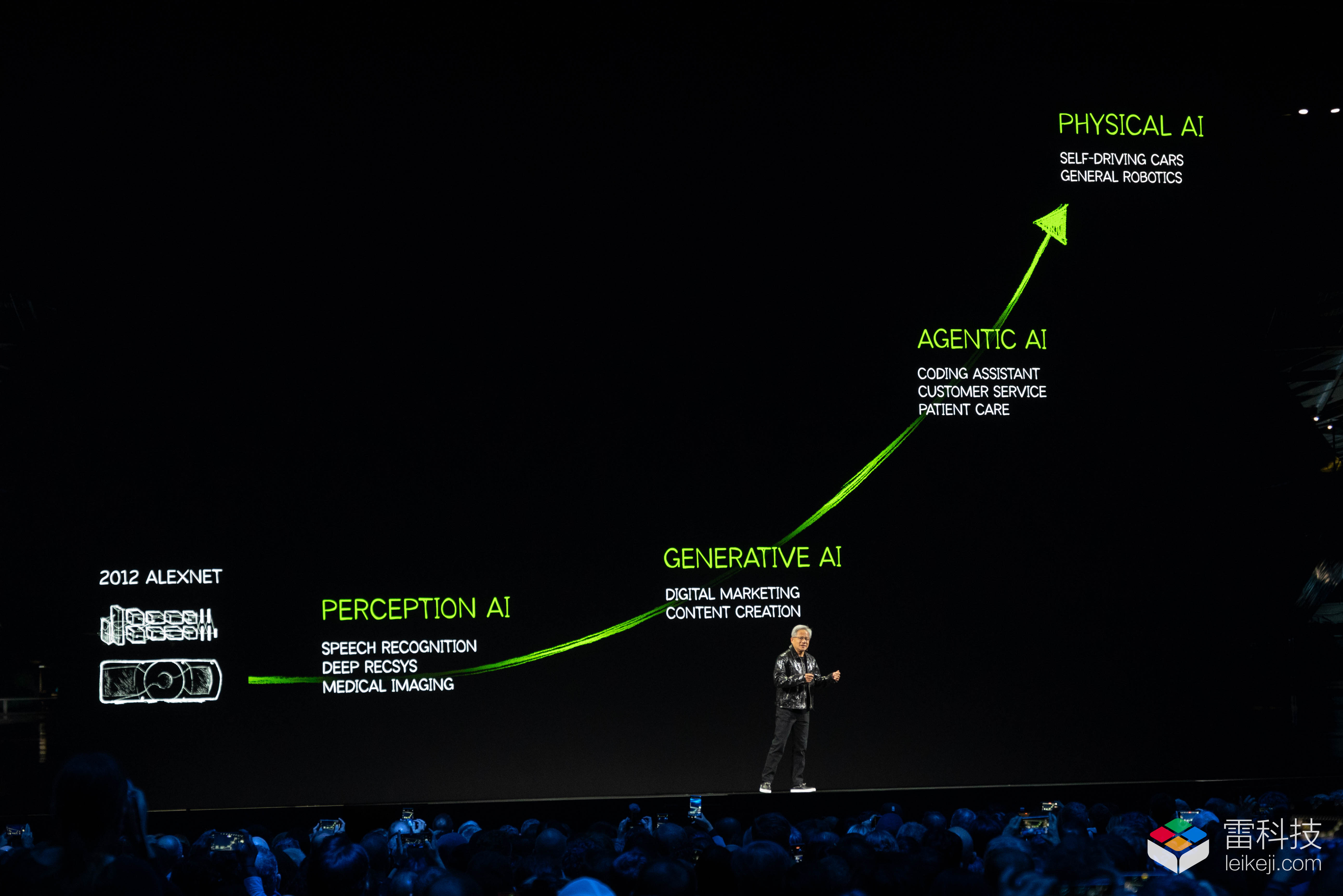

Nevertheless, from the perspective of the AI PC industry's long-term development, the N1 chip is significant. First, truly high-performance AI PC chips like the N1 can drive the transformation of the AI PC software ecosystem from the hardware side. At CES 2025, many brands named edge AI capabilities as their top priority for the coming year. Unlike today's mainstream hybrid AI solutions, edge AI requires AI PCs to have enough local computing power to run AI workloads themselves rather than offloading large AI tasks to cloud servers.

Image source: Lei Technology

High-performance ARM-based AI PCs, led by the N1x, are likely to have a significant impact on existing AI workstations (such as mobile workstations built on Intel's Xeon W series). Higher hardware integration, better support for AI interfaces, and the ARM architecture's well-known power-efficiency advantage will open new avenues for edge AI workstations.

For instance, in sports broadcasting, more powerful AI mobile workstations could sharply reduce the number of high-performance servers deployed on-site. For events like F1 that travel around the globe, fewer large devices mean lower logistics costs and faster moves between venues.

Moreover, the N1 chip's support for WoA (Windows on ARM) can accelerate the ARM PC ecosystem: by leveraging ARM's strengths in low-power local AI inference and real-time model processing, it can strengthen the entire WoA ecosystem. At the same time, hardware technologies that companies develop around ARM-based SoCs can be quickly reused in other ARM-based mobile devices such as smartphones and tablets, intensifying competition in low-power, high-performance computing.

From the AI industry's perspective, the arrival of local high-performance AI workstations also marks a genuine performance milestone for AI PCs. Today, laptops without a dedicated GPU generally offer no more than 50 TOPS, so their main AI features depend on cloud services. The emergence of high-performance AI PCs signals that the era in which AI PCs relied on the cloud to make up for insufficient local computing power is drawing to a close.

Image source: Lei Technology

For general consumers, the difference in experience between cloud and local computing is minimal. For government and enterprise users, however, particularly in healthcare and finance where confidentiality is paramount, high-performance edge AI PCs allow deep learning inference and data processing to run in a closed local environment, making it possible for these sensitive industries to adopt AI. Of course, when local computing power was insufficient, users in these industries could also deploy their own private edge AI servers to obtain controllable AI computing power. In terms of deployment barriers and maintenance costs, however, high-performance AI PC workstations are clearly the more cost-effective solution.

Local computing and cloud computing are not mutually exclusive.

In the long run, local computing power will not fully supplant cloud computing, as large-scale model training and massive data processing still require the support of supercomputing clusters. However, the emergence of high-performance AI PCs has broadened the boundaries of edge computing, reducing over-reliance on cloud resources and expanding the reach of AI applications, providing enterprise users with more options.

With industry demand for high mobile computing power growing, and with developing edge AI emerging as a 2025 "industry consensus," we can expect to see more high-performance commercial AI solutions like the N1. At the same time, the shift toward local AI computing will, through the software ecosystem, push consumer-grade devices toward stronger AI performance as well.

Image source: Lei Technology

Having built the AI PC label on cloud AI computing power, the PC industry inevitably ran into homogenized AI use cases. After all, there are only a handful of cloud AI service providers, and PCs' local computing power has been too limited to run more complex models. For PC brands seeking new growth amid this homogenization, edge AI computing power is indispensable.

Driven by large-model AI agents, the RTX 50 series graphics cards, and NVIDIA's N1 chip, the AI PC category is finally approaching its "qualitative leap." For brands and the entire industry chain, this shift in computing power means not only upgraded hardware architectures and a faster product iteration pace but also higher demands on the developer ecosystem, software frameworks, and AI business models, laying a solid foundation for the next stage, which will center on the evolution of on-device intelligence.

As for the surprises AI PCs will bring us after this "qualitative leap," perhaps we will find the answer at next year's CES.

Source: Lei Technology