Robots and Models: The Deep Integration Sparking the Second Wave of Intelligent Upgrades, Ushering in the Era of Physical AI

01/24 2025

CES 2025, which opened the year, put a wide range of new intelligent hardware on display. Numerous smart devices made their debut, showcasing applications built on AI technology. Although AI development still has a long road ahead, the shift from cloud to terminal is clearly underway, marking the start of the era of intelligent hardware.

Among these hardware products, robots, and humanoid robots in particular, stood out as the showcase for cutting-edge technology. NVIDIA's keynote featured a "humanoid robot army" of 14 robots, and CEO Jensen Huang (Huang Renxun) drew wide attention with his assertion that "the ChatGPT moment for general-purpose robots is imminent."

Looking back at the evolution of humanoid robots, earlier generations focused on precise control and task execution, with AI in a supporting role. Recent advances, by contrast, center on one keyword: AI itself. AI training is moving beyond traditional methods toward synthetic data, and proponents expect this to let AI tackle most cognitive tasks within the next 3-4 years. This shift is already reshaping daily life and points to much larger changes ahead as the range of tasks AI can handle keeps expanding.

As a leader in the humanoid robot revolution, Tesla has publicly stated its commitment to the Optimus robot. Tesla aims to manufacture thousands of humanoid robots in 2025 and to scale production tenfold in 2026, targeting 50,000 to 100,000 units, with output potentially doubling annually thereafter.
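The arithmetic behind these targets can be spelled out in a few lines. This is only a sketch of the stated figures, not a forecast: the function name `project_units` and the 2028 cut-off are assumptions made for illustration, and the 2025 range is simply the 2026 target divided by ten.

```python
def project_units(units_2026: int, last_year: int) -> dict[int, int]:
    """Double the 2026 target once per year through last_year (illustrative only)."""
    return {
        year: units_2026 * 2 ** (year - 2026)
        for year in range(2026, last_year + 1)
    }

# Low and high ends of the stated 2026 target, projected to an arbitrary 2028 horizon.
low = project_units(50_000, 2028)
high = project_units(100_000, 2028)

# "Tenfold in 2026" implies a 2025 output one tenth of the 2026 target.
implied_2025 = (50_000 // 10, 100_000 // 10)

print("implied 2025 range:", implied_2025)
for year in low:
    print(year, low[year], "to", high[year])
```

Read this way, "thousands" in 2025 corresponds to roughly 5,000 to 10,000 units, and annual doubling would put the range at 200,000 to 400,000 units two years after 2026, if the targets were met.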

Beyond humanoid robots, consumer-grade intelligent robots such as companion robots and cleaning robots, along with commercial service robots, are emerging with increasingly diverse functions. Notably, the surge of innovative AI companion robots earlier this year opened a new track for AI robot toys.

As mentioned in our previous article, "CES Intelligent Terminals Rise to Lead Computing Power Sinking, Terminal-Side AI Chip Performance Innovates Again," NVIDIA's AI evolution trajectory spans from Perception AI to Generative AI, progressing to Agentic AI, ultimately realizing Physical AI with sensing and execution capabilities.

In its ultimate form, Physical AI encompasses any device capable of perceiving and executing operations, unleashing its potential through AI empowerment. This concept spearheads AI technology innovation on robot terminals, bridging the digital and physical worlds through the deep integration of hardware and models.

The Second Wave of Intelligentization: Robots and Models Converge for Innovation

Leveraging intelligent models to enhance robots' understanding and decision-making through multimodal capabilities is the current industry trajectory. This is evident in the recent advancements in humanoid robots.

Recently, Agahsaxi and Qualcomm unveiled "Ultra Magnus," billed as the world's first humanoid robot to run a multimodal large AI model on the terminal side. Powered by a Qualcomm SoC, it is positioned as an embodied-intelligence humanoid robot solution based on terminal-side generative AI.

Qualcomm's QCS8550 processor underpins the robot's motion control, perception, decision planning, and voice interaction. The robot combines a terminal-side large language model with a terminal-side small language model for recognition, enabling smooth voice interaction and comprehension of user intent. It also integrates visual perception to coordinate motion control and manipulation.

Galaxy General's Galbot G1, featured in NVIDIA's "humanoid robot army" keynote, is equipped with an embodied grasping foundation large model. Galaxy General also co-released GraspVLA, a grasping foundation large model, with researchers from the Beijing Academy of Artificial Intelligence (BAAI), Peking University, and the University of Hong Kong. Galbot, a wheeled humanoid robot, prioritizes upper body functionality over bipedal mobility, utilizing a mature wheeled mobile platform for cost-effective and efficient motion control.

In the debate over robot form factors, wheeled robots have a cost advantage: a mature wheeled mobile platform simplifies motion control and may accelerate commercialization. At the model layer, Galbot offers generalized closed-loop grasping, trained on self-developed synthetic data that simulates millions of scenes and grasps.

Lingchu Intelligence, founded last year, recently introduced Psi R0, its first reinforcement learning (RL)-based embodied model. This perception-operation model supports dual dexterous hands for complex operations, integrating multiple skills for item and scene generalization.

The trend is not limited to business-facing (2B) robots. Consumer robot products, especially those that run language and perception models on the terminal side, are rapidly embracing it as well.

Elephant Robotics designs companion robots with animal appearances, equipped with AI large models for human semantics and emotional understanding, providing intelligent interaction with emotional value at its core.

TCL's newly launched split-type smart home companion robot Ai Me, built on an AI large model, engages in multimodal natural interactions, offering emotional companionship and anthropomorphic interaction. It also moves intelligently, capturing cherished family moments. Through user interaction, Ai Me continuously learns and adapts to family habits, controlling home devices and balancing home intelligence central control with emotional value, showcasing increasingly diverse functionalities.

NARWAL, a veteran in cleaning appliances, is moving toward embodied intelligence by combining robots with models. In its Xiaoyao series, described as an early form of embodied intelligence, a large model issues the cleaning instructions and the robot relies on semantic understanding to act, recognize objects, and complete cleaning tasks.

Consumer robots, particularly those offering emotional value like companion robots, possess strong toy attributes. With model technology advancements, these robots have evolved from simple interactive devices to integrated education, companionship, and entertainment hubs. Like figurines, dolls, and collectibles, they provide high emotional value and market acceptance, gradually expanding their market space.

Broadening our perspective, many innovative physical terminal devices are incorporating AI to iterate on their functions. As terminal devices and terminal-side AI become deeply integrated, future smartphones, PCs, appliances, automobiles, and toys may blur the line between conventional devices and robots. These physical intelligent terminals embody the vision of Physical AI.

In these terminal markets, terminal-side AI is evolving toward multimodal fusion, smaller models, and adaptation to the hardware configuration of each device. It is this ability of models to run on and empower terminal-side hardware that has made Physical AI feasible.
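One common ingredient of model miniaturization is weight quantization. The article does not say which compression methods any of these vendors use, so the following is only a minimal sketch of symmetric 8-bit quantization in plain Python; the function names and the sample weights are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers and the shared scale."""
    return [v * scale for v in quantized]

# Hypothetical weights standing in for one layer of a model.
weights = [0.42, -1.27, 0.05, 0.9, -0.33]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Storing each weight in one byte instead of a four-byte float cuts memory by about four times, at the cost of a bounded rounding error, which is the kind of trade-off that lets a model fit the memory and bandwidth of a terminal device.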

Physical AI: Unleashing Robots' Potential with Models

Physical AI's hardware demands are comprehensive. Focusing on models, a robot's ability to operate normally in uncertain environments partly hinges on its "brain" with generalized decision-making capabilities. Establishing a "world model" that accurately models, understands, and reasons about spatial and physical processes is crucial for embodied intelligence.

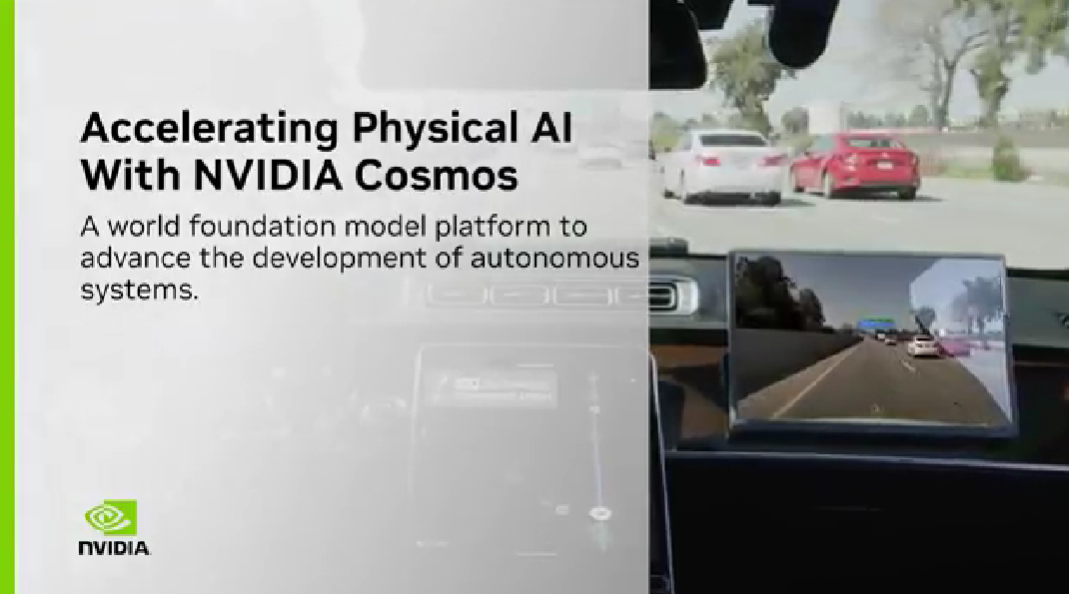

Cosmos, which NVIDIA unveiled at CES 2025, is its latest "World Foundation Model" platform for physical AI development, described as a set of "open-source diffusion and autoregressive Transformer models for physics-aware video generation." It includes pre-trained world foundation models that predict and generate physics-aware videos of virtual environments. By generating synthetic data from inputs such as text, images, videos, and motion, these models simulate virtual worlds and reproduce the spatial relationships and physical interactions of objects in a scene.

Under current AI architectures and models, a key to embodied intelligence is using generative physical simulation to capture a four-dimensional mirror image of real-world spacetime and thereby obtain physical data at scale. Unlike large language models, robot world models need precisely calibrated data to learn and generalize, which makes real-world data collection difficult. Collected multimodal data is also hard to calibrate, and inconsistent measurements can render it unusable.

The "Sim to Real" approach has therefore emerged as an efficient path for robot models. It generates controllable, physics-based synthetic data and simulates virtual worlds that mimic the spatial relationships and physical interactions of real-world objects. Robots can be tested and debugged in simulation before deployment, and reinforcement learning in virtual environments accelerates the learning of AI agents. Alignment against a limited amount of real data then further refines execution accuracy, moving toward the Physical AI vision.
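The loop described above can be illustrated with a toy example. The sketch below is an assumption-laden stand-in, not any vendor's pipeline: it replaces reinforcement learning with simple parameter calibration, uses a one-line sliding-friction formula as the "simulator," and every name and number in it (`TRUE_MU`, `MU_RANGE`, the noise level) is hypothetical.

```python
import math
import random

G = 9.81  # gravitational acceleration, m/s^2

def slide_distance(speed: float, mu: float) -> float:
    """Toy simulator: distance a pushed object slides before friction stops it."""
    return speed ** 2 / (2.0 * mu * G)

def synthetic_dataset(n: int, mu_range: tuple[float, float], rng: random.Random):
    """Randomize friction and push speed to produce synthetic (speed, mu, distance) rows."""
    rows = []
    for _ in range(n):
        mu = rng.uniform(*mu_range)
        speed = rng.uniform(0.5, 2.0)
        rows.append((speed, mu, slide_distance(speed, mu)))
    return rows

def calibrate_mu(real_samples) -> float:
    """Align the simulator with a few real (speed, distance) measurements."""
    estimates = [s ** 2 / (2.0 * G * d) for s, d in real_samples]
    return sum(estimates) / len(estimates)

def push_speed(target: float, mu: float) -> float:
    """Invert the simulator: speed needed to slide `target` metres."""
    return math.sqrt(2.0 * mu * G * target)

rng = random.Random(0)
MU_RANGE = (0.2, 0.6)  # assumed randomization range for friction
TRUE_MU = 0.35         # hypothetical real-world friction, unknown to the robot

sim_data = synthetic_dataset(1000, MU_RANGE, rng)
# Friction assumed by a controller that has only ever seen simulation.
mu_prior = sum(mu for _, mu, _ in sim_data) / len(sim_data)

# Three "real" measurements with up to 2% noise, used for alignment.
real = [(s, slide_distance(s, TRUE_MU) * (1 + rng.uniform(-0.02, 0.02)))
        for s in (0.8, 1.2, 1.6)]
mu_aligned = calibrate_mu(real)

target = 0.5  # metres
err_prior = abs(slide_distance(push_speed(target, mu_prior), TRUE_MU) - target)
err_aligned = abs(slide_distance(push_speed(target, mu_aligned), TRUE_MU) - target)
print(f"sim-only error: {err_prior:.3f} m, after alignment: {err_aligned:.3f} m")
```

The point of the sketch is the division of labor: simulation supplies cheap, controllable data in bulk, while a handful of real measurements corrects the gap between the simulated and the actual physics.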

True Physical AI requires substantial computing power and large-scale data for training and applying world models. Alongside the world models that guide Physical AI, terminal-side large models and specialized small models continue to make terminal devices more intelligent, enabling them to perceive environmental changes, optimize decisions based on observed data, and interact physically with greater accuracy. Integrating comprehensive, realistic world models with terminal robots will move AI toward the ultimate goal of Physical AI.

With world models and terminal-side language, perception, and operation models jointly empowering robots, Physical AI will continually endow these "terminal physical devices" with adaptive and deep decision-making capabilities, enhancing their real-world prowess.

Here, the emphasis is on "terminal physical devices," with robots as a prime example. Physical AI's ultimate goal isn't necessarily today's robots, let alone exclusively humanoid ones. Physical form is merely a carrier, adaptable to task execution with AI support. As terminal devices and terminal-side AI deeply integrate, future appliances, autonomous vehicles, and other gadgets may transcend traditional robot definitions. These physical intelligent terminals embody the Physical AI vision.

Closing Thoughts

The World Foundation Model provides physical world knowledge and high-fidelity data, laying the generalization foundation. Terminal-side models, refined, compressed, and optimized through multimodal fusion to suit terminal device computing needs, coupled with advancements in dedicated computing chips and AI accelerators, are gradually making the Physical AI era a reality.