Can DeepSeek Truly Surpass the US AI Industry?

01/31 2025

By Zheng Yijiu

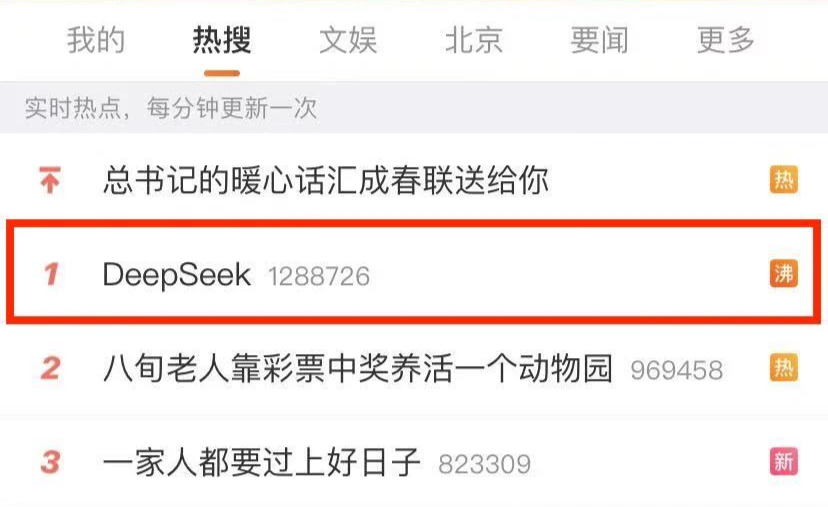

At the dawn of 2025, just as progress in AI large models seemed to be slowing or even stalling, the arrival of the Chinese large model DeepSeek reignited the industry's enthusiasm. It not only delivered impressive results on multiple standard benchmarks but also won broad community support through its open-source strategy. Global media, industry experts, and even institutional investors have lavished praise on it. As these accolades echoed back to China, some hailed it as a "milestone for domestic AI," while others tied it to "national fortune." DeepSeek quickly broke out of tech circles and topped Weibo's trending search list.

Weibo Screenshot

However, amid this chorus of praise, it is crucial to keep a clear head. After all, in the AI field we have seen too many short-lived "star products" that were either eliminated by the market or turned out to be hollow once their brief glow faded.

When evaluating DeepSeek, we must not only acknowledge its innovative technical approach but also examine the principles and limitations behind how it was achieved. More importantly, at this stage of rapid AI development, we should take a rational attitude toward technological innovation.

01

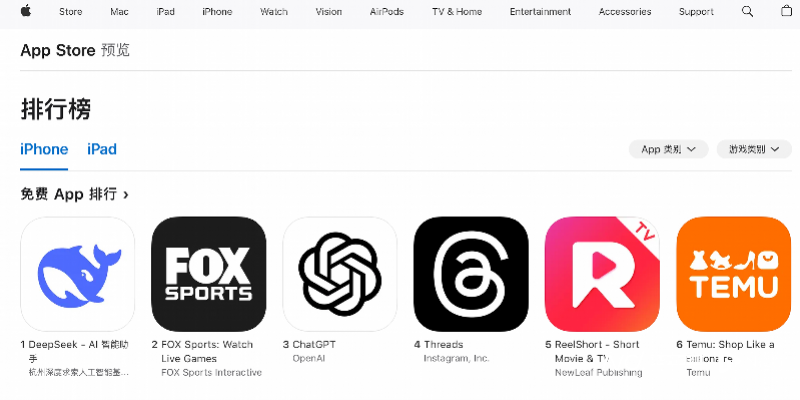

What makes DeepSeek capable of shaking up the US AI industry?

DeepSeek first set off a wave of enthusiasm in the US, where it recently overtook ChatGPT as the top free app on the US Apple App Store. Silicon Valley and the wider AI community have shown intense curiosity about it.

Apple App Store Free App Rankings

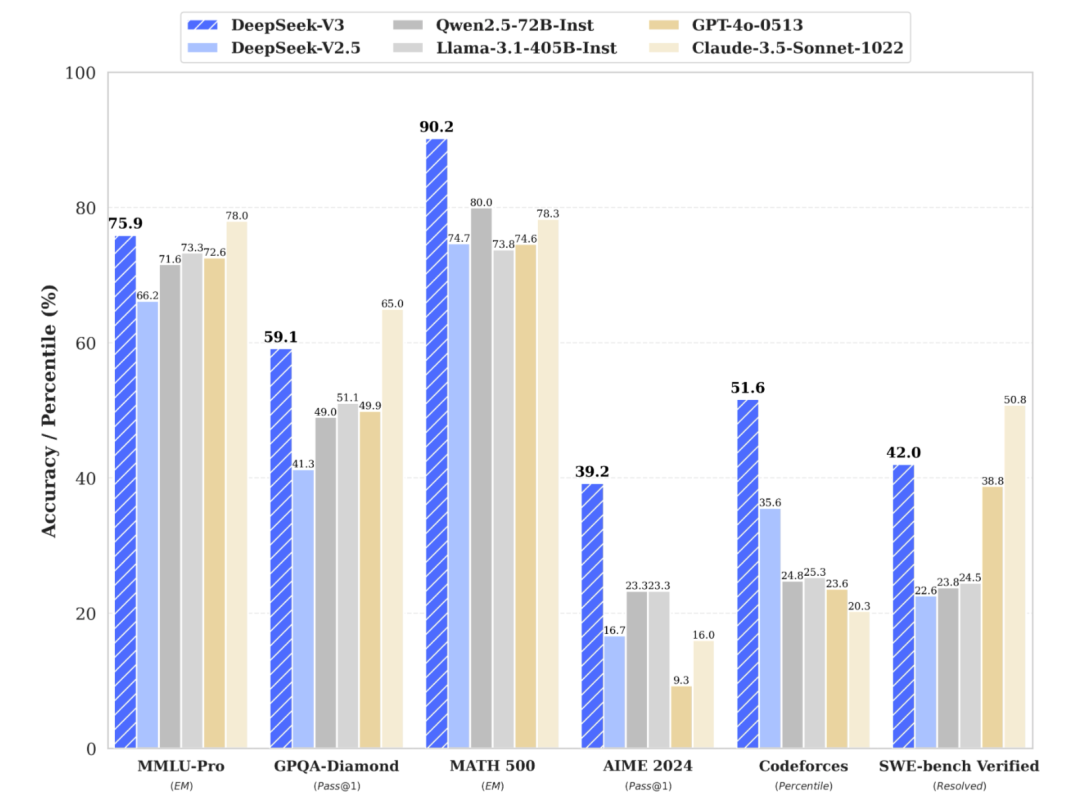

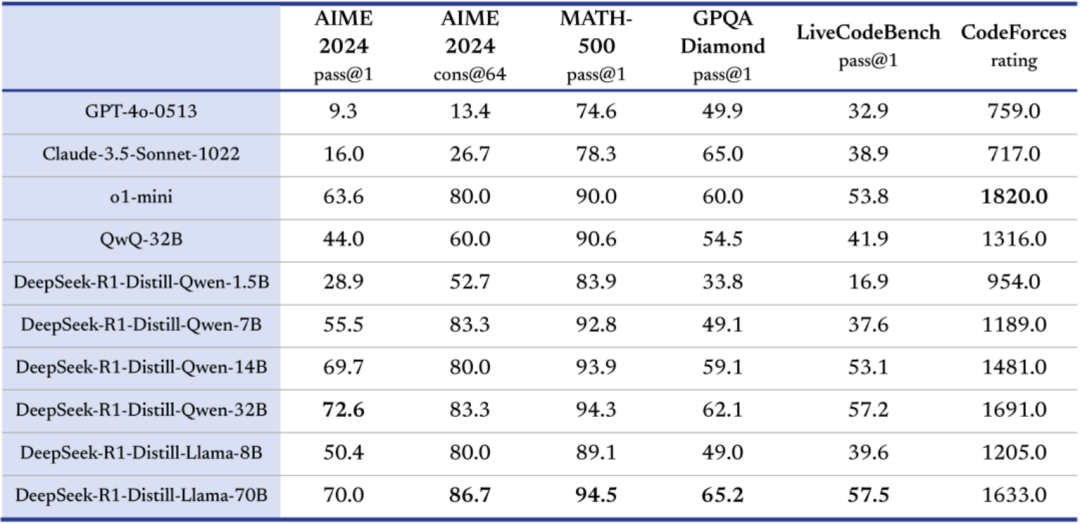

Undoubtedly, DeepSeek's recently released DeepSeek-R1 model has set off a storm of innovation in the AI field. This open-source reasoning large language model, built on the DeepSeek V3 mixture-of-experts model, performs comparably to OpenAI's cutting-edge reasoning model o1 on mathematics, programming, and reasoning tasks, at costs reportedly 90-95% lower. This breakthrough shows not only that open-source models are rapidly catching up with closed commercial models on the road toward AGI but also points to a new paradigm for AI training. DeepSeek's technical innovations show up mainly in the following areas:

First, a breakthrough in training efficiency. DeepSeek V3 reached a level close to GPT-4o using only about 2.788 million H800 GPU hours (roughly $5.6 million), a figure that shocked the industry. More importantly, they adopted an innovative "AI training AI" approach: using the R1 model to generate synthetic data that enhanced V3's capabilities.

DeepSeek V3 Evaluation
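The $5.6 million figure is simple arithmetic on GPU hours. The sketch below reproduces it under one stated assumption: a rental price of $2 per H800 GPU hour, the rate behind the commonly cited estimate. It counts rental-equivalent compute for the final training run only, not hardware purchases, earlier experiments, or staff.

```python
# Back-of-the-envelope check of the DeepSeek V3 training-cost figure.
# Assumption: H800 GPUs rented at $2.00 per GPU hour. Hardware purchase,
# prior research runs, and staff costs are NOT included.

GPU_HOURS = 2_788_000          # ~2.788 million H800 GPU hours
PRICE_PER_GPU_HOUR_USD = 2.00  # assumed rental price

def training_cost_usd(gpu_hours: float, price_per_hour: float) -> float:
    """Return the rental-equivalent cost of a training run in US dollars."""
    return gpu_hours * price_per_hour

cost = training_cost_usd(GPU_HOURS, PRICE_PER_GPU_HOUR_USD)
print(f"Estimated cost: ${cost / 1e6:.3f} million")  # Estimated cost: $5.576 million
```

This is why critics note that the headline number describes one training run's compute bill rather than the total cost of building the model.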

Second, a pioneering attempt at pure reinforcement learning. DeepSeek actually developed two R1 models: the public R1 and the more groundbreaking R1-Zero. What sets R1-Zero apart is that it does without human supervision: it skips the usual fine-tuning on human-labeled examples, does not rely on reinforcement learning from human feedback (RLHF), and learns through pure reinforcement learning instead. The model is given two rule-based rewards: one checks whether the final answer is correct, and the other checks whether the model lays out its thinking process in the required format. During training, the model generates several different answers to the same question, and each one is scored with these two rewards. In this self-directed learning process, researchers observed an intriguing phenomenon they called an "aha moment." Much like a person suddenly seeing how to crack a hard problem, the model picked up a new way of thinking during training: it would pause and re-examine the problem instead of rushing to a conclusion. This finding suggests that, given enough room to learn and the right objectives, AI can develop sophisticated reasoning on its own, without humans teaching it step by step.

However, while this fully autonomous model is capable, its reasoning is hard for humans to follow. It is like a gifted student who solves problems with self-invented methods: the answers are correct, but teachers and classmates cannot follow the steps. To address this, DeepSeek developed the more practical R1 model. They first taught it to express its reasoning in a way humans can readily understand and then let it continue learning and improving on its own. The process is akin to preserving a genius's creativity while teaching them to explain their thinking clearly.
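The idea of sampling several answers and scoring each with two rewards can be sketched as follows. This is a simplified illustration, not DeepSeek's code. The reward functions `accuracy_reward` and `format_reward` are hypothetical rule-based stand-ins, and the `<think>...</think>` plus `Answer:` output convention is an assumption made for this example. Only the group-relative scoring step is shown (each answer's reward compared with its group's mean and standard deviation, in the spirit of the GRPO method described in DeepSeek's papers); the actual model update is omitted.

```python
import re
from statistics import mean, pstdev

# Hypothetical rule-based rewards (illustrative only, not DeepSeek's code).
# Assumed output convention: reasoning inside <think>...</think>,
# followed by a line of the form "Answer: <value>".

def accuracy_reward(completion: str, reference: str) -> float:
    """Return 1.0 if the text after 'Answer:' equals the reference answer."""
    match = re.search(r"Answer:\s*(.*)", completion)
    return 1.0 if match and match.group(1).strip() == reference else 0.0

def format_reward(completion: str) -> float:
    """Return 1.0 if the reasoning is wrapped in <think>...</think> tags."""
    return 1.0 if re.search(r"<think>.+?</think>", completion, re.DOTALL) else 0.0

def group_advantages(completions: list[str], reference: str) -> list[float]:
    """Score each sampled answer, then compare it with the rest of its group.

    Each total reward is shifted by the group mean and divided by the group's
    standard deviation, so above-average answers get positive scores.
    """
    rewards = [accuracy_reward(c, reference) + format_reward(c) for c in completions]
    mu, sigma = mean(rewards), pstdev(rewards)
    if sigma == 0:
        return [0.0] * len(rewards)  # every sample scored the same: no signal
    return [(r - mu) / sigma for r in rewards]

# Four sampled answers to "What is 2 + 2?"
samples = [
    "<think>2 + 2 = 4</think>\nAnswer: 4",  # correct and well-formatted
    "<think>maybe 5?</think>\nAnswer: 5",   # wrong answer, good format
    "Answer: 4",                            # right answer, no reasoning shown
    "I am not sure.",                       # neither
]
print(group_advantages(samples, "4"))
```

The first answer earns a positive score and the last a negative one, so training pushes the model toward answers that are both correct and show their reasoning. No human grader is involved; the rules alone supply the signal.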

Furthermore, on knowledge transfer, DeepSeek found a way for small AI models to improve rapidly: have a more powerful model teach them. This is similar to a senior professor mentoring young teachers, which often works better than leaving them to figure things out on their own. The finding matters because it offers a new angle on the cost problem that holds back wider adoption of AI. Most surprisingly, small models trained this way even outperformed some much larger models on certain math benchmarks. These achievements are genuinely exciting, especially the gains in efficiency and the cuts in cost, which open new possibilities for bringing AI technology to more people.
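For readers unfamiliar with "teacher-student" training, the classic soft-label form of knowledge distillation is sketched below. It illustrates the general idea only and is not DeepSeek's procedure: DeepSeek describes its distilled models as fine-tuned on reasoning samples generated by R1, meaning the student learns from the teacher's written outputs rather than matching its probability distribution as this sketch does. The logits and the temperature of 2.0 are made-up values.

```python
import math

def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits: list[float],
                      student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # teacher's "soft targets"
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Made-up scores over three candidate answers.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]  # already agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different answer

print(distillation_loss(teacher, close_student))  # small loss
print(distillation_loss(teacher, far_student))    # much larger loss
```

Minimizing this loss pulls the student's preferences toward the teacher's, which is why a small model can absorb much of a large model's behavior without rediscovering it from scratch.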

However, before celebrating these achievements, perhaps more sober thinking is needed: Are these innovations really as perfect as they seem? Is DeepSeek's development path truly sustainable? Meanwhile, in the rapidly iterating AI field, it may be more appropriate to step back and assess these new breakthroughs with a rational and pragmatic attitude.

02

AI needs demystification; stop blindly worshipping new "gods"

While acknowledging DeepSeek's achievements, we must also clearly recognize its limitations. Yann LeCun, Meta's Chief AI Scientist, recently made an incisive point: people who see DeepSeek's strong performance and conclude that "China has surpassed the US in AI" are misreading it. The correct reading is that "open-source models are surpassing proprietary, closed-source models." He pointed out that DeepSeek benefited greatly from open research and open source, such as Meta's PyTorch and Llama, and built new ideas on top of other people's work. Because that work is published and open-source, everyone can benefit from it; this is the power of open research and the open-source spirit. The comment hits the nail on the head.

Take DeepSeek's recent model distillation work as an example: it released its Llama-based distilled models under the MIT license, which actually violates Llama's original license agreement.

DeepSeek Model Distillation Practice

Although Meta's Llama models are "open-source," they are not as unrestricted as MIT-licensed software, and DeepSeek has no right to unilaterally change their license terms. This issue not only exposes weaknesses in DeepSeek's intellectual property management and business compliance but also shows how much its development depends on contributions from the open-source community. Moreover, although DeepSeek does innovate technically, most of these innovations are optimizations and refinements within the existing technical framework rather than fundamental breakthroughs. Across the history of AI, true technological innovation has usually come from breakthroughs in basic theory and the establishment of new paradigms. From this perspective, DeepSeek's innovations remain incremental improvements along an existing path, and it still has a long way to go before achieving a true breakthrough.

Second, in practical applications, DeepSeek still faces many challenges. Commercializing large language models requires not only strong technology but also attention to system stability, data security, cost-effectiveness, and other factors. So far, DeepSeek has not been proven in large-scale commercial deployment, and its performance in complex real-world scenarios remains to be tested. For example, as its popularity has surged in recent days, a flood of ordinary users has poured in, causing multiple service outages on January 27 alone.

DeepSeek App Screenshot

Today, a major share of AI product revenue comes from enterprise applications, where stability and reliability often matter more than raw benchmark scores. From an industry perspective, AI has entered a stage of intense competition. Leading companies are not only investing continuously in technology but also actively building complete ecosystems. By comparison, DeepSeek still looks thin. An open-source strategy and technical innovation alone will hardly secure a lasting advantage in such fierce competition. Turning its technical strengths into market competitiveness and building a sustainable business model are problems DeepSeek urgently needs to solve.

Meanwhile, DeepSeek's methods have also been fed back into the open-source community, and it is evident that more companies will further develop based on its technology and theories. More importantly, we need to rethink our attitude towards AI technological innovation. In the current wave of AI development, overhyping a specific product or technical solution is a dangerous sign. Technological development is a gradual process that requires finding the optimal solution through continuous trial and error and improvement. Excessive expectations can not only bring unnecessary pressure to enterprises but also mislead the development direction of the entire industry.

Image Source: Internet

As Elvis, founder of DAIR.AI, put it: "All the conspiracy theories and over-interpretations of DeepSeek-R1 are embarrassing. We should return to DeepSeek-R1's research and AI applications: for researchers, the value of reinforcement learning; for developers, stronger model capabilities and local deployment scenarios. Don't let these false narratives blind you and make you miss the value and opportunities DeepSeek-R1 can bring. Open-source research and the open-source spirit are still thriving." This may be the truly rational and prudent attitude toward innovation: giving technology the time and space it needs to grow and focusing on the technology itself, rather than prematurely declaring victory or defeat in an ill-informed comparison, or forcibly tying a technical advance in a niche field to some grand narrative.

For DeepSeek, innovating by building on the work of its predecessors is itself proof of its capability and value. The positive response from the open-source community also confirms that this feedback loop works. What it needs to do next is broaden the applications built on its technology and keep deepening its research. More broadly, DeepSeek shows that the competition and innovation in AI technology are far from settled.

Innovation across the industry does not come solely from amassing computing power and unlimited funding. This also rekindles hope for small and medium-sized startups, since no one wants the AI industry to settle into a "winner-takes-all" market too early, as the internet industry did.