Autonomous Driving Technology: Ahead of the Curve, Yet Commercialization Still Requires Testing

02/05 2025

Autonomous driving technology stands at the forefront of a transformative shift within the global automotive industry and is widely regarded as the linchpin of future transportation. Its evolution has not only reshaped traditional car manufacturing but also opened up new applications in smart cities, logistics, and ride-sharing. From Advanced Driver Assistance Systems (ADAS) to fully autonomous driving (L5), the autonomous driving tech stack spans cutting-edge domains such as AI, machine learning, sensors, the Internet of Vehicles, and high-performance computing. These advances promise to markedly improve traffic safety and travel efficiency and to profoundly affect energy usage and urban planning.

Current State of the Autonomous Driving Industry

1. Development Stages and Market Status of Autonomous Driving

The journey of autonomous driving technology began in the 1980s, evolving through three distinct phases: the nascent stage (1980-2010), rapid development (2010-2020), and commercialization exploration (2021-present). In its early days, research focused on laboratory settings, simulating human driving via sensors and computer technology. The rapid development phase witnessed a leap from theory to preliminary application, fueled by deep learning and big data, exemplified by early tests of Tesla Autopilot and Google Waymo. Currently, the commercialization phase sees technology progressively integrated into urban roads, logistics, and other domains.

Globally, Level 2 and Level 3 systems dominate the market. Level 2 functions, including Lane Keeping Assist (LKA), Adaptive Cruise Control (ACC), and Traffic Jam Assist (TJA), are widespread in popular models. By 2023, Level 2 features were present in 38.96% of new vehicles in China and in more than 40% of new vehicles in Europe and North America. By contrast, applications at Level 3 and above remain limited; their core features are Highway Navigate on Autopilot (NOA) and Urban NOA. Chinese brands such as NIO and XPeng have made initial strides, yet market penetration of these two features stands at only 7.62% and 3.85%, respectively, which shows that the market for high-level autonomy is still nascent.

2. Market Competition Landscape and Corporate Ecosystem

The autonomous driving landscape comprises diverse players: technology firms, emerging automakers, and traditional manufacturers. This diversified ecosystem fosters technological and business model innovation.

Technology firms spearhead autonomous driving, with Google Waymo and Baidu Apollo leading the charge. They focus on Level 4 and Level 5 systems, which they commercialize through Robotaxi services. Waymo has offered driverless taxi rides in Phoenix since 2018 and holds a lead in technology and accumulated driving data. Baidu Apollo leverages China's vast road test data and has launched driverless services in Beijing, Changsha, and other cities, laying the groundwork for large-scale operations.

Emerging automakers like Tesla, XPeng, and NIO have carved out niches with flexible product strategies. Tesla's Autopilot pioneered end-to-end deep learning and relies on Over-The-Air (OTA) updates to keep improving vehicles throughout their service life. XPeng's Urban NOA epitomizes urban autonomy in China, covering 243 cities via multi-sensor fusion and high-precision mapping.

Traditional automakers adopt a phased approach. Volkswagen and Toyota leverage existing market share, using advanced driver assistance as differentiators, gradually upgrading systems through collaboration or in-house R&D. Volkswagen invested in Argo AI for Level 4, while Toyota explores Level 4+ commercialization with its Guardian system.

3. Policy and Regulation Promotion and Challenges

Regulatory advancement is crucial for autonomous driving's widespread adoption. Nations worldwide have formulated policies supporting testing and commercialization. California pioneered public road testing for Level 4 vehicles, with clear accident reporting and mileage requirements. China's "Intelligent and Connected Vehicle Access and Road Traffic Management Regulations (Trial)" provides a legal framework, while local pilot policies accelerate driverless vehicle applications, with Beijing, Shanghai, and Guangzhou offering Robotaxi testing and operational licenses.

However, rule-making faces challenges. Accident liability remains contentious, especially at Level 3, where a grey area exists between driver and system responsibility. Data privacy and cybersecurity are also prominent issues, because large-scale sensor data collection and cloud computing pose privacy risks. Legislation must evolve in step with the technology so that autonomous driving operates within a sound legal framework.

Technical Trends in Autonomous Driving

1. Multi-sensor Fusion: The Core of Perception Technology

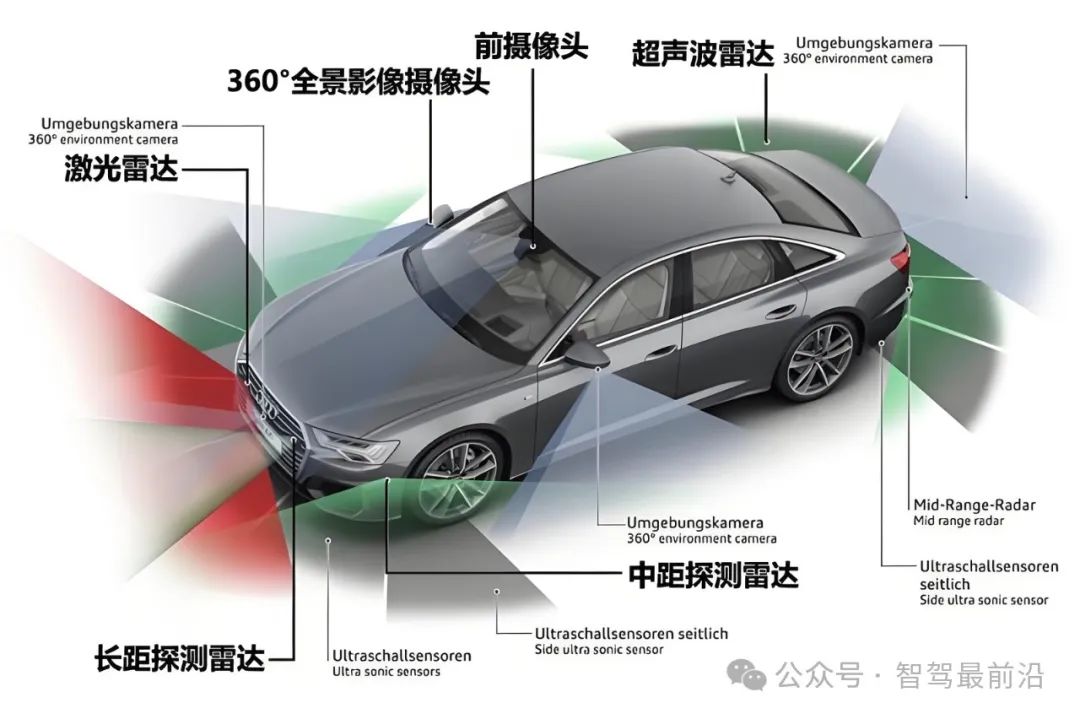

The perception system underpins autonomous driving: it determines how accurately the vehicle understands its environment and, in turn, how safely it drives. Vehicles 'perceive' their surroundings via sensors, converting real-world data into digital signals for path planning and control. No single sensor is sufficient for complex scenarios, which is why multi-sensor fusion is central.

1. Technological Progress and Challenges of LiDAR

LiDAR, the 'eye' of autonomous driving, detects distances and shapes by emitting laser pulses and using the returns to generate 3D point clouds. It excels at long-range, low-light detection and is well suited to tracking dynamic targets. LiDAR is evolving toward higher resolution and multi-beam configurations, with Velodyne and Luminar commercializing 128-beam products for enhanced accuracy.
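The pulse-to-point-cloud idea described above can be sketched in a few lines. This is a minimal illustration, not any vendor's processing pipeline: the function names, the sample returns, and the sensor-frame convention (x forward, y left, z up) are all assumptions made for the example.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def tof_to_range(round_trip_time_s: float) -> float:
    """Range from a pulse's round-trip time; the pulse travels out and back."""
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


def polar_to_point(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert one return (range, azimuth, elevation) to (x, y, z) in the sensor frame."""
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


# Hypothetical returns: (round-trip time in seconds, azimuth, elevation).
returns = [(400e-9, 0.0, 0.0), (200e-9, math.pi / 2, 0.0)]
point_cloud = [polar_to_point(tof_to_range(t), az, el) for t, az, el in returns]
```

A 400 ns round trip corresponds to a target roughly 60 m away, which shows why LiDAR timing electronics must resolve nanoseconds to achieve centimeter-level accuracy.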

However, LiDAR's high cost and mass production challenges hinder adoption. In 2020, a single unit cost thousands of dollars, and even mass-produced solid-state LiDAR still costs more than $1,000. Its performance in bright light and in rain or fog also needs improvement. Industry trends show high-end vehicles using multiple LiDAR units for redundancy and safety, while mid-range and low-end models combine LiDAR with cameras to balance cost and performance.

2. Cameras: Evolution from 2D to 3D

Cameras, which mimic human vision, are widely used in autonomous vehicles. Configurations range from monocular and binocular to multi-camera systems. Monocular cameras recognize lane lines, signs, and pedestrians in 2D images, while binocular and multi-camera systems measure depth using parallax. Deep learning-based semantic segmentation of images improves target identification in complex scenes.
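Depth from parallax follows the standard pinhole stereo relation: depth equals focal length times baseline divided by disparity. The sketch below assumes a rectified binocular pair; the function name and the sample focal length, baseline, and disparity values are illustrative, not taken from any production system.

```python
def stereo_depth(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair.

    disparity_px is the horizontal pixel shift of the same point between
    the left and right images; a larger shift means a closer object.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


# Assumed example values: 1000 px focal length, 0.5 m between the cameras.
near = stereo_depth(1000.0, 0.5, 50.0)  # 10.0 m
far = stereo_depth(1000.0, 0.5, 25.0)   # 20.0 m
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for nearby ones, which is one reason camera-only depth degrades with range.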

Tesla's Autopilot relies solely on cameras, using eight high-definition units for 360-degree coverage. It detects objects, recognizes traffic signals, and plans paths via deep learning. Because Tesla has abandoned LiDAR, however, the system depends entirely on lighting and weather conditions, which limits its stability in extreme conditions.

3. Millimeter-wave Radar and Ultrasonic Sensors

Millimeter-wave radar detects target speed and distance. It excels in high-speed scenarios, offers a range of about 200 meters, and is largely unaffected by rain or fog. It is prevalent in Adaptive Cruise Control (ACC) and collision warning systems. XPeng's Highway NOA combines millimeter-wave radar with cameras for precise distance maintenance and path prediction on busy highways.
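Radar measures speed through the Doppler effect: a target moving toward or away from the sensor shifts the frequency of the reflected wave. The sketch below assumes a 77 GHz carrier, a common automotive radar band that the article itself does not specify; the function name and sample shift are also illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def radial_velocity(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Relative radial speed (m/s) of a target from the measured Doppler shift.

    The factor of 2 accounts for the round trip: the wave is shifted once
    on the way to the target and once more on reflection.
    """
    wavelength_m = SPEED_OF_LIGHT_M_S / carrier_hz
    return doppler_shift_hz * wavelength_m / 2.0


# Assumed example: a 10 kHz shift measured by a 77 GHz radar.
closing_speed = radial_velocity(10_000.0)
```

At 77 GHz the wavelength is just under 4 mm, so even modest closing speeds produce kilohertz-scale shifts that are straightforward to measure, which helps explain radar's strength in ACC and collision warning.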

Ultrasonic sensors detect short-range obstacles, aiding parking and low-speed object detection. Despite a short range (usually < 10 meters), their low cost and flexible installation make them valuable autonomous vehicle perception tools.

4. Multi-sensor Fusion: Technical Architecture and Algorithm Optimization

Multi-sensor fusion enhances perception system performance by integrating LiDAR, camera, and millimeter-wave radar data. LiDAR provides precise distance data, cameras identify object attributes, and millimeter-wave radar supplements speed information. Fusion algorithms process this data to create a comprehensive environmental model.

Fusion methods include low-level, mid-level, and high-level fusion:

- Low-level fusion: Directly merges raw sensor data. It preserves the most information but handles high data volumes and therefore requires high computational power.

- Mid-level fusion: Optimizes combined results after independent target detection by each sensor, including target association and trajectory prediction.

- High-level fusion: Uses detection results for planning and control decisions, suitable for high real-time performance applications.
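As a rough illustration of the target-association step in mid-level fusion, the sketch below matches LiDAR detections to camera detections with greedy nearest-neighbor gating. This is a deliberately simple stand-in, not the method of any company named in this article; the function name, the 2 m gate, and the sample detections are assumptions.

```python
import math


def associate(lidar_dets, camera_dets, gate_m=2.0):
    """Attach camera class labels to LiDAR positions.

    lidar_dets:  list of (x, y) positions in a shared vehicle frame.
    camera_dets: list of (x, y, label) estimates in the same frame.
    Each camera detection is used at most once; LiDAR detections with no
    camera match inside the gate are labeled "unknown".
    """
    fused = []
    unused = set(range(len(camera_dets)))
    for lx, ly in lidar_dets:
        best, best_dist = None, gate_m
        for j in sorted(unused):
            cx, cy, _ = camera_dets[j]
            dist = math.hypot(lx - cx, ly - cy)
            if dist < best_dist:
                best, best_dist = j, dist
        if best is None:
            label = "unknown"
        else:
            label = camera_dets[best][2]
            unused.discard(best)
        fused.append({"x": lx, "y": ly, "label": label})
    return fused


# Hypothetical detections from two independently running detectors.
lidar = [(10.0, 1.0), (25.0, -3.0), (40.0, 0.0)]
camera = [(24.5, -2.8, "car"), (10.3, 1.2, "pedestrian")]
fused_objects = associate(lidar, camera)
```

The fused list keeps LiDAR's more precise positions while borrowing the camera's class labels, which mirrors the division of labor between the two sensors. Production systems typically replace the greedy loop with a globally optimal assignment and track objects over time.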

Baidu Apollo employs mid-level fusion, combining LiDAR's 3D point clouds with camera image recognition for accurate and robust target detection. XPeng uses its BEV (Bird's Eye View) model to convert multi-sensor data into a unified spatial coordinate system, providing precise environmental information for trajectory planning.
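The idea of a unified spatial coordinate system can be illustrated with a plain rigid transform from each sensor's frame into a common top-down vehicle frame. This is only the geometric core of the idea, not XPeng's BEV model, which is a learned network; the mounting positions and angles below are hypothetical.

```python
import math


def sensor_to_vehicle(point_xy, mount_xy, mount_yaw_rad):
    """Map a 2D point from a sensor's frame into the vehicle frame.

    mount_xy is the sensor's position on the vehicle and mount_yaw_rad its
    heading relative to the vehicle's forward axis (counterclockwise positive).
    """
    px, py = point_xy
    cos_yaw, sin_yaw = math.cos(mount_yaw_rad), math.sin(mount_yaw_rad)
    x = mount_xy[0] + cos_yaw * px - sin_yaw * py
    y = mount_xy[1] + sin_yaw * px + cos_yaw * py
    return (x, y)


# Hypothetical mounts: a forward LiDAR on the bumper and a left-facing camera.
from_lidar = sensor_to_vehicle((10.0, 1.0), mount_xy=(2.0, 0.0), mount_yaw_rad=0.0)
from_camera = sensor_to_vehicle((5.0, 0.0), mount_xy=(1.0, 0.9), mount_yaw_rad=math.pi / 2)
```

Once every detection is expressed in the same frame, downstream planning can reason about distances and trajectories without caring which sensor produced a given observation.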

2. Decision-making and Planning: From Modular to End-to-End Technological Evolution

1. Modular Decision-making and Planning System

Traditional autonomous driving architectures comprise modular decision-making and planning systems, including behavior prediction, path planning, and motion control. Modularity ensures clear functionality and easy optimization. The path planning module generates optimal routes based on high-precision maps and perception data, while motion control converts paths into steering, acceleration, and braking commands.
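To show how a motion control module might turn a planned path into a steering command, here is a sketch of pure pursuit, a classic geometric path-tracking method. The article does not name a specific controller, so this choice is an assumption, as are the function names, the 2.8 m wheelbase, and the sample path. Points are expressed in the vehicle frame (origin at the rear axle, x forward, y left).

```python
import math


def pick_target(path, lookahead_m):
    """Return the first path point at least lookahead_m away from the vehicle."""
    for point in path:
        if math.hypot(point[0], point[1]) >= lookahead_m:
            return point
    return path[-1]  # path shorter than the lookahead: aim at its end


def pure_pursuit_steering(target_xy, wheelbase_m):
    """Front-wheel steering angle (rad) that arcs the vehicle through the target.

    Uses the kinematic bicycle model; positive angles steer left.
    """
    tx, ty = target_xy
    lookahead_sq = tx * tx + ty * ty
    if lookahead_sq == 0.0:
        raise ValueError("target must differ from the vehicle position")
    curvature = 2.0 * ty / lookahead_sq  # curvature of the arc through the target
    return math.atan(wheelbase_m * curvature)


# Hypothetical planned path curving gently to the left.
path = [(2.0, 0.1), (5.0, 0.5), (10.0, 2.0), (15.0, 4.0)]
target = pick_target(path, lookahead_m=8.0)
steering_rad = pure_pursuit_steering(target, wheelbase_m=2.8)
```

A longer lookahead distance gives smoother but less precise tracking, so practical controllers often scale it with vehicle speed.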

However, modular design has limitations. Optimizing each module independently does not guarantee the best overall performance, and errors introduced as data passes between modules can be amplified in complex scenarios, degrading the system as a whole.

2. The Rise of End-to-End Models

End-to-end deep learning models simplify the traditional architecture by mapping sensor data directly to control commands. Trained on massive datasets, a neural network handles the entire chain from perception to control. XPeng's BEV+Transformer model uses end-to-end learning, leveraging the Transformer structure to capture global environmental features and generate high-precision trajectory plans.

End-to-end models offer flexibility and adaptability, and they improve through continuous training, which is especially valuable in dynamic urban environments. However, their interpretability is poor and they depend heavily on data quality, which has fueled industry debate about their safety and reliability.

3. Application of Reinforcement Learning in Decision Systems

Reinforcement learning, a family of algorithms that mimics how animals learn through trial and error, has seen widespread adoption in autonomous driving decision systems in recent years. Guided by a reward function, these models train autonomously in simulated driving environments and progressively optimize their driving behavior. Waymo, for instance, employs a reinforcement learning-driven path planning algorithm in its driverless system, enabling more efficient navigation through complex intersections.
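The reward-driven training loop can be illustrated with tabular Q-learning on a toy problem. This is a teaching sketch under stated assumptions, not the algorithm used by Waymo or any other company: the five-cell "approach lane", the reward values, and the hyperparameters are all invented for the example.

```python
import random

N_STATES = 5           # positions 0..4 along a hypothetical approach lane
GOAL = N_STATES - 1    # clearing the intersection
ACTIONS = (0, 1)       # 0 = wait, 1 = advance


def reward(next_state):
    """Time penalty on every step, plus a bonus for reaching the goal."""
    return -1.0 + (10.0 if next_state == GOAL else 0.0)


def step(state, action):
    """Deterministic toy simulator: returns (next_state, reward, done)."""
    next_state = min(state + action, GOAL)
    return next_state, reward(next_state), next_state == GOAL


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Epsilon-greedy Q-learning; returns the learned Q-table."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(50):  # cap the episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])  # exploit
            next_state, r, done = step(state, action)
            target = r if done else r + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train()
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(GOAL)]
```

Because waiting only accumulates the time penalty, the learned policy advances from every position. Real driving rewards must additionally balance safety, comfort, and traffic rules, which is where most of the design difficulty lies.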

In practical applications, the primary challenges of reinforcement learning center around training efficiency and generalization capability. Given the complexity and variety of real-world road scenarios, creating efficient simulation environments and accelerating training speed are at the forefront of current research endeavors.

Business Models and Industrial Chain Layout of Autonomous Driving

1. Transition from Hardware to Software: Evolution of Profit Models

The business model for autonomous driving technology is evolving, shifting from hardware sales to software subscriptions and services. Tesla's FSD subscription service exemplifies this shift, generating sustained revenue through continuous OTA updates of driving functionalities. Additionally, robotaxi services and logistics fleet operations are emerging as promising business models in the autonomous driving landscape.

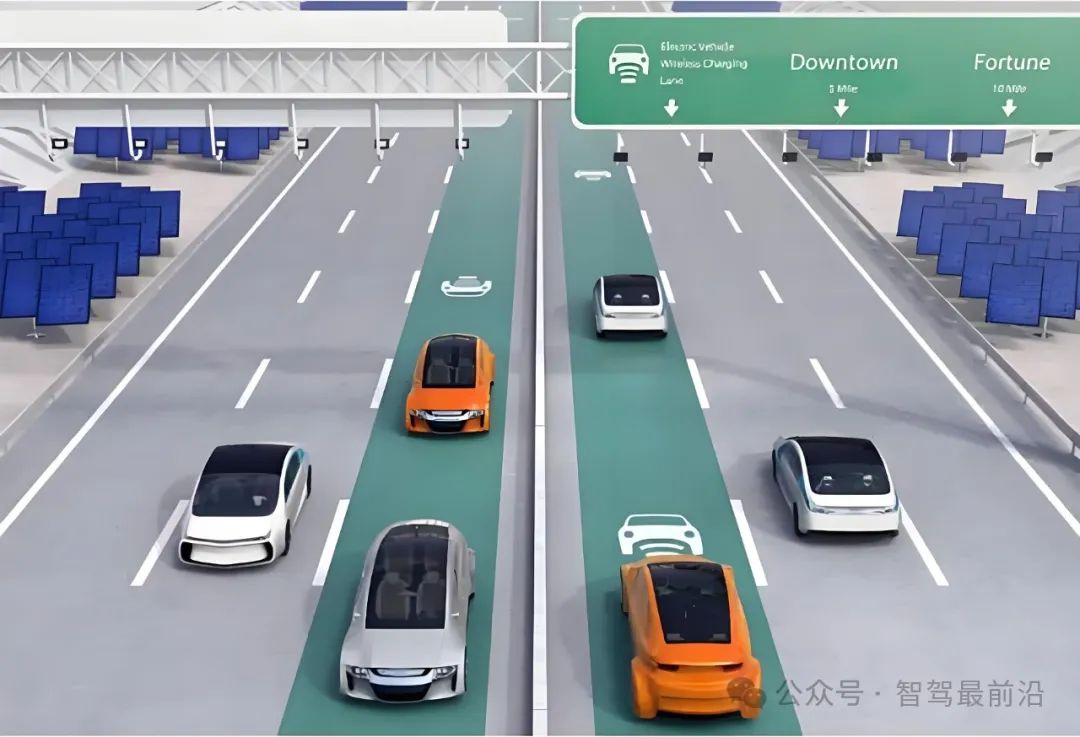

2. Intelligent Connectivity and Vehicle-Road Coordination: Future Industrial Chain Collaboration

Vehicle-to-Everything (V2X) technology significantly enhances the safety and efficiency of autonomous driving through seamless vehicle-road coordination. Numerous cities in China, such as Guangzhou, have established intelligent road test zones, enabling real-time transmission of traffic light data, which in turn provides autonomous vehicles with more precise driving routes.

Conclusion

The ascendancy of autonomous driving technology heralds a new era of intelligence in the automotive industry. Through technological advancements, multi-scenario applications, and innovative business models, autonomous driving is progressively transforming how we travel. Nonetheless, its widespread adoption still requires addressing various challenges, including technological, regulatory, and cost issues. As the industrial chain matures and the technology is further optimized, autonomous driving is poised not only to become ubiquitous in personal transportation but also to play a pivotal role in smart city construction and logistics transformation.
