NVIDIA Embraces DeepSeek Amid Stock Decline

02/05 2025

Even though DeepSeek triggered a 17% single-day plunge in NVIDIA's stock price, the tech giant has chosen to embrace the future.

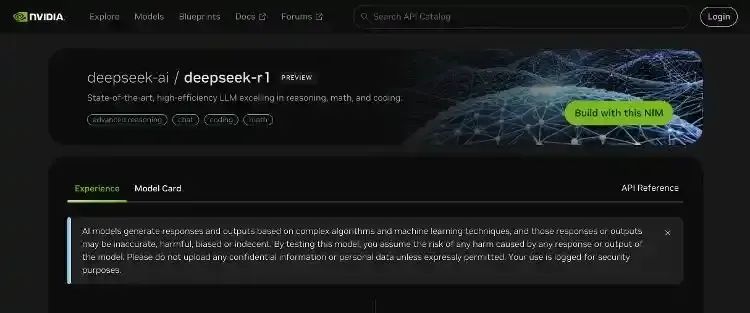

NVIDIA's official website announces that the DeepSeek-R1 model is now available to developers as a preview NVIDIA NIM microservice. NVIDIA describes DeepSeek-R1 as the most advanced and efficient large language model, excelling at reasoning, mathematics, and coding.
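
Developers call a hosted NIM microservice over HTTP. The sketch below only builds a chat-completion request and does not send it. It assumes NVIDIA's hosted endpoint follows the OpenAI-compatible chat format; the base URL `https://integrate.api.nvidia.com/v1`, the model ID `deepseek-ai/deepseek-r1`, and the helper name `build_chat_request` are assumptions to verify against NVIDIA's current documentation.

```python
import json

# Assumed values: check both against NVIDIA's current NIM documentation.
NIM_BASE_URL = "https://integrate.api.nvidia.com/v1"
MODEL_ID = "deepseek-ai/deepseek-r1"


def build_chat_request(prompt: str, api_key: str, max_tokens: int = 1024,
                       temperature: float = 0.6) -> tuple[str, dict, bytes]:
    """Hypothetical helper: return (url, headers, body) for an OpenAI-style chat call."""
    url = f"{NIM_BASE_URL}/chat/completions"
    headers = {
        "Authorization": f"Bearer {api_key}",  # API key issued by NVIDIA
        "Content-Type": "application/json",
    }
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,  # illustrative sampling temperature
    }
    return url, headers, json.dumps(payload).encode("utf-8")


url, headers, body = build_chat_request("Prove that sqrt(2) is irrational.", "YOUR_API_KEY")
```

To actually send it, the three values can be passed to `urllib.request.Request(url, data=body, headers=headers, method="POST")` and `urllib.request.urlopen`; in an OpenAI-style response, the reply text would sit under `choices[0]["message"]["content"]`.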

For DeepSeek itself, the impact has been significant.

In the US AI community, DeepSeek has created a major buzz, with virtually every AI-related company mentioning it. Even on Meta's earnings call, DeepSeek featured prominently in discussions and comparisons.

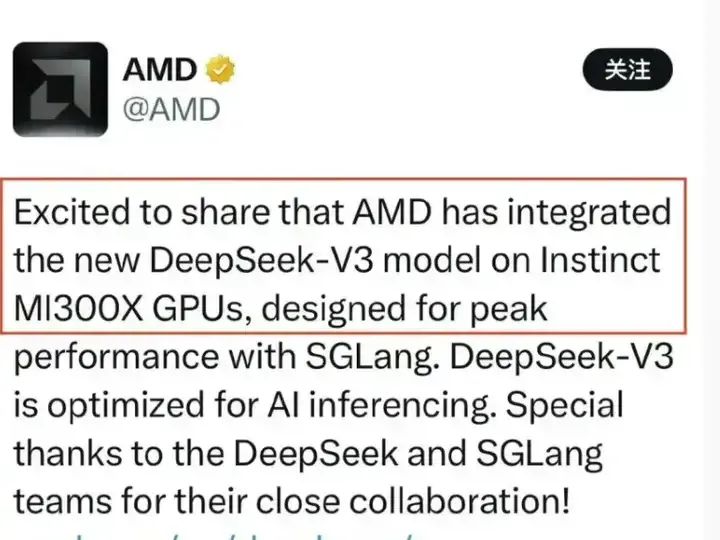

NVIDIA's long-standing rival AMD has publicly taken a jab at the situation, claiming that running DeepSeek inference on AMD cards can cut costs by 30%.

For NVIDIA, the situation has taken something of a dramatic twist.

On one hand, DeepSeek's extremely low training cost (about 2,000 H800s, a cut-down, export-restricted variant of the H100) undermines NVIDIA's vision that AI progress requires stacking ever more computational power.
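
The "extremely low training cost" is usually quoted from the DeepSeek-V3 technical report (V3 is the base model that R1 was built on), which counts about 2.788 million H800 GPU-hours on a 2,048-GPU cluster and prices them at an assumed rental rate of $2 per GPU-hour. A back-of-the-envelope version of that arithmetic, with hypothetical helper names, might look like this:

```python
def training_cost_usd(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Rental-equivalent cost of a training run: GPU-hours times hourly price."""
    return gpu_hours * usd_per_gpu_hour


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Idealized wall-clock days if the GPU-hours are spread evenly over num_gpus."""
    return gpu_hours / num_gpus / 24


# Figures from the DeepSeek-V3 technical report; $2/GPU-hour is the report's assumed rate.
GPU_HOURS = 2_788_000
NUM_GPUS = 2_048
RATE_USD = 2.0

cost = training_cost_usd(GPU_HOURS, RATE_USD)
days = wall_clock_days(GPU_HOURS, NUM_GPUS)
print(f"${cost / 1e6:.3f}M over ~{days:.0f} days")  # prints: $5.576M over ~57 days
```

The figure covers only the final training run at rental prices; it excludes hardware purchases and earlier research and ablation experiments, so it is a lower bound rather than DeepSeek's total spending.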

On the other hand, because DeepSeek is open source, it has attracted a flood of corporate and individual developers and could grow into a thriving, expansive ecosystem, akin to the Linux of the large-model field. For application-side enterprises and individuals, this means they don't need to reinvent the wheel; they can build directly on DeepSeek's open-source ecosystem. As this ecosystem grows rapidly, these enterprises and individuals may end up needing large numbers of computing cards for inference.

However, this second scenario is not what NVIDIA originally envisioned; at best it is a "second-best option." NVIDIA's original plan was for everyone to build their own models and buy massive numbers of computing cards for training, because training is where CUDA forms the true moat. Inference needs comparatively fewer cards, and those cards do not necessarily have to be NVIDIA's.

Once alternatives enter the market, NVIDIA cannot sustain its high profit margins. Why not run inference on AMD cards with large video memory? Huawei's Ascend is another viable option.

These are the market's doubts about NVIDIA, and they ultimately led to the 17% single-day drop in its stock price.

By offering DeepSeek, NVIDIA is clearly stepping up its promotion to attract more corporate and individual developers to the DeepSeek open-source community and to secure a leading position there, thereby selling more cards.

However, I personally doubt how much this will help NVIDIA. Judging by the market's reaction, NVIDIA's stock price has kept falling, with a further 3.67% drop.

When ChatGPT emerged, my view was that it was a case of brute force producing a miracle. Microsoft and Google didn't come up with it because listed giants like them cannot afford huge investments with uncertain outcomes. OpenAI's greatness lay in its willingness to invest billions of dollars to produce a result: proof that the path pointed in the right direction.

With DeepSeek's emergence, the era of simply stacking hardware for more computational power is gradually coming to an end, and the path of reducing hardware dependence through technical optimization of large models has only just begun.

In recent days, DeepSeek has dominated Hugging Face, with third-party quantized models appearing one after another, and enthusiasts have even run it on a Raspberry Pi.
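
Quantized models shrink a model by storing each weight in fewer bits. As a minimal sketch, here is the simplest form, symmetric per-tensor int8 quantization, in plain Python. Third-party DeepSeek releases often use more elaborate block-wise schemes (such as the quantization types in llama.cpp's GGUF files), so this illustrates the idea rather than how any specific release was made; the function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One shared scale maps the largest magnitude onto 127; avoid dividing by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 codes and the shared scale."""
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.03, 0.5]
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)
```

Each int8 code takes one byte instead of the two bytes used by FP16/BF16, halving weight memory at the cost of a rounding error of at most half the scale per weight.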

Performance is not yet optimal, but with the collective efforts of countless open-source enthusiasts worldwide, it won't be long before an open-source large model can run inference, or even be trained, at extremely low computational cost.

For example, when Black Forest Labs first released Flux, it was impressive but resource-hungry, barely running on an RTX 4090 with 24GB of video memory. Because it is open source, developers have since optimized it to run smoothly with 8GB or even 6GB of video memory.
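
A rough weight-memory estimate shows why lower precision is one common route from 24GB to single-digit gigabytes. This sketch assumes Flux.1's commonly cited size of about 12 billion parameters and counts the weights alone; it ignores activations, the text encoders, the VAE, and CPU-offloading tricks, so treat it as a lower bound. The function name is hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


FLUX_PARAMS = 12e9  # assumption: Flux.1 is commonly cited at about 12 billion parameters

for label, bits in [("BF16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: {weight_memory_gb(FLUX_PARAMS, bits):.0f} GB")
# BF16: 24 GB
# 8-bit: 12 GB
# 4-bit: 6 GB
```

Applying the same estimate to the full 671-billion-parameter DeepSeek-R1 gives about 335 GB even at 4 bits, far beyond a single consumer card.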

Once open-source enthusiasts have optimized large models like DeepSeek to the point where consumer-grade graphics cards can run inference, NVIDIA's bubble will burst.