DeepSeek's AI Robots Steal the Show with Yangko Dance, Making This Spring Festival Unforgettable

02/06 2025

Original by Digital Economy Studio

Author | Uncle You

WeChat ID | yds_sh

As the Year of the Snake arrived and the Spring Festival bade farewell to the old and welcomed the new, DeepSeek burst onto the scene and AI robots performed the yangko dance at the Spring Festival Gala. These industry-leading "mysterious Eastern powers" sent shockwaves through the global technology community.

Single-handedly, DeepSeek pushed down the valuations of the AI technology giants.

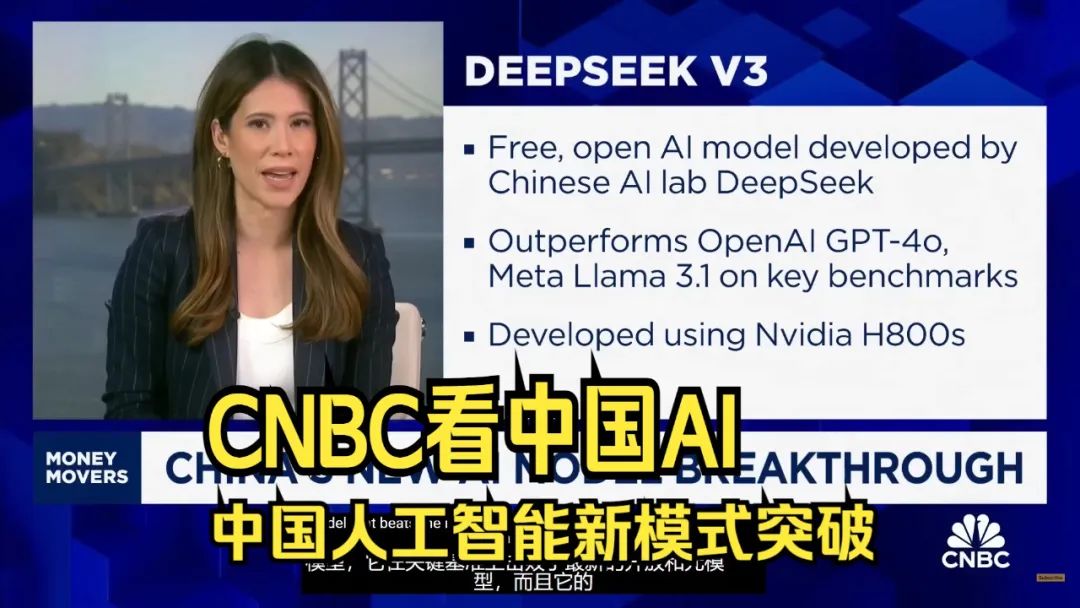

DeepSeek (Deep Search), a large-model company backed by the quantitative investment giant Huanfang Quant (known in English as High-Flyer), officially released its reasoning model DeepSeek-R1 on January 20. On January 27, the DeepSeek app topped the free app download charts in Apple's App Store in both China and the United States, ranking ahead of AI products such as OpenAI's ChatGPT and Google Gemini. This was the first time an app from a Chinese technology company had topped both charts.

The shockwaves soon grew stronger. On January 27, U.S. time, NVIDIA (Nasdaq: NVDA) shares plunged 16.86% to close at $118.58, their lowest level since October of the previous year. The company's market value fell to $2.90 trillion, with $590 billion (approximately RMB 4.28 trillion) wiped out in a single day, the largest one-day loss of market value for a single stock on record. Led by NVIDIA, U.S. chip and AI-related stocks fell together: Oracle dropped 13.78%, AMD 12.49%, chipmaker Broadcom 17.4%, and TSMC 13%.
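The figures quoted above can be cross-checked with simple arithmetic. The sketch below uses only the numbers in the paragraph; the variable names are illustrative, and the pre-drop market value is an assumption derived by adding the reported loss back to the closing value.

```python
# Figures quoted in the article, in billions.
LOSS_USD_BN = 590          # market value wiped out in one day
CAP_AFTER_USD_BN = 2900    # market value at the close ($2.90 trillion)
LOSS_RMB_BN = 4280         # the same loss expressed in RMB

# Assumption: value before the drop = closing value + reported loss.
cap_before_usd_bn = CAP_AFTER_USD_BN + LOSS_USD_BN

# Percentage drop implied by the market-value figures.
implied_drop_pct = LOSS_USD_BN / cap_before_usd_bn * 100

# Exchange rate implied by the dollar and RMB loss figures.
implied_rmb_per_usd = LOSS_RMB_BN / LOSS_USD_BN

print(f"Implied drop: {implied_drop_pct:.1f}%")
print(f"Implied exchange rate: {implied_rmb_per_usd:.2f} RMB per USD")
```

The implied drop of roughly 16.9% agrees with the quoted 16.86% share-price fall to within the rounding of the market-value figures.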

Why did these technology giants "crash" in the stock market? Because DeepSeek built a high-performance large model that is open source and far cheaper to train, which raised concerns in the capital markets about the valuations of the AI technology giants.

First, open source. The American AI ecosystem built by companies such as Microsoft, OpenAI, and NVIDIA rests on the premise that models stay closed for commercialization: the business loop is completed by charging for the AI model itself or by building it into paid products. DeepSeek-R1, by contrast, is an open-source reasoning model. Under its license, developers worldwide may freely use, modify, and distribute the model, and may also build derivative works and use it commercially.

Beyond commercial considerations, DeepSeek's open-source approach poses another huge challenge to OpenAI: once the capabilities of open-source products approach or even surpass those of closed-source products, the impact on the closed-source products is enormous. One of the core reasons behind the surge in DeepSeek downloads is precisely open source: users can deploy DeepSeek-R1 on their own servers or in the cloud for free, significantly reducing (or even eliminating) API call costs.

The second astonishing aspect of DeepSeek is its extremely low pre-training cost. DeepSeek-V3, which performs on par with GPT-4o, had a reported training cost of about $5.58 million, less than 1/20 of GPT-4o's, and was trained on a cluster of only 2,048 NVIDIA H800 GPUs in just 53 days. For a model at the same level, even the top companies in the global AI first tier need at least 16,000 GPUs for training.
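To put these figures in perspective, the sketch below converts them into GPU-hours and an implied cost per GPU-hour. It assumes, purely for illustration, that all 2,048 GPUs ran around the clock for the full 53 days; the variable names are not from the source.

```python
# Figures quoted in the article.
GPUS = 2048                    # GPUs in the training cluster
DAYS = 53                      # reported training time
TRAINING_COST_USD = 5_580_000  # reported cost
LARGE_CLUSTER_GPUS = 16_000    # minimum cluster size cited for top-tier labs

# Assumption: every GPU is busy 24 hours a day for the whole run.
gpu_hours = GPUS * DAYS * 24

# Cost per GPU-hour implied by the reported total.
cost_per_gpu_hour = TRAINING_COST_USD / gpu_hours

# How many times larger the cited top-tier cluster is.
cluster_ratio = LARGE_CLUSTER_GPUS / GPUS

print(f"GPU-hours: {gpu_hours:,}")
print(f"Implied cost per GPU-hour: ${cost_per_gpu_hour:.2f}")
print(f"Top-tier cluster is {cluster_ratio:.1f}x larger")
```

Under that assumption the run amounts to about 2.6 million GPU-hours at a little over $2 per GPU-hour, on a cluster roughly one-eighth the size of the 16,000-GPU figure.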

DeepSeek, which has significantly reduced technical costs, has had a strong impact on the computing power market. The man behind it has also come into the spotlight.

A quant private-fund tycoon who turned the tables and sparked a large-model price war.

On January 20, 2025, Liang Wenfeng, the founder of the AI startup DeepSeek, was invited to attend a symposium organized by relevant departments, where he spoke. No fewer than five major domestic companies are working on general-purpose large AI models, yet Liang Wenfeng was the only entrepreneur invited to represent the AI field. DeepSeek-R1 was released that same day, and Liang Wenfeng immediately drew public attention.

Liang Wenfeng was born in 1985 into an ordinary family in Wuchuan, Zhanjiang, Guangdong Province; both of his parents were primary school Chinese teachers. This teenager from a fourth-tier city was particularly interested in mathematics, finishing the high school mathematics curriculum while still in junior high and even starting on university mathematics.

In 2002, the 17-year-old Liang Wenfeng was admitted to the undergraduate program in Electronic Information Engineering at Zhejiang University, ranking first in his school, and in 2007 he entered the university's graduate program in Information and Communication Engineering. Two things from his university years changed his life: he fell in love with machine learning and became fascinated by quantitative trading.

After graduation, Liang Wenfeng and his classmates began to accumulate market data and explore fully automated quantitative trading. In 2015, when others were still frightened by the ups and downs of the stock market, 30-year-old Liang Wenfeng founded Huanfang Technology in Hangzhou, devoting himself to fully automated quantitative trading and aspiring to become a world-class quantitative hedge fund.

Although the company had only 10 GPUs at the time, in October 2016 Huanfang Quant launched its first AI model, and the first trading position generated by deep learning went live. By the end of 2017, almost all of its quantitative strategies were computed with AI models. With the support of AI, the company reached a scale of 10 billion yuan in four years and passed the 100 billion yuan mark two years after that.

From the beginning, making money from investments was not Liang Wenfeng's only goal; earning enough money allowed him to do more research on artificial intelligence. In 2019, Huanfang Quant established an AI company. Its self-developed deep learning training platform "Firefly One" had a total investment of nearly 200 million yuan and was equipped with 1,100 GPUs; two years later, investment in "Firefly Two" rose to 1 billion yuan, with about 10,000 NVIDIA A100 cards. It is generally believed that 10,000 NVIDIA A100 chips are the computing-power threshold for training a large model in-house, so in terms of raw computing power Huanfang secured its entry ticket to the ChatGPT era earlier than many large companies.

Building on this foundation, Liang Wenfeng founded DeepSeek in July 2023 to build a large AI model. "Now that we're entering the field, how can we compete with industry giants like OpenAI?" Faced with such doubts, Liang Wenfeng, who firmly believes that "artificial intelligence will definitely change the world," did not explain much. In less than a year, the doubters fell silent, and only admiration remained.

In May 2024, DeepSeek released DeepSeek-V2, which quickly became known as the "Pinduoduo of the AI world" thanks to its innovative model architecture and unprecedented cost-effectiveness. Liang Wenfeng, who opposes the cutthroat competition among large companies, repeated in the AI field the "come from behind" story of Pinduoduo founder Huang Zheng, sparking a price war among large models in China that drove prices down significantly.

Luo Fuli, one of the key developers of DeepSeek-V2, once wrote on social media that "in terms of the Chinese language proficiency of the DeepSeek-V2 model alone, it is truly in the first tier of domestic and foreign closed-source models" and that "with a price of only 1 yuan per million input tokens, which is only 1/100 of the GPT-4 price, it is the king of cost-effectiveness." Luo Fuli is the "genius girl" who was previously rumored to have been offered an annual salary of ten million yuan by Xiaomi founder Lei Jun.
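The pricing claim in the quote can be turned into a small worked example. The sketch below takes the 1 yuan per million input tokens and the 1/100 ratio from the quote; the GPT-4 price is only the figure implied by that ratio, and the 50-million-token workload is a hypothetical value chosen for illustration.

```python
# Figures from the quoted post.
DEEPSEEK_V2_YUAN_PER_M = 1.0   # yuan per million input tokens
GPT4_PRICE_MULTIPLE = 100      # quote: DeepSeek-V2 is 1/100 of the GPT-4 price

def input_cost_yuan(tokens: int, yuan_per_million: float) -> float:
    """Cost in yuan of sending `tokens` input tokens at the given price."""
    return tokens / 1_000_000 * yuan_per_million

# GPT-4 input price implied by the quoted ratio (not an official price list).
implied_gpt4_yuan_per_m = DEEPSEEK_V2_YUAN_PER_M * GPT4_PRICE_MULTIPLE

# Hypothetical workload: 50 million input tokens.
workload_tokens = 50_000_000
deepseek_cost = input_cost_yuan(workload_tokens, DEEPSEEK_V2_YUAN_PER_M)
gpt4_cost = input_cost_yuan(workload_tokens, implied_gpt4_yuan_per_m)

print(f"DeepSeek-V2: {deepseek_cost:.0f} yuan, implied GPT-4: {gpt4_cost:.0f} yuan")
```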

Liang Wenfeng's approach to hiring is also very interesting: no returnees, only local engineers; no veterans, preferring fresh graduates; no KPIs, just working based on interest. He said, "An exciting thing may not be measured purely by money. It's like buying a piano at home—one because you can afford it, and two because there is a group of people eager to play music on it."

Overtaking on the curve by overturning the underlying technology roadmap.

From a technical perspective, the rise of DeepSeek, and especially the success of the latest-generation DeepSeek R1, stems from the reinforcement learning (RL) strategy it adopts. This is the fundamental reason it can achieve results similar to GPT-4o at an extremely low cost.

Traditional AI, represented by GPT, essentially plays "a guessing game under human selection": GPT models do not actually think, but are trained on data to generate things that "seem reliable but do not hold up under scrutiny." For example, early image-generation AIs would draw six fingers on human hands because the AI did not know how many fingers humans have; it could only produce something that "looks about right" after training on large amounts of data. Humans would then screen out the unreliable results to obtain the final work.

DeepSeek, by contrast, abandons this "guessing" training method and instead adopts reinforcement learning (RL), a strategy commonly used in Go and autonomous driving. If the previous strategy was humans telling the AI what is right and what is wrong, the RL strategy lets the AI learn to understand the world, grasp the laws of things, and explore through reasoning more autonomously.
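The difference between being told the right answer and learning from a reward signal can be illustrated with a toy sketch. The example below is not DeepSeek's training method; it is plain tabular Q-learning on a hypothetical five-cell corridor, where the agent is never told which move is correct and only receives a reward on reaching the goal. All names (`step`, `train`, `greedy_policy`) and parameter values are assumptions chosen for illustration.

```python
# Toy corridor: states 0..4, the goal is state 4. Actions: 0 = left, 1 = right.
N_STATES = 5
GOAL = N_STATES - 1
ACTIONS = (0, 1)

def step(state, action):
    """Environment: move one cell; reward 1.0 only when the goal is reached."""
    nxt = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward

def train(sweeps=50, alpha=0.5, gamma=0.9):
    """Tabular Q-learning, visiting every (state, action) pair in each sweep."""
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(sweeps):
        for s in range(GOAL):  # the goal state is terminal, so it is skipped
            for a in ACTIONS:
                nxt, r = step(s, a)
                future = 0.0 if nxt == GOAL else max(q[nxt])
                # Move the estimate toward reward plus discounted future value.
                q[s][a] += alpha * (r + gamma * future - q[s][a])
    return q

def greedy_policy(q):
    """Pick the highest-valued action in every non-terminal state."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(GOAL)]

q_table = train()
policy = greedy_policy(q_table)
print(policy)
```

No state is ever labeled with a correct action; the preference for moving right emerges only because the reward at the goal propagates backward through the value estimates.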

Under the traditional technical path, 90% of computing power is consumed in the trial-and-error process, while DeepSeek's autonomous learning mechanism can reduce ineffective training by 60%. Due to the subversion in the underlying technology roadmap, DeepSeek R1 also greatly reduces operating costs—compared to the billions of dollars of investment and superclusters of tens of thousands of graphics cards often seen in Silicon Valley, this domestic large model achieves similar or even better results with just over 2,000 graphics cards and a cost of around $6 million.

For China's startups, a more critical point is that the RL strategy reduces the demand for parallel computing by 40% compared to traditional architectures, which directly breaks the American AI path of stacking computing power and data, making it possible for domestic graphics cards and chips to replace overseas giants like NVIDIA.

Seen this way, the significance of DeepSeek's rise is more than technical: a private company born in China, with a team of no more than 200 young local engineers, bypassed the "proven path" explored by the Americans, adopted innovative ideas and open-source methods, and achieved remarkable results at an extremely low cost, overtaking on the curve.

Of this "mysterious Eastern power" in the eyes of Silicon Valley, Scale AI founder Alexandr Wang said, "Over the past decade, the United States may have been ahead of China in the AI race, but the release of DeepSeek's AI large model could 'change everything.'"

In closing...

The emergence of DeepSeek has left Meta and ChatGPT, which boasted of being at least 10 years ahead, somewhat at a loss. Stargate, the astronomically expensive project dubbed "Star Wars 2.0," looks as though it could be shut down before it even opens. U.S. President Trump said at a meeting that the emergence of DeepSeek has sounded the alarm for American companies: "We need to concentrate on winning the competition." One day later, according to foreign media reports, multiple U.S. officials accused DeepSeek of "theft" and said a national security investigation into it was under way.

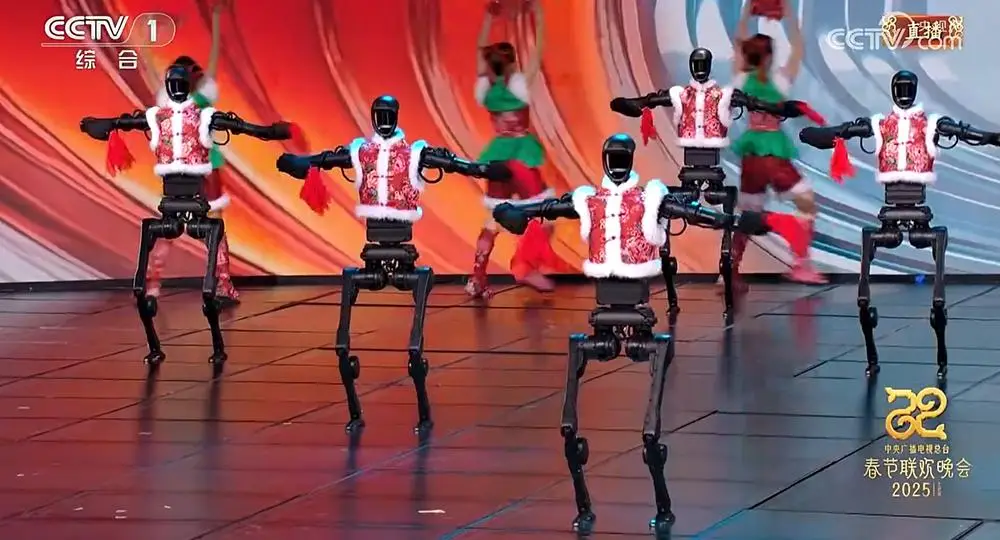

Also shocking the world on New Year's Eve was Unitree Robotics, also from Hangzhou. At the CCTV Spring Festival Gala, the humanoid robot H1 from Unitree Robotics performed an AI robotic yangko dance and could even twirl handkerchiefs from multiple angles.

These robots are equipped with several sets of advanced technology, such as high-precision 3D laser SLAM for autonomous positioning and navigation, multi-agent collaborative planning, advanced networking, and full-body AI motion control, allowing them to walk steadily on stage and move in unison as if copied and pasted. These technologies not only give them ultra-precise positioning and ultra-stable connections but also enable them to handle various emergencies. It can be said that this was the first large-scale, fully AI-driven, automated humanoid robot cluster performance in human history. Boston Dynamics robots, unrivaled in previous years, seem to have been caught up by Unitree in just a few moves.

From DJI and Unitree to the groundbreaking sixth-generation aircraft and DeepSeek, high-tech enterprises that are rewriting the world's technological landscape keep emerging in the great eastern country. The world can hardly keep up, and some countries have even been driven to underhand countermeasures.

"China will undoubtedly require individuals to spearhead technological advancements," Liang Wenfeng remarked in a past interview, reflecting on the past three decades of the IT revolution, during which China largely remained on the sidelines of genuine technological innovation. "We perceive that the paramount priority now is to immerse ourselves in the tide of global innovation," said Liang, often viewed as an idealist by outsiders. "For numerous years, Chinese enterprises have grown accustomed to leveraging technological innovations developed by others, converting them into profitable applications. However, this should not be taken for granted. In this current wave, our primary objective is not to seek immediate profits, but rather to push to the forefront of technology and foster the growth of the entire technological ecosystem."