315 Crackdown on Fakes: Time to Tackle Scams Uncovered by AI | Voices of 315

03/14 2025

"Malicious" AI Still Requires Human Intervention

Original by You Dian Shu - Digital Economy Studio

Author | You Shu

WeChat ID | yds_sh

A well-known celebrity is discussing education on a short-video platform when an advertisement suddenly cuts in: "Feed your child Brand X milk powder from a young age, and they'll grow up smart and strong." The facial expressions and lip movements don't quite match, yet the voice is uncannily similar. Only on close inspection does one realize it is the work of "AI face-swapping and voice mimicry."

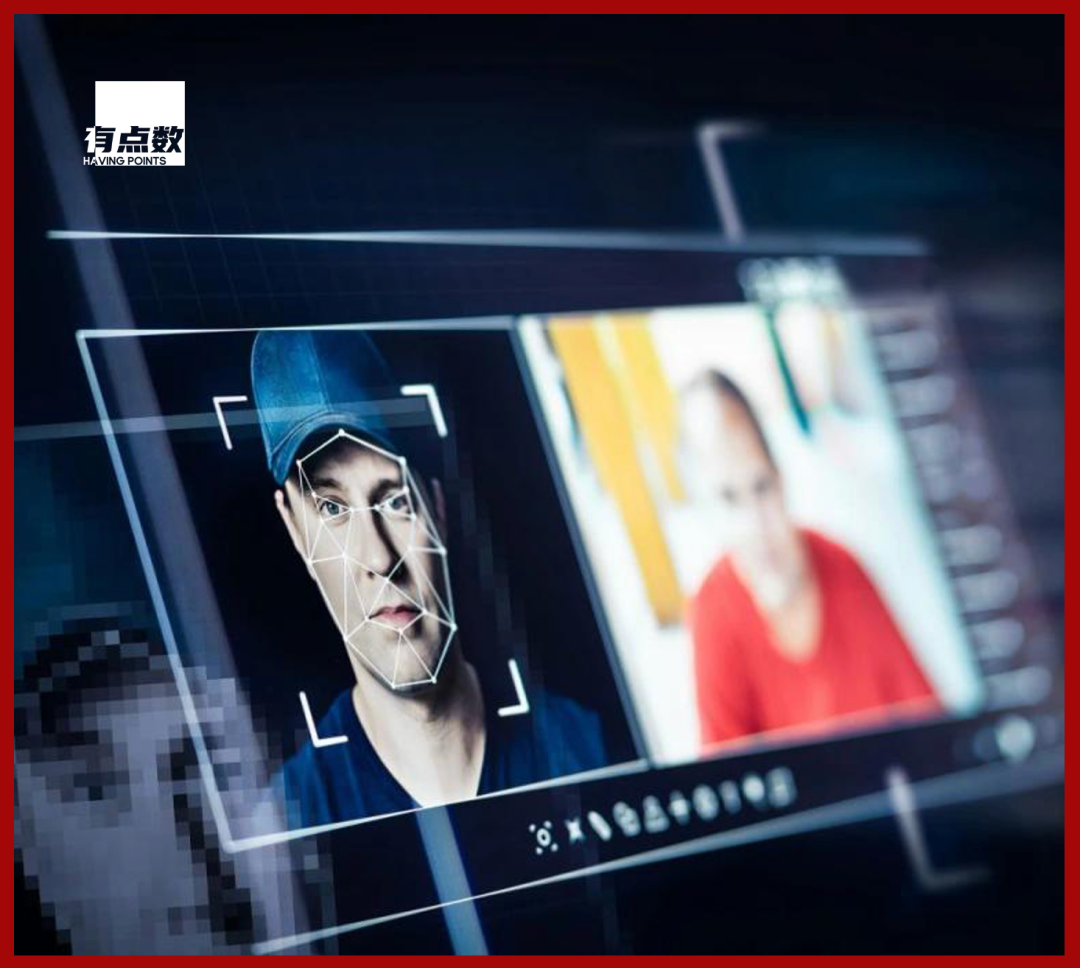

As AI technology advances, it brings convenience to our daily lives but also opens doors for criminals. Fraud and infringement using "AI face-swapping and voice mimicry" are becoming increasingly common. With 315 International Consumer Rights Day approaching, let's take a closer look at the consumer chaos fueled by artificial intelligence.

How should we respond when fraud combines with AI technology?

"Zhang Wenhong" Selling Goods on Livestream? AI Fuels an "Information Locust Plague"

Renowned infectious disease expert Zhang Wenhong turned late-night livestreamer, hard-selling a particular brand of protein bar?

In a video recently posted by a vlogger, "Doctor Zhang Wenhong" enthusiastically endorsed a protein bar. Many netizens believed it, and nearly 1,200 units sold in a short time.

In response to this blatant infringement, Zhang Wenhong later clarified that the video was AI-synthesized: "I reported the AI-synthesized video as soon as I discovered it, but there were multiple accounts selling this way, and they kept changing. I complained to the platform many times, but it kept happening, and I eventually ran out of steam."

In these livestreams, the people behind the scenes use AI to appropriate Zhang Wenhong's image and voice and, with only slightly tweaked scripts, deceive viewers, so that goods of unknown origin, poor quality, or even outright counterfeits sell out instantly. If the scam is exposed, they simply open a new account and keep selling.

"These fake AI-synthesized messages are like a plague of locusts: they keep coming back and do harm like swarms filling the sky. Many unsuspecting people, especially those unfamiliar with AI and the elderly, are easily deceived." The video in question has since been removed by the account that posted it, and the account has been permanently banned by the platform. Besides complaining to the platform, Zhang Wenhong is also considering going to the police, but because the perpetrators remain hidden and keep switching accounts, it is difficult to identify a specific target to report.

According to the 2024 AI Security Report, AI-based deepfake fraud incidents surged by 3,000% in 2023, and AI-synthesized videos have become tools for bad actors to manipulate public opinion and make money. Hard to see, hard to pin down, yet uncannily lifelike: these traits of AI-synthesized video keep undermining the "seeing is believing" instinct and eroding consumers' legitimate rights and interests.

Not only Zhang Wenhong but many other public figures, such as education expert Li Meijin and film stars Andy Lau and Jin Dong, have on various occasions called for stronger oversight of "AI face-swapping and voice mimicry." Lei Jun, a deputy to the National People's Congress, also submitted a proposal at this year's Two Sessions on curbing illegal infringement through "AI face-swapping and voice mimicry."

Whether It's Jack Ma or Jin Dong, AI Can Swap Faces and Mimic Voices

Being misled by "AI face-swapping and voice mimicry" livestream sales into buying counterfeit and substandard goods clearly harms consumers, but fraud built on AI technology is even more damaging. According to media reports, someone used AI on social media platforms to swap their face with that of tycoon Jack Ma and held video calls to win netizens' trust. The targets were mostly middle-aged and elderly people with little awareness of AI, who were then cheated out of money under the pretext of "poverty alleviation projects." Others impersonated actor Jin Dong on short-video platforms, contacting middle-aged and elderly users and inducing them to transfer money on the pretext of needing funds for filming.

Beyond impersonating public figures, some criminals use AI to imitate important people in your own life, which is even harder to guard against. In one AI fraud case disclosed by the Tianjin police, Mr. Li received a text message from someone claiming to be a superior at his workplace, asking to add him on social media for further discussion. After they connected, the other party asked Mr. Li to transfer money, claiming an emergency required funds and promising to repay it afterward. The other party even initiated a video call. Seeing that the "superior" really did appear on screen, Mr. Li immediately let down his guard and transferred 950,000 yuan in three installments. That afternoon, when Mr. Li mentioned the matter to friends, he grew increasingly uneasy and reported it to the police.

After receiving the report, the Tianjin police quickly activated their rapid-response mechanism with the bank and promptly issued emergency stop-payment orders on the three bank cards involved. The bank successfully froze 830,000 yuan of the funds. According to the police, criminals use AI to put the faces of a target's acquaintances or relatives onto other people, impersonating important figures in the target's life and carrying out fraud with "indistinguishable" synthesized videos or photos.

The Hong Kong police also disclosed a multi-person "AI face-swapping" fraud case involving as much as HK$200 million. According to CCTV News, an employee at the Hong Kong branch of a multinational company was invited to a "multi-person video conference" supposedly initiated by the headquarters' Chief Financial Officer and was instructed to make a series of transfers, ultimately sending HK$200 million in 15 separate transfers to five local bank accounts. Only when the employee later checked with headquarters did they realize they had been deceived. Police investigations revealed that the victim was the only "real person" in the so-called video conference; all the other "participants" were fraudsters using "AI face-swapping."

Compared with face-swapped videos, AI-synthesized voices are even harder to detect in fraud. A survey report released by global security technology company McAfee points out that with just 3 to 4 seconds of recorded audio, free AI tools on the internet can generate a cloned voice with 85% similarity. Of the more than 7,000 respondents worldwide, 70% were unsure whether they could tell an AI-synthesized voice from a real one, and about 10% had encountered AI voice fraud.

On social media, many international students have posted about scams in which AI was used to synthesize their own voices to target their parents in China. The scammers stage fake kidnappings, play AI-synthesized cries for help, and exploit the time difference and physical distance to extort large sums of money while causing panic.

Prevent Information Leakage and Carefully Discern AI Scams

These cases make it clear that artificial intelligence is a double-edged sword. Looking at specific industries, more and more financial institutions have introduced AI for customer service, which also creates opportunities for criminals. Some consumers have received calls from someone claiming to be an insurance company's customer service, asking for bank card information and payment of "renewal premiums," and ended up losing money. Behind these calls are criminals who use AI to mimic the voices of insurance customer service representatives or chatbots, phoning consumers and inducing them to disclose personal information.

Taken together, these cases show that AI-enabled fraud is becoming cheaper to carry out and more convincing. So how can we strengthen our awareness and our ability to respond to the many forms that new AI scams take?

Although AI can indeed swap faces and mimic voices, carrying out a scam requires much more. The fraudster must first collect the impersonated person's identity information along with many facial photos and voice recordings so that AI can generate convincing audio and video; must steal that person's social media account; and must obtain personal information about the fraud target, including their social relationships. This is a reminder to take personal information protection seriously: avoid posting images on social media that reveal personal information such as names, ID numbers, phone numbers, or key locations (for example, airline tickets, certificates, and photos), and do not casually hand over biometric information such as face scans and fingerprints.

How can one tell whether celebrity-impersonating online marketing or fake news relies on AI face-swapping or voice changing? Industry experts suggest, on the one hand, examining facial features, observing expressions and movements, and checking whether the voice matches the lip movements; on the other hand, verifying the source of the information and, during a video call, asking the other party to cover their face with a hand or make other facial movements to see whether the image stays coherent.

AI-synthesized videos often show noticeable flaws in facial detail: missing micro-expressions (fine lines at the corners of the eyes and twitches of the mouth are hard to reproduce), mismatched lighting and shadows (contour and shadow transitions that do not follow the physics of light reflection), and overly smooth skin (lacking pores, texture, and imperfections). In the eyes and expressions, look for mechanical eye movement (jerky rotation, abnormal blink frequency) and stiff facial expressions (abrupt transitions, smiles that look unnatural).

Although voice cloning has made great progress in recent years, to the point of being nearly indistinguishable from real speech, careful listening reveals that AI-generated voices are usually smoother and faster and contain fewer filler words or pauses such as "um," "huh," or "that is to say." With more exposure, people can still learn to recognize AI-generated voices.

Conclusion

In the face of AI-driven chaos, consumers need to stay vigilant, but government oversight must also keep pace and act swiftly. China has successively issued regulations such as the "Interim Measures for the Administration of Generative AI Services" and the "Provisions on the Administration of Deep Synthesis of Internet Information Services" to regulate AI applications.

For AI technology to benefit humanity, both regulation and greater public awareness of prevention are essential. Only by continually raising the cost and difficulty of fraud and strengthening the public's ability to tell truth from falsehood can we prevent AI from degenerating into a tool for fraud.