2025 Edge AI Report: Real-Time Autonomous Intelligence, from Paradigm Innovation to the Technological Foundation of AI Hardware

03/31 2025

This marks my 365th column article.

In my previous article, "Giants Enter TinyML, Edge AI Ushers in a New Turning Point," I noted the rebranding of the TinyML Foundation, now known as the Edge Intelligence Foundation.

Recently, the Edge Intelligence Foundation released the latest version of the "2025 Edge AI Technology Report," offering a comprehensive view of Edge Intelligence and Tiny Machine Learning (TinyML) trends.

The report suggests that TinyML's maturity may surpass many expectations, with numerous real-world applications already emerging.

Here are the report's highlights:

Technical Drivers of Edge AI: The report explores the hardware and software advances that support Edge AI, focusing on specialized processors and ultra-low-power devices that overcome limits on processing power and scalability in resource-constrained environments.

The Role of Edge AI in Industry Transformation: The report reveals how Edge AI impacts operational models across industries by enabling real-time analysis and decision-making.

Future Technologies and Innovations: The final chapter outlines emerging technologies that may influence Edge AI's future, such as federated learning, quantum neural networks, and neuromorphic computing.

Today, we summarize the "2025 Edge AI Technology Report," providing an in-depth understanding of TinyML and Edge AI's latest advancements and overall development landscape.

Real-Time, Local, Efficient: Innovative Edge AI Applications in Six Major Industries

With growing demand for low-latency and real-time processing, Edge AI is disrupting industries, particularly automotive, manufacturing, healthcare, retail, logistics, and smart agriculture. By performing real-time analysis and decision-making at the data source, Edge AI significantly enhances efficiency, optimizes resource allocation, and improves user experience.

First, let's explore autonomous vehicles.

As camera resolutions reach gigapixels and LiDAR systems emit millions of laser pulses per second, Edge AI accelerates response times and enhances safety. For instance, Waymo expanded its simulation training to handle rare driving scenarios. Xpeng Motors anticipates its end-to-end model will learn from over 5 million driving data clips by year-end.

Similarly, in the rapidly growing automotive AI market, real-time Edge AI is crucial for improving efficiency and reducing downtime. In automotive production plants, intelligent sensors immediately flag temperature spikes or mechanical stress, enabling teams to intervene before issues escalate. NIO's NWM (NIO World Model) demonstrates what ultra-fast AI prediction can do, and Edge AI-based analysis detects micro-defects on production lines with high precision.

Combining speed, reliability, and on-device intelligence, real-time data processing is transforming standard practice in autonomous vehicles, paving the way for a more adaptable and efficient future.

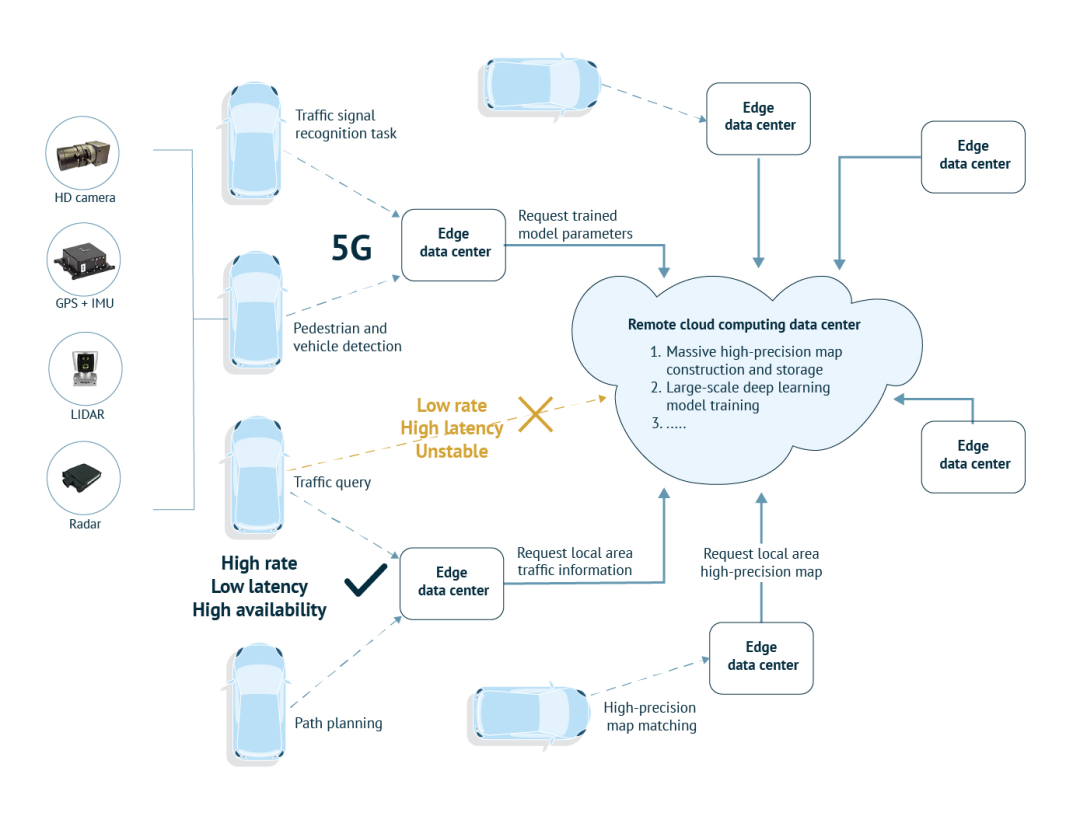

Three main reasons make Edge AI critical in autonomous vehicles:

1. Edge systems reduce dependency on cloud relays, achieving collision-avoidance response times below 50 ms, which is crucial for handling sudden pedestrian crossings or highway emergencies.

2. Edge AI enables autonomous or semi-autonomous vehicles to maintain safety features even in cellular dead zones. The 5G Automotive Association's (5GAA) updated Cellular Vehicle-to-Everything (C-V2X) technology roadmap emphasizes a hybrid V2X architecture combining edge processing with 5G-V2X direct communications. Edge AI hardware and sensor fusion algorithms reduce decision latency by 30-40%, achieving response times as low as 20-50 milliseconds.

3. Integrating data from cameras, LiDAR, and radar improves perception reliability for safe navigation. Innoviz's 2024 LiDAR upgrade employs edge-optimized neural networks to process point cloud data at 20 frames per second, minimizing obstacle detection latency.
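The first point can be made concrete with a latency budget. The sketch below is illustrative only: the stage names and per-stage millisecond figures are assumptions chosen for the example, not measurements from the report. It simply sums the stages on a decision path and checks the total against the sub-50 ms target mentioned above.

```python
from dataclasses import dataclass

# The 50 ms deadline comes from the text above; every per-stage
# latency below is a made-up figure for illustration.
COLLISION_DEADLINE_MS = 50.0


@dataclass
class Stage:
    name: str
    latency_ms: float


def total_latency(stages):
    """Sum the latency of every stage on a decision path."""
    return sum(stage.latency_ms for stage in stages)


def meets_deadline(stages, deadline_ms=COLLISION_DEADLINE_MS):
    """Return True when the whole path finishes strictly inside the deadline."""
    return total_latency(stages) < deadline_ms


# Hypothetical on-vehicle path: all processing stays on edge hardware.
edge_path = [
    Stage("sensor capture", 10.0),
    Stage("sensor fusion", 8.0),
    Stage("on-device inference", 15.0),
    Stage("actuation command", 5.0),
]

# Hypothetical cloud path: same capture and fusion, plus a cellular round trip.
cloud_path = edge_path[:2] + [
    Stage("uplink", 40.0),
    Stage("cloud inference", 10.0),
    Stage("downlink", 40.0),
    Stage("actuation command", 5.0),
]

if __name__ == "__main__":
    for label, path in (("edge", edge_path), ("cloud", cloud_path)):
        print(label, total_latency(path), meets_deadline(path))
```

Under these assumed numbers, the network round trip alone exceeds the deadline, which is the intuition behind keeping safety-critical inference on the vehicle.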

Next, let's turn to the manufacturing sector.

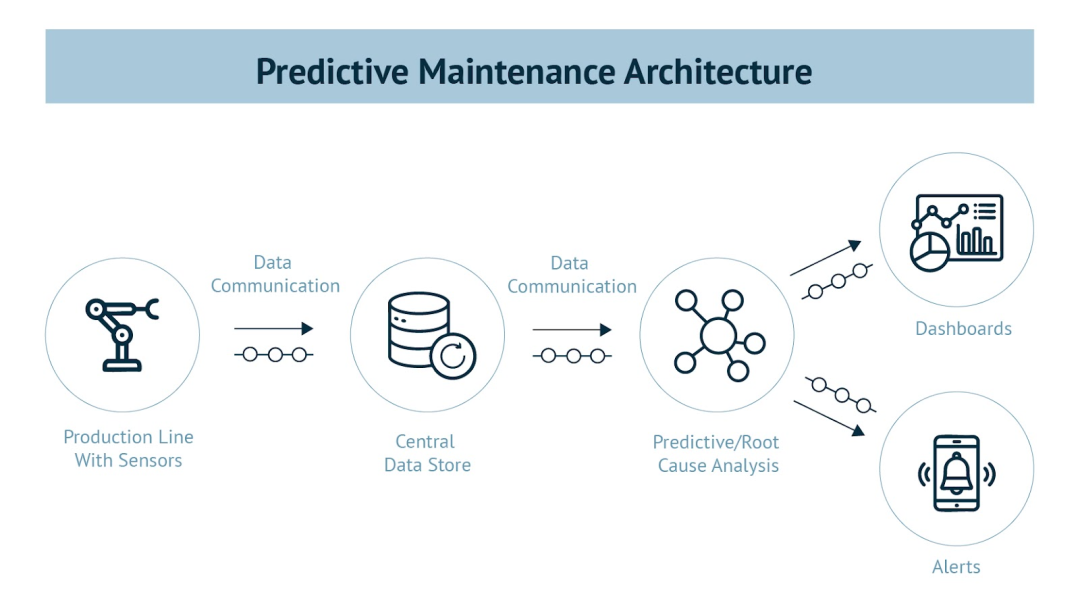

Production lines generate vast amounts of data daily. Studies show smart factories generate over 5 petabytes of data per week. Edge AI systems process this information locally, providing instant insights and automated responses. Edge AI's impact is evident in predictive maintenance, quality control systems, and process optimization.

Predictive maintenance systems using real-time sensor data analysis reportedly reduce maintenance costs by 30% and downtime by 45%. By continuously monitoring equipment performance, Edge AI algorithms detect anomalies and potential failures before they occur, enabling proactive maintenance and minimizing unexpected downtime.
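As a rough illustration of how on-device anomaly detection can work, the sketch below flags a sensor reading when it deviates strongly from a rolling window of recent values (a rolling z-score). This is one simple technique among many and is not taken from the report; the class name, window size, and threshold are assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag readings that sit far from the recent rolling average."""

    def __init__(self, window=20, threshold=3.0):
        # window and threshold are illustrative defaults, not tuned values.
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value):
        """Return True if value is anomalous relative to the recent window."""
        is_anomaly = False
        # Only judge readings once the window is full.
        if len(self.history) == self.history.maxlen:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Keep anomalies out of the baseline so they do not mask later ones.
        if not is_anomaly:
            self.history.append(value)
        return is_anomaly


if __name__ == "__main__":
    detector = RollingAnomalyDetector()
    # Hypothetical temperature trace: stable readings, then a sudden spike.
    readings = [70.0 + 0.1 * (i % 5) for i in range(30)] + [95.0]
    for step, reading in enumerate(readings):
        if detector.update(reading):
            print(f"step {step}: anomalous reading {reading}")
```

Because the detector keeps only a short window in memory and uses basic arithmetic, logic of this kind can run on the sensor gateway itself rather than in the cloud.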

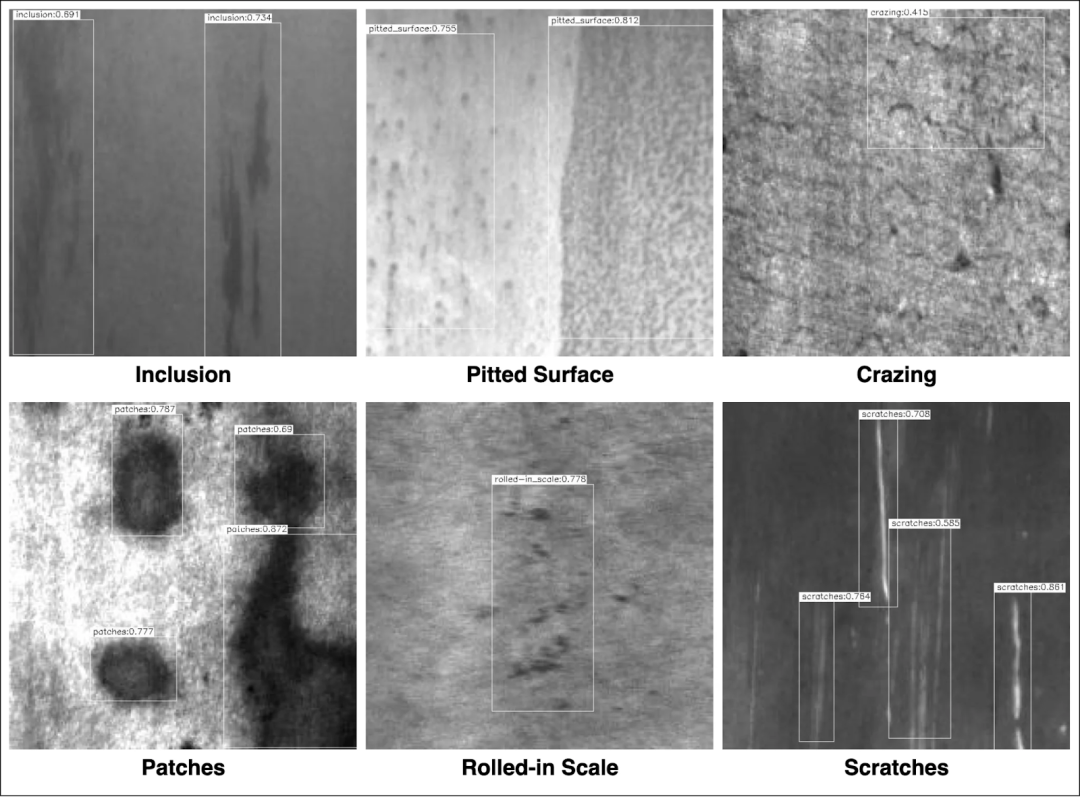

Edge AI also strengthens quality control through real-time inspection and defect detection. For example, a major food and beverage manufacturer deployed visual AI at the edge for quality inspection and closed-loop quality control. The system continuously monitors product variations and suggests adjustments to equipment settings, reducing inspection cycles by 50-75% and improving accuracy.

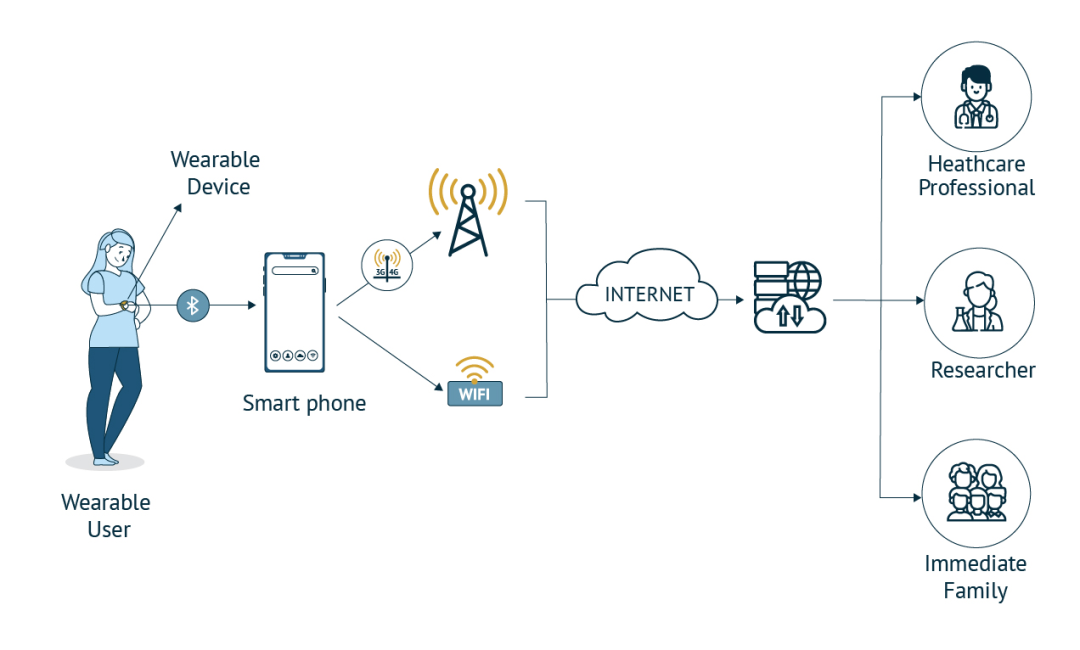

Thirdly, in healthcare, localized AI accelerates diagnosis and improves patient outcomes by processing medical data directly within devices.

Edge AI-powered remote patient monitoring devices (like portable ECG and blood pressure monitors) analyze heart rhythms and vital signs in real-time. Devices from companies like AliveCor and Biobeat allow clinicians to detect arrhythmias and other abnormalities without waiting for cloud-based analysis, reducing response times in critical situations.

Fourthly, Edge AI is transforming retail by optimizing in-store operations and enhancing customer experiences through real-time behavior analysis.

AI-driven smart shelves and checkout systems process customer interactions locally, analyze purchasing patterns, and adjust inventory forecasts without relying on cloud synchronization. Retailers deploy AI-driven video analysis to detect abnormal foot traffic, monitor inventory levels, and reduce checkout times, thereby increasing efficiency and lowering operational costs.

On the operational front, AI-powered smart retail shows promise in 2025. AI-driven computer vision enables fully contactless transactions, reducing average checkout times by 30%. Amazon Fresh, for example, uses cameras installed on shelves or trolleys to check customers out automatically as they leave and to show them a real-time preview of their spending.

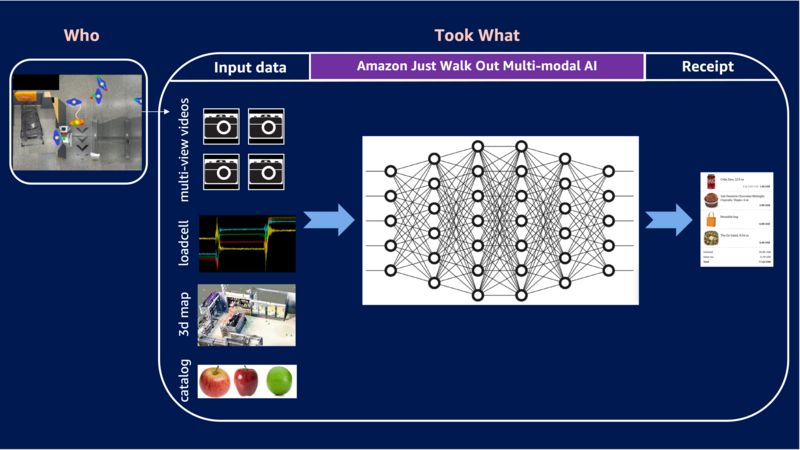

Amazon's Just Walk Out (JWO) system exemplifies Edge AI in retail, integrating sensor arrays, on-device analytics, and advanced machine learning models. All computation runs locally on custom edge hardware, enabling real-time decision-making and improving both customer convenience and operational efficiency.

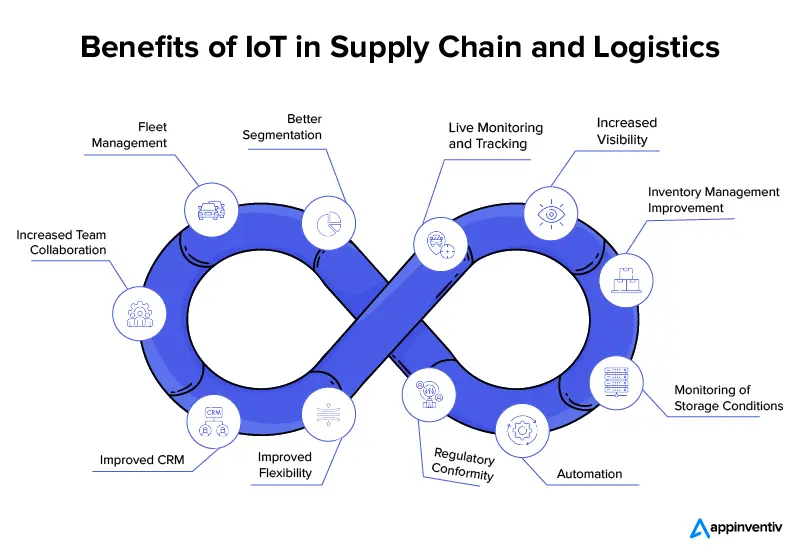

Fifth, Edge AI integrated with IoT sensors makes logistics more intelligent by processing data directly at distribution centers, warehouses, and transport hubs.

Instead of transmitting vast amounts of information to centralized servers, smart sensors analyze temperature fluctuations, motion anomalies, and inventory shortages on-site, triggering immediate alerts when deviations occur. For instance, P&O Ferrymasters uses AI-driven ship loading programs to increase freight capacity by 10% while maintaining real-time visibility across the entire supply chain. Additionally, AI-driven predictions help reduce logistics costs by 20%.

Finally, Edge AI is helping smart agriculture expand precision farming to meet the growing global food demand.

With the global population expected to reach 9.8 billion by 2050, agriculture must scale intelligently to meet this demand while minimizing environmental impact. Edge AI enables farms to expand technological reach without increasing complexity, analyzing soil conditions, monitoring weather patterns, and implementing automated irrigation systems.

Advanced sensors and AI models assess factors like soil moisture or pest activity without sending data to remote servers, enabling rapid interventions. Projects like CrackSense demonstrate how real-time sensing helps ensure fruit quality in crops like citrus, pomegranates, and grapes, reducing losses and waste.

Smart irrigation systems equipped with Edge AI dynamically adjust water distribution based on local soil moisture analysis, reducing water usage by 25%. Similarly, AI-driven pest detection reduces pesticide use by 30%, supporting precision farming and minimizing waste.
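To show what "adjusting water distribution based on local soil moisture" might look like in its simplest form, here is a minimal sketch of a proportional rule: the drier the soil relative to a target, the longer the zone is watered, up to a cap. The target moisture, gain, cap, and zone names are all hypothetical values for illustration and do not come from the report or any specific product.

```python
# Illustrative constants; real values depend on crop, soil, and hardware.
TARGET_MOISTURE_PCT = 35.0       # assumed target volumetric moisture
MINUTES_PER_PCT_DEFICIT = 2.0    # assumed watering time per percent of deficit
MAX_MINUTES = 30.0               # assumed cap per irrigation cycle


def irrigation_minutes(moisture_pct):
    """Watering time proportional to the moisture deficit, clamped to the cap."""
    deficit = TARGET_MOISTURE_PCT - moisture_pct
    if deficit <= 0:
        return 0.0  # soil is already at or above target, so skip this zone
    return min(MAX_MINUTES, MINUTES_PER_PCT_DEFICIT * deficit)


def plan(zone_readings):
    """Map each zone's local moisture reading to a watering duration."""
    return {zone: irrigation_minutes(m) for zone, m in zone_readings.items()}


if __name__ == "__main__":
    # Hypothetical readings from three field zones.
    print(plan({"north": 38.0, "east": 30.0, "south": 12.0}))
```

Compared with a fixed schedule that waters every zone equally, a rule like this skips zones that are already moist, which is where water savings would come from in practice.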

Edge AI Ecosystem: Collaborative Innovation under a Three-Tier Architecture

The current Edge AI ecosystem stands at a critical juncture: project success hinges on collaboration among hardware vendors, software developers, cloud providers, and industry stakeholders. This dependence on collaboration is likely to persist, making cooperation between companies a key focus.

Without interoperability standards, scalable deployment models, and shared R&D efforts, Edge AI risks fragmentation, limiting its application in critical sectors like manufacturing, healthcare, and logistics.

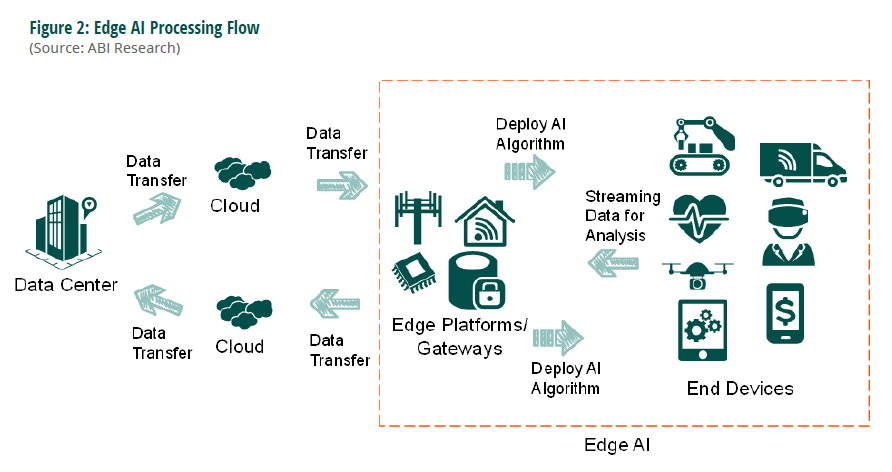

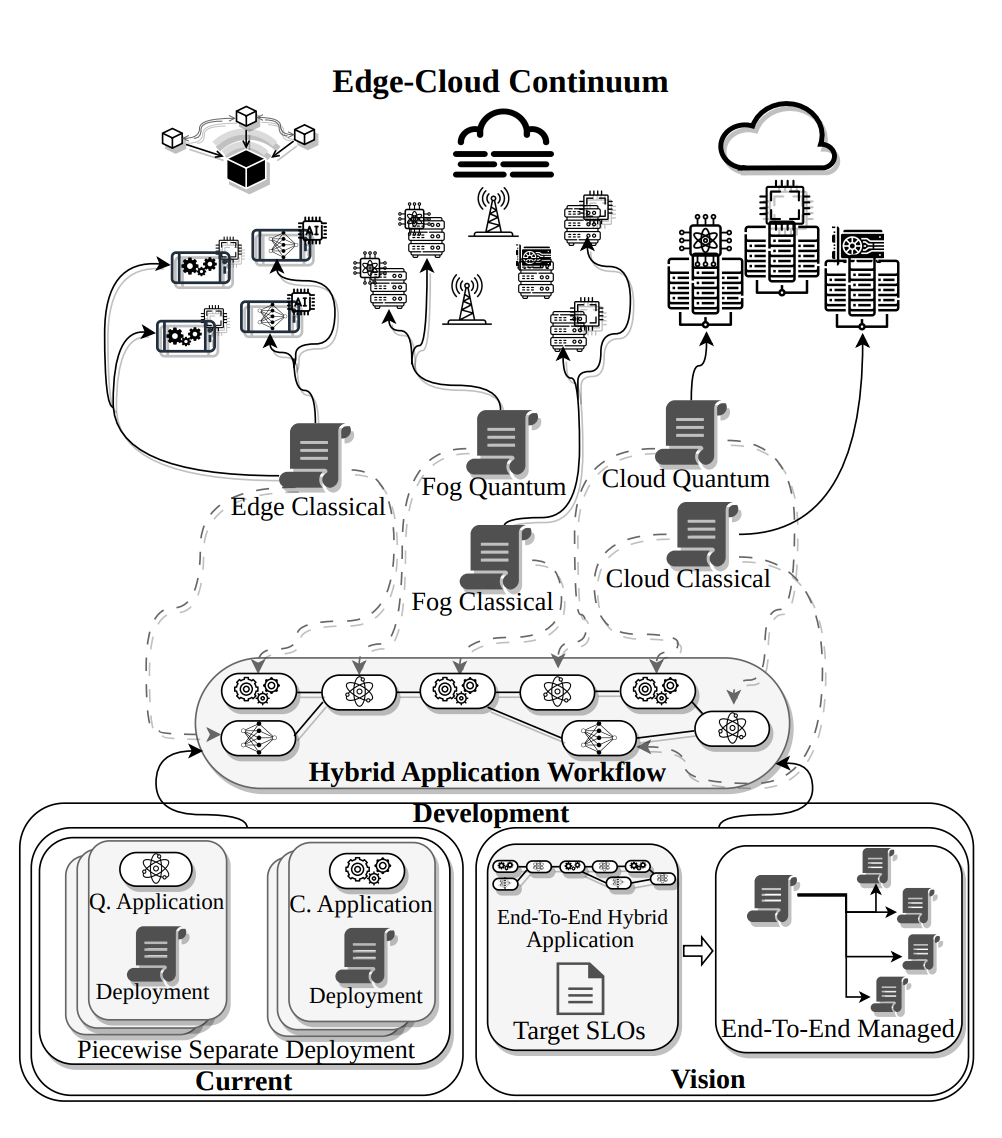

The Edge AI ecosystem generally adopts a three-tier architecture, distributing computing workloads across edge devices, edge servers, and cloud platforms. This structure allows AI models to perform real-time inference at the edge while leveraging higher computing power when needed. Each tier plays a unique role in processing, aggregating, and optimizing data for intelligent decision-making.

Edge devices and terminals are the first points of interaction with real-world data, including IoT sensors, industrial robots, smart cameras, and embedded computing systems in manufacturing, healthcare, automotive, and retail environments. Their primary function is low-latency AI inference, processing data on-site without continuous cloud connectivity.

Edge servers act as computational intermediaries between edge devices and the cloud, typically deployed in factories, hospitals, retail stores, and autonomous vehicle networks. They aggregate data from multiple sources and execute more complex AI workloads. A key advantage of edge servers is localized AI inference, enabling heavier models to run without offloading data to remote data centers, reducing latency, bandwidth costs, and security risks associated with cloud dependency.

It's important to distinguish between edge devices and edge servers. Although the two are often grouped together, they differ significantly in power consumption, size, and computing power. Edge devices (e.g., embedded cameras or industrial sensors) are designed for low-power AI inference, while more powerful edge servers act as intermediaries, processing complex AI workloads before forwarding data to the cloud.

The cloud remains crucial for model development, large-scale data analysis, and storage. Deep learning models are trained there before being optimized and deployed to edge devices and servers, where they perform inference in production environments. The cloud also handles AI model monitoring, analysis, and centralized orchestration, ensuring efficient deployment across thousands or even millions of edge endpoints.
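The division of labor across the three tiers can be sketched as a simple placement rule. The example below is a hedged illustration rather than an architecture from the report: the `Workload` fields, the 50 MB device limit, and the 100 ms cloud round-trip figure are assumptions picked to make the logic visible.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    DEVICE = "edge device"
    SERVER = "edge server"
    CLOUD = "cloud"


@dataclass
class Workload:
    name: str
    is_training: bool
    max_latency_ms: float
    model_size_mb: float


# Assumed limits for illustration only.
DEVICE_MODEL_LIMIT_MB = 50.0   # largest model a low-power device can hold
CLOUD_ROUND_TRIP_MS = 100.0    # typical round trip to a remote data center


def place(workload):
    """Pick a tier: train in the cloud, infer as close to the data as possible."""
    if workload.is_training:
        return Tier.CLOUD
    if workload.model_size_mb <= DEVICE_MODEL_LIMIT_MB:
        return Tier.DEVICE
    # Heavier models that still need a fast answer go to a nearby edge server.
    if workload.max_latency_ms < CLOUD_ROUND_TRIP_MS:
        return Tier.SERVER
    return Tier.CLOUD


if __name__ == "__main__":
    jobs = [
        Workload("defect detection", False, 20.0, 8.0),
        Workload("multi-camera analytics", False, 50.0, 400.0),
        Workload("weekly fleet report", False, 60000.0, 2000.0),
        Workload("model retraining", True, 0.0, 2000.0),
    ]
    for job in jobs:
        print(f"{job.name}: {place(job).value}")
```

A real orchestrator would also weigh bandwidth, privacy constraints, and current load, but the ordering of the checks mirrors the roles described above.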

While the three-tier architecture covers the full spectrum of Edge AI, cross-industry cooperation among enterprises is intensifying.

Semiconductor companies are collaborating with AI developers to enhance model efficiency on specialized hardware. Cloud providers are integrating edge-native computing solutions, and research institutions are partnering with industry leaders to advance scalable architectures.

In hardware and cloud collaboration, Intel promotes Edge AI adoption through its Edge AI Partner Enablement Package, providing companies with tools, frameworks, and technical resources to accelerate deployments.

A notable collaboration involves Qualcomm and Meta, working to integrate Meta's Llama large language model directly onto Qualcomm's edge processors, reducing dependence on cloud-based LLMs and enabling devices to perform generative AI workloads on-site.

MemryX and Variscite have also announced a partnership to enhance Edge AI efficiency by combining MemryX's AI accelerators with Variscite's System-on-Module (SoM) solutions, simplifying AI deployment on edge devices, particularly for industrial automation and healthcare applications.

Google and Synaptics have collaborated to develop an edge AI system, integrating Google's Kelvin MLIR-compatible machine learning core into Synaptics' Astra AI-Native IoT computing platform. The companies will work together to define the best implementation of multimodal processing for context-aware computing at the IoT edge, targeting applications such as wearable devices, home appliances, entertainment, and surveillance.

Collaboration between government, industry, academia, and research institutions plays a pivotal role in advancing edge AI research and deployment, with numerous nations initiating pilot projects and collaborative platforms dedicated to this burgeoning field.

In the United Kingdom, the National Centre for Edge AI serves as a hub for academia, industry, and the public sector to unite in the pursuit of advancing edge AI technology. Led by Newcastle University, this center brings together interdisciplinary teams from across the UK, aiming to enhance data quality and decision-making precision for time-sensitive applications such as healthcare and autonomous electric vehicles.

Similarly, the National AI Research Resource (NAIRR) pilot program, initiated by the US National Science Foundation, is a significant effort to democratize AI. Industry participants including Intel, NVIDIA, Microsoft, Meta, OpenAI, and IBM contribute computing power and AI tools to researchers developing secure and energy-efficient AI applications.

From Federated Learning to Neuromorphic Computing: The Top 5 Frontier Trends in Edge AI

Technology is evolving rapidly, and five emerging trends in edge AI are reshaping AI systems. These include federated learning, edge-native AI models, quantum-enhanced intelligence, and generative AI at the edge. Combined, these trends allow autonomous vehicles to learn from one another without relying on centralized datasets, hospitals to deploy AI models that evolve in real time based on patient data to support highly personalized treatment, and industrial robots to operate with predictive intelligence, detecting and correcting issues proactively.

Emerging innovations in fields such as neuromorphic computing, multi-agent reinforcement learning, and post-quantum cryptography are also redefining the potential of edge AI, making AI systems faster, safer, and more efficient.

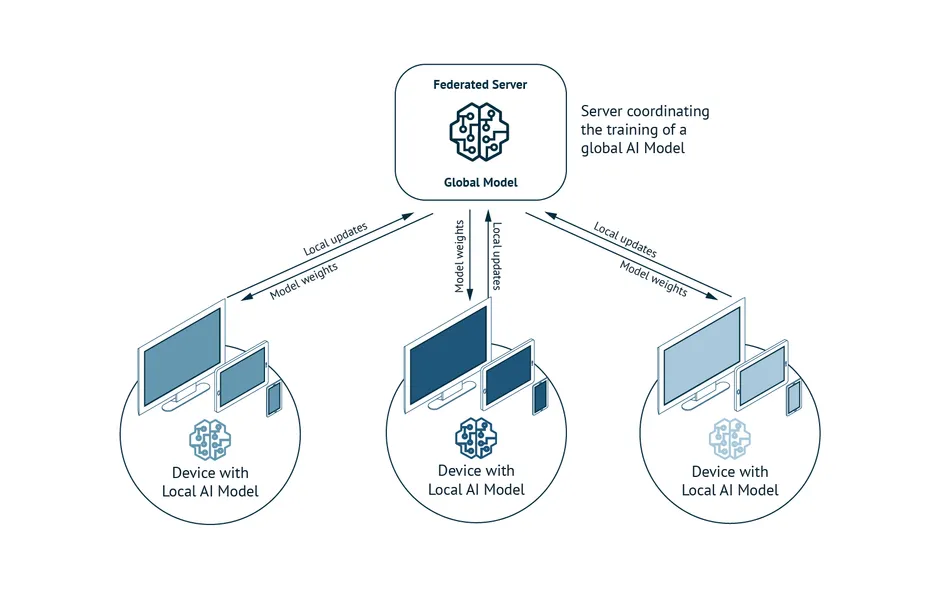

1. Federated Learning: Decentralized Intelligence at the Edge

Federated Learning (FL) is evolving beyond privacy protection to become the cornerstone of decentralized intelligence. Over the next five years, federated frameworks are anticipated to significantly enhance model adaptability, autonomy, and cross-industry collaboration. Market projections estimate that by 2030, federated learning could achieve a market value of nearly $300 million, with a compound annual growth rate of 12.7%.

Another major catalyst for the evolution of federated learning is its integration with next-generation networks like 6G. As the scale of edge deployments expands, ultra-low-latency networks will facilitate more effective synchronization of AI models across distributed devices, reducing the time required for optimization and deployment updates. Additionally, Quantum Federated Learning (QFL) is being explored to lessen the communication burden between devices, enhancing efficiency for large-scale IoT networks.
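For readers unfamiliar with how federated learning combines models without pooling raw data, the sketch below shows federated averaging (FedAvg), a widely used aggregation step: each device trains locally and sends only its model weights, and the coordinator averages them weighted by how many samples each device used. The report does not specify an algorithm, so this is an illustrative choice, and the flat list-of-floats weight format is a simplifying assumption.

```python
def federated_average(client_updates):
    """Weighted average of client model weights.

    client_updates is a list of (weights, num_samples) pairs, where weights
    is a flat list of floats. Raw training data never leaves the clients.
    """
    if not client_updates:
        raise ValueError("need at least one client update")
    total_samples = sum(n for _, n in client_updates)
    if total_samples <= 0:
        raise ValueError("total sample count must be positive")

    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, num_samples in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        share = num_samples / total_samples
        for i, w in enumerate(weights):
            averaged[i] += w * share
    return averaged


if __name__ == "__main__":
    # Two hypothetical devices; the second trained on three times as much data.
    updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
    print(federated_average(updates))
```

In a full system this step repeats over many rounds, which is why faster synchronization between devices, as discussed above, directly shortens the time needed to update deployed models.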

2. Edge Quantum Computing and Quantum Neural Networks

Quantum computing is poised to redefine the capabilities of edge AI. While current edge AI relies on optimized deep learning models and low-power hardware accelerators, quantum computing introduces a fundamentally different approach, leveraging quantum states to process exponentially larger datasets and optimize decisions at speeds unattainable by traditional methods. As Quantum Processing Units (QPUs) move beyond cloud-based infrastructures, hybrid quantum-classical AI will emerge at the edge, bolstering real-time decision-making in industries such as finance, healthcare, energy, and industrial automation.

Quantum Neural Networks (QNNs) are a novel type of AI model that harnesses quantum properties to detect patterns and relationships in data that are difficult for traditional AI to capture. Unlike existing neural networks, which require more power and memory to improve performance, QNNs can process information in a more compact and efficient manner.

To date, quantum computing has been confined to cloud-based data centers because of hardware requirements such as extreme cooling. However, recent advancements in mobile QPUs suggest the potential for executing quantum algorithms at room temperature. In the coming years, quantum computing is expected to move beyond the cloud, embedding itself in autonomous systems, industrial robots, and IoT devices at the edge.

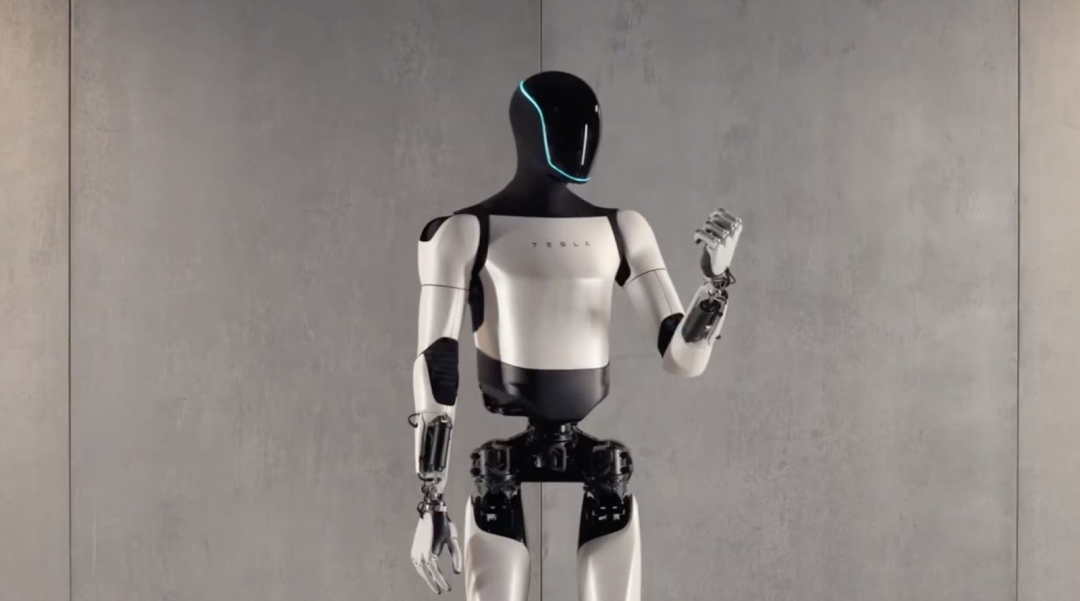

3. Edge AI for Autonomous Humanoid Robots

The next phase of humanoid robots will be characterized by embodied intelligence, where AI models become more adaptive, responsive, and self-improving.

In retail settings, humanoid robots can assist customers by answering verbal queries, analyzing facial expressions, and navigating store layouts. Concurrently, in hospitals and nursing homes, AI robots can monitor patients, aid with mobility, and detect subtle behavioral changes indicative of medical emergencies, all while ensuring data privacy and security through on-device processing.

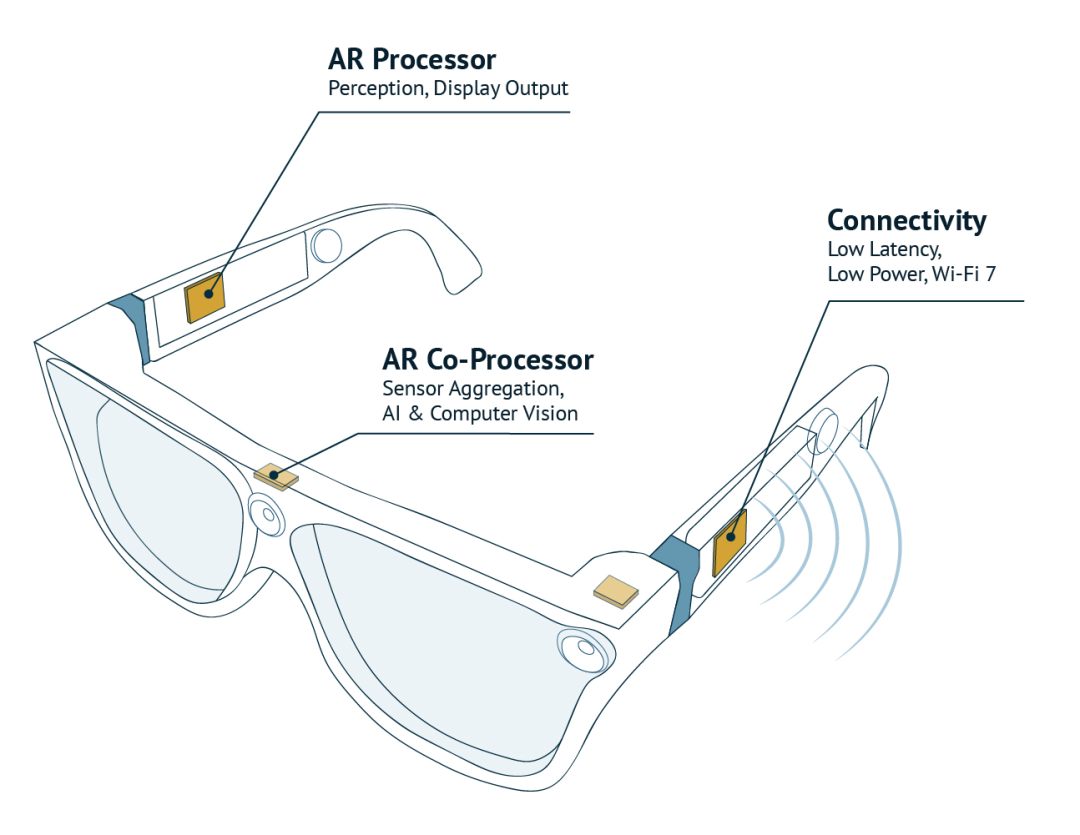

4. AI-Driven AR/VR: The Next Evolution

Augmented Reality (AR) and Virtual Reality (VR) are no longer confined to gaming and entertainment, with edge AI serving as a pivotal driver of their development. Next-generation AR/VR devices will process information locally, enabling real-time responsiveness and improved energy efficiency.

AI-driven spatial computing will empower AR glasses and VR headsets to dynamically adjust overlays, depth perception, and environmental interactions based on context.

In industrial environments, this translates to AR-driven workspaces that give engineers hands-free, AI-generated instructions that adapt in real time to real-world conditions. In healthcare, AI-assisted surgical guidance will enhance precision, updating within milliseconds based on the surgeon's movements without cloud-induced latency.

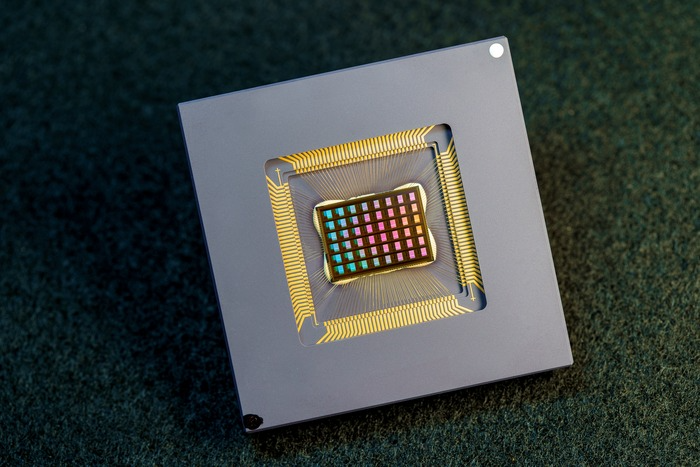

5. Neuromorphic Computing: The Future of Low-Power AI

By introducing brain-inspired architectures, neuromorphic computing is set to become increasingly prevalent in edge AI, offering substantial advantages in energy efficiency and processing power. Unlike traditional computing systems that separate memory and processing units, neuromorphic systems integrate these functions, mirroring the parallel and event-driven nature of the human brain. This design enables them to handle complex real-time data processing tasks with minimal energy consumption, making them ideal for edge applications.
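The event-driven behavior described here can be illustrated with a leaky integrate-and-fire neuron, a standard simplified model used in spiking neural networks. The sketch below is a software toy, not a description of any particular neuromorphic chip, and the threshold and leak values are arbitrary assumptions.

```python
def lif_spikes(input_current, threshold=1.0, leak=0.9):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    The membrane potential decays by the leak factor each step, accumulates
    the incoming current, and emits a spike (1) when it reaches the threshold,
    after which it resets to zero. With no input, nothing fires.
    """
    potential = 0.0
    spikes = []
    for current in input_current:
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    # Hypothetical input: silence, then a burst of weak stimuli.
    print(lif_spikes([0.0, 0.0, 0.5, 0.5, 0.5, 0.0]))
```

The neuron stays silent while nothing happens and produces output only when enough input accumulates, which is the property that lets event-driven hardware save energy on sparse real-world signals.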

For instance, the NeuRRAM chip, introduced in a 2022 study published in Nature, is reported to be twice as energy-efficient as state-of-the-art compute-in-memory chips and can perform complex cognitive tasks on edge devices without cloud connectivity. This leap signals a transformation akin to the shift from desktop computers to smartphones, unlocking portable applications once deemed impossible.

Research and early commercial deployments indicate that neuromorphic chips have the potential to redefine how intelligence is deployed at the edge.

Closing Thoughts

Edge AI is revolutionizing industries ranging from autonomous vehicles and smart manufacturing to healthcare, retail, logistics, and smart agriculture. By bringing the power of AI closer to the source of data, edge AI enables real-time insights, autonomous decision-making, and resource optimization.

Its rise signifies a fundamental shift from centralized cloud models of AI to distributed intelligence.

As the edge AI ecosystem continues to mature, the pace of innovation accelerates. From federated learning to neuromorphic computing, quantum-enhanced intelligence to AI-driven augmented reality, cutting-edge technologies are redefining the possibilities of edge AI. Looking ahead, edge AI stands poised to become a pivotal force driving industry transformation and social progress.