Horizon's Autonomous Driving Solution Breakdown

04/14 2025

In today's rapidly evolving intelligent driving landscape, the standing of domestic solutions depends on deep expertise across chip architecture, algorithm models, and system engineering. Since its founding in 2015, Horizon Robotics has followed an R&D philosophy of 'software and hardware integration', using self-developed edge AI chips as its entry point to build a full technology stack spanning perception through decision-making. Focused on delivering safe, reliable, and cost-effective advanced driver assistance systems (ADAS) and high-level autonomous driving solutions, Horizon has both championed the evolution toward end-to-end automotive computing and continually refined its computing architecture to keep pace with increasingly complex autonomous driving algorithms.

Development History of Horizon

Evolution Route of Horizon's Intelligent Computing Architecture

Horizon's first-generation BPU (Brain Processing Unit) intelligent computing architecture emerged in 2016. Using custom hardware acceleration units and instruction sets, it parallelized the matrix multiply-accumulate operations at the heart of deep learning networks, delivering the low-power, high-performance computing that automotive perception and decision-making require. Compared with general-purpose GPUs, the BPU offers higher computational density at the same power consumption, and its hardware-level resource scheduling maximizes how efficiently algorithms execute on the chip. This laid a solid foundation for subsequent algorithm iterations.
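To make "parallelizing matrix multiply-accumulate operations" concrete, here is a minimal sketch, assuming int8 operands with a wider (int32-style) accumulator, a common choice for edge inference accelerators that the article does not confirm for the BPU. The `tile` parameter stands in for a hypothetical tile × tile array of MAC units: on hardware, the multiply-accumulates for one output tile would run concurrently, while this Python version simply loops. It illustrates the general technique only and is not Horizon's design.

```python
def check_int8(m):
    """Reject values outside the signed 8-bit range assumed for the operands."""
    for row in m:
        for v in row:
            if not -128 <= v <= 127:
                raise ValueError(f"value {v} is outside the int8 range")


def tiled_matmul(a, b, tile=2):
    """Compute C = A @ B tile by tile with explicit multiply-accumulates.

    Each (i0, j0) output tile is what a hypothetical tile x tile MAC array
    would produce in parallel; here the loops simply run sequentially.
    """
    check_int8(a)
    check_int8(b)
    n, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions do not match")
    m = len(b[0])
    c = [[0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            # One output tile: on an accelerator these MAC chains run concurrently.
            for i in range(i0, min(i0 + tile, n)):
                for j in range(j0, min(j0 + tile, m)):
                    acc = 0  # wide accumulator (int32 on typical hardware)
                    for p in range(k):
                        acc += a[i][p] * b[p][j]  # one multiply-accumulate
                    c[i][j] = acc
    return c


print(tiled_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

Splitting the output into tiles is what lets a fixed-size array of MAC units be reused for matrices of any shape, and keeping the accumulator wider than the inputs avoids overflow when many 8-bit products are summed.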

As autonomous driving algorithms advanced, Horizon launched the Bernoulli 2.0 and Bayes 2.0 architectures in 2020 and 2021, respectively. At the microarchitectural level, these BPU generations improved energy efficiency by 10% to 15% by optimizing pipeline depth, widening the vector units, and enhancing the on-chip cache design, which raised computational output under the same power constraints. This stage of evolution aimed chiefly at raising the frame rate of ADAS algorithms and supporting higher-precision object detection, so that the system could stay real-time and within its power budget while fusing data from multiple cameras and radars.

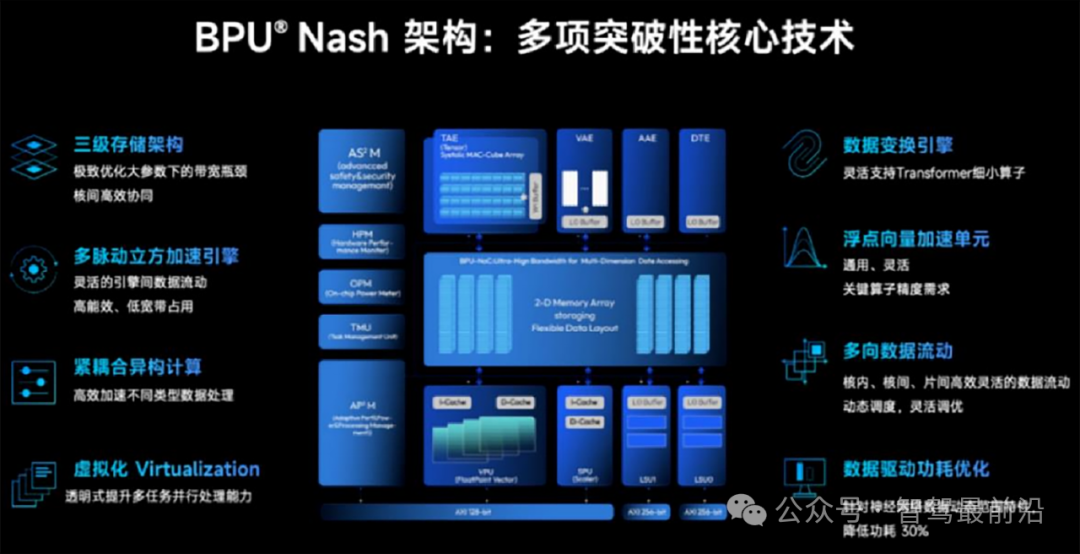

The real leap came with the 'Nash' architecture released in 2023, which co-optimized hardware and software for large-parameter Transformer models. On the hardware side, it upgraded the multiply-accumulate units from 16×16 to 32×32 and introduced a sparse tensor processing engine to execute Sparse4D algorithms efficiently. On the software side, it was developed in close collaboration with the compiler and performance analyzer, overlapping data loading with computation through hardware-level instruction prediction and algorithm-level pipeline fusion. Reported results show that, compared with the first-generation BPU, overall computing performance rose roughly 246-fold and Transformer inference sped up by more than 27 times. Both figures exceed the roughly 16-fold gain that Moore's Law (a doubling every 18 months) would deliver, widening the performance lead of domestic automotive-grade AI chips over general-purpose solutions.
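The Moore's Law comparison is easy to check with a little arithmetic. If performance doubles every 18 months, the growth factor after t months is 2^(t/18), so a 16-fold gain corresponds to four doublings, or 72 months. The sketch below (the helper names are hypothetical) computes this and also estimates how long steady doubling would take to reach the 246-fold and 27-fold figures quoted above.

```python
import math


def moores_law_factor(months: float, doubling_months: float = 18.0) -> float:
    """Growth factor after `months` if performance doubles every `doubling_months`."""
    return 2.0 ** (months / doubling_months)


def months_to_reach(factor: float, doubling_months: float = 18.0) -> float:
    """Months of steady doubling needed to reach a given speedup factor."""
    return doubling_months * math.log2(factor)


print(moores_law_factor(72))  # four doublings at 18 months each
for speedup in (27, 246):
    years = months_to_reach(speedup) / 12
    print(f"{speedup}x needs about {years:.1f} years of doubling every 18 months")
```

Run as-is, it prints 16.0 and shows that 27-fold and 246-fold gains would need roughly 7.1 and 11.9 years of Moore's Law scaling, respectively.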

BPU Dedicated Intelligent Computing Architecture

Evolution Route of Horizon's Automotive Intelligent Computing Solutions

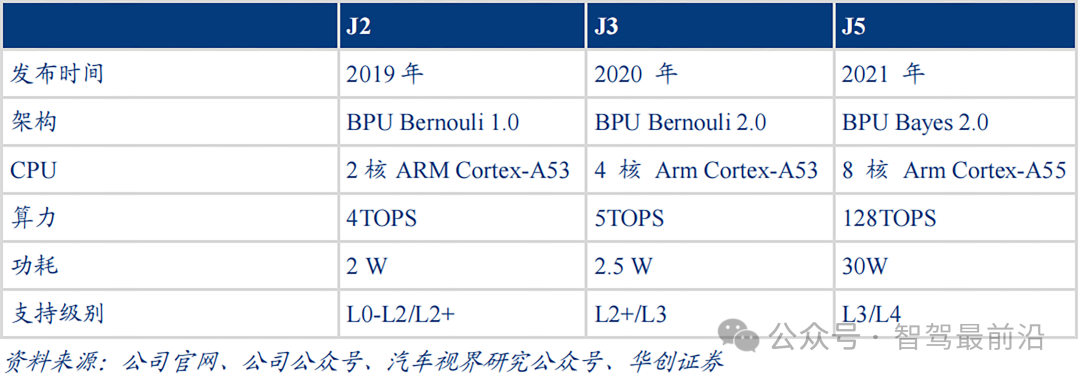

Alongside the BPU's evolution, Horizon built a tiered family of 'Journey' automotive intelligent computing solutions on this architecture, covering needs from basic ADAS to high-level autonomous driving. Journey 2, launched in 2019, offered 4 TOPS of computing power at 2 W, serving active-safety and ADAS applications at levels L0 to L2. Journey 3, introduced in 2020, raised computing power to 5 TOPS and supports L2+ and preliminary L3 functions. Journey 5, unveiled in 2021, jumped to 128 TOPS within a 30 W power envelope, enabling real-time processing of high-resolution multi-camera and millimeter-wave radar data on mass-production models such as the Li Auto L8 and ushering in the era of high-compute domestic intelligent driving.
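Peak computing power and power draw are commonly combined into an energy-efficiency figure in TOPS per watt. A minimal sketch using only the numbers quoted above (Journey 3 is omitted because no power figure is given for it); keep in mind that vendor peak TOPS are not the same as sustained throughput on a real network.

```python
# (peak TOPS, power in watts) as quoted in the article
CHIPS = {
    "Journey 2": (4.0, 2.0),
    "Journey 5": (128.0, 30.0),
}


def tops_per_watt(tops: float, watts: float) -> float:
    """Energy efficiency as peak TOPS divided by power draw."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return tops / watts


for name, (tops, watts) in CHIPS.items():
    print(f"{name}: {tops_per_watt(tops, watts):.2f} TOPS/W")
```

This prints 2.00 TOPS/W for Journey 2 and 4.27 TOPS/W for Journey 5: a 32-fold increase in peak compute for a 15-fold increase in power, so efficiency roughly doubles between the two generations.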

Journey 2, Journey 3, Journey 5 Chip Overview

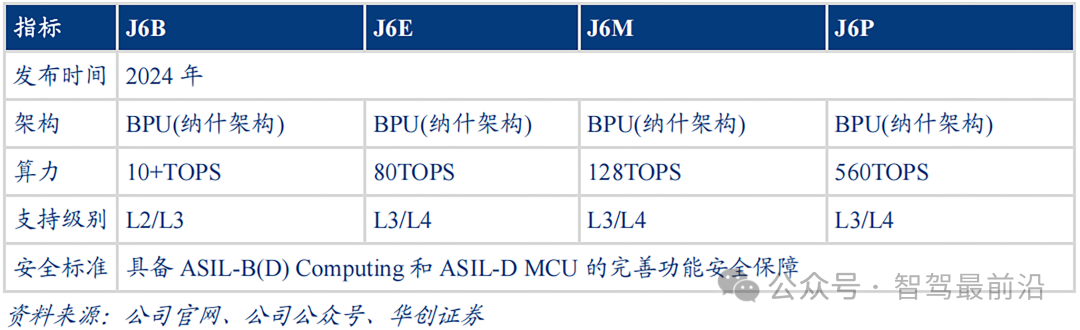

In April 2024, Horizon introduced the Journey 6 series, spanning 10 TOPS to 560 TOPS and built entirely on the Nash architecture. Beyond a large performance jump over its predecessors, its platform design and functional-safety provisions (ASIL B/D compliance, with an ASIL D MCU) cover low-, mid-, and high-level intelligent driving scenarios within one family. OEMs can therefore integrate quickly and carry out differentiated feature development and engineering using a single set of software, hardware, and toolchains.

Journey 6 Series Chips

Horizon's Autonomous Driving Technology Solution

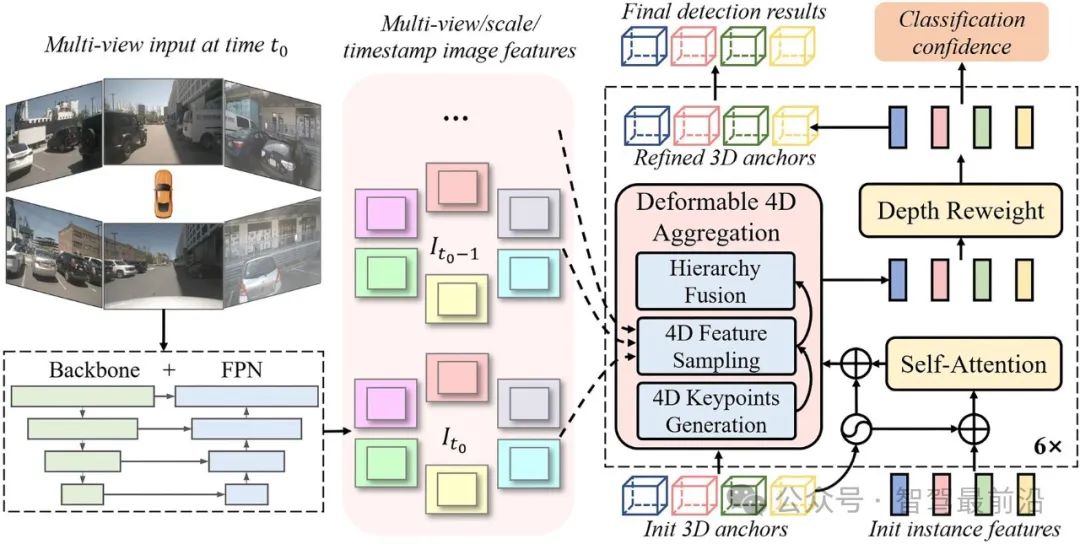

Since proposing an end-to-end evolution path for autonomous driving perception in 2016, Horizon has stayed at the forefront of perception, prediction, planning, and control. In 2022 it introduced Sparse4D, a sparse end-to-end perception algorithm that fuses multi-view, multi-frame camera images through sparse spatio-temporal feature sampling instead of dense feature grids; this improves detection accuracy for small targets and distant obstacles while reducing computational load. The UniAD large model followed in 2023 and won the Best Paper Award at CVPR. UniAD integrates perception, prediction, planning, and control in a single model and uses an interactive game mechanism to model decision-making among multiple vehicles and pedestrians, enabling efficient, safe passage through complex intersections and lane changes. On the BPU Nash architecture, this model completes full-scenario inference in under 50 ms, meeting the stringent real-time requirements of high-level autonomous driving.
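The idea behind sparse perception methods such as Sparse4D is to gather image features only at a handful of 3D keypoints per candidate object, rather than computing a dense bird's-eye-view grid. The sketch below is heavily simplified and hypothetical: one pinhole camera, a single-channel feature map, fixed fusion weights, and no temporal frames, whereas the published method uses multiple views, multi-scale features, and learned weights. It shows only the project-then-bilinearly-sample step.

```python
from dataclasses import dataclass


@dataclass
class PinholeCamera:
    """Hypothetical pinhole intrinsics; points are in camera coordinates, z forward."""
    fx: float
    fy: float
    cx: float
    cy: float

    def project(self, x, y, z):
        """Project a 3D point to pixel coordinates (u, v), or None if behind the camera."""
        if z <= 0:
            return None
        return (self.fx * x / z + self.cx, self.fy * y / z + self.cy)


def bilinear_sample(fmap, u, v):
    """Bilinearly interpolate a 2D feature map (list of rows) at continuous (u, v)."""
    h, w = len(fmap), len(fmap[0])
    if not (0 <= u <= w - 1 and 0 <= v <= h - 1):
        return 0.0  # outside the image: this keypoint contributes nothing
    u0, v0 = int(u), int(v)
    u1, v1 = min(u0 + 1, w - 1), min(v0 + 1, h - 1)
    du, dv = u - u0, v - v0
    top = fmap[v0][u0] * (1 - du) + fmap[v0][u1] * du
    bottom = fmap[v1][u0] * (1 - du) + fmap[v1][u1] * du
    return top * (1 - dv) + bottom * dv


def aggregate_anchor(keypoints, cam, fmap, weights):
    """Weighted sum of features sampled at each projected keypoint of one anchor."""
    total = 0.0
    for (x, y, z), w in zip(keypoints, weights):
        uv = cam.project(x, y, z)
        if uv is not None:
            total += w * bilinear_sample(fmap, *uv)
    return total


fmap = [[u + 10 * v for u in range(4)] for v in range(4)]  # toy 4x4 feature map
cam = PinholeCamera(fx=1.0, fy=1.0, cx=0.0, cy=0.0)
keypoints = [(1.0, 2.0, 1.0), (1.5, 1.0, 1.0), (0.0, 0.0, -1.0)]
print(aggregate_anchor(keypoints, cam, fmap, weights=[0.5, 0.5, 1.0]))
```

Because each anchor touches only a few pixels, the cost grows with the number of anchors and keypoints rather than with image resolution, which is where the computational savings of sparse perception come from.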

Sparse4D Algorithm Framework Diagram

To turn these algorithms quickly into mass-production features, Horizon has also built a complete technology stack from algorithm development through software deployment. Its core tools include the 'TianGongKaiWu' algorithm development toolchain, which combines a modular algorithm library, a visual debugging interface, and an automated testing framework so that algorithm engineers can go from writing code to verifying it on BPU hardware in minutes; the 'TaGe' embedded middleware, which provides automotive-grade operating system interfaces, sensor abstraction layers, and security monitoring services to speed up vehicle integration and functional verification; and the 'AIDI' software platform, which handles model management, data annotation, OTA upgrades, and version control to keep algorithm iteration efficient and production controllable. Together, this toolchain lowers the barrier to development and lets OEMs and Tier 1 suppliers go from algorithm verification to on-road vehicle testing within six months, significantly accelerating the commercialization of new features.

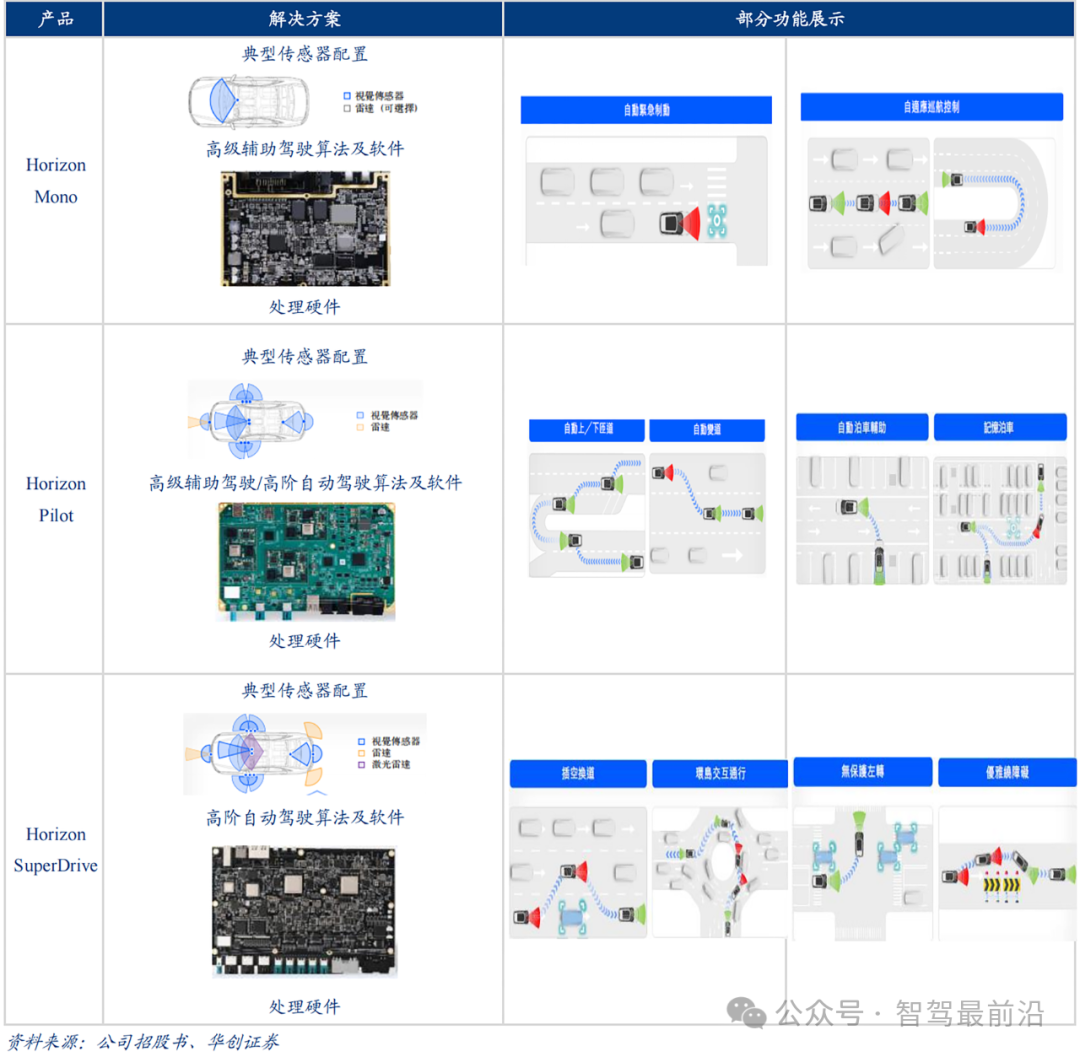

At the product level, Horizon offers three tiers of solutions: Horizon Mono, Pilot, and SuperDrive. Mono, built on a monocular camera with a Journey 2 or Journey 3 chip, has run reliably in basic assistance scenarios such as AEB, ACC, and traffic jam assist since entering mass production in 2021. Pilot adds millimeter-wave radar and automated parking on Journey 3 or Journey 5 chips; in mass production on multiple models since 2022, it can drive more than 200 km without driver takeover in typical highway scenarios. For the most challenging complex urban roads, SuperDrive deeply fuses cameras, radars, and LiDARs on Journey 6 series chips and is planned for mass production in 2026, aiming for smooth, human-like driving in all weather and across many scenarios.

Different Intelligent Driving Solutions

As of 2024, more than 20 OEMs have adopted the Journey 6 series as a platform, including domestic brands, emerging EV makers ("new forces"), and joint ventures, and in 2025 it is expected to power more than 100 mid- to high-level intelligent driving models. Its platform design lets OEMs develop and deploy low-, mid-, and high-level scenarios with a single set of software, hardware, and toolchains, significantly shortening project cycles and reducing engineering costs. At the same time, Horizon is working closely with ecosystem partners on perception algorithms, map fusion, and simulation testing to build an open and efficient intelligent driving ecosystem.

As regulations for L3 and L4 autonomous driving gradually open up and business models mature, intelligent driving systems will face stricter safety and reliability requirements. Horizon's next technical priorities include further compressing the size and inference latency of large models to meet millisecond-level automotive-grade deployment needs; exploring best practices for pure-vision and multi-sensor fusion approaches to make LiDAR-free solutions more robust in complex urban scenarios; strengthening multi-sensor redundancy and fault self-diagnosis to build a more stringent functional-safety closed loop; and continuing software-hardware co-innovation within an open ecosystem to deliver safer, more efficient, and more intelligent mobility to the industry. Through these technical and industrialization efforts, Horizon Robotics is moving from 'follower' to 'leader' and helping China's intelligent driving industry leap forward on the global stage.

-- END --