Google TPU: Ironwood Revolutionizes AI Inference

04/17 2025

Produced by Zhineng Zhixin

At the 2025 Google Cloud Next conference, Google unveiled its seventh-generation Tensor Processing Unit (TPU), codenamed 'Ironwood'.

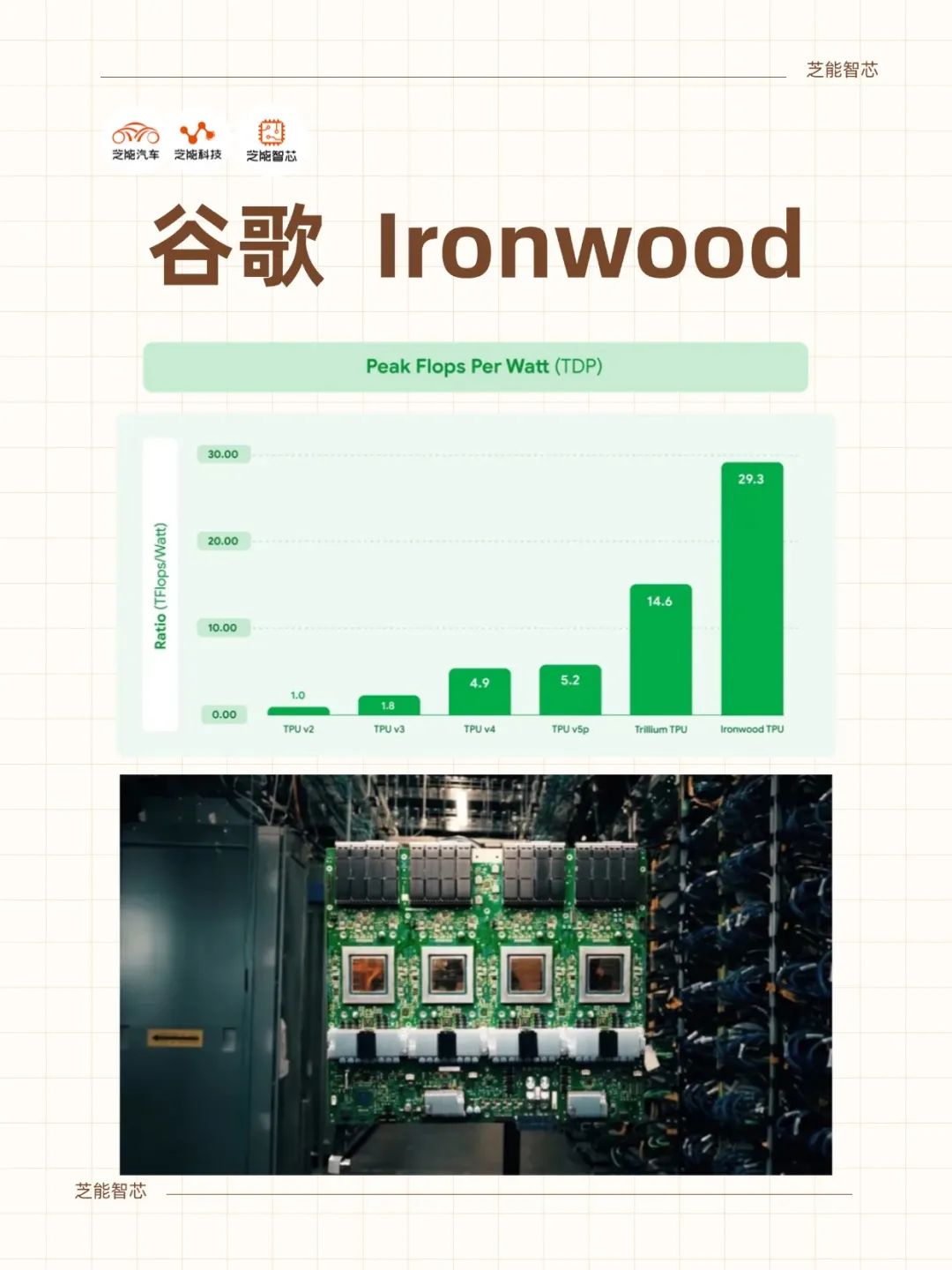

As Google's most powerful AI chip to date, Ironwood is tailored for AI inference tasks, marking a transition from traditional 'reactive' models to 'proactive' agents in AI technology. Compared with Google's first Cloud TPU from 2018, Ironwood boasts a 3600x improvement in inference performance and a 29x increase in efficiency.

Each chip comes equipped with 192GB of high-bandwidth memory (HBM), delivering a peak computing power of 4614 TFLOPs and supporting a bidirectional inter-chip interconnect (ICI) bandwidth of 1.2TBps.

Compared to its predecessor, Trillium, Ironwood offers double the energy efficiency. A cluster of 9216 chips at maximum configuration delivers a total computing power of 42.5 Exaflops, which Google puts at over 24 times that of El Capitan, the world's largest supercomputer. Ironwood is expected to be available to customers via Google Cloud later in 2025.

Part 1

Ironwood's Technical Architecture and Innovations

Built using a 5-nanometer process, Ironwood is Google's seventh-generation TPU, setting a new benchmark in AI chip hardware specifications.

Each chip features 192GB of HBM, offering a peak computing power of 4614 TFLOPs and enabling efficient distributed computing via a bidirectional ICI bandwidth of 1.2TBps.

Compared to Trillium, Ironwood significantly enhances memory capacity, computing power, and communication capabilities, laying a solid foundation for handling large-scale AI workloads.

● High-Bandwidth Memory (HBM): Ironwood's HBM capacity stands at 192GB, six times that of Trillium (32GB). This substantial increase alleviates data transmission bottlenecks, enabling the chip to manage larger models and datasets simultaneously.

For large language models (LLMs) or mixture-of-experts (MoE) models requiring frequent memory access, Ironwood's HBM is crucial. Additionally, its HBM bandwidth of 7.2TBps is 4.5 times that of Trillium, ensuring rapid data access to meet the memory-intensive demands of modern AI tasks.

● Peak Computing Power: With a single-chip peak computing power of 4614 TFLOPs, Ironwood excels in performing large-scale tensor operations. This level of computing power supports complex AI model training and inference tasks, such as ultra-large LLMs or advanced inference applications. Trillium's single-chip computing power is far lower, which underscores the size of the improvement.

● Inter-Chip Interconnect (ICI) Bandwidth: Ironwood's bidirectional ICI bandwidth reaches 1.2TBps, 1.5 times that of Trillium. The high-speed ICI network ensures low-latency communication between chips, enabling efficient synchronization in multi-TPU coordination. This design is particularly suited for ultra-large-scale clusters, such as a TPU Pod configuration with 9216 chips, which needs it to make full use of the pod's total computing power of 42.5 Exaflops.
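The three specifications above can be related to one another with a little arithmetic. The sketch below is illustrative only: it backs out the Trillium figures implied by the quoted ratios and computes a simple roofline "ridge point" (the number of operations per byte of HBM traffic at which a kernel stops being limited by memory bandwidth). The function names are hypothetical, the bandwidth figures are treated as terabytes per second, and decimal units (1 TB = 1000 GB) are assumed.

```python
# Per-chip Ironwood figures as quoted in the article.
PEAK_TFLOPS = 4614       # peak compute, TFLOPs
HBM_CAPACITY_GB = 192    # HBM capacity, GB
HBM_BW_TBPS = 7.2        # HBM bandwidth, TB/s
ICI_BW_TBPS = 1.2        # ICI bandwidth, assumed here to be TB/s


def implied_predecessor(value, ratio):
    """Back out the predecessor's figure from a quoted 'N times' ratio."""
    return value / ratio


def ridge_point_flops_per_byte(peak_tflops, hbm_bw_tbps):
    """Roofline ridge point: operations per HBM byte needed to be compute-bound."""
    return (peak_tflops * 1e12) / (hbm_bw_tbps * 1e12)


def full_hbm_sweep_ms(capacity_gb, hbm_bw_tbps):
    """Time to read the whole HBM once at peak bandwidth, in milliseconds."""
    seconds = capacity_gb / (hbm_bw_tbps * 1000)  # TB/s -> GB/s
    return seconds * 1000


print(f"Implied Trillium HBM capacity:  {implied_predecessor(HBM_CAPACITY_GB, 6):.0f} GB")
print(f"Implied Trillium HBM bandwidth: {implied_predecessor(HBM_BW_TBPS, 4.5):.1f} TB/s")
print(f"Implied Trillium ICI bandwidth: {implied_predecessor(ICI_BW_TBPS, 1.5):.1f} TB/s")
print(f"Ridge point: {ridge_point_flops_per_byte(PEAK_TFLOPS, HBM_BW_TBPS):.0f} FLOPs/byte")
print(f"Full HBM sweep: {full_hbm_sweep_ms(HBM_CAPACITY_GB, HBM_BW_TBPS):.1f} ms")
```

The ridge point is a peak-to-peak ratio, so real workloads will land below it. It mainly shows why memory bandwidth matters as much as raw compute for memory-intensive inference.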

● Amidst the escalating global demand for AI computing power, energy efficiency has become a pivotal consideration in AI chip design.

◎ Thanks to Google's innovations in chip design and cooling technology, Ironwood offers double the performance per watt compared to Trillium and nearly 30 times the overall energy efficiency of the first cloud TPU in 2018.

◎ Through optimized architectural design, Ironwood minimizes energy consumption while maintaining high-performance output.

◎ In today's data centers with strained power supplies, this feature offers customers a more cost-effective AI computing solution. For instance, at double the performance per watt, Ironwood needs roughly half the energy of Trillium to complete an AI task of the same scale, significantly reducing operational costs.

◎ To address the challenges of high power density, Ironwood employs advanced liquid cooling solutions. According to Google, liquid cooling can reliably sustain up to twice the performance of standard air cooling, keeping the chip running efficiently under sustained high loads.

This design not only extends hardware lifespan but also supports reliable operation of ultra-large-scale clusters, such as a TPU Pod with 9216 chips consuming nearly 10 megawatts of power.
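The energy figures above lend themselves to a quick back-of-the-envelope check. The sketch below is an estimate under stated assumptions, not a measurement: it rounds "nearly 10 megawatts" up to 10 MW (so the per-chip share is an upper bound and the efficiency a lower bound), uses peak rather than sustained throughput, and treats the pod power figure as covering whatever Google included in it. The function names are hypothetical.

```python
CHIPS_PER_POD = 9216
PEAK_TFLOPS_PER_CHIP = 4614
POD_POWER_MW = 10.0  # "nearly 10 megawatts", rounded up (assumption)


def pod_power_per_chip_kw(pod_power_mw, chips):
    """Average share of pod power per chip, in kilowatts."""
    return pod_power_mw * 1000 / chips


def peak_tflops_per_watt(tflops_per_chip, chips, pod_power_mw):
    """Peak pod throughput divided by pod power, in TFLOPs per watt."""
    return tflops_per_chip * chips / (pod_power_mw * 1e6)


def relative_energy(perf_per_watt_gain):
    """Energy for a fixed amount of work, relative to the baseline chip."""
    return 1.0 / perf_per_watt_gain


print(f"Power share per chip: {pod_power_per_chip_kw(POD_POWER_MW, CHIPS_PER_POD):.2f} kW")
print(f"Peak efficiency: "
      f"{peak_tflops_per_watt(PEAK_TFLOPS_PER_CHIP, CHIPS_PER_POD, POD_POWER_MW):.2f} TFLOPs/W")
print(f"Energy vs. Trillium for the same work: {relative_energy(2.0):.0%}")
```

The last line is simply the reciprocal of the quoted 2x performance-per-watt gain, which is where the "half the energy" reading comes from.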

● Ironwood pairs an enhanced version of SparseCore with Google's proprietary Pathways software stack, broadening its applicability across diverse AI tasks.

◎ SparseCore is a dedicated accelerator for handling ultra-large embedding tasks, such as sparse matrix operations in advanced ranking and recommendation systems.

Ironwood's SparseCore has been expanded compared to its predecessor, supporting a broader range of workloads, including financial modeling and scientific computing. By accelerating sparse operations, SparseCore significantly boosts Ironwood's efficiency in specific scenarios.

◎ Pathways is a machine learning runtime developed by Google DeepMind, enabling efficient distributed computing across multiple TPU chips.

Through Pathways, developers can effortlessly leverage the combined computing power of thousands or even tens of thousands of Ironwood chips, simplifying the deployment of ultra-large-scale AI models. The synergistic optimization of this software stack with Ironwood hardware ensures efficient resource allocation and seamless task execution.
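To illustrate why embedding workloads count as sparse operations, the toy sketch below computes the same pooled embedding two ways: as a dense product that touches every row of the table, and as a gather that reads only the rows actually referenced. It is plain Python written for illustration and says nothing about how SparseCore is implemented. The table size, the IDs, and the function names are all hypothetical.

```python
def dense_lookup(table, ids):
    """Multi-hot vector times the full table: visits every row."""
    vocab, dim = len(table), len(table[0])
    multi_hot = [0] * vocab
    for i in ids:
        multi_hot[i] += 1
    out = [0.0] * dim
    for row in range(vocab):
        for d in range(dim):
            out[d] += multi_hot[row] * table[row][d]
    return out


def sparse_lookup(table, ids):
    """Gather only the referenced rows and sum them."""
    dim = len(table[0])
    out = [0.0] * dim
    for i in ids:
        for d in range(dim):
            out[d] += table[i][d]
    return out


# Hypothetical 1000-row table with 4-dimensional embeddings.
table = [[float(r * 10 + d) for d in range(4)] for r in range(1000)]
ids = [3, 42, 42, 999]  # a repeated ID is counted twice in both versions

dense = dense_lookup(table, ids)
sparse = sparse_lookup(table, ids)
print("results match:", dense == sparse)
print("rows read (dense): ", len(table))
print("rows read (sparse):", len(ids))
```

Both versions return the same vector, but the sparse one reads 4 rows instead of 1000. With embedding tables that have millions or billions of rows, that gap is the reason recommendation workloads benefit from hardware built around gather-style access.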

Part 2

Ironwood's Performance Advantages and Application Scenarios

Ironwood's performance gains are substantial. Compared with Google's first Cloud TPU from 2018, it offers a 3600x improvement in inference performance and a 29x increase in efficiency.

Compared to Trillium, Ironwood doubles energy efficiency and significantly increases memory capacity and bandwidth. A cluster of 9216 chips at maximum configuration delivers a total computing power of 42.5 Exaflops, far surpassing the 1.7 Exaflops of the world's largest supercomputer, El Capitan.

Ironwood's single-chip computing power of 4614 TFLOPs is ample for handling complex AI tasks, and the total computing power of 42.5 Exaflops from a cluster of 9216 chips is unprecedented.

In comparison, El Capitan's 1.7 Exaflops appear insignificant. This computing power advantage enables Ironwood to effortlessly handle ultra-large LLMs, MoE models, and other computationally intensive AI applications.
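The cluster figure and the comparison with El Capitan can be reproduced from the per-chip number. The short check below uses only values quoted in the article, and the helper name is hypothetical. One caveat: the article does not state which numeric precision either figure refers to. Peak figures for AI accelerators are usually quoted at low precision, while supercomputer rankings use 64-bit arithmetic, so the ratio compares headline numbers rather than like-for-like performance.

```python
CHIPS = 9216
TFLOPS_PER_CHIP = 4614
EL_CAPITAN_EXAFLOPS = 1.7  # figure quoted in the article


def pod_exaflops(chips, tflops_per_chip):
    """Aggregate peak compute in exaflops (1 exaflop = 1e6 TFLOPs)."""
    return chips * tflops_per_chip / 1e6


pod = pod_exaflops(CHIPS, TFLOPS_PER_CHIP)
ratio = pod / EL_CAPITAN_EXAFLOPS

print(f"Pod peak compute: {pod:.1f} Exaflops")
print(f"Ratio to El Capitan: {ratio:.1f}x")
```

The product comes out at about 42.5 Exaflops and the ratio at about 25, consistent with the "over 24 times" claim.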

In an era where AI computing power is a scarce resource, Ironwood's high energy efficiency is particularly crucial. With double the performance per watt compared to Trillium, it provides more computing power under the same power consumption. This feature not only reduces operating costs but also aligns with the global push for green computing.

● Ironwood is designed for the shift from 'reactive' AI, which merely responds to commands, to 'proactive' AI, in which models actively generate insights. This paradigm shift broadens Ironwood's application scenarios.

◎ Ironwood's high computing power and large memory make it an ideal platform for running LLMs. For example, cutting-edge models like Google's Gemini 2.5 can achieve efficient training and inference on Ironwood, supporting high-speed execution of natural language processing tasks.

◎ MoE models require robust parallel computing capabilities due to their modular design. Ironwood's ICI network and high-bandwidth memory can coordinate the computation of large-scale MoE models, enhancing model accuracy and response speed, making them suitable for scenarios requiring dynamic adjustments.

◎ In fields such as financial risk control and medical diagnosis, Ironwood supports real-time decision-making and prediction. Its powerful inference capabilities enable rapid analysis of complex datasets, generating high-precision insights to provide crucial support for users.

◎ The enhanced SparseCore enables Ironwood to excel in handling recommendation tasks with ultra-large embeddings. For instance, in e-commerce or content platforms, Ironwood can improve the quality and speed of personalized recommendations.

Google offers two TPU Pod configurations (256 and 9216 chips) with Ironwood, providing customers with flexible AI computing resources. This strategic layout enhances Google Cloud's competitiveness in the AI infrastructure domain.

Ironwood will be available through Google Cloud later this year, catering to diverse needs ranging from small-scale AI tasks to ultra-large-scale model training. The 256-chip configuration is tailored for small and medium-sized enterprises, while the 9216-chip cluster targets customers requiring extreme computing power.
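As a rough guide to what the two configurations provide, the sketch below aggregates the per-chip figures for each pod size and estimates how many chips are needed just to hold a model's weights in HBM. It is a sizing sketch under stated assumptions: decimal units (1 TB = 1000 GB), weights only (no activations, caches, or replication), and a hypothetical model of 1000 billion parameters at one byte per parameter. `PodConfig` and `min_chips_for_weights` are illustrative names, not part of any Google API.

```python
import math
from dataclasses import dataclass

TFLOPS_PER_CHIP = 4614
HBM_GB_PER_CHIP = 192


@dataclass(frozen=True)
class PodConfig:
    chips: int

    def peak_exaflops(self):
        """Aggregate peak compute in exaflops (1 exaflop = 1e6 TFLOPs)."""
        return self.chips * TFLOPS_PER_CHIP / 1e6

    def hbm_terabytes(self):
        """Aggregate HBM capacity in terabytes (decimal units)."""
        return self.chips * HBM_GB_PER_CHIP / 1000


def min_chips_for_weights(params_billion, bytes_per_param):
    """Lower bound on chips needed to hold the weights alone in HBM."""
    weights_gb = params_billion * bytes_per_param  # 1e9 params * bytes = GB
    return math.ceil(weights_gb / HBM_GB_PER_CHIP)


for pod in (PodConfig(256), PodConfig(9216)):
    print(f"{pod.chips:>5} chips: {pod.peak_exaflops():6.2f} Exaflops peak, "
          f"{pod.hbm_terabytes():8.1f} TB HBM")

# Hypothetical model: 1000 billion parameters stored at 1 byte each.
print("Chips needed for weights alone:", min_chips_for_weights(1000, 1))
```

Under these assumptions even the 256-chip pod has far more HBM than the weights of such a model require. In practice the choice between configurations depends on throughput, batch size, and serving latency rather than on capacity alone.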

Google Cloud's AI supercomputer architecture optimizes the integration of Ironwood with tools like Pathways, lowering the barriers to entry for developers.

Through this ecosystem, Google provides not only hardware but also a comprehensive platform for AI innovation.

Summary

As Google's seventh-generation TPU, Ironwood heralds a new era of AI inference with its exceptional hardware specifications and innovative design. With 192GB of HBM capacity, a single-chip computing power of 4614 TFLOPs, and a cluster performance of 42.5 Exaflops, Ironwood leads the pack in computing power, memory, and communication capabilities.

The enhanced SparseCore and Pathways software stack further expand its application range, from LLMs to recommendation systems and financial and scientific computing, showcasing Ironwood's flexibility. More importantly, its doubled energy efficiency relative to Trillium and its advanced liquid cooling technology set a new benchmark for sustainable AI computing.