GPT-5 Preview: Diving into OpenAI's Most Advanced Model, o3: What Sets It Apart?

04/18 2025

After experiencing o3, my anticipation for GPT-5 has only grown.

OpenAI has been on a roll lately. After the native image generation feature of GPT-4o captured global attention in late March, OpenAI unveiled the new GPT-4.1 series on April 15, Beijing time, superseding the earlier GPT-4 models. And just this morning (April 17), OpenAI kept its promise by introducing two new o series reasoning models, o3 and o4-mini, which replace the earlier o1 and o3-mini. Critically, o3 and o4-mini not only reason better and can bring images directly into the "thinking process," but they are also the first reasoning models able to use all ChatGPT tools on their own. OpenAI describes them as:

"The most intelligent models we (OpenAI) have released to date, marking a significant advancement in ChatGPT capabilities."

Whether this truly represents a leap forward remains to be seen, but one point stands out. In February this year, OpenAI CEO Sam Altman publicly shared the company's internal model roadmap on X, stating that GPT-4.5 (Orion) would be "OpenAI's last non-reasoning (chain-of-thought) model," and that the subsequent GPT-5 would integrate the GPT and o series models:

"o3 will no longer be launched as an independent model."

Image/ X

However, it appears none of Sam Altman's promises have materialized. Not only did OpenAI release new GPT-4.1 series non-reasoning models, but it also independently launched the o3 reasoning model. And what about GPT-5, the subject of much speculation? Will we indeed see it this summer?

OpenAI unveils another wave of models, with o3 being the most exceptional.

Prior to the launch of the GPT-4.1 series, o3, and o4-mini, there was considerable criticism about OpenAI's plethora of models, not only from ordinary ChatGPT users but also from developers who found OpenAI's model lineup overwhelming.

Fortunately, despite releasing several new models in the past few days, OpenAI has also phased out some "older models." Following the official launch of GPT-4.1, OpenAI announced that the GPT-4 model would be fully decommissioned from ChatGPT on April 30 and that the GPT-4.5 preview would be deprecated in the API (for developers).

As a general-purpose base model, GPT-4.1 comes in flagship, mini, and nano versions, supports a context of up to 1 million tokens, and outperforms the current GPT-4o series models in performance, cost, and speed. However, it is currently only available to developers via the API.

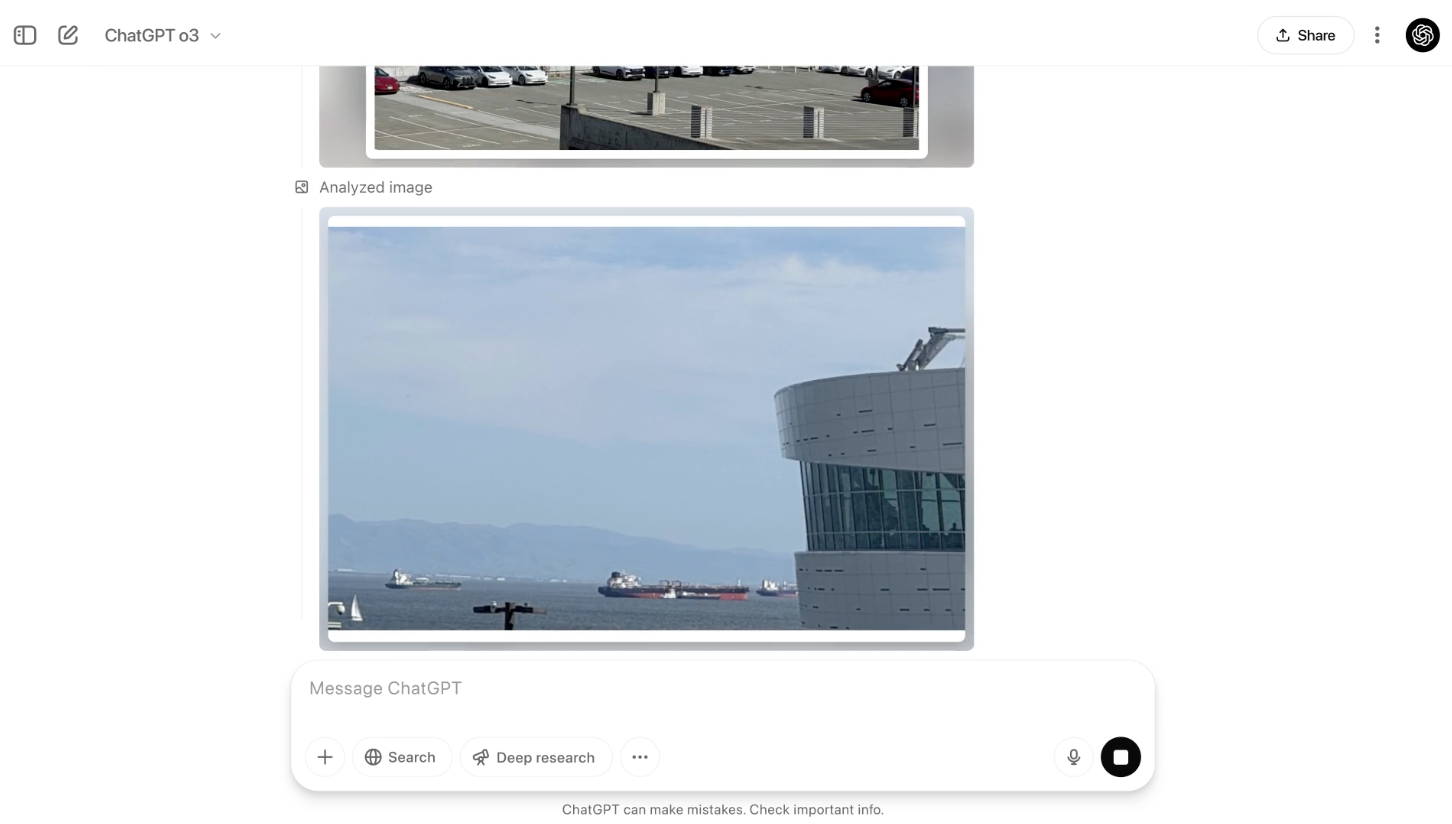

But if GPT-4.1 represents OpenAI's present, o3 and o4-mini may embody its future. As OpenAI's latest achievements in exploring the technological path of reasoning models, o3 and o4-mini are the first to genuinely incorporate image understanding capabilities into the chain of thought.

Image/ OpenAI

Simply put, these models not only recognize the information in an image but also bring that visual input into their thinking, where it becomes part of a complete logical chain. GPT-4o, a multimodal model, can also "see" images, but mainly in order to give natural language responses. In contrast, o3 and o4-mini process images in order to solve problems: the image is an integral part of the reasoning process.

Furthermore, o3, which OpenAI calls its most powerful model, is also one of the first reasoning models able to invoke all ChatGPT tools, including web search, Python code execution, image generation, file reading, and more. At the same latency and cost as o1, o3 delivers higher performance in ChatGPT, according to OpenAI.

However, actual performance can only be judged through hands-on experience.

Hands-on with OpenAI's "most powerful reasoning model": A name well-deserved.

For reasoning models, reasoning ability is naturally paramount. Let's start with an ethical reasoning question, a great test of a model's reasoning prowess. We also compared it with two top models, DeepSeek-R1 and Gemini-2.5-Pro.

But before revealing the answers, it is worth noting that the most impressive aspect of o3 is how rigorous and fluid its thinking process is. In contrast, DeepSeek-R1's thinking process is lengthy and repetitive: it deliberates for a long time yet arrives at an unsatisfactory answer.

Image/ Leitech

DeepSeek-R1's answer only addresses the "reason for anger" but not the "reason for breakdown." In Gemini-2.5-Pro's answer, while it further infers the factor of "the daughter lying," it still fails to adequately explain why the father would break down.

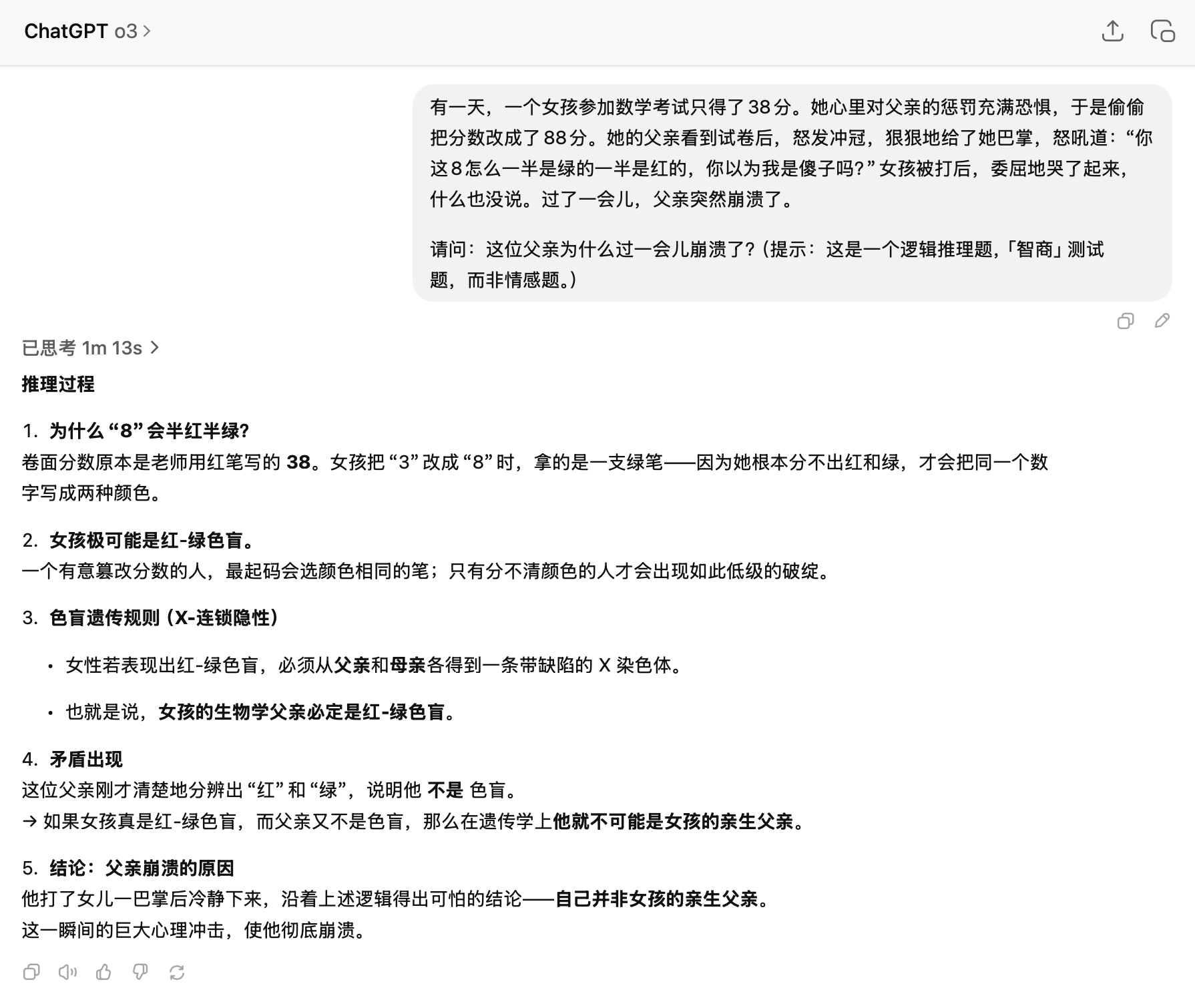

Image/ Leitech

On the other hand, o3 correctly identifies "color blindness" as the key clue and derives the final explanation from its knowledge of genetics. It's worth mentioning that DeepSeek-R1 also raises "color blindness" in its thinking process but fails to reason from it effectively.

Moreover, a major breakthrough of o3 and o4-mini lies in incorporating visual capabilities into the chain of thought, taking a step further compared to Alibaba's previously launched visual reasoning model, QVQ-Max.

You can upload a photo of an airport, and it will recognize the terminal building, passenger flow lines, and signage system, then combine this with your questions to plan a route through the space or diagnose a problem. This design, where "images are not input materials but variables participating in reasoning," is new to the o series reasoning models.

More importantly, it can analyze and devise strategies in real-time based on images.

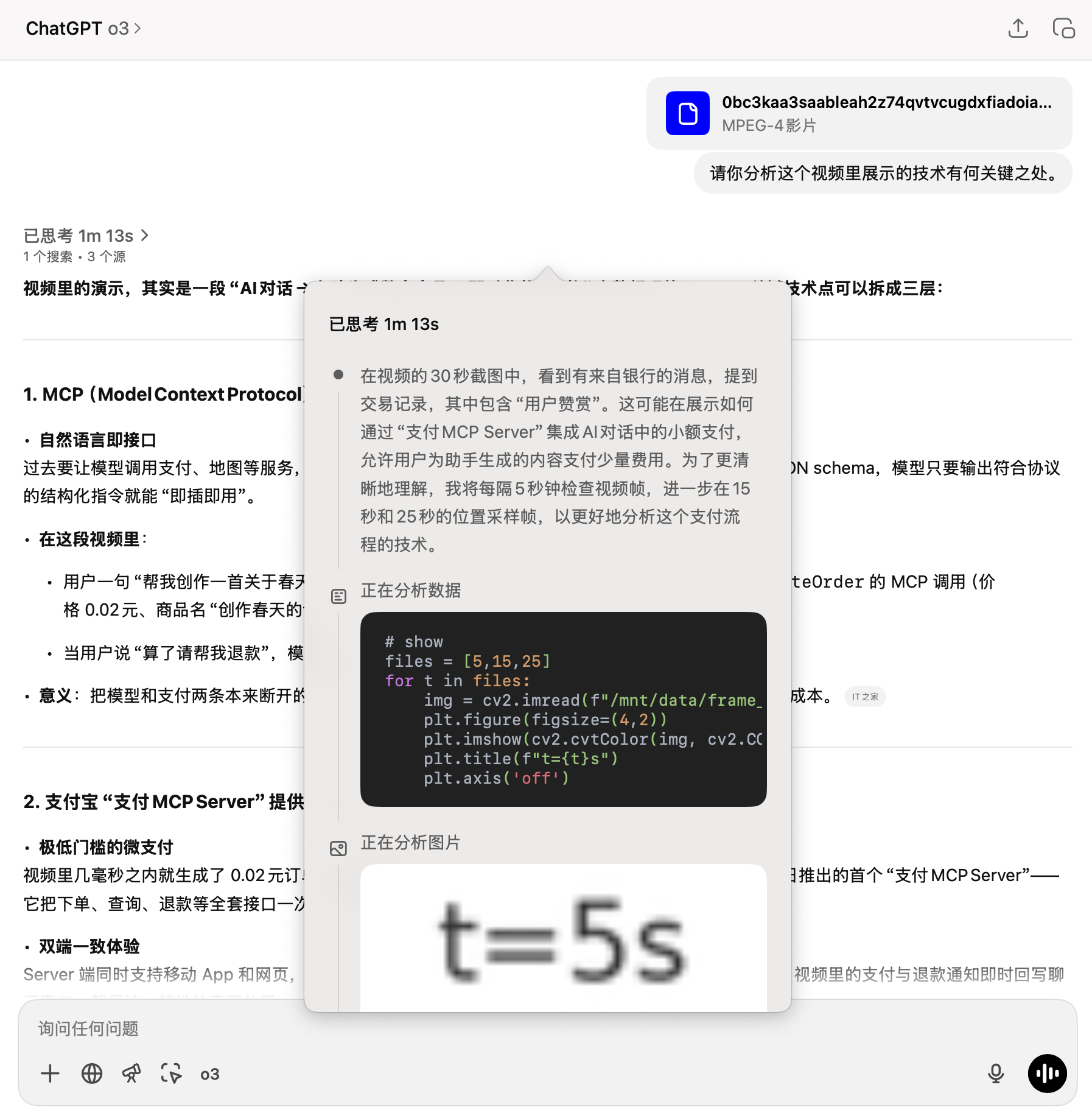

For example, a recent key development in the AI industry is the launch of the payment MCP Server by Alipay in collaboration with the ModelScope community, which gives AI agents the payment step they need for "monetization." I sent a demonstration video to o3 and asked about the "key aspects of the technology demonstrated in the video," without providing any background information.

Image/ Leitech

In actual testing, o3 extracts key frames at different points in the video to understand it, then combines its own analytical reasoning with web search to accurately identify Alipay's "payment MCP Server" and explain its significance based on what it finds.

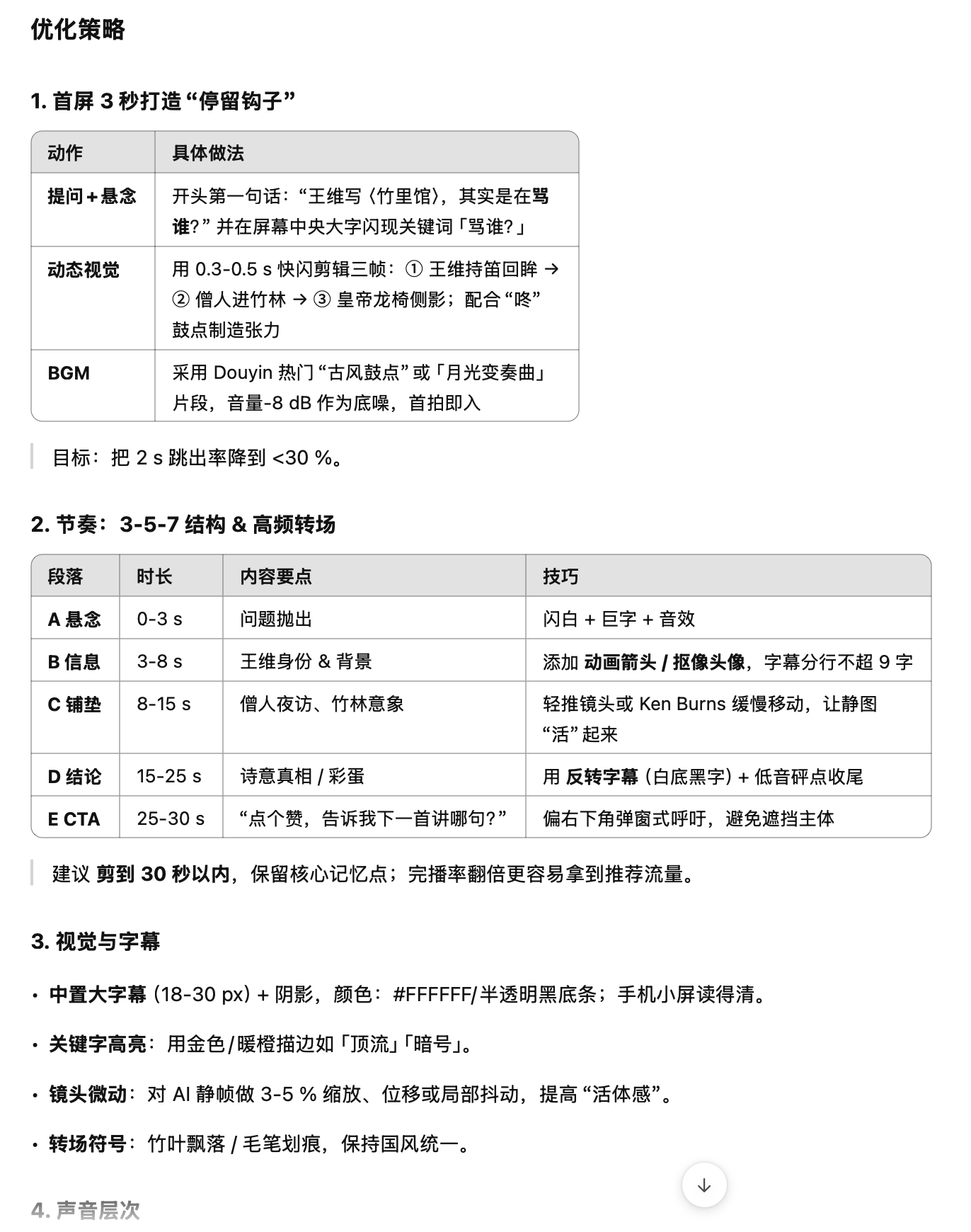
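
To make the idea of "extracting key frames at different times" concrete, here is a minimal Python sketch. It is an illustration only: how o3 actually selects frames is not described here, and the uniform sampling rule and the function name `keyframe_times` are assumptions made for this example.

```python
from typing import List


def keyframe_times(duration_s: float, n_frames: int) -> List[float]:
    """Return n_frames timestamps (in seconds) spread evenly over a video.

    Hypothetical helper: the video is split into n_frames equal segments
    and the midpoint of each segment is used as the sampling time.
    """
    if duration_s <= 0 or n_frames <= 0:
        raise ValueError("duration_s and n_frames must be positive")
    segment = duration_s / n_frames
    # Midpoints avoid the very first and very last frame, which are
    # often title cards or fade-outs.
    return [round(segment * (i + 0.5), 3) for i in range(n_frames)]


if __name__ == "__main__":
    # For a 60-second clip sampled at 4 points:
    print(keyframe_times(60, 4))  # [7.5, 22.5, 37.5, 52.5]
```

Each timestamp would then be used to grab a single frame, which can be passed to an image-capable model alongside the question.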

Additionally, in a test on short video optimization, o3 was asked to analyze backend data and devise optimization strategies based on the video's shots. Its output not only covered core strategies such as "retaining the audience in the first 3 seconds, rhythmic editing, and copywriting hooks" but also paired them with specific suggestions on visual rhythm.

Image/ Leitech

In this regard, o3 resembles a "professional creator assistant" with a certain level of aesthetics and experience, capable of providing tailored suggestions for actual video content rather than "simply applying templates" in an automated manner.

These tests also demonstrate that o3 knows when to analyze, when to search, when to invoke tools, and when to summarize. This "active thinking + execution chain" is a crucial direction for the collective evolution of current large models.
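
The "active thinking + execution chain" pattern can be sketched as a simple loop in Python. This is a toy illustration under stated assumptions, not OpenAI's implementation: the tools `fake_search` and `fake_analyze`, the `choose_action` rule, and the `run_agent` function are all hypothetical, and in a real reasoning model the choice of the next action is made by the model itself rather than by a fixed rule.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AgentState:
    question: str
    notes: List[str] = field(default_factory=list)  # evidence gathered so far


def fake_search(query: str) -> str:
    # Hypothetical stand-in for a web search tool; returns canned text.
    return f"search result for: {query}"


def fake_analyze(text: str) -> str:
    # Hypothetical stand-in for an analysis tool such as code execution.
    return f"analysis of: {text}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "search": fake_search,
    "analyze": fake_analyze,
}


def choose_action(state: AgentState) -> str:
    # Toy policy: search first, analyze what was found, then summarize.
    if not state.notes:
        return "search"
    if len(state.notes) == 1:
        return "analyze"
    return "summarize"


def run_agent(question: str, max_steps: int = 5) -> List[str]:
    """Run the loop and return the sequence of actions that were chosen."""
    state = AgentState(question)
    trace: List[str] = []
    for _ in range(max_steps):
        action = choose_action(state)
        trace.append(action)
        if action == "summarize":
            break  # enough evidence gathered; stop calling tools
        tool_input = state.notes[-1] if state.notes else state.question
        state.notes.append(TOOLS[action](tool_input))
    return trace


if __name__ == "__main__":
    print(run_agent("What does the demo video show?"))
    # ['search', 'analyze', 'summarize']
```

The point of the sketch is the shape of the loop: decide, act, record the result, and decide again until the evidence is sufficient to summarize.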

So, is it truly OpenAI's most intelligent model to date? Based on my testing so far, it lives up to the name and, in the comparisons above, outperformed the other reasoning models.

Final Thoughts

The most striking takeaway after using o3 is that it truly understands the task, not just your intentions. This shows in its thinking process and in the way it combines visual understanding, tool invocation, and powerful reasoning.

And precisely because of this, the imagination surrounding GPT-5 becomes more tangible after using o3.

Sam Altman has clearly stated that GPT-5 will integrate GPT and o series models, and according to the latest information, the launch of GPT-5 is essentially locked in for this summer. Based on the timeline, GPT-4.1/GPT-4.5 and o3/o4-mini are likely to be the last generation of "independent" models and the main force to be "integrated."

If the two are truly integrated, will the result be a unified model that can read up to a million tokens, act across modalities, and autonomously schedule tools within its chain of thought? Either way, this is one of the most anticipated open questions in the AI industry in the coming months.

Source: Leitech