Why LiDAR Remains Indispensable in Autonomous Driving

05/12 2025

RoboSense's 2024 financial report shows total revenue of approximately RMB 1.65 billion, up 47.2% year on year and the third consecutive year of strong growth. LiDAR unit sales rose 109.6% to approximately 544,000 units. With some automakers moving toward pure-vision systems, why is LiDAR still growing this fast, and what makes it so hard to replace in autonomous driving?

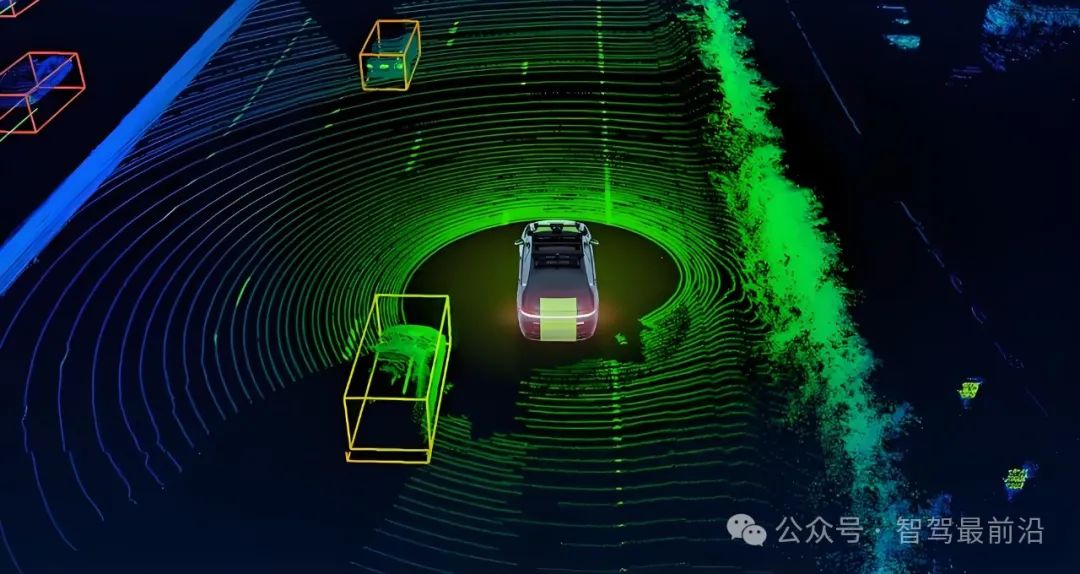

Environmental perception, the "primary sense" of autonomous driving, underpins both vehicle safety and decision-making efficiency. Among perception technologies, LiDAR stands out for its precise distance measurement and its ability to build 3D point clouds, and it plays a leading role in environmental modeling and obstacle recognition within the perception module. Unlike cameras, which capture 2D images, LiDAR emits tens of thousands to hundreds of thousands of laser pulses every second and measures each pulse's round-trip time to compute distance, building a dense 3D point cloud. These point clouds reconstruct the 3D shapes of roads, pedestrians, vehicles, and roadside facilities over a wide detection range, with ranging accuracy that is typically at the centimeter level for automotive units. This gives the system a direct geometric view of its surroundings and a solid foundation for path planning and obstacle avoidance.
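The time-of-flight principle described above reduces to one formula, d = c · t / 2. The following is a minimal sketch of it, together with the conversion from a range and two beam angles to a 3D point. The function names and the axis convention (x forward, y left, z up) are assumptions made for illustration, not any vendor's API.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def tof_to_range(round_trip_s: float) -> float:
    """Range in meters from a round-trip time in seconds: d = c * t / 2."""
    return C * round_trip_s / 2.0


def polar_to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one return (range plus beam angles) to x, y, z in the sensor frame.

    Assumed convention: x forward, y left, z up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the x-y plane
    return (horizontal * math.cos(az),
            horizontal * math.sin(az),
            range_m * math.sin(el))


# A pulse that returns after 1 microsecond has travelled to a target ~150 m away.
r = tof_to_range(1e-6)
point = polar_to_xyz(r, azimuth_deg=0.0, elevation_deg=0.0)

# A 1 ns timing error corresponds to ~15 cm of range error, which is why
# centimeter-level ranging needs sub-nanosecond timing.
range_error_per_ns = tof_to_range(1e-9)
```

Repeating this conversion for every pulse in a scan yields the point cloud that the rest of the perception stack consumes.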

On highways, LiDAR's long-range detection matters most. At high speed, the earlier an obstacle is detected, the more time the vehicle has to react. Automotive LiDAR can detect vehicles and other obstacles at ranges of up to a few hundred meters (small or low-reflectivity hazards such as potholes only at shorter range) and feed them to the decision-making module in real time, leaving time to brake or change lanes. In low light or at night, where cameras struggle, LiDAR keeps ranging reliably because it is an active sensor that supplies its own illumination rather than relying on ambient light, which improves safety in difficult scenarios.
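A rough calculation shows why detection range matters at highway speed. The sketch below computes a stopping distance from simple kinematics. The 120 km/h speed, 0.5 s system latency, 6 m/s² deceleration, and 200 m detection range are all illustrative assumptions, not figures for any specific vehicle or sensor.

```python
def stopping_distance_m(speed_kmh: float, latency_s: float, decel_ms2: float) -> float:
    """Distance covered during system latency plus constant-deceleration braking."""
    v = speed_kmh / 3.6                       # km/h -> m/s
    reaction = v * latency_s                  # travelled before braking starts
    braking = v ** 2 / (2.0 * decel_ms2)      # v^2 / (2a)
    return reaction + braking


# Assumed scenario (hypothetical values, see lead-in)
detection_range_m = 200.0
needed = stopping_distance_m(speed_kmh=120.0, latency_s=0.5, decel_ms2=6.0)
margin = detection_range_m - needed

print(f"stopping distance: {needed:.1f} m, margin: {margin:.1f} m")
```

Under these assumptions the vehicle needs about 109 m to stop, so a sensor that only sees 100 m ahead would leave no margin, while one that sees 200 m leaves roughly 90 m.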

Urban roads are more complex and dynamic, with intersections, pedestrians, bicycles, and other moving obstacles sharing the same space. LiDAR's dense point clouds help algorithms separate ground points from non-ground points, filter out noise caused by road-surface reflections, and extract the contours of pedestrians and vehicles. Combined with deep learning models, this enables object detection and tracking. When paired with high-precision maps, LiDAR also supports precise localization: the system matches the real-time point cloud against a pre-stored 3D model of the environment and corrects the vehicle's pose to centimeter-level accuracy, which keeps driving and path planning stable.
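The map-matching step can be illustrated with the core computation inside registration methods such as ICP: finding the rigid transform that best aligns scan points with map points. The sketch below uses the Kabsch (SVD) solution and assumes point correspondences are already known, which real systems do not have. They estimate correspondences iteratively (ICP) or use distribution-based matching (NDT). The function name and the synthetic data are illustrative.

```python
import numpy as np


def rigid_align(source: np.ndarray, target: np.ndarray):
    """Least-squares R, t such that R @ source[i] + t ~= target[i] (Kabsch method).

    Both arrays are N x 3 and assumed to be in one-to-one correspondence.
    """
    src_c = source.mean(axis=0)
    tgt_c = target.mean(axis=0)
    H = (source - src_c).T @ (target - tgt_c)   # 3 x 3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection so that det(R) = +1
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ src_c
    return R, t


# Synthetic example: the vehicle's true pose is a 5 degree yaw plus a small offset.
yaw = np.radians(5.0)
R_true = np.array([[np.cos(yaw), -np.sin(yaw), 0.0],
                   [np.sin(yaw),  np.cos(yaw), 0.0],
                   [0.0,          0.0,         1.0]])
t_true = np.array([0.30, -0.20, 0.0])

rng = np.random.default_rng(0)
scan = rng.uniform(-20.0, 20.0, size=(200, 3))   # points in the vehicle frame
map_pts = scan @ R_true.T + t_true               # same points in the map frame

R_est, t_est = rigid_align(scan, map_pts)
```

With noise-free correspondences the estimated pose matches the true one to numerical precision. On real data, the achievable accuracy depends on sensor noise, map quality, and how well correspondences are found.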

By contrast, millimeter-wave radar penetrates rain, fog, and dust better than LiDAR but lacks its angular resolution and ranging accuracy, which makes it hard to separate small or similarly shaped targets. Cameras excel at color and texture recognition but cannot measure depth directly and are sensitive to lighting changes and backlighting. LiDAR's high resolution and ranging precision therefore complement cameras and millimeter-wave radar, giving the autonomous driving system a more complete and accurate picture of its environment.

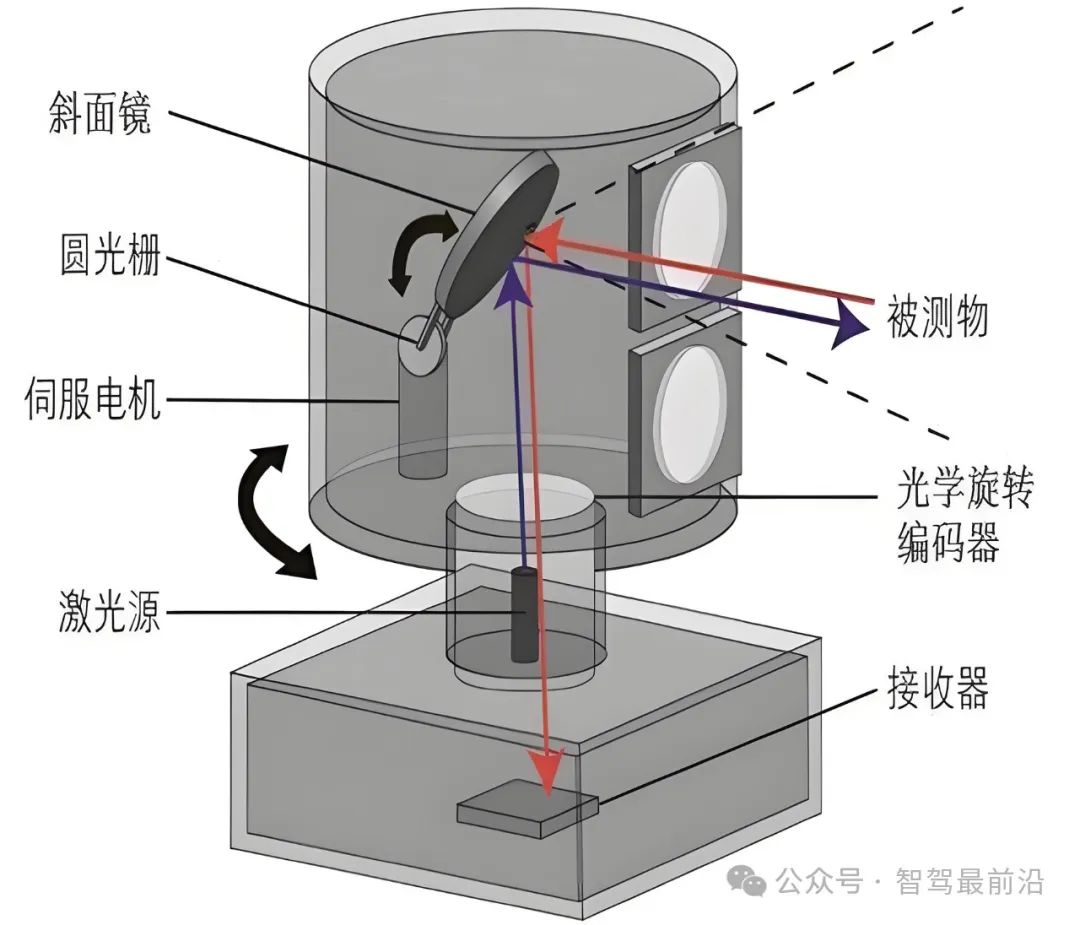

LiDAR technology itself is evolving rapidly from mechanical spinning designs toward solid-state ones. Early mechanical LiDARs scanned in all directions by rotating the optics or the entire sensor head. They offered long detection range and wide field-of-view coverage, but were expensive, bulky, and less reliable because of mechanical wear and inertia. More recent solid-state designs use MEMS micromirrors or optical phased arrays (OPAs) to eliminate the large rotating assembly, which significantly reduces size and cost and improves vibration resistance, paving the way for mass production and commercial deployment.

A LiDAR's core components are the laser emitter, the optical scanning system, the receiving detector, and the data processing unit. Laser emitters typically operate at 905 nm or 1550 nm. The 905 nm band is cheaper, in part because it works with silicon detectors, but eye-safety limits restrict its emitted power. The 1550 nm band allows higher eye-safe power, and therefore longer range, at a higher cost. Common receiving detectors include avalanche photodiodes (APDs) and single-photon avalanche diodes (SPADs), which largely determine sensitivity and signal-to-noise ratio for weak returns from distant or low-reflectivity targets. The choice of optical scanning system affects field of view and scanning speed, while the data processing unit must process and transmit point clouds fast enough to meet autonomous driving's strict real-time requirements.

On the algorithm side, autonomous driving systems typically pass point cloud data through a pipeline of denoising, ground segmentation, object detection, semantic segmentation, multi-frame fusion, and localization matching. Denoising filters out false points caused by rain, fog, or dust using statistical analysis and environmental models. Ground segmentation separates the road surface from obstacles through model fitting or deep learning. Object detection and semantic segmentation rely on neural networks such as PointNet and VoxelNet to turn point clouds into semantic labels and 3D bounding boxes. Multi-frame fusion uses IMU and odometry information to align point clouds captured at different times, making the perceived environment more complete and continuous. Finally, SLAM algorithms such as LOAM and FAST-LIO let the system build maps on the fly and localize in real time while driving.
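As one concrete instance of the "model fitting" approach to ground segmentation, the sketch below fits a plane with RANSAC and labels points near that plane as ground. It is a simplified illustration on synthetic data. The function name, the 0.15 m distance threshold, and the iteration count are assumptions, and production systems usually handle slopes and curbs with more elaborate models.

```python
import numpy as np


def ransac_ground(points: np.ndarray, dist_thresh: float = 0.15,
                  iters: int = 200, seed: int = 0) -> np.ndarray:
    """Boolean mask of points within dist_thresh of the best RANSAC plane."""
    rng = np.random.default_rng(seed)
    best_mask = np.zeros(len(points), dtype=bool)
    for _ in range(iters):
        # Three random points define a candidate plane
        p0, p1, p2 = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(normal)
        if norm < 1e-9:
            continue  # degenerate (nearly collinear) sample
        normal /= norm
        # Perpendicular distance of every point to the candidate plane
        dist = np.abs((points - p0) @ normal)
        mask = dist < dist_thresh
        if mask.sum() > best_mask.sum():
            best_mask = mask  # keep the plane with the most inliers
    return best_mask


# Synthetic scene: a flat, slightly noisy road plus one box-shaped obstacle.
rng = np.random.default_rng(1)
ground = np.column_stack([rng.uniform(-20, 20, 500),
                          rng.uniform(-20, 20, 500),
                          rng.normal(0.0, 0.02, 500)])
obstacle = np.column_stack([rng.uniform(5, 7, 100),
                            rng.uniform(-1, 1, 100),
                            rng.uniform(0.6, 2.0, 100)])
cloud = np.vstack([ground, obstacle])

is_ground = ransac_ground(cloud)
ground_recall = is_ground[:500].mean()    # fraction of road points labelled ground
obstacle_leak = is_ground[500:].mean()    # fraction of obstacle points mislabelled
```

The non-ground points that remain after this step are what the detection and segmentation networks would then process.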

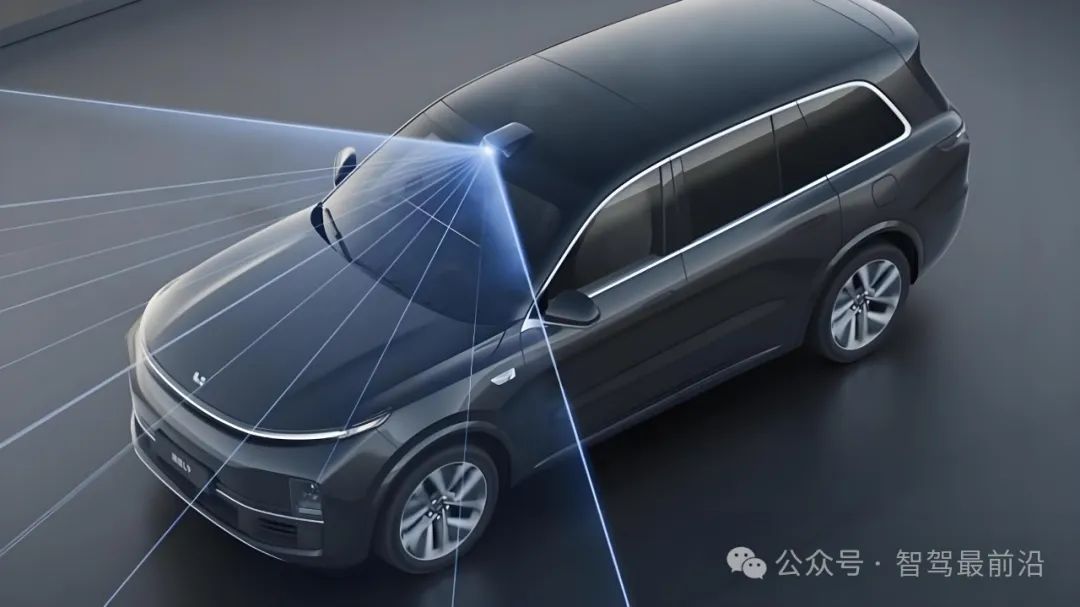

In practice, LiDAR usually works as part of a multi-sensor perception system alongside cameras, millimeter-wave radar, IMUs, and high-precision maps. Cameras provide rich color and texture information, which improves scene understanding and obstacle classification when combined with LiDAR point clouds. Millimeter-wave radar reliably detects metal targets in harsh weather, complementing LiDAR. IMUs report attitude changes at high frequency, bridging the short positioning gaps between LiDAR frames, which arrive at a much lower rate. High-precision maps supply prior knowledge of the environment for LiDAR localization and decision-making, and support vehicle-road coordination.
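A common first step in combining LiDAR with a camera is projecting LiDAR points into the image so each pixel region can be associated with a measured depth. The sketch below does this with a pinhole camera model. The intrinsic matrix `K`, the extrinsic rotation `R` and translation `t`, and the axis conventions are made-up illustrative values, not calibration results from a real sensor pair.

```python
import numpy as np


def project_to_image(points_lidar: np.ndarray, R: np.ndarray,
                     t: np.ndarray, K: np.ndarray):
    """Project N x 3 LiDAR points to pixel coordinates with a pinhole model.

    Returns (pixels, depths, in_front), where in_front marks the input points
    that lie in front of the camera and were therefore projected.
    """
    pts_cam = points_lidar @ R.T + t          # LiDAR frame -> camera frame
    in_front = pts_cam[:, 2] > 0.1            # drop points behind the camera
    pts_cam = pts_cam[in_front]
    uvw = pts_cam @ K.T                       # apply intrinsics
    pixels = uvw[:, :2] / uvw[:, 2:3]         # perspective divide
    return pixels, pts_cam[:, 2], in_front


# Assumed conventions: LiDAR x forward, y left, z up;
# camera x right, y down, z forward; both sensors at the same origin.
R = np.array([[0.0, -1.0,  0.0],
              [0.0,  0.0, -1.0],
              [1.0,  0.0,  0.0]])
t = np.zeros(3)
K = np.array([[1000.0,    0.0, 640.0],       # fx, skew, cx (hypothetical)
              [   0.0, 1000.0, 360.0],       # fy, cy (hypothetical)
              [   0.0,    0.0,   1.0]])

points = np.array([[10.0, 0.0, 0.0],    # straight ahead
                   [10.0, 1.0, 0.0],    # 1 m to the left
                   [10.0, 0.0, 1.0],    # 1 m up
                   [-5.0, 0.0, 0.0]])   # behind the vehicle

pixels, depths, in_front = project_to_image(points, R, t, K)
```

The point straight ahead lands at the image center (640, 360), the point to the left moves 100 pixels left, the raised point moves 100 pixels up, and the point behind the vehicle is discarded.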

At the system integration and calibration level, three things are critical between LiDAR and the other sensors: extrinsic calibration, time synchronization, and compensation for thermal drift and vibration. Calibration accuracy directly determines how precisely multi-sensor data can be fused, and is typically achieved with calibration boards, targets, and automatic calibration algorithms. Time synchronization relies on hardware triggers or the IEEE 1588 Precision Time Protocol (PTP) to keep sensor acquisition consistent at the microsecond level, preventing fusion errors caused by time offsets. To limit measurement errors from vibration and temperature changes, compensation must be built into both the hardware design and the software algorithms so that measurements remain stable over the long term.
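To see why microsecond-level synchronization is the target, it helps to convert a clock offset into a spatial error: an object moving at relative speed v appears displaced by v · Δt between two unsynchronized sensors. The sketch below computes that displacement and pairs a LiDAR frame with the nearest camera frame by timestamp. The speeds, offsets, and timestamps are illustrative assumptions, and the function names are hypothetical.

```python
from bisect import bisect_left


def misalignment_m(relative_speed_ms: float, clock_offset_s: float) -> float:
    """Apparent displacement of a moving object caused by a time offset."""
    return relative_speed_ms * clock_offset_s


def nearest_frame(timestamps, query: float) -> int:
    """Index of the timestamp closest to query; timestamps must be sorted."""
    i = bisect_left(timestamps, query)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    before, after = timestamps[i - 1], timestamps[i]
    return i if (after - query) < (query - before) else i - 1


# At a relative speed of 30 m/s (assumed), a 10 ms offset shifts an object
# by 0.3 m, while a 10 microsecond offset shifts it by only 0.3 mm.
err_10ms = misalignment_m(30.0, 10e-3)
err_10us = misalignment_m(30.0, 10e-6)

# Software pairing without hardware triggering: camera frames at ~30 Hz,
# one LiDAR frame stamped at t = 0.050 s (hypothetical timestamps).
camera_stamps = [0.000, 0.033, 0.066, 0.100]
idx = nearest_frame(camera_stamps, 0.050)
residual_s = abs(camera_stamps[idx] - 0.050)
```

Even the best software pairing here leaves a residual of about 16 ms, which is one reason hardware triggering or PTP-disciplined clocks are preferred over matching timestamps after the fact.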

LiDAR is not without challenges, such as degraded performance in harsh weather and high cost, but its advantages in distance measurement, 3D environmental modeling, and high-precision localization make it indispensable in autonomous driving. As solid-state designs mature, production scales up, and on-sensor processing becomes more intelligent, LiDAR is poised to play an increasingly important role in autonomous driving and in intelligent transportation systems more broadly, supporting safer and more efficient travel.
