Physical AI: Fueling Innovation with Data, Realizing Potential through Simulation

05/20 2025

Produced by ZhiNeng ZhiXin

As artificial intelligence spreads across manufacturing, healthcare, and transportation, it is moving from the digital realm into physical space through robots. Driving this shift is a deeper understanding of "Physical AI" and the technologies that put it into practice.

NVIDIA stands at the forefront of this transformation. Rather than merely supplying chips, it is building a comprehensive ecosystem that spans everything from data generation to robot deployment, and through open-source initiatives it is accelerating the move of humanoid and industrial robots into mainstream applications.

For AI agents, which are essentially digital robots, to perceive and act in the physical world, they must first learn in simulated environments that obey real-world physics.

Therefore, creating a high-fidelity simulation environment that mirrors real-world physical responses is essential for training physical robots.

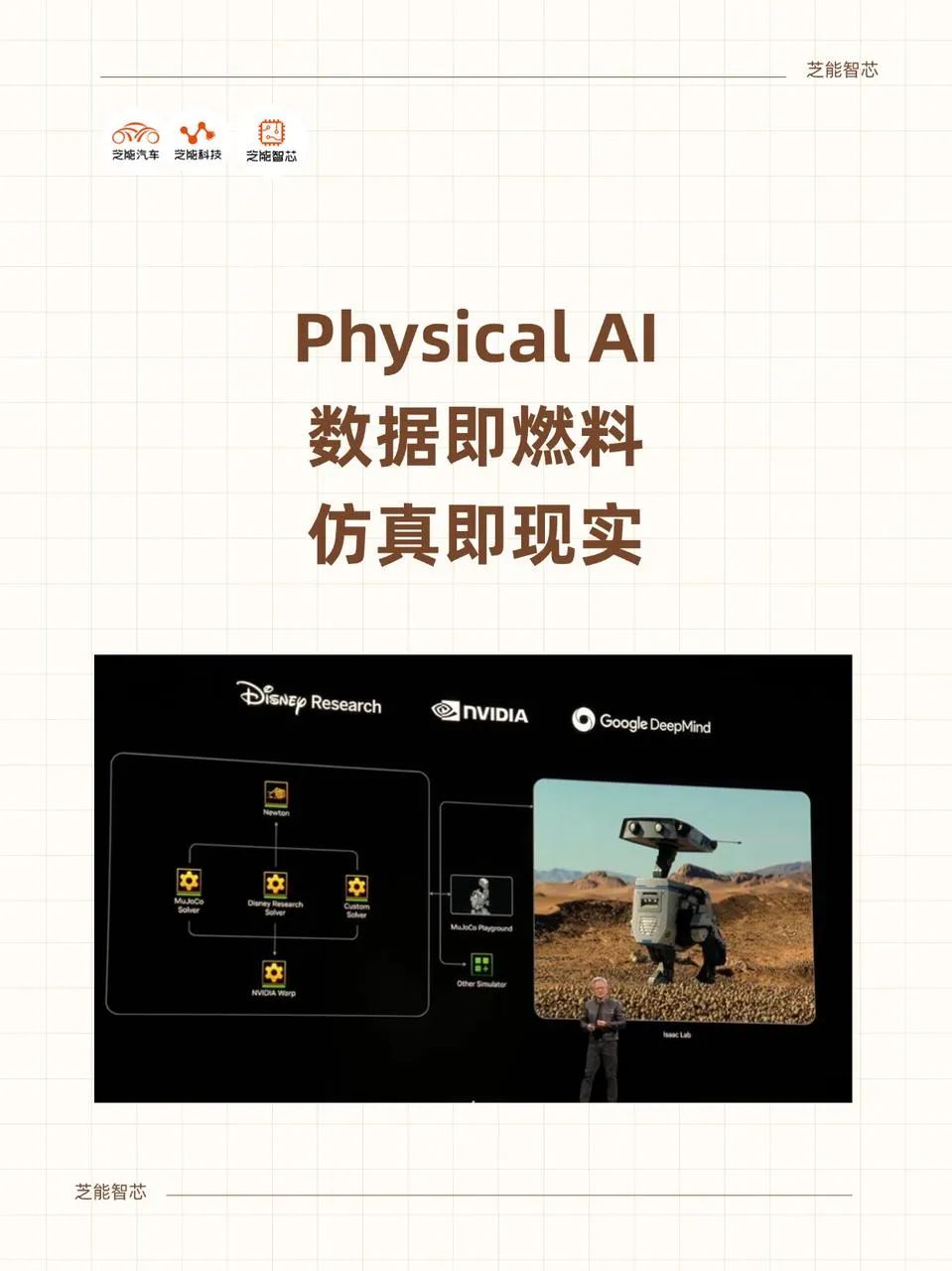

To this end, NVIDIA, in collaboration with DeepMind and Disney Research, has developed the cutting-edge physics engine Newton, set for global open-source release in July.

The release of Newton is not only a technical breakthrough at the engine level; it also marks "Physical AI" as the next strategic frontier of AI technology.

Physical AI differs from earlier generative AI models, which rely on large datasets to generate text or images. It focuses instead on generating and understanding actions, giving robots end-to-end capabilities spanning perception, decision-making, and execution.

NVIDIA has established a full-stack software and hardware infrastructure encompassing three pivotal computing platforms: DGX servers as the AI training hub, RTX PRO workstations and servers for simulation and synthetic data generation, and Jetson AGX as the edge deployment platform for robots.

Crucially, NVIDIA integrates these platforms through Omniverse, forming a seamless development-training-deployment loop.

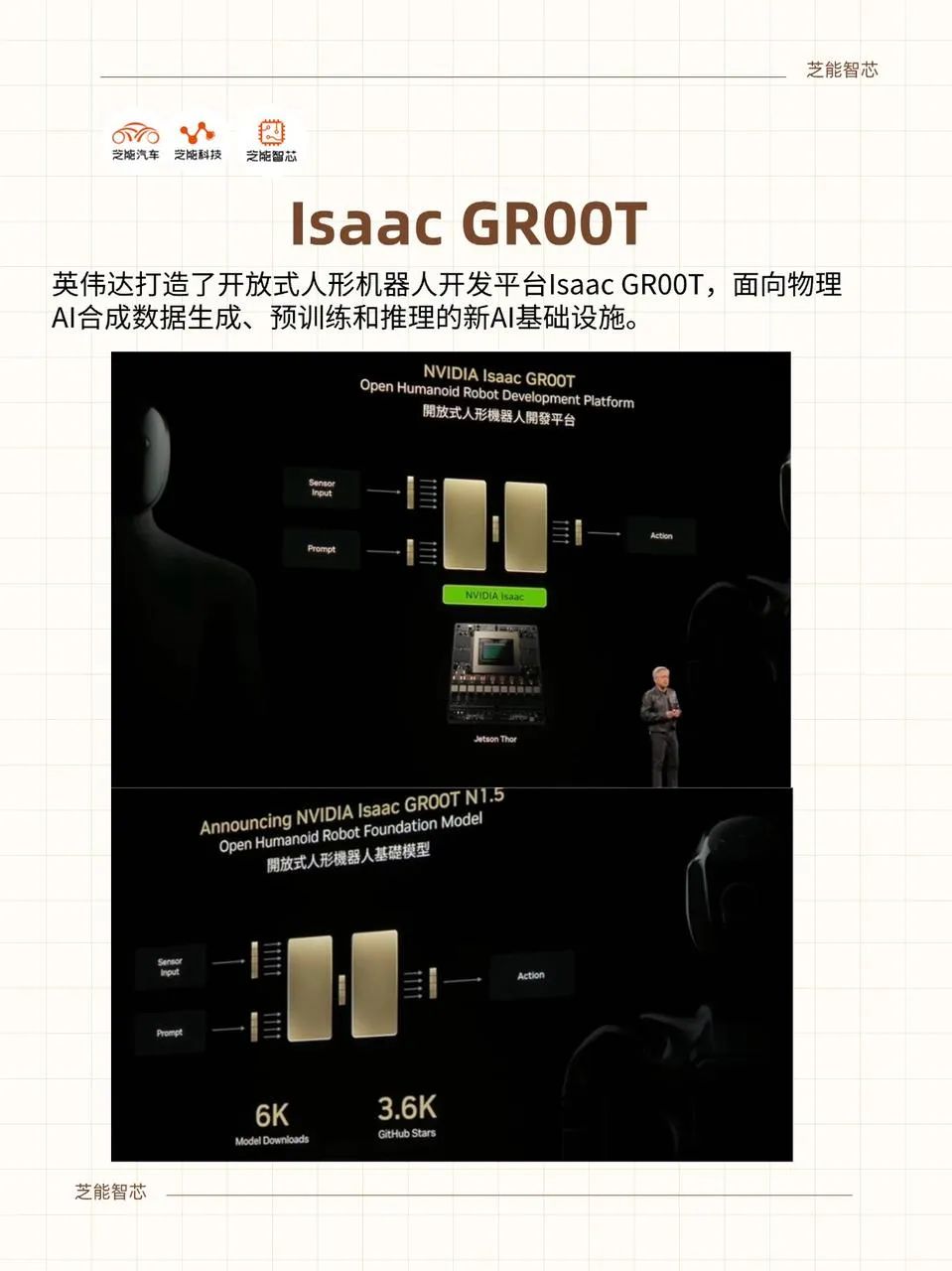

Building on this foundation, NVIDIA has introduced Isaac GR00T, an open-source humanoid robot development platform targeting the rapidly growing humanoid robot segment.

Isaac GR00T is more than a framework: it is AI infrastructure that integrates foundation models, data generation, simulation, and training.

Its latest release, Isaac GR00T N1.5, is an open, versatile, and fully customizable foundation model for humanoid robots. Compared with its predecessor, it adapts better to new environments and handles complex tasks more reliably, with particular gains in object recognition and pick-and-place accuracy.

Because the model is open source, developers worldwide can fine-tune it for different robot brands and combine it with the Isaac GR00T-Dreams blueprint to generate large volumes of synthetic training data.

Enhancing data generation capabilities is vital for accelerating robot research and development.

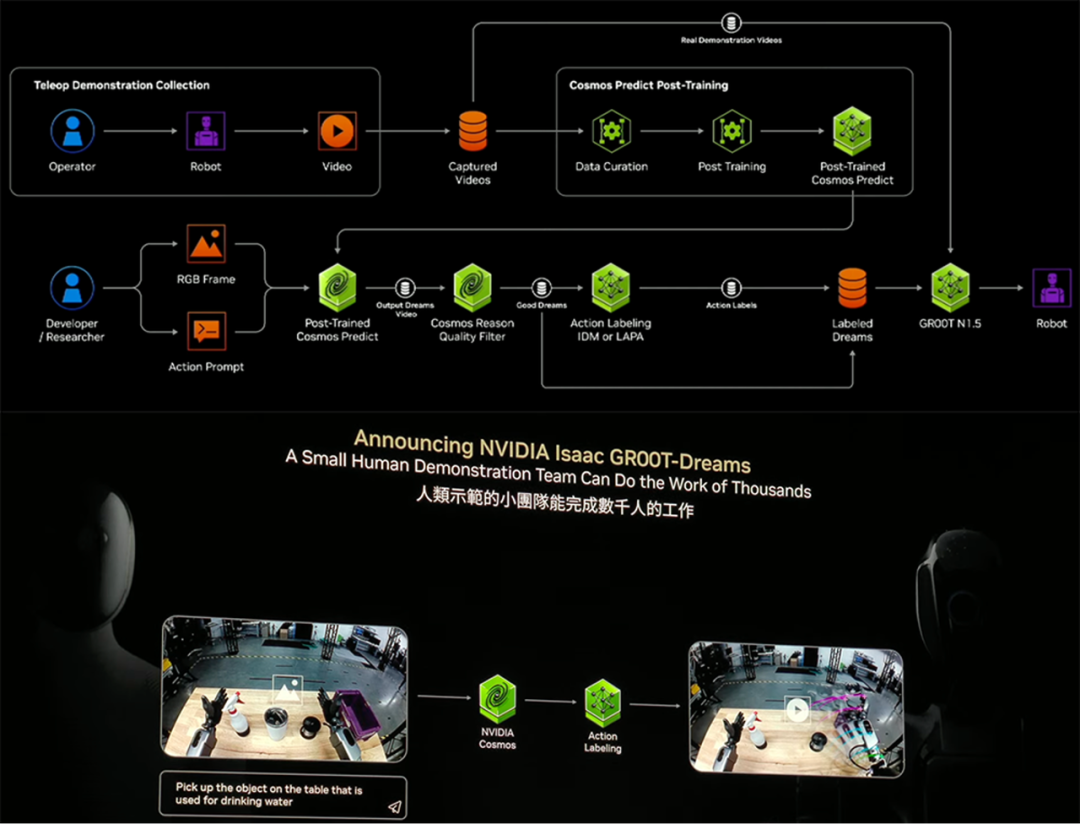

Traditional methods of collecting manual demonstrations are time-consuming and labor-intensive, and they cannot keep up with the diverse motions humanoid robots need to learn. The Isaac GR00T-Dreams blueprint addresses this by using NVIDIA's Cosmos world foundation models for physical AI to expand a limited set of manual demonstrations into large numbers of "dreams": videos of robots performing tasks in a simulated environment.

These "dreams" exhibit realistic physical behavior. A reasoning engine then filters out the high-quality samples, which are converted into 3D motion trajectories for training Isaac GR00T N1.5. The whole process took just 36 hours, compared with the months that traditional data collection would require. GR00T-Dreams thus lets small teams complete data collection that would typically require thousands of people, significantly lowering the barrier to entry for humanoid robot development.
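The generate, filter, and convert steps described above can be pictured as a small data pipeline. The sketch below is a toy illustration under loudly stated assumptions, not NVIDIA's implementation: the hypothetical `dream()` merely jitters a recorded demonstration instead of running a Cosmos world model, the hypothetical `is_plausible()` replaces the reasoning-based filter with two hand-written physics checks (the gripper stays above the table plane and never jumps more than a fixed distance between frames), and the hypothetical `to_trajectory()` turns each kept clip into position/displacement pairs. All names, thresholds, and data are invented for illustration.

```python
"""Toy sketch of a dream -> filter -> trajectory data pipeline.

Every name here is hypothetical; nothing below is an NVIDIA API.
"""
import math
import random
from dataclasses import dataclass

Point = tuple[float, float, float]  # assumed gripper position (x, y, z) in metres


@dataclass
class Clip:
    """A demonstration or generated 'dream': one 3D position per frame."""
    task: str
    frames: list[Point]


def dream(demo: Clip, n: int, rng: random.Random, noise: float = 0.03) -> list[Clip]:
    """Hypothetical stand-in for a world model: jitter one demo into n variant clips."""
    return [
        Clip(demo.task, [tuple(c + rng.gauss(0.0, noise) for c in p) for p in demo.frames])
        for _ in range(n)
    ]


def is_plausible(clip: Clip, max_step: float = 0.2) -> bool:
    """Hypothetical stand-in for the quality filter: two hand-written physics checks."""
    if any(p[2] < 0.0 for p in clip.frames):  # gripper passed through the table plane
        return False
    # reject clips where the gripper "teleports" between consecutive frames
    return all(math.dist(a, b) <= max_step for a, b in zip(clip.frames, clip.frames[1:]))


def to_trajectory(clip: Clip) -> list[tuple[Point, Point]]:
    """Pair each position with the displacement to the next frame (a simple action)."""
    return [
        (a, tuple(bc - ac for ac, bc in zip(a, b)))
        for a, b in zip(clip.frames, clip.frames[1:])
    ]


def build_dataset(demos: list[Clip], per_demo: int, seed: int = 0) -> list[list[tuple[Point, Point]]]:
    """Expand each demo into dreams, keep the plausible ones, convert them to trajectories."""
    rng = random.Random(seed)
    dataset = []
    for demo in demos:
        for clip in dream(demo, per_demo, rng):
            if is_plausible(clip):
                dataset.append(to_trajectory(clip))
    return dataset


if __name__ == "__main__":
    demo = Clip("pick_cup", [(0.0, 0.0, 0.3), (0.05, 0.0, 0.2), (0.1, 0.0, 0.1), (0.1, 0.0, 0.05)])
    data = build_dataset([demo], per_demo=100)
    print(f"kept {len(data)} of 100 dreamed clips")
```

The point the sketch tries to capture is that generating candidates is cheap and filtering is what keeps the dataset useful: many clips are produced per demonstration, and only those that pass the checks become training trajectories.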

This shift from relying on collected real-world data to a "real-to-real" workflow driven by synthetic data also holds great potential for industrial robots.

Companies such as TSMC, Foxconn, Pegatron, and Quanta use NVIDIA's Omniverse platform to build digital twins of their factories, rehearsing and optimizing complex manufacturing processes in the virtual world before running them for real.

For instance, TSMC has built an AI-driven digital twin pipeline with Omniverse and cuOpt that converts traditional 2D CAD drawings into interactive 3D factory models, and it uses vision language models to make wafer defect recognition more efficient, accelerating the entire process from chip design to manufacturing.

This fusion of virtual and real processes has shortened new factory planning time by several months, saving tens of millions of dollars in costs.

Foxconn has deployed its Omniverse-based Fii digital twin platform in its Taiwan factory, using the Isaac GR00T N1 model and the GR00T-Mimic blueprint to train industrial robotic arms for complex tasks ranging from cable insertion to assembly.

By training virtual robots at scale in Omniverse and then deploying them to real workshops, Foxconn integrates physical AI directly into the production line.

Foxconn has also built a simulation platform for liquid-cooled superchip PODs, which lets it test AI factories under real operating conditions and optimize the allocation of hardware resources.

Behind these industrial practices lies a clear trend: robot development is shifting away from large-scale real-world testing toward extensive pre-training and iterative optimization with digital twins and synthetic data, ultimately enabling efficient deployment.

This approach applies not only to humanoid robots but also extends to other forms such as collaborative robots, autonomous mobile robots, and AI agents.

NVIDIA's hardware and software stack, its Omniverse platform, the Isaac toolkit, and its open-source data generation blueprints are becoming "standard equipment" for robot developers.

In urban settings, Linker Vision and the Kaohsiung City Government are using Omniverse to build a city-scale digital twin that simulates unpredictable situations, with AI agents enabling real-time responses.

This case shows that the combination of digital twins and physical AI reaches beyond the factory into broader areas such as smart cities and public safety.

Summary

From crafting digital mirrors of the physical world to generating motion trajectories from pixels and embedding AI into robots for task execution, NVIDIA is propelling robot development into a new era of "data as fuel, simulation as reality" through a comprehensive open ecosystem.

Physical AI serves as the core engine driving this transformation. In the near future, we may witness more humanoid robots capable of autonomous movement, command understanding, and task execution entering factories, hospitals, shopping malls, and even homes. Behind this lies a revolution in data generation capabilities and physics simulation engines.