What Is a "Point Cloud" in Autonomous Driving?

05/21 2025

In autonomous driving systems, point cloud technology stands as a cornerstone of 3D spatial perception, furnishing vehicles with precise distance and shape information. This enables critical functions such as object detection, environmental modeling, positioning, and map construction. So, what exactly is this "point cloud," and how does it influence autonomous driving?

What is a Point Cloud?

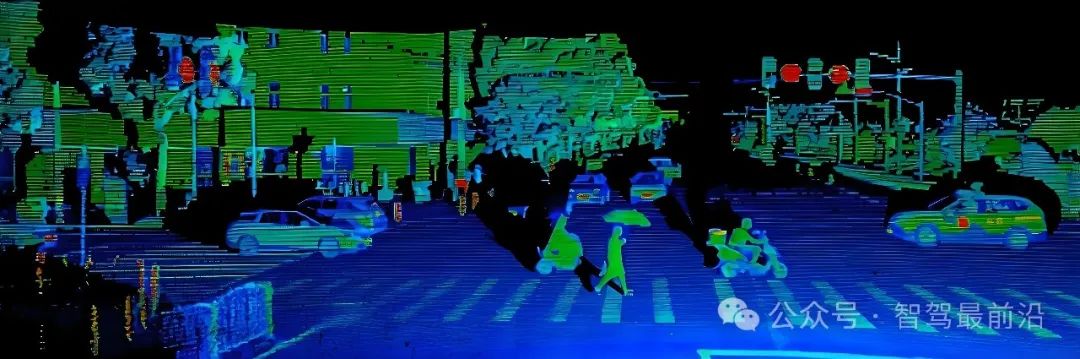

A point cloud is a set of discrete points in three-dimensional space. Each point has Cartesian coordinates (X, Y, Z) and may carry additional attributes such as color, intensity, and a timestamp, which together describe the spatial distribution and surface characteristics of objects. Expressed in a common coordinate system, these points collectively outline the shape of a target. Point cloud data come mainly from LiDAR, 3D scanners, depth (RGB-D) cameras, and photogrammetry-based reconstruction. Of these, LiDAR is the most widely used source in autonomous driving because of its high precision and long detection range.
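As a minimal sketch, a single point with the attributes listed above can be modeled as a small record, and a cloud as a list of such records. The class and function names here are hypothetical and do not come from any particular library. The bounding box is one simple illustration of how the points collectively describe an object's extent.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class CloudPoint:
    """One point: Cartesian coordinates plus optional per-point attributes."""
    x: float
    y: float
    z: float
    intensity: Optional[float] = None           # return strength; scale is sensor-specific
    timestamp: Optional[float] = None           # e.g. seconds since the scan started
    rgb: Optional[Tuple[int, int, int]] = None  # color, e.g. from an RGB-D camera


def bounding_box(cloud: List[CloudPoint]):
    """Axis-aligned extent of a cloud as ((xmin, ymin, zmin), (xmax, ymax, zmax))."""
    if not cloud:
        raise ValueError("empty point cloud")
    xs = [p.x for p in cloud]
    ys = [p.y for p in cloud]
    zs = [p.z for p in cloud]
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


scan = [CloudPoint(1.0, 2.0, 0.5, intensity=0.8), CloudPoint(-3.0, 4.0, 1.5)]
print(bounding_box(scan))  # ((-3.0, 2.0, 0.5), (1.0, 4.0, 1.5))
```

Production stacks usually keep points in packed, contiguous buffers (for example ROS `sensor_msgs/PointCloud2` messages) rather than one object per point, but the fields are the same idea.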

LiDAR works by emitting laser pulses and receiving their reflections. It computes distance from the round-trip time of flight and the speed of light, and it locates each point in 3D space using the beam's horizontal (azimuth) rotation angle and vertical (elevation) angle, producing point cloud data at massive scale. Point clouds from RGB-D cameras additionally carry color and suit close-range, small-scale modeling and analysis, but they are less stable than LiDAR at long range and in highly dynamic scenes.
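The geometry described above reduces to two small formulas: range is half the round-trip distance, r = c·Δt/2, and each (range, azimuth, elevation) triple is then converted to Cartesian coordinates. Below is a minimal sketch. The function names and the axis convention (x forward, y left, z up) are assumptions, since sensor vendors define their own frames. On a mechanical spinning LiDAR, the azimuth typically comes from the rotation angle and the elevation from the fixed angle of each laser channel.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum


def tof_range(round_trip_s: float) -> float:
    """Distance from round-trip time of flight; the pulse travels out and back, hence / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_cartesian(r: float, azimuth_rad: float, elevation_rad: float):
    """Convert (range, azimuth, elevation) to sensor-frame (x, y, z).

    Assumed convention: x forward, y left, z up; azimuth measured from +x toward +y,
    elevation measured upward from the horizontal plane.
    """
    horizontal = r * math.cos(elevation_rad)  # projection onto the x-y plane
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            r * math.sin(elevation_rad))


r = tof_range(667e-9)  # a return arriving 667 ns after emission
print(round(r, 2))     # 99.98
print(to_cartesian(r, math.radians(30.0), math.radians(-2.0)))
```

The example shows why LiDAR electronics need sub-nanosecond timing: each nanosecond of round-trip time corresponds to about 15 cm of range.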

Role of Point Cloud in Autonomous Driving

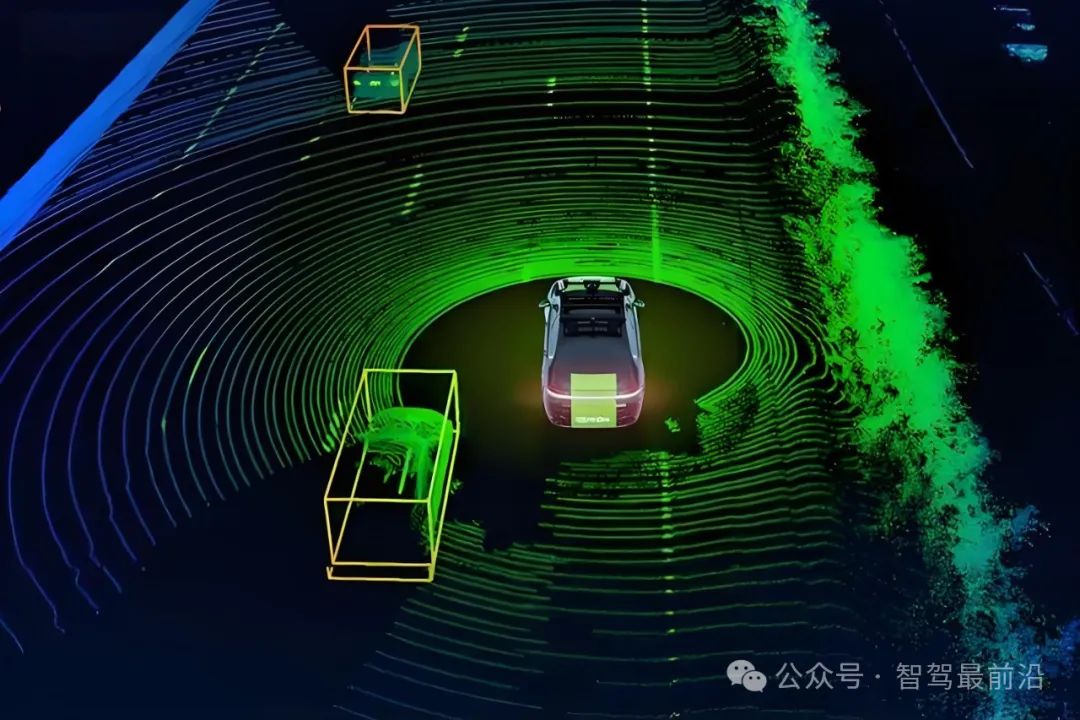

In autonomous driving, the perception system's core tasks are to identify and locate the dynamic and static objects around the vehicle. Because point clouds carry direct three-dimensional depth information, they provide crucial support for these tasks. Through clustering and semantic segmentation, point cloud data enables object detection and 3D segmentation, extracting the spatial contours and positions of pedestrians, vehicles, and obstacles. Deep learning models designed for point clouds, such as PointNet and PointRCNN, together with their successors, have achieved strong results on public benchmarks such as KITTI and nuScenes. LiDAR is an active sensor that supplies its own illumination, so its point clouds are largely unaffected by ambient lighting, shadows, or vehicle headlights. Detection therefore remains stable in low-light and backlit conditions, an advantage that camera-only systems struggle to match. Point clouds also provide geometric constraints for multi-sensor fusion: combined with camera and millimeter-wave radar data, they substantially improve obstacle localization accuracy and system robustness.
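Clustering is the simplest of these routes. The sketch below shows plain Euclidean clustering, which is region growing with a fixed distance threshold. It assumes ground points have already been removed, as is common in practice. The function name and the `radius` and `min_size` parameters are illustrative. The brute-force neighbor search is O(N²), whereas real pipelines would use a k-d tree or a voxel grid.

```python
from collections import deque
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def euclidean_clusters(points: Sequence[Point3], radius: float, min_size: int = 1) -> List[List[int]]:
    """Group points whose chain of neighbors lies within `radius`; return index lists."""
    r2 = radius * radius
    visited = [False] * len(points)
    clusters = []
    for seed in range(len(points)):
        if visited[seed]:
            continue
        visited[seed] = True
        queue, members = deque([seed]), []
        while queue:  # breadth-first region growing from the seed point
            i = queue.popleft()
            members.append(i)
            xi, yi, zi = points[i]
            for j, (xj, yj, zj) in enumerate(points):  # brute-force neighbor search
                if not visited[j] and (xi - xj) ** 2 + (yi - yj) ** 2 + (zi - zj) ** 2 <= r2:
                    visited[j] = True
                    queue.append(j)
        if len(members) >= min_size:  # tiny groups are treated as noise
            clusters.append(sorted(members))
    return clusters


scan = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.6, 0.0, 0.0),  # object A
        (10.0, 0.0, 0.0), (10.2, 0.1, 0.0),                 # object B
        (50.0, 50.0, 0.0)]                                   # isolated return
print(euclidean_clusters(scan, radius=0.5, min_size=2))  # [[0, 1, 2], [3, 4]]
```

Each resulting cluster can then be passed to a classifier or fitted with a bounding box. Learned detectors such as PointRCNN replace this hand-tuned threshold with features learned from data.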

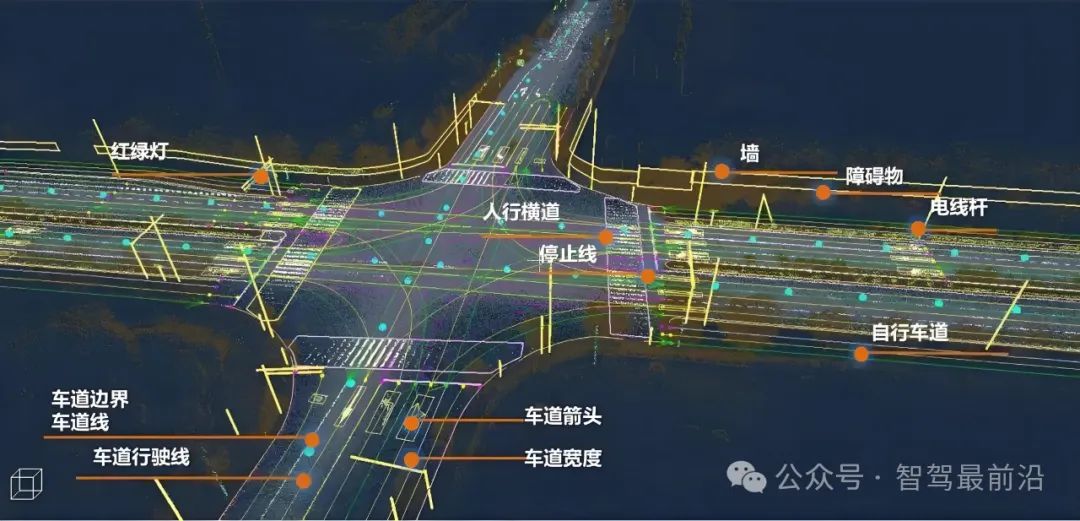

High-precision positioning and environmental map construction underpin decision-making and planning in autonomous driving. Point clouds are a key data source for SLAM (Simultaneous Localization and Mapping) and for building high-definition maps. LiDAR SLAM algorithms estimate the vehicle's pose in real time and build dense maps by registering consecutive frames (for example, with the ICP algorithm), which helps the vehicle stay precisely localized on complex roads and in adverse weather. Point cloud maps record three-dimensional structure such as road surfaces, curbs, and signs. This gives path planning and behavior decision-making rich geometric information and improves the adaptability and safety of autonomous driving on urban roads, highways, and in other scenarios.
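To make frame registration concrete, here is a minimal point-to-point ICP sketch. It is simplified to 2D so that the best-fit rotation has a closed form. The algorithm alternates between two steps: it matches each source point to its nearest target point, then solves for the rigid transform that best aligns those pairs. All function names are hypothetical. Production LiDAR SLAM works in 3D, uses k-d trees for matching, and often uses point-to-plane variants.

```python
import math
from typing import List, Tuple

Point2 = Tuple[float, float]


def transform(points: List[Point2], theta: float, tx: float, ty: float) -> List[Point2]:
    """Rotate every point by theta (radians) about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def best_rigid_2d(src: List[Point2], dst: List[Point2]) -> Tuple[float, float, float]:
    """Least-squares rotation and translation mapping each src[i] onto dst[i]."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    a = b = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy  # center both sets
        a += px * qx + py * qy  # coefficient of cos(theta) in the alignment score
        b += px * qy - py * qx  # coefficient of sin(theta)
    theta = math.atan2(b, a)  # maximizes a*cos(theta) + b*sin(theta)
    c, s = math.cos(theta), math.sin(theta)
    # Translation carries the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def nearest(p: Point2, cloud: List[Point2]) -> Point2:
    """Brute-force nearest neighbor (real systems use a k-d tree)."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def icp_2d(source, target, max_iter=50, tol=1e-10):
    """Point-to-point ICP: returns (theta, tx, ty, aligned_source)."""
    current = list(source)
    theta_total, tx_total, ty_total = 0.0, 0.0, 0.0
    for _ in range(max_iter):
        matched = [nearest(p, target) for p in current]  # 1) correspondences
        theta, tx, ty = best_rigid_2d(current, matched)   # 2) best-fit increment
        current = transform(current, theta, tx, ty)       # 3) apply it
        # Compose with the running estimate: T_total <- T_step * T_total.
        c, s = math.cos(theta), math.sin(theta)
        tx_total, ty_total = c * tx_total - s * ty_total + tx, s * tx_total + c * ty_total + ty
        theta_total += theta
        if abs(theta) < tol and math.hypot(tx, ty) < tol:  # converged
            break
    return theta_total, tx_total, ty_total, current


scan = [(float(x), 0.3 * x * x) for x in range(-5, 6)] + [(0.0, float(y)) for y in range(1, 6)]
next_scan = transform(scan, 0.02, 0.05, -0.03)  # same scene after a small simulated motion
theta, tx, ty, _ = icp_2d(scan, next_scan)
print(round(theta, 4), round(tx, 4), round(ty, 4))  # 0.02 0.05 -0.03
```

The recovered (theta, tx, ty) is the relative motion between the two frames. Chaining these estimates frame by frame is what gives LiDAR odometry its pose track. ICP needs a reasonable initial guess, because nearest-neighbor matching can lock onto wrong correspondences when the motion between frames is large.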

Point cloud data directly improves the accuracy and robustness of the autonomous driving perception system. With centimeter-level ranging accuracy, LiDAR can detect objects from tens to hundreds of meters away, meeting the real-time obstacle-avoidance requirements of high-speed driving. Point cloud data is sparse, irregular, and unordered, which makes feature representation and processing difficult for deep neural networks. That difficulty, however, has driven the development of efficient sparse convolution and of both voxel-based and point-based (voxel-free) feature extraction methods, steadily improving system performance. In adverse weather such as rain, snow, and haze, LiDAR generally still provides reliable distance information, while cameras may be obstructed or make more recognition errors, although heavy precipitation also adds noise to LiDAR returns. Integrating point clouds therefore substantially improves the adaptability of multi-sensor fusion systems across environments.
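Voxel-based methods begin by bucketing points into a regular 3D grid. The sketch below shows the simplest form of that idea, voxel-grid downsampling: points that fall in the same cubic cell are replaced by their centroid. Only occupied cells are stored, in a dictionary. This is the same sparsity that sparse convolution exploits by computing only at non-empty voxels. The function name and `voxel_size` are illustrative. Learned voxel encoders compute much richer per-voxel features than a centroid.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def voxel_downsample(points: Sequence[Point3], voxel_size: float) -> List[Point3]:
    """Replace all points sharing a cubic voxel with their centroid (one point per voxel)."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    buckets: Dict[Tuple[int, int, int], List[Point3]] = defaultdict(list)
    for p in points:
        # floor() keeps negative coordinates in the correct cell (e.g. -0.1 -> -1).
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    out = []
    for key in sorted(buckets):  # only occupied voxels exist in the dict
        pts = buckets[key]
        n = len(pts)
        out.append(tuple(sum(q[i] for q in pts) / n for i in range(3)))
    return out


cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.2, 0.0, 0.0)]
print(len(voxel_downsample(cloud, 1.0)))  # 2
```

Coarser voxels reduce the point count more aggressively but blur fine structure such as thin poles. Choosing the voxel size is therefore a trade-off between compute budget and detail.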

Challenges and Trends in Point Cloud Technology Application

Despite these advantages, applying point cloud technology at scale in autonomous driving faces several challenges. Data volumes are immense. A high-resolution LiDAR can produce hundreds of thousands of points per rotation and millions of points per second, which demands substantial storage, transmission bandwidth, and real-time processing capacity and puts onboard computing resources to a stringent test. Point cloud processing algorithms (e.g., ICP registration, semantic segmentation, object tracking) are computationally complex and need sparse data structures and GPU acceleration to run in real time, which complicates system design and implementation. LiDAR hardware is also expensive, especially high-end, high-resolution units priced in the tens to hundreds of thousands of yuan, and this limits the mass-market adoption of autonomous vehicles. Finally, environmental factors such as reflective surfaces, rain and snow, and dust can introduce noise and occlusion into point cloud data, making filtering and completion algorithms harder to design.
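As one example of point cloud filtering, here is a minimal statistical outlier removal sketch, similar in spirit to the `StatisticalOutlierRemoval` filter in the Point Cloud Library (PCL). Isolated returns from spray, dust, or sensor noise sit far from their neighbors. The filter therefore drops any point whose mean distance to its k nearest neighbors exceeds the global mean of those distances by more than `std_ratio` standard deviations. The function name and defaults are illustrative assumptions, and the brute-force neighbor search is only for clarity.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def statistical_outlier_removal(points: Sequence[Point3], k: int = 8,
                                std_ratio: float = 1.0) -> List[Point3]:
    """Drop points whose mean distance to their k nearest neighbors is unusually large."""
    if len(points) <= k:
        raise ValueError("need more than k points")
    mean_dists = []
    for i, p in enumerate(points):
        # Brute-force k nearest neighbors (excluding the point itself).
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    mu = statistics.mean(mean_dists)
    sigma = statistics.pstdev(mean_dists)
    threshold = mu + std_ratio * sigma  # global cut-off shared by all points
    return [p for p, m in zip(points, mean_dists) if m <= threshold]


grid = [(0.1 * i, 0.1 * j, 0.0) for i in range(3) for j in range(3)]  # dense patch
noisy = grid + [(5.0, 5.0, 5.0)]                                       # one stray return
print(len(statistical_outlier_removal(noisy, k=3)))  # 9
```

A fixed global threshold works poorly when point density falls off with range, as it does for LiDAR. This is one reason practical filters are often tuned per range band or replaced by learned denoisers.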

To address these challenges, point cloud technology and its applications are evolving rapidly. Deep learning-based sparsification, compression, and hierarchical coding algorithms substantially reduce data volume and bandwidth requirements while preserving information fidelity, opening new options for vehicle-cloud collaborative processing. Multi-sensor fusion algorithms continue to improve, coordinating point clouds, images, and millimeter-wave radar data captured at different ranges and from different viewpoints to further raise object recognition and tracking accuracy. On the hardware side, solid-state designs such as Flash LiDAR are making sensors smaller, cheaper, and more reliable, and they are expected to reach mass-market adoption in the coming years. In addition, as edge computing and V2X networks mature, point cloud data can be shared and calibrated across vehicle-to-vehicle and vehicle-to-infrastructure networks. This builds a larger-scale, real-time 3D environmental perception platform and lays the groundwork for L4/L5 autonomous driving.
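Learned compression schemes are beyond a short example, but the basic trade-off between size and fidelity can be shown with plain fixed-point quantization. This is a simpler, non-learned technique, swapped in here for illustration. Storing each coordinate as a 16-bit integer at a 1 cm step halves the 12 bytes needed for three float32 values, bounds the error at half a step (5 mm), and covers about ±327 m. The step size and byte layout are assumptions, not a standard format.

```python
import struct
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]
STEP_M = 0.01  # assumed quantization step: 1 cm


def quantize_points(points: Sequence[Point3], step: float = STEP_M) -> bytes:
    """Pack (x, y, z) in meters as little-endian int16 triples (6 bytes per point).

    struct.pack raises struct.error if a coordinate falls outside about +/-327 m at 1 cm.
    """
    buf = bytearray()
    for p in points:
        buf += struct.pack("<3h", *(round(c / step) for c in p))
    return bytes(buf)


def dequantize_points(data: bytes, step: float = STEP_M) -> List[Point3]:
    """Inverse of quantize_points; each coordinate is within step/2 of the original."""
    return [tuple(v * step for v in xyz) for xyz in struct.iter_unpack("<3h", data)]


pts = [(12.345, -67.891, 1.5), (0.0, 0.004, -0.006)]
packed = quantize_points(pts)
print(struct.calcsize("<3f") * len(pts), len(packed))  # 24 12
```

Learned and hierarchical codecs go further by exploiting spatial redundancy between neighboring points, which a per-point quantizer like this ignores.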

Conclusion

Point cloud technology plays an indispensable role in 3D perception for autonomous driving systems. It supports robust operation across scenarios in tasks ranging from precise ranging and environmental reconstruction to object detection, positioning, and navigation. Facing the twin challenges of massive data volumes and algorithmic complexity, the industry must pursue algorithmic innovation and hardware iteration together to keep reducing costs and improving performance. With advances in deep learning, sensor fusion, and vehicular networking, point cloud technology will continue to mature, paving the way for truly fully autonomous driving.
