From "Computing Power Nuclear Bomb" to Generative AI, How Far Is the New Era?

03/27 2024

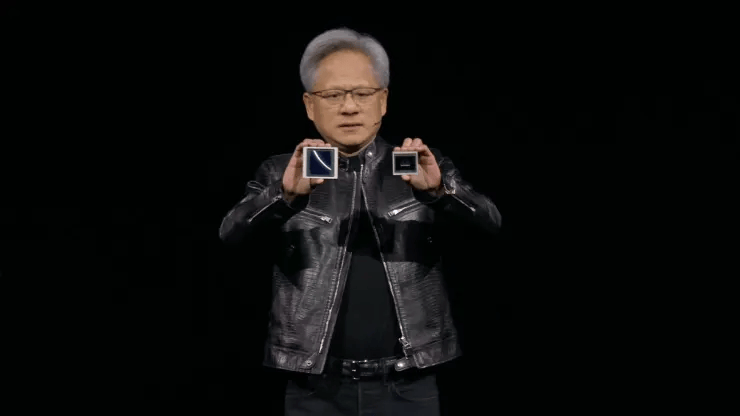

"We need bigger GPUs!"

In the early morning of March 19th (Beijing time), GTC 2024, the annual conference widely seen as the "wind vane" of AI, got under way as scheduled.

NVIDIA CEO Jensen Huang announced a series of major technological advances at the conference, including NVIDIA Blackwell, the new generation of accelerated computing platform; Project GR00T, a foundation model for humanoid robots; Omniverse Cloud APIs; NVIDIA DRIVE Thor, a centralized in-vehicle computing platform; and more.

Among them, NVIDIA Blackwell, as NVIDIA's "ace in the hole," once again pushed the technical standards of AI chips to new heights.

NVIDIA and Amazon Web Services then announced an expanded partnership: Blackwell will soon be available on AWS, where it will be combined with AWS's advanced networking, virtualization, and hyperscale clustering to deliver significant performance gains for inference on trillion-parameter large models.

Today's leading large models already operate at the trillion-parameter scale, so users may soon see the benefits of the new hardware across a range of generative AI applications.

The Birth of the "Ace" AI Chip

How much computing power is needed to train trillion-parameter large models?

At GTC, Jensen Huang began by working through a math problem. Take OpenAI's most advanced large model, with 1.8 trillion parameters: training it requires several trillion tokens.

Multiplying 1.8 trillion parameters by several trillion tokens gives the scale of computation needed to train OpenAI's most advanced large model. Huang estimated that a GPU delivering one petaflop (one quadrillion operations per second) would need about 1,000 years to finish the job.
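
Huang's back-of-the-envelope figure can be reproduced with a widely used rule of thumb: training a dense transformer costs roughly 6 × parameters × tokens floating-point operations. The sketch below is a rough check under stated assumptions, not necessarily the method Huang used: it takes "several trillion tokens" as 3 trillion and assumes a single GPU sustaining exactly one petaflop per second.

```python
# Rough training-compute estimate using the common "6 * N * D" rule of thumb
# (about 6 FLOPs per parameter per training token). An approximation only,
# not NVIDIA's published method.

SECONDS_PER_YEAR = 365.25 * 24 * 3600  # ~3.16e7 seconds


def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate total floating-point operations needed to train a model."""
    return 6 * num_params * num_tokens


def years_on_one_gpu(total_flops: float, flops_per_second: float) -> float:
    """Wall-clock years if a single device ran at a constant rate."""
    return total_flops / flops_per_second / SECONDS_PER_YEAR


params = 1.8e12  # 1.8 trillion parameters, as cited in the keynote
tokens = 3e12    # assumption: "several trillion" tokens taken as 3 trillion
petaflop = 1e15  # one petaflop per second = 10^15 operations per second

flops = training_flops(params, tokens)
years = years_on_one_gpu(flops, petaflop)
print(f"{flops:.2e} FLOPs, about {years:,.0f} years on one petaflop GPU")
```

With these inputs the estimate comes to about 3.2 × 10^25 operations, or roughly a thousand years on one petaflop GPU, which matches the order of magnitude Huang quoted.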

Since the invention of the Transformer, large models have grown at an astonishing rate, doubling in size roughly every six months on average, so a trillion parameters is not the upper limit.
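
A doubling every six months compounds quickly: 4 times per year and 64 times over three years. The minimal sketch below assumes the doubling rate holds exactly and, purely for illustration, starts from the 1.8-trillion-parameter figure cited earlier. Real model growth is far less regular than this.

```python
# Growth implied by "doubling every six months": size(t) = size0 * 2 ** (t / 0.5),
# with t in years. Illustrative only; real growth is not this regular.

DOUBLING_PERIOD_YEARS = 0.5  # "doubling every six months"


def growth_factor(years: float, doubling_period: float = DOUBLING_PERIOD_YEARS) -> float:
    """Multiplicative growth after `years` at a constant doubling period."""
    return 2 ** (years / doubling_period)


def projected_params(start_params: float, years: float) -> float:
    """Project a parameter count forward under constant doubling (hypothetical)."""
    return start_params * growth_factor(years)


for horizon in (1, 2, 3):
    print(horizon, growth_factor(horizon), f"{projected_params(1.8e12, horizon):.2e}")
```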

Given this trend, Huang believes that advancing generative AI requires larger GPUs, faster interconnects between GPUs, more powerful networking inside supercomputers, and ever-larger supercomputing systems.

NVIDIA has traditionally maintained two GPU architecture lines: Ada Lovelace, which powers the GeForce RTX gaming series, and Hopper, which powers data center GPUs for AI, big data, and other workloads. The world-renowned H100 is based on the Hopper architecture.

Although Hopper can already meet the needs of most commercial markets, Huang believes that is not enough: "We need bigger GPUs, and we need to stack GPUs together."

As a result, Blackwell, the successor to Hopper, was born. Blackwell is NVIDIA's sixth-generation chip architecture. Built from two dies joined into a single GPU, it integrates 208 billion transistors and delivers a major leap in computing power over previous products.

According to Huang, NVIDIA invested about $10 billion in research and development for this chip. The architecture is named after David Harold Blackwell, a mathematician at the University of California, Berkeley, who specialized in game theory and statistics and was the first Black scholar elected to the National Academy of Sciences.

Blackwell's per-chip FP8 training performance is 2.5 times that of the previous-generation architecture, and its FP4 inference performance is 5 times. It also features fifth-generation NVLink, which is twice as fast as Hopper's interconnect and can scale to 576 GPUs.
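
FP8 and FP4 are 8-bit and 4-bit floating-point number formats. Halving the bits per value lets hardware store and move twice as many values, which is part of why low-precision inference throughput can be quoted at a higher multiple. The article does not describe Blackwell's FP4 encoding, so the sketch below assumes the E2M1 layout (1 sign, 2 exponent, 1 mantissa bit) from the Open Compute Project Microscaling (MX) specification, with a single hypothetical per-tensor scale. `quantize_fp4` is an illustrative name, not an NVIDIA API.

```python
# Sketch of 4-bit floating-point (FP4) quantization using the E2M1 layout
# (1 sign bit, 2 exponent bits, 1 mantissa bit).
# Assumption: one per-tensor scale; real hardware scaling schemes may differ.

# All non-negative magnitudes representable in E2M1.
E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = max(E2M1_VALUES)


def quantize_fp4(values: list[float]) -> tuple[list[float], float]:
    """Round each value to the nearest scaled E2M1 number; return (quantized, scale)."""
    largest = max(abs(v) for v in values)
    # Map the largest magnitude onto the largest representable FP4 value.
    scale = largest / E2M1_MAX if largest > 0 else 1.0
    quantized = []
    for v in values:
        magnitude = abs(v) / scale
        nearest = min(E2M1_VALUES, key=lambda q: abs(q - magnitude))
        quantized.append((nearest if v >= 0 else -nearest) * scale)
    return quantized, scale


weights = [0.3, -1.0, 0.7, 2.2, -3.0]
q, s = quantize_fp4(weights)
print(s, q)
```

Only eight magnitudes survive per sign, so values such as 0.7 and 2.2 are rounded to 0.75 and 2.0; the scale factor is what keeps that coarse grid usable across tensors with different ranges.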

So, Blackwell is not just a chip but a platform.

The NVIDIA GB200 Grace Blackwell Superchip connects two NVIDIA B200 Tensor Core GPUs to an NVIDIA Grace CPU over a 900GB/s ultra-low-power NVLink chip-to-chip interconnect.

According to NVIDIA, each Blackwell GPU delivers up to 20 petaflops of FP4 AI compute (20 quadrillion operations per second), and the rack-scale GB200 NVL72 system offers up to 30 times the large language model inference performance of the same number of H100 GPUs, while cutting cost and energy consumption by up to 25 times.
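
SI prefixes make these figures easy to misread: one petaflop is 10^15 operations per second, so 20 petaflops equals 2 × 10^16, or 20 quadrillion, operations per second. A small conversion helper; the function names are illustrative.

```python
# Convert SI-prefixed FLOP rates into plain operations per second.
SI_PREFIXES = {"tera": 1e12, "peta": 1e15, "exa": 1e18}


def to_ops_per_second(amount: float, prefix: str) -> float:
    """Scale `amount` by its SI prefix, e.g. 20 'peta' -> 2e16."""
    return amount * SI_PREFIXES[prefix]


def in_quadrillions(ops_per_second: float) -> float:
    """Express a rate in quadrillions (10^15) of operations per second."""
    return ops_per_second / 1e15


blackwell_rate = to_ops_per_second(20, "peta")
print(f"{blackwell_rate:.0e} operations per second")  # 2e+16 operations per second
print(in_quadrillions(blackwell_rate))                 # 20.0
```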

The Blackwell platform's performance leap is clearly aimed at the next generation of generative AI. OpenAI's recently unveiled Sora and its reported work on a more powerful and complex GPT-5 model suggest that generative AI is heading toward multimodal and video models, which implies even larger-scale training. Blackwell opens up more possibilities for that work.

Today, major technology companies, from Google in search to Amazon in cloud computing and Tesla in intelligent driving, are joining NVIDIA's Blackwell camp, kicking off a new wave of AI accelerated computing.

Industry leaders such as Amazon, Google, Dell, Meta, Microsoft, OpenAI, Oracle, and Tesla are racing to deploy Blackwell and position themselves for the new era of AI.

Unconcealed Strategic Anxiety

Riding the generative AI boom that began last year, NVIDIA's latest quarterly results, released after the market close on February 21st, once again beat market expectations. NVIDIA's total revenue for fiscal year 2024 reached $60.9 billion, up 125.85% year on year; net income was $29.76 billion, up more than 581%; and adjusted earnings per share were $12.96, up 288%. This was the fourth consecutive quarter in which NVIDIA exceeded market expectations.
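
The reported growth rates also imply the fiscal 2023 baseline: dividing each fiscal 2024 figure by (1 + growth) recovers roughly $27 billion in revenue, about $4.37 billion in net income, and about $3.34 in adjusted earnings per share. The minimal sketch below uses only the figures reported above; because the inputs are rounded (and net income growth is stated as "more than" 581%), the results are approximate.

```python
# Back out prior-year values from a current value and its reported
# year-on-year growth: prior = current / (1 + growth).


def prior_year(current: float, yoy_growth_pct: float) -> float:
    """Prior-year value implied by a current value and a YoY growth percentage."""
    return current / (1 + yoy_growth_pct / 100)


revenue_fy24 = 60.9       # $ billion, fiscal 2024
net_income_fy24 = 29.76   # $ billion, fiscal 2024
adjusted_eps_fy24 = 12.96 # $ per share, fiscal 2024

print(round(prior_year(revenue_fy24, 125.85), 2))
print(round(prior_year(net_income_fy24, 581), 2))
print(round(prior_year(adjusted_eps_fy24, 288), 2))
```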

NVIDIA's accelerating results reflect the surging demand for AI computing power among technology companies worldwide. With the emergence of applications like Sora, the world has seen the enormous potential of large models.

Generative AI is likely to enter an "arms race" phase, with technology companies' demand for chips continuing to climb.

According to Counterpoint Research, NVIDIA's revenue soared to $30.3 billion in 2023, up 86% from $16.3 billion in 2022, making it the world's third-largest semiconductor vendor in 2023.

Wells Fargo predicts that NVIDIA will generate up to $45.7 billion in revenue in the data center market in 2024, potentially setting a new record.

However, despite its record-breaking run, NVIDIA is not complacent. Not everyone is content with NVIDIA's near-"monopoly" in AI computing: competitors are working to break its dominance, and customers want a second source of AI chips.

Although NVIDIA's GPUs have many advantages, they may consume too much power and be complex to program when used for AI. NVIDIA's competitors range from startups to other chip manufacturers and technology giants.

Recently, OpenAI CEO Sam Altman has reportedly been seeking more than $8 billion from global investors, including Abu Dhabi-based G42 and Japan's SoftBank Group, to set up a new AI chip company and use the funds to build a network of chip factories, a move aimed squarely at NVIDIA.

On February 17th, industry insiders revealed that Masayoshi Son, the founder of Japan's investment giant SoftBank Group, is seeking to raise up to $100 billion to create a massive joint venture chip company that can complement Arm's chip design division.

AMD has long been working on its next-generation AI strategy, including acquisitions and internal reorganizations, and the rise of generative AI has further expanded its product lineup. The MI300X chip launched last December targets complex large AI models and packs 153 billion transistors, 192GB of memory, and 5.3TB/s of memory bandwidth, roughly 2, 2.4, and 1.6 times those of NVIDIA's most powerful AI chip, the H100.
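
The quoted multiples can be checked by dividing the MI300X figures by the H100's. The article does not list the H100 numbers. The sketch assumes the H100 SXM spec-sheet values of 80 billion transistors, 80GB of HBM3, and 3.35TB/s of memory bandwidth; other H100 variants differ.

```python
# Ratio of AMD Instinct MI300X specs (from the article) to NVIDIA H100 specs.
# Assumption: H100 SXM figures of 80 billion transistors, 80 GB memory and
# 3.35 TB/s memory bandwidth.

mi300x = {"transistors_bn": 153, "memory_gb": 192, "bandwidth_tb_s": 5.3}
h100_sxm = {"transistors_bn": 80, "memory_gb": 80, "bandwidth_tb_s": 3.35}

ratios = {key: mi300x[key] / h100_sxm[key] for key in mi300x}
for key, ratio in ratios.items():
    print(f"{key}: {ratio:.2f}x")
```

The results (about 1.91x, 2.40x, and 1.58x) round to the "2, 2.4, and 1.6 times" cited above.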

Amazon Web Services is also continuously investing in self-developed chips to improve the cost-effectiveness of customers' cloud workloads. AWS launched two series of chips early on for AI, Trainium for training and Inferentia for inference, and continues to update and iterate them.

The recently announced Trainium2 can deliver up to 65 exaflops of AI computing power when scaled out across AWS's cloud network, enough to train a 300-billion-parameter large language model in just a few weeks. AWS's AI chips are already used by leading generative AI companies such as Anthropic.

That these major companies are all investing heavily in their own AI chips shows that none of them wants to cede technological influence and control to the big chip vendors. Only those at the top of the "AI food chain" have a real chance of holding the key to the future.

R&D as the Foundation, Ecosystem as the Path

Jensen Huang has expressed this sentiment in many places: NVIDIA is not selling chips, but selling problem-solving capabilities.

Guided by this idea of building the industry ecosystem together with partners, NVIDIA has assembled an ecosystem of hardware, software, and development tools around the GPU.

For example, NVIDIA's investment in the field of autonomous driving has yielded significant results, with its Drive PX series platform and later the Drive AGX Orin system-on-a-chip becoming key components for many automakers to achieve advanced driver assistance systems (ADAS) and autonomous driving. This is a successful case of deep integration of underlying technological innovation with practical application scenarios.

Facing industry competition, NVIDIA hopes to serve the industry and the market through collaboration across its entire ecosystem.

NVIDIA's partnership with AWS, the cloud computing leader, has also produced remarkable results over more than 13 years, from the first GPU cloud instance to today's Blackwell platform. Customers will soon be able to use infrastructure based on the NVIDIA GB200 Grace Blackwell Superchip and B100 Tensor Core GPUs on AWS.

The combination of NVIDIA's ultra-powerful computing chip system and AWS's leading technologies such as Elastic Fabric Adapter (EFA) network connectivity, advanced virtualization (Amazon Nitro System), and hyperscale clusters (Amazon EC2 UltraClusters) enables customers to build and run trillion-parameter large language models on the cloud faster, on a larger scale, and more securely.

In large model research and development, the trillion-parameter scale was long considered a threshold. According to public reports, GPT-4, released in March last year, has 1.8 trillion parameters, made up of eight 220B models; the recently released Claude 3 did not disclose its parameter count, while Grok-1, recently open-sourced by Musk's xAI, has 314 billion parameters.
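
The reported "eight 220B models" breakdown describes a mixture-of-experts (MoE) layout: 8 × 220 billion is about 1.76 trillion, which rounds to the 1.8 trillion figure. In MoE models a router sends each token through only a few experts, so far fewer parameters are active per token than are stored. The minimal sketch below illustrates that arithmetic. It treats experts as fully separate models, ignoring shared layers, and its top-2 routing is a hypothetical choice, since GPT-4's architecture has not been officially disclosed.

```python
# Parameter arithmetic for a mixture-of-experts (MoE) model, using the
# reported (unconfirmed) "8 x 220B" description of GPT-4.
# Simplifications: experts are treated as separate models (shared layers
# ignored), and top_k, the number of experts used per token, is hypothetical.


def moe_total_params(num_experts: int, params_per_expert: float) -> float:
    """Parameters stored across all experts."""
    return num_experts * params_per_expert


def moe_active_params(top_k: int, params_per_expert: float) -> float:
    """Parameters touched per token if a router selects `top_k` experts."""
    return top_k * params_per_expert


total = moe_total_params(8, 220e9)
active = moe_active_params(2, 220e9)  # hypothetical top-2 routing
print(f"total {total / 1e12:.2f}T, active per token {active / 1e9:.0f}B")
```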

This collaboration between the two parties is expected to provide new possibilities for breakthroughs in the field of generative AI by accelerating the research and development of trillion-scale large language models.

NVIDIA's own AI team has built Project Ceiba on AWS to help drive future innovation in generative AI.

Project Ceiba debuted at AWS re:Invent 2023 in late November 2023 as one of the world's fastest AI supercomputers, built jointly by NVIDIA and AWS, with 65 exaflops of computing performance at the time.

With the move to the Blackwell platform, Ceiba's computing performance rises to roughly 6.4 times the original: the AI supercomputer will be able to process up to 414 exaflops of AI computing power.

The upgraded Ceiba supercomputer will contain 20,736 B200 GPUs, built on the new NVIDIA GB200 NVL72 system, which uses fifth-generation NVLink and includes 10,368 NVIDIA Grace CPUs.

The system scales out through AWS's fourth-generation EFA networking, which provides each Superchip with up to 800 Gbps of low-latency, high-bandwidth network throughput.
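
The Ceiba figures can be cross-checked with simple arithmetic. 414 exaflops spread over 20,736 GPUs is about 20 petaflops per GPU, in line with the 20-petaflop figure quoted for Blackwell earlier, and 414 / 65 is roughly 6.4. The minimal sketch below assumes each GB200 Superchip pairs 2 B200 GPUs with 1 Grace CPU and each GB200 NVL72 rack holds 72 GPUs. The rack count it prints is arithmetic only, not a disclosed Ceiba configuration.

```python
# Consistency checks on the Project Ceiba figures quoted in the article.
# Assumptions: each GB200 Superchip = 2 B200 GPUs + 1 Grace CPU, and each
# GB200 NVL72 rack holds 72 GPUs.

GPUS = 20_736
TOTAL_AI_EXAFLOPS = 414
ORIGINAL_EXAFLOPS = 65
GPUS_PER_SUPERCHIP = 2
GPUS_PER_NVL72 = 72
EFA_GBPS_PER_SUPERCHIP = 800

superchips = GPUS // GPUS_PER_SUPERCHIP   # one Grace CPU per Superchip
nvl72_racks = GPUS // GPUS_PER_NVL72
per_gpu_petaflops = TOTAL_AI_EXAFLOPS * 1000 / GPUS
speedup = TOTAL_AI_EXAFLOPS / ORIGINAL_EXAFLOPS
aggregate_efa_tbps = superchips * EFA_GBPS_PER_SUPERCHIP / 1000

print(superchips, nvl72_racks)  # 10368 288
print(round(per_gpu_petaflops, 1), round(speedup, 1))
print(aggregate_efa_tbps)
```

The Superchip count matches the 10,368 Grace CPUs cited in the article, which supports the one-CPU-per-two-GPUs assumption.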

In addition, AWS plans to offer Amazon EC2 instances equipped with new NVIDIA B100 GPUs and capable of large-scale deployment in Amazon EC2 UltraClusters.

Huang has high expectations for this collaboration: "Artificial intelligence is driving breakthroughs at an unprecedented speed, leading to new applications, business models, and cross-industry innovations.

NVIDIA's partnership with AWS is accelerating the development of new generative AI capabilities and providing customers with unprecedented computing power to push the boundaries of possibility."

Across so many industries and such complex innovations, NVIDIA is building an increasingly powerful AI ecosystem with its partners to jointly lead the new era of generative AI. As Huang puts it, NVIDIA's soul lies where computer graphics, physics, and artificial intelligence intersect.

[Original Reporting by Technology Cloud]

Please indicate "Technology Cloud Reporting" and include a link to this article when republishing.