Although Apple did not unveil many technological breakthroughs, it offered plenty of practical engineering lessons on implementing AI on phones and other end-user devices.

Written by: Niu Hui

Edited by: Zhou Luping

Apple devoted half of its nearly two-hour keynote to artificial intelligence.

At 1:00 AM Beijing time on June 11, 2024, Apple's 2024 Worldwide Developers Conference (WWDC) opened in Cupertino. Last year's conference spotlighted the MR device Vision Pro. This year Apple, criticized for lagging behind its peers in the AI race, finally unveiled a plethora of AI-related products and features.

For instance, the new Siri can follow context across multi-turn conversations, pass information between apps, and draw on the capabilities of ChatGPT. The system can also automatically categorize emails and draft replies, transcribe voice memos into text and summaries, search photos and remove unwanted objects from them, and automate video editing. Apple is integrating these AI capabilities into various apps and scenarios on the phone.

Although there were few eye-catching innovations, Apple and other device makers have one significant advantage: they do not need to invent new demand or use cases. They only need to use AI to address existing pain points on the phone to give users a different experience.

Apple summed up these AI capabilities with a clever term, Apple Intelligence, in an attempt to get users to equate Apple Intelligence (Apple's intelligence) with artificial intelligence.

01

How will AI change the iPhone experience?

An hour into the keynote, Apple finally introduced Apple Intelligence, leading with its application scenarios.

Firstly, generating emojis and images. When users cannot find an emoji that expresses what they want to say, they can create their own by describing it in natural language. When messaging friends, users can also generate images in different styles from their friends' photos to make conversations more interesting. Sketch, illustration, and animation styles are currently supported.

Secondly, call transcription and summaries. The iPhone has so far not supported call recording because of privacy concerns, but it will now offer the feature along with intelligent summaries. However, when a user starts recording, the other party will receive a notification, which could make for an awkward moment.

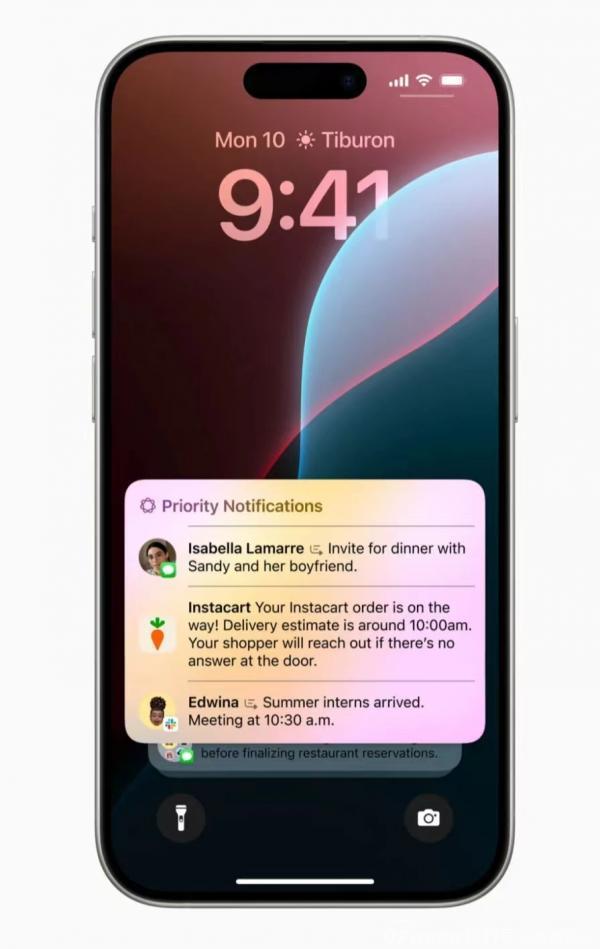

Thirdly, information refinement and generation. This includes distilling web page content in Safari, categorizing and summarizing emails, and generating email replies or polishing text. Apple's AI will also summarize the key information in app notifications so that alerts can be prioritized.

Fourthly, removing objects from images and creating vlogs. Users can enter a description, and Apple's system will draw on the existing photo library to create "movies with unique narrative arcs." They can also tell the phone to search for images with specific characteristics, and the system will find the matching images in the vast photo library. Unwanted elements in an image can be removed with one click.

Fifthly, a more intelligent Siri that supports multi-turn conversations in natural language, understands context, and accepts text input. Siri has been around as a voice assistant for over a decade but has always fallen short on intelligence, handling only simple tasks such as "setting timers" and "creating reminders." Many questions yielded nothing more than a list of search links.

With the support of large models, Siri has a new logo,