Apple Wants to Equip AirPods with Cameras for Spatial Computing and AI?

07/03 2024

Have you ever thought that headphones might one day be equipped with cameras?

Products that combine headphones and cameras do exist, but we generally call them "smart glasses." They are positioned like portable cameras and cycling recorders: photography is the core function, and audio playback is merely incidental.

After all, from the standpoint of user experience and product design, it's hard to imagine a use case that requires a camera on wireless headphones. Given constraints such as wearing comfort and battery life, it doesn't seem like a good idea. Apple, however, seems to think otherwise.

In early February of this year, overseas media reported that Apple was exploring some "future possibilities" for wearable devices. Rumors at the time claimed that Apple was planning AirPods with cameras as well as smart rings. Most commentators believed this was just an experiment in Apple's labs and was unlikely to become an actual product.

Image Source: Google

However, reality is often stranger than expected. The latest reports indicate that Apple plans to mass-produce AirPods with infrared camera modules in 2026. Next to this, rumors about adding a screen to the AirPods charging case seem perfectly ordinary. Put the two together, though, and they paint a picture of a brand-new AirPods: wireless headphones with a screen and a camera. What is this? A portable mini camera?

With so many new features being packed into the tiny AirPods, what exactly does Apple want to achieve?

AirPods, Another Piece of the Puzzle for Apple's Spatial Computing

What does Apple want? Before answering, we first need to understand what the infrared camera mentioned in the rumors actually is. Simply put, a traditional camera's sensor receives visible light and converts it into an image signal. The infrared camera described here is different: in addition to a sensor that receives infrared light, it has an emitter that sends infrared light out. By actively emitting light and receiving its reflection, it builds an image of the area within its detection range.

Image Source: cctvcamerapros

Compared to traditional cameras, infrared cameras can capture images in low-light or no-light environments and are more discreet. Since the emitted infrared light is invisible to humans and most animals, they are often used for applications like outdoor wildlife monitoring.

At first glance, these typical uses seem unrelated to anything AirPods might do with imaging. So what is this component for? In fact, infrared cameras have another use that traditional cameras lack: ranging.

By measuring the time between emitting infrared light and receiving its reflection, an infrared camera can determine the straight-line distance between the emitter and the reflecting object. Because the light travels out and back, the distance is half of the round-trip time multiplied by the speed of light. By repeating the measurement many times in a short period, the camera can also use algorithms to estimate the angle and speed at which the emitter is moving relative to the object's surface.
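The ranging principle described above can be sketched in a few lines of Python. This is only an illustration of the general time-of-flight idea, not Apple's implementation: the function names, the sampling interval, and the example timings are all hypothetical, and the sketch estimates speed along the line of sight only, not the movement angle.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """One-way distance for a measured round-trip time.

    The pulse travels to the object and back, so the path is halved.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def radial_speed_m_per_s(round_trips_s: list[float], sample_interval_s: float) -> float:
    """Average speed along the line of sight over a burst of measurements.

    Negative means the object is getting closer, positive means it is
    moving away. Assumes the samples are evenly spaced (hypothetical).
    """
    if len(round_trips_s) < 2:
        raise ValueError("need at least two measurements")
    if sample_interval_s <= 0:
        raise ValueError("sample interval must be positive")
    first = tof_distance_m(round_trips_s[0])
    last = tof_distance_m(round_trips_s[-1])
    elapsed_s = sample_interval_s * (len(round_trips_s) - 1)
    return (last - first) / elapsed_s


# Hypothetical example: a wall measured at 2.0 m, then at 1.9 m, 0.1 s apart.
example_round_trips = [2 * d / SPEED_OF_LIGHT_M_PER_S for d in (2.0, 1.9)]
print(tof_distance_m(example_round_trips[0]))
print(radial_speed_m_per_s(example_round_trips, sample_interval_s=0.1))
```

Note how short the timings are: a round trip to an object one meter away takes under 7 nanoseconds, which is why this kind of ranging relies on dedicated sensor hardware rather than general-purpose software timing.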

At this point, some readers may have guessed that the cameras on AirPods are not for taking photos or for assorted new features, but solely to further improve spatial audio. In fact, if the module does not need to produce photo-grade images, its power draw and size can be reduced to an acceptable level.

Image Source: Apple

However, this also means that scenarios imagined by netizens, such as "your phone takes worse photos than my earphones," are highly unlikely. Essentially, this is a sensor that emits and detects infrared light, and it can hardly be called a camera. At most, it provides simple image information to help the chip determine where the device is in the surrounding space.

In addition to the outward-facing infrared module, Apple seems to have prepared a biometric monitoring module for AirPods, which is expected to replace the existing inward-facing infrared sensor. The current sensor is mainly used to detect whether the earbuds are being worn, while the new module would also monitor heart rate, body temperature, and other data.

With precise spatial positioning and biological data monitoring, AirPods are clearly more than just a pair of headphones. With the release of Vision Pro, Apple is planning to transform this popular smart wearable device into a brand-new spatial computing aid, completing the audio aspect of the spatial computing ecosystem.

Image Source: Apple

The precise 3D spatial audio provided by AirPods, combined with a Vision Pro spatial display that is nearly indistinguishable from reality, will elevate the user's virtual experience to a new level.

It has to be said that Apple's idea is quite bold. Wanting to cram so many sensors into a wireless headset while maintaining battery life and wearing comfort requires incredibly high industrial design capabilities. So it's not surprising that even in the leaks, it was only referred to as an idea "planned" by Apple. I wouldn't be surprised if these goals are not achieved by 2026.

In fact, current wireless headphones would already struggle to process the data from an infrared camera in real time. The best solution I can think of is to borrow the computing power of a wirelessly connected device such as a phone. But that would introduce latency into the spatial audio signal, which obviously doesn't meet Apple's stringent requirements for the new generation of "spatial audio."

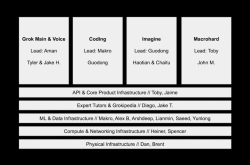

According to previous reports, Apple's plan is to use artificial intelligence and build an NPU (neural processing unit) into the headphones to handle the spatial data. This is indeed a feasible solution. For spatial computing and similar workloads, NPUs have significant advantages over traditional processors: AI algorithms built through deep learning can process spatial data more efficiently, and at much lower power consumption.

Based on the current rate of technological progress, achieving this goal is not out of reach. It actually depends more on when the integration cost of related hardware can be reduced to an acceptable level.

Additionally, I'm still pondering a question: Will Apple stop here?

In the AI era, are wearable smart devices the future?

Today, the smartphone is undoubtedly the representative personal smart device. In the AI era, however, smart devices will take more diverse forms. With the help of cloud computing power and large AI models, even a pair of headphones could complete many complex tasks in the future. Wearables will no longer be mere accessories to the smartphone.

In fact, Apple is already pushing in this direction, and Vision Pro is a good example. To let users wear it around other people, Apple has even gone so far as to add an external flat-panel display and an array of cameras and sensors, so that the wearer's face is reproduced on the outside and the real world is projected on the inside.

Image Source: Apple

However, spatial computing devices like Vision Pro are obviously not suited to being everyday wearables. To some extent, they are more like laptops: worn on the head only when people need an immersive experience or have to complete complex visual interaction tasks.

Vision Pro's battery life when unplugged also proves this point. It's not a product designed to be carried around all day, so Apple obviously needs other devices to fill in the missing link. What will they be? The answer is actually quite clear: Apple Watch + AirPods + AR glasses. The responsibilities of the first two will overlap somewhat, allowing users to choose device combinations more freely.

The report that AirPods will get infrared camera modules came with another piece of information: in 2023, Apple launched a project called B798, which aims to equip AirPods with a lower-resolution camera to collect image-level data.

Unlike an infrared module that can only be used for spatial positioning, such a camera module could feed an AI assistant, allowing the AI in AirPods to "see" the user's surroundings and provide help based on image data.

Image Source: beebom

For example, when you walk up to a restaurant and want to know its reputation and average price per person, the current approach is to pick up your phone, search for the restaurant's name in an app like Dianping, and read the reviews. In the future, you could simply ask Siri by voice. Siri would identify the restaurant's name through the camera on the AirPods, look up relevant information using your location and other data, and then read the results back through the headphones.

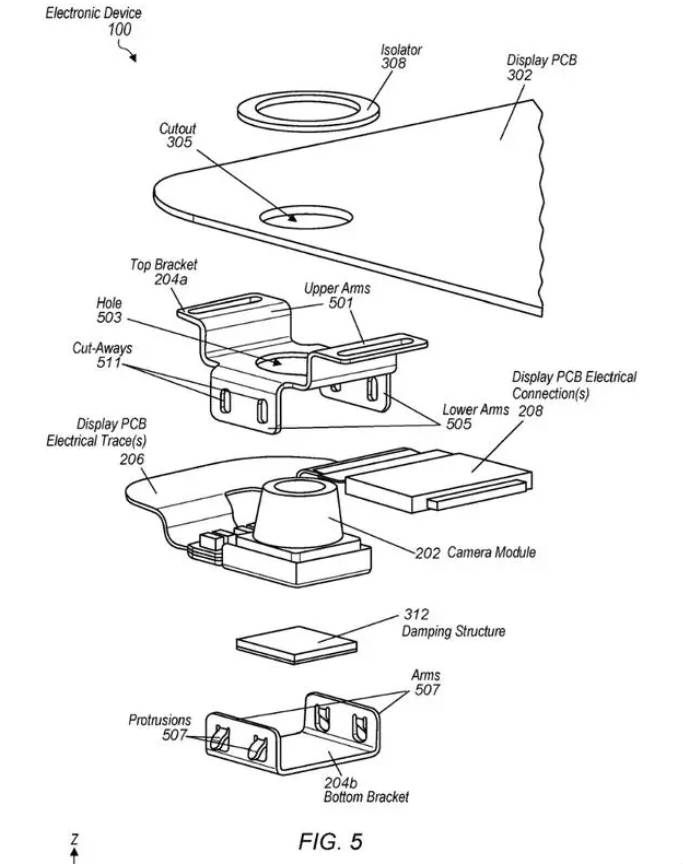

Interestingly, in Apple's plans, AirPods are not the only wearable to gain a camera module. A patent that surfaced in March this year describes a way to equip Apple Watch with an under-display camera module for functions like photography, facial tracking, and Face ID, making Apple Watch a more independent smart device.

Image Source: Apple

Given Apple's moves in AI and other areas, it's not hard to guess the main purpose of this camera module. It is probably an AI vision module for Apple Watch, providing real-time image capture so that the watch can stand in for the iPhone to a certain extent.

However, when using the Apple Watch's camera, whether it's an under-screen or side-mounted type, there's actually a problem: it's not natural. I don't think anyone would want to keep their arm raised while walking, right? This gives rise to the need for a camera on AirPods, realizing intelligent applications in different scenarios through the complementarity of the two wearable smart devices.

In most cases, image data from AirPods would be enough for tasks like navigation and information queries. When higher-precision image data is needed, Apple Watch can provide additional help. I even wonder whether, in Apple's vision, the iPhone will eventually become a thing of the past. If AR glasses can handle image display, is there still a need for the iPhone?

At that time, perhaps the iPhone will become something else, like a portable local computing power terminal. All hardware will serve the built-in high-performance AI chip, and this terminal may not even need a screen. Apple Watch, AirPods, and Apple AR Glasses (paired with a smart ring) can provide various real-time interactive experiences in different scenarios, which is far more effortless and convenient than swiping your finger on a glass screen.

Of course, so far this is just wild speculation based on Apple's related patents and product lineup. Realizing such a grand blueprint would probably require significant progress in multiple industries, semiconductors among them.

Moreover, the intelligent ecosystem based on spatial computing devices is still in its infancy. At least in the short term, handheld intelligent terminals like the iPhone will still be the core of the entire mobile intelligent ecosystem.

Source: Leikeji