NVIDIA and Qualcomm Race to Bet on It: How "AI Frame Interpolation" Is Changing Gaming

08/07 2024

Over the past half-century, video games have been a key force driving advances in computer technology. From Atari's first-generation consoles to console wars spanning multiple generations, and from NVIDIA's graphics cards to the GPUs in mobile chips, gaming has played an instrumental role. Conversely, the development of games is inseparable from advances in computer technology.

In the past few years, on both PCs and mobile devices, gaming performance has improved not only thanks to steady advances in GPUs but also thanks to rapid progress in super-resolution and frame interpolation technologies. Recently, AMD unveiled a technical preview of AFMF 2, its new generation of frame generation (commonly known as "frame interpolation") technology, drawing widespread attention from the gaming community.

AMD AFMF 2 Arrives: Gaming Experience Enters a New Round of "Frame Interpolation" Race

AFMF stands for AMD Fluid Motion Frames. The first-generation AFMF was officially launched for all users in January this year; just six months later, AMD has already shipped a technical preview of the next-generation AFMF 2.

AFMF 2 Technical Preview Launched, Image/Leitech

Compared to the first generation, AFMF 2 focuses on significantly reducing latency, optimizing smoothness, and enhancing performance, with optimizations for fast motion, broader game compatibility, and more settings and gameplay options. AMD officially stated that AFMF 2 represents a significant advancement in frame generation technology.

Members of Digital Foundry, after experiencing the AFMF 2 technical preview, generally acknowledged AMD's improvement in gaming performance, although noticeable artifacts appeared in some architectural scenes in "Cyberpunk 2077".

Theoretically, when paired with AMD FSR 3's super-resolution technology, AFMF 2 can deliver even better gaming performance and image quality.

Not only AMD but also other manufacturers have introduced, or plan to introduce, their own frame interpolation technologies, driving iteration and adoption. Among the major players, NVIDIA, one of the first to champion an AI-driven graphics revolution, has advanced its DLSS (which includes both super-resolution and frame interpolation) to version 3.5, while Qualcomm's Snapdragon-based AFME super-frame technology has begun adapting games with version 2.0.

Tencent Games' "Tales of Tarir" Adapted for Qualcomm AFME 2.0, Image/Leitech

Intel also plans to bring its own frame generation technology to XeSS. Last year, Anton Kaplanyan, Intel's Vice President of Graphics Research, presented a solution called ExtraSS, which generates new frames through frame extrapolation rather than interpolation. MediaTek's approach is more intriguing: although it was among the first to bring super-resolution to mobile gaming, its frame interpolation technology remains focused on video.

Looking back, frame interpolation in gaming can be traced to early linear interpolation techniques, which used simple algorithms to generate transition frames between key frames and were originally applied to video. As computing power grew and algorithms advanced, frame interpolation evolved into more complex and intelligent systems and eventually made its way into interactive games.
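The simplest form of this idea, plain linear blending between two frames, can be sketched as follows. This is a minimal illustration assuming grayscale frames stored as nested Python lists; `Frame` and `blend_frames` are hypothetical names, not any vendor's code.

```python
from typing import List

Frame = List[List[float]]  # grayscale image, frame[y][x], values in [0, 1]


def blend_frames(a: Frame, b: Frame, t: float = 0.5) -> Frame:
    """Linear interpolation: each output pixel is (1 - t) * a + t * b."""
    return [[(1 - t) * pa + t * pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


if __name__ == "__main__":
    # A bright dot moves from x=0 to x=4 between two frames.
    a = [[1.0, 0.0, 0.0, 0.0, 0.0]]
    b = [[0.0, 0.0, 0.0, 0.0, 1.0]]
    print(blend_frames(a, b))  # [[0.5, 0.0, 0.0, 0.0, 0.5]]
```

Instead of one dot halfway across, the blend produces two half-bright copies. Plain blending ignores motion, which is why naive interpolation breaks down on moving content and why later techniques track motion explicitly.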

In recent years especially, NVIDIA's DLSS, which combines super-resolution and frame interpolation, has gone through several iterations, from the initial DLSS 1.0 to the current DLSS 3.5, evolving from AI-based pixel prediction to AI-assisted rendering and adding frame generation along the way.

However, despite its many advantages, AI frame interpolation still faces challenges in gaming. In rapidly changing scenes, for instance, the AI may generate inaccurate frames, causing artifacts and blurring. In addition, the differing implementations and technical approaches taken by different manufacturers have left many users confused when choosing among them.

All Frame Interpolation, but What Are the Differences?

Although frame interpolation technologies from various manufacturers can achieve the basic function of "frame interpolation," they differ significantly in their implementation methods and effects.

Videos are essentially sequences of individual frames; the mainstream format for movies is 24 frames per second, meaning 24 still images are shown every second. In gaming, the core of frame interpolation is using AI to generate new frames between the frames the GPU actually renders, thereby raising the game's frame rate and smoothness.
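The frame-rate arithmetic behind interpolation can be sketched as follows, assuming a steady state in which a fixed number of generated frames is inserted between every pair of rendered frames. The function names are hypothetical.

```python
def displayed_fps(rendered_fps: float, generated_per_gap: int = 1) -> float:
    """Displayed frame rate when `generated_per_gap` frames are inserted
    between every pair of consecutive rendered frames (steady state)."""
    return rendered_fps * (1 + generated_per_gap)


def frame_time_ms(fps: float) -> float:
    """Time between consecutive displayed frames, in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    rendered = 60.0  # hypothetical native render rate
    shown = displayed_fps(rendered)  # one generated frame per gap
    print(f"rendered {rendered:.0f} fps ({frame_time_ms(rendered):.2f} ms/frame)")
    print(f"displayed {shown:.0f} fps ({frame_time_ms(shown):.2f} ms/frame)")
```

Doubling the displayed rate halves the time between displayed frames, which is where the extra smoothness comes from. Generated frames do not reflect new player input, though, so responsiveness still tracks the rendered frames.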

However, how these new frames are generated and processed affects the latency and visual effects of frame interpolation technology in actual gaming experiences. The different methods and paths adopted by different manufacturers to achieve this function directly lead to varying results and differing evaluations from the gaming community.

NVIDIA DLSS 3: Truly AI-driven

"DLSS is a revolutionary breakthrough in AI-driven graphics," Image/NVIDIA

For instance, starting with version 3.0, NVIDIA's DLSS (Deep Learning Super Sampling) introduced frame generation based on motion vectors and optical flow, using deep learning and AI algorithms to generate new frames and thereby improve the game's frame rate and image quality.
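NVIDIA has not published DLSS frame generation as reusable code, and its real pipeline combines a neural network with optical flow. The sketch below only illustrates the underlying idea of motion-compensated interpolation with engine-supplied motion vectors, assuming integer per-pixel vectors and list-based grayscale frames. `interpolate_with_motion` and its overlap and hole-filling heuristics are hypothetical.

```python
from typing import List, Optional, Tuple

Frame = List[List[float]]                   # grayscale image, frame[y][x]
MotionField = List[List[Tuple[int, int]]]   # per-pixel (dx, dy) from frame a to frame b


def interpolate_with_motion(a: Frame, b: Frame, mv: MotionField, t: float = 0.5) -> Frame:
    """Build an in-between frame at time t by moving each pixel of `a`
    a fraction t along its motion vector (forward splatting)."""
    h, w = len(a), len(a[0])
    out: List[List[Optional[float]]] = [[None] * w for _ in range(h)]
    # Hypothetical heuristic: write slow pixels first so fast-moving pixels
    # (assumed to be foreground) win when two pixels land on the same spot.
    order = sorted(
        ((y, x) for y in range(h) for x in range(w)),
        key=lambda p: abs(mv[p[0]][p[1]][0]) + abs(mv[p[0]][p[1]][1]),
    )
    for y, x in order:
        dx, dy = mv[y][x]
        # Note: Python's round() rounds halves to even.
        tx, ty = x + round(t * dx), y + round(t * dy)
        if 0 <= tx < w and 0 <= ty < h:
            out[ty][tx] = a[y][x]
    # Unfilled pixels are disoccluded areas; assume `b` shows what was revealed there.
    return [[b[y][x] if out[y][x] is None else out[y][x] for x in range(w)]
            for y in range(h)]


if __name__ == "__main__":
    a = [[1, 0, 0, 0, 0]]
    b = [[0, 0, 0, 0, 1]]
    mv = [[(4, 0), (0, 0), (0, 0), (0, 0), (0, 0)]]  # the dot moves 4 px right
    print(interpolate_with_motion(a, b, mv))  # [[0, 0, 1, 0, 0]]
```

With correct motion vectors the dot lands halfway across, rather than appearing as two half-bright copies the way a plain per-pixel blend would render it.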

Compared with algorithms that merely analyze image changes to guess at motion, DLSS frame generation achieves higher accuracy and better results, roughly doubling the displayed frame rate (more when combined with DLSS super-resolution) without significantly impacting the rendering of the original frames.

Digital Foundry's tests show that on an RTX 4090, "Marvel's Spider-Man" at 4K with DLSS 3 enabled performs up to 203.6% better than with DLSS off, while keeping image degradation almost imperceptible.

Image/Digital Foundry

However, it's essential to note that DLSS's frame generation relies on Tensor Cores (AI computing units) and motion vector data to acquire motion information between frames and generate high-quality intermediate frames. Therefore, in practice, DLSS frame generation depends both on NVIDIA GPUs equipped with Tensor Cores and on game compatibility and support.

Fortunately, NVIDIA's leading position in the gaming GPU market and its long-standing partnerships give it strong influence among game developers, and many AAA titles have already adopted DLSS 3 frame generation.

AMD AFMF 2: Heading Toward AI, with Significant Latency Improvements

In contrast, AMD's newly launched AFMF 2 requires no adaptation work from game developers. It supports all DX11 and DX12 games, as well as all products based on AMD's RDNA 2 architecture or newer, including Radeon RX 6000 and RX 7000 series GPUs and APUs with Radeon 700M-series graphics.

In short, AMD AFMF 2 offers broader compatibility at both the hardware and game levels. The key is that AFMF 2 relies on neither game-supplied motion vectors nor dedicated AI hardware, whereas NVIDIA's DLSS frame generation depends on both.
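AMD has not detailed AFMF 2's algorithm. Without game-supplied motion vectors, however, a technique like this has to estimate motion from the finished images alone. One classic textbook way to do that is block matching, sketched below as a stand-in under that assumption, not as AMD's method. `sad`, `match_block`, and the `size`/`radius` parameters are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[float]]  # grayscale image, frame[y][x]


def sad(a: Frame, b: Frame, ax: int, ay: int, bx: int, by: int, size: int) -> float:
    """Sum of absolute differences between a size x size block of `a` at
    (ax, ay) and a block of `b` at (bx, by)."""
    return sum(abs(a[ay + j][ax + i] - b[by + j][bx + i])
               for j in range(size) for i in range(size))


def match_block(a: Frame, b: Frame, x: int, y: int, size: int, radius: int) -> Tuple[int, int]:
    """Estimate the (dx, dy) motion of the block at (x, y) from `a` to `b`
    by exhaustive search within +/- radius pixels, using pixels only."""
    h, w = len(a), len(a[0])
    best, best_cost = (0, 0), float("inf")
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            nx, ny = x + dx, y + dy
            # Skip candidate blocks that would fall outside frame b.
            if 0 <= nx <= w - size and 0 <= ny <= h - size:
                cost = sad(a, b, x, y, nx, ny, size)
                if cost < best_cost:
                    best, best_cost = (dx, dy), cost
    return best


if __name__ == "__main__":
    a = [[0] * 6 for _ in range(6)]
    b = [[0] * 6 for _ in range(6)]
    for yy in (1, 2):
        for xx in (1, 2):
            a[yy][xx] = 1  # 2x2 bright block at (1, 1) in frame a
    for yy in (2, 3):
        for xx in (3, 4):
            b[yy][xx] = 1  # same block at (3, 2) in frame b
    print(match_block(a, b, 1, 1, size=2, radius=2))  # (2, 1)
```

The estimated vectors can then drive a motion-compensated interpolator. Guessing motion from pixels is also where errors creep in on fast or repetitive content, one reason image-only approaches tend to show more artifacts.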

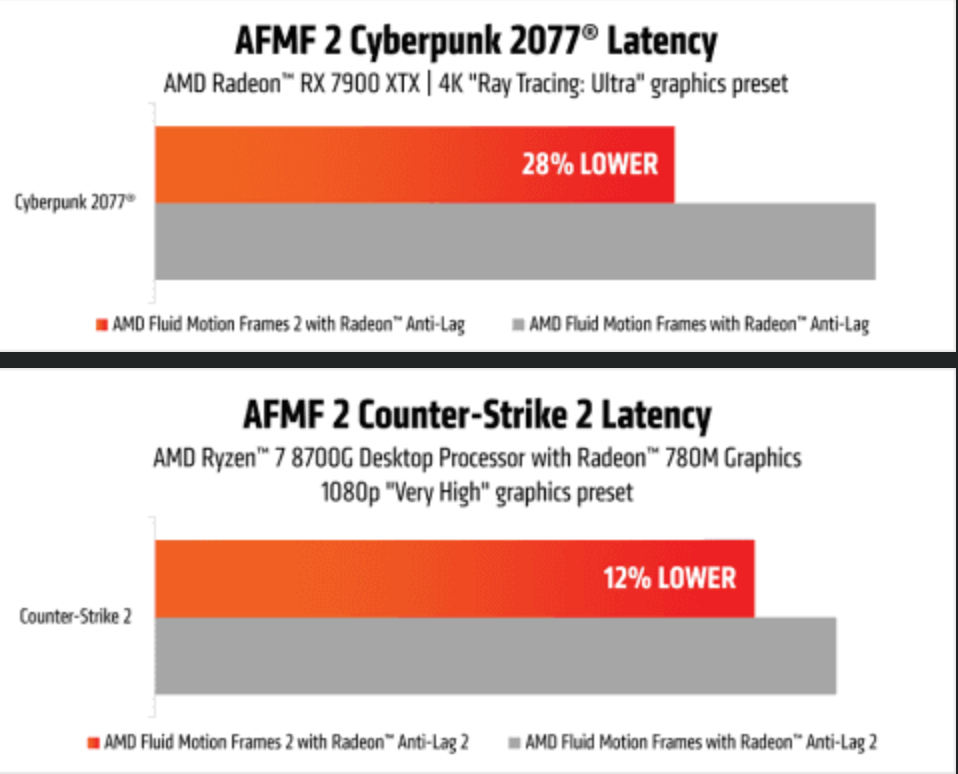

While AFMF 2 may still lag behind DLSS 3 in frame generation quality, AMD claims that in "Cyberpunk 2077" (4K, maximum ray tracing settings), AFMF 2 reduces latency by 28% compared to the previous-generation AFMF.

Image/AMD

On a system with an AMD Ryzen 7 8700G processor and Radeon 780M integrated graphics, "Counter-Strike: Global Offensive" can reach a displayed frame rate of over 120fps at 1080p with very high graphics settings, while latency is also reduced by 12%.

In terms of settings, AFMF 2 offers two modes: "Search" and "Performance." The former maximizes image quality and frame rate, while the latter minimizes power consumption to ensure smooth gameplay.

Looking further ahead, AMD Chief Technology Officer Mark Papermaster has said that "(AMD) is leveraging AI to upgrade our gaming devices." Moreover, RDNA 3 finally integrates AI computing units, suggesting that future versions of FSR (including AFMF) will truly be designed around and run on AI.

Qualcomm AFME 2.0: GPU-Only Frame Interpolation on Mobile

Qualcomm's AFME, or Adreno Frame Motion Engine, was first introduced in 2021 and upgraded to version 2.0 at last year's Snapdragon Summit. Unlike other common mobile-game frame interpolation solutions, AFME 2.0 requires neither an external chip nor the CPU, relying solely on the GPU for its computations.

At the Qualcomm Gaming Technology Awards in July, Tencent Games' "Tales of Tarir" announced support for AFME 2.0; because the technology does not use the CPU, it delivers more stable frame rates.

"Tales of Tarir" Introduces AFME, Image/Leitech

While frame interpolation on PCs mainly aims to improve graphics and frame rates, on mobile devices its greater value lies in reducing load and power consumption.

According to data provided by Hu Haoxiang, head of the engine team at Tencent Games' Domestic Publishing Line Ecosystem Development Department, AFME 2.0 reduces energy consumption by approximately 30% compared to native 60fps solutions, resulting in smoother gameplay without compromising image quality.

Meanwhile, OnePlus is also using the Snapdragon platform to boost gaming performance: the entire frame generation and computation process runs on Qualcomm's chips, so a generated frame can be displayed just one 120Hz vertical-sync period (about 8.3 ms) after the game-rendered frame.
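The timing can be sketched as follows, assuming a 120Hz panel, a game rendering at 60fps, and one generated frame shown in the vsync slot between two rendered frames. The actual OnePlus/Qualcomm pipeline is not public, and `display_schedule` is a hypothetical helper.

```python
from typing import List, Tuple


def display_schedule(n_rendered: int, refresh_hz: float = 120.0) -> List[Tuple[float, str]]:
    """Display times (ms) when each rendered frame is followed, one vsync
    period later, by a generated frame."""
    period_ms = 1000.0 / refresh_hz  # one vertical-sync period: ~8.33 ms at 120 Hz
    schedule: List[Tuple[float, str]] = []
    slot = 0
    for i in range(n_rendered):
        schedule.append((slot * period_ms, "rendered"))
        slot += 1
        # A generated frame sits between two rendered frames, so none follows the last one.
        if i < n_rendered - 1:
            schedule.append((slot * period_ms, "generated"))
            slot += 1
    return schedule


if __name__ == "__main__":
    for t_ms, kind in display_schedule(3):
        print(f"{t_ms:6.2f} ms  {kind}")
```

In this model the panel shows a new image every 8.33 ms slot, while the game only has to render a frame every other slot (about every 16.67 ms, i.e. 60fps).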

AI Frame Interpolation, a True Game Changer

Gaming and GPUs have clearly driven each other forward:

As game graphics and complexity improve, GPU graphics rendering performance must continuously advance to meet players' demands for high frame rates, high resolutions, and realistic visuals. To support the latest AAA games, GPU manufacturers enhance computational power and optimize image processing technologies, which, in turn, drives improvements in game graphics and complexity, enhancing players' immersion and interactive experience.

However, gains in GPU rendering performance have clearly hit a bottleneck to some extent, and AI-based frame interpolation offers a new opportunity for both games and GPUs.

In traditional rendering, every frame requires complex graphics rendering, which consumes significant computing power. AI frame interpolation instead generates new intermediate frames, so only some of the displayed frames need full rendering; this lowers the average computation per displayed frame and significantly boosts frame rates and smoothness.
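A back-of-the-envelope model of this saving is sketched below, with purely hypothetical per-frame costs (real costs vary by GPU, game, and resolution). The function name is likewise hypothetical.

```python
def avg_cost_per_displayed_frame(render_cost: float, gen_cost: float,
                                 generated_per_rendered: int = 1) -> float:
    """Average cost (e.g. ms of GPU time or energy units) per displayed frame
    when each fully rendered frame is followed by `generated_per_rendered`
    interpolated frames."""
    total = render_cost + generated_per_rendered * gen_cost
    return total / (1 + generated_per_rendered)


if __name__ == "__main__":
    # Hypothetical: a full render costs 10 units, a generated frame 2 units.
    print(avg_cost_per_displayed_frame(10.0, 2.0))     # 6.0
    print(avg_cost_per_displayed_frame(10.0, 2.0, 0))  # 10.0 (no interpolation)
```

The saving only holds while generating a frame is much cheaper than rendering one, which is why efficient hardware paths for frame generation matter.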

Take NVIDIA's DLSS 3.5 as an example: it uses AI algorithms and the GPU's Optical Flow Accelerator to significantly increase frame rates without sacrificing image quality. This not only improves the gaming experience but also reduces the load on the GPU, enabling mid- to low-end graphics cards to run demanding games.

The impact of frame interpolation technology is multifaceted. Under its influence, game developers can enhance game graphics and detail while maintaining high frame rates, attracting more players.

Furthermore, AI frame interpolation, as represented by DLSS 3, has the potential to reduce development costs and time, since developers no longer need to optimize image processing algorithms for each scene and action individually. Its popularization diversifies the game market, making it easier for independent developers and small studios to create high-quality games and expanding both market competition and creative space.

On the other hand, traditional GPU designs primarily focus on enhancing computational power and graphics rendering performance, while the introduction of AI frame interpolation technology requires GPUs to possess stronger AI computing capabilities.

Image/NVIDIA

In response, GPU manufacturers are integrating more AI processing units into their products to support AI frame interpolation and other AI-driven image processing technologies. NVIDIA's RTX 40 series graphics cards integrate more Tensor Cores dedicated to AI computing and deep learning tasks, and AMD has also begun introducing AI computing units.

Simultaneously, the rapid development of AI technology will inevitably drive the advancement and popularization of AI frame interpolation.

Judging by current trends, both software and hardware are increasingly embracing AI-accelerated computing, fundamentally transforming the computing environment of all kinds of hardware. Compared with gains from traditional graphics rendering, AI-driven performance improvements offer not only higher efficiency but also more room for growth.

Source: Leitech