OpenAI o1 pioneers "slow thinking," but domestic AI has already rallied around CoE and set out together

09/19 2024

After the release of OpenAI o1, its complex logical reasoning capabilities amazed the industry, with mathematical abilities reaching a doctoral level. For example, the long-standing issue of "which is bigger, 9.9 or 9.11?", which had previously plagued LLMs, was resolved in the o1 era.

Hence, a saying emerged: in this wave of AI, the more China chases, the further behind it falls, and the gap with OpenAI keeps widening.

Is this really the case? Instead of rushing to conclusions, let's consider three questions together:

How has the path to AGI, dominated by large models in recent years, developed?

To what extent has Chinese AI progressed along the world-class technology roadmap?

And in the face of o1, can domestic AI respond, and how?

Undoubtedly, OpenAI has consistently been the "locomotive" of AI technological innovation. From ChatGPT to the present, OpenAI has proven with one model after another the three technical directions towards AGI:

1. The GPT route. From ChatGPT to GPT-4o, the core of this route is a general technique: using a model to statistically model token streams, where tokens can be text, images, audio, action choices, molecular structures, and so on. The latest model, GPT-4o, is representative of multimodal fusion. Industry experts have suggested that a more fitting name for this route might be "Autoregressive Transformer" or something similar.

2. The Sora route. Like GPTs, Sora also employs the Transformer architecture, but why is it considered a separate branch? Because it demonstrates a modeling capability for complex phenomena in the real world. Yann LeCun, Turing Award winner and Chief Scientist at Meta, believes that Sora may be a more accurate modeling approach, closer to the essence of the world, after shedding some of the knowledge interventions imposed by human experts.

3. The o1 route. Both GPTs and Sora are probabilistic models that sacrifice reasoning efficiency, which in theory leads to "hallucinations" and unreliable output. How can models acquire genuine logical reasoning capabilities? The o1 route relies on inference methods built on reinforcement learning (RL): it decomposes complex problems with chains of thought (CoT) and collaborates with multiple sub-models to solve them, akin to automating complex prompts. This significantly enhances the model's reasoning abilities, especially on mathematical problems and complex tasks that LLMs struggle with.

It is not difficult to see that, over the past two years, each of the three directions led by OpenAI has given Chinese AI a clear and achievable goal. Today, domestic GPT-like and Sora-like models are not inferior to OpenAI's in underlying architecture, specific technologies, or deployed products, and the technological gap is narrowing rapidly.

This also demonstrates that each time OpenAI clarifies a direction, the result is not "the more we chase, the further behind we fall"; instead, it allows Chinese AI to focus and concentrate resources for effective research and development, further narrowing the gap.

Specifically for o1, we believe it will continue this trend, and domestic AI will soon achieve breakthroughs. So, how prepared is the industry currently?

The statement "There are no obstacles on the path to AGI" is not an exaggeration when describing the significance of o1. Is domestic AI truly prepared for such a leapfrogging breakthrough? Let's delve into the essence of the technology.

Greg Brockman, co-founder and president of OpenAI, analyzed the underlying logic of o1 in a post. He wrote: "OpenAI o1 is our first model trained through reinforcement learning that thinks deeply before answering questions. The model engages in System 1 thinking, while chains of thought unlock System 2 thinking, yielding extremely impressive results."

System 2 thinking refers to the slow, complex reasoning that the human brain performs relying on logic and rational analysis. It can collaborate with System 1, responsible for rapid intuitive decision-making, to achieve better model performance.

Zhou Hongyi, founder of 360 Group, suggested that OpenAI o1 might follow the "Dual Process Theory," where the core lies in the synergistic operation of the two systems rather than their independence. From this, it can be inferred that the more intelligent models built internally may be fusion systems combining GPT and the o-series with chains of thought, with the former for "fast thinking" and the latter for "slow thinking." The Collaboration-of-Experts (CoE) architecture brings together numerous large models and expert models, achieving "fast thinking" and "slow thinking" through chains of thought and "multi-system collaboration."
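The "fast thinking" and "slow thinking" split described above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `looks_complex` heuristic, the `answer` function, and the toy models are hypothetical and do not describe how o1 or 360's CoE actually routes requests.

```python
from typing import Callable, Sequence

# A "model" is just a function from text to text here (stand-in, not a real API).
Model = Callable[[str], str]


def looks_complex(question: str) -> bool:
    """Hypothetical heuristic: long or reasoning-flavored questions go to the slow path."""
    markers = ("why", "prove", "step", "relationship", "compare")
    q = question.lower()
    return len(q.split()) > 20 or any(m in q for m in markers)


def answer(question: str, fast_model: Model, slow_steps: Sequence[Model]) -> str:
    """Route a question to a single fast model or to a multi-step slow chain."""
    if not looks_complex(question):
        # "Fast thinking": one direct response.
        return fast_model(question)
    # "Slow thinking": feed each step's output into the next step.
    context = question
    for step in slow_steps:
        context = step(context)
    return context


# Toy stand-ins so the sketch runs without any external service.
fast = lambda q: "fast:" + q
steps = [lambda c: c + " -> decompose", lambda c: c + " -> verify"]

simple_result = answer("What is 2+2?", fast, steps)
hard_result = answer("Compare 9.9 and 9.11", fast, steps)
```

In this toy run the short arithmetic question takes the fast path, while the comparison question passes through both slow steps. A real system would presumably replace the keyword heuristic with a learned router.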

Readers familiar with domestic AI may be wondering: "slow thinking," where have I heard that before?

Indeed, enhancing machines' cognitive intelligence is a long-standing topic in AI, and the concept of "slow thinking" is not unique to OpenAI. As early as the ISC.AI2024 conference held at the end of July this year, Zhou Hongyi mentioned that 360 would "build a slow-thinking system based on an agent-based framework to enhance the slow-thinking capabilities of large models and combine multiple large models to solve business problems."

So, rest assured that in today's world where technological innovation highly depends on global intellectual collisions, no technological approach can be monopolized. In fact, Chinese AI circles proposed "slow thinking" even earlier.

Of course, one might worry that while having advanced ideas is one thing, possessing the engineering capabilities to build a model based on System 2 thinking is another. Specifically, are domestic AI products ready to create o1-like models? I believe three conditions have been met:

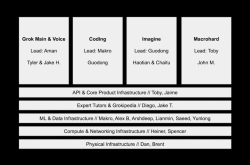

1. Consistent approach. A model's underlying framework is the result of long-term accumulation and gradual progress. 360's approach is consistent with o1's technical architecture: its pioneering CoE architecture was officially launched on August 1st. CoE stands for Collaboration-of-Experts, in which multiple models collaborate and work in parallel to carry out multi-step reasoning. The CoE architecture shares similar concepts and methods with o1 but was released earlier, which shows that domestic AI has not lagged behind in technical direction and had already begun exploring it.

2. Product deployment. Currently, o1 is still in preview: the actual user experience differs from the official use cases, and usage is restricted. For example, o1-mini limits ChatGPT Plus users to 50 prompts per week. No matter how good the technology is, it is useless if it is not accessible. This is where domestic AI's productization advantages emerge. The CoE architecture is implemented in 360AI Search, for example, which makes it more stable when facing uncertain or complex inputs and lets it deliver more accurate, timely, and authoritative content. Based on the CoE architecture, 360AI Search has surpassed Perplexity AI and rapidly grown into the world's largest AI-native search engine, expanding at an extremely high rate of 113% month-on-month.

Furthermore, the AI assistant in 360AI Browser allows users to intuitively experience features like model arenas and multi-model collaboration. The CoE architecture has integrated 54 large model products from 16 leading domestic vendors, including Baidu, Tencent, Alibaba, Zhipu AI, MiniMax, and Moonshot AI, so users can select any three large models to collaborate and achieve significantly better results than a single model. The first acts as an expert, providing an initial answer; the second serves as a reflector, correcting and supplementing the expert's answer; and the third summarizes and optimizes the previous rounds of responses. In many real-world examples, even if the first expert model provides an incorrect answer, the reflector and summarizer models can correct it during subsequent collaboration, mimicking human decision-making processes.
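The three-role collaboration just described (expert, reflector, summarizer) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `collaborate` function, the prompt wording, and the toy models are hypothetical and are not 360's implementation or API.

```python
from dataclasses import dataclass
from typing import Callable

# A "model" is a plain function from prompt to answer (stand-in for a real LLM call).
Model = Callable[[str], str]


@dataclass
class CollaborationResult:
    draft: str   # the expert's initial answer
    review: str  # the reflector's corrections and additions
    final: str   # the summarizer's consolidated answer


def collaborate(question: str, expert: Model, reflector: Model,
                summarizer: Model) -> CollaborationResult:
    """Run one expert -> reflector -> summarizer pass over a question."""
    draft = expert(question)
    review = reflector(
        f"Question: {question}\nDraft: {draft}\nCorrect and supplement the draft."
    )
    final = summarizer(
        f"Question: {question}\nDraft: {draft}\nReview: {review}\nWrite the final answer."
    )
    return CollaborationResult(draft, review, final)


# Toy models (assumptions) that mimic an expert error being caught downstream.
def toy_expert(prompt: str) -> str:
    return "9.11 is larger than 9.9"


def toy_reflector(prompt: str) -> str:
    return "The draft is wrong: 9.9 = 9.90, which is larger than 9.11"


def toy_summarizer(prompt: str) -> str:
    # Trust the reviewer: return the text of the "Review: " line.
    for line in prompt.splitlines():
        if line.startswith("Review: "):
            return line[len("Review: "):]
    return prompt


result = collaborate("Which is bigger, 9.9 or 9.11?",
                     toy_expert, toy_reflector, toy_summarizer)
```

Here the expert's wrong draft is kept in `result.draft` for inspection, while `result.final` carries the reflector's correction, which mirrors the article's point that later roles can repair an early mistake.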

3. Leading capabilities. Someone might ask, given that domestic large models still lag behind OpenAI in data, algorithms, and computing power, can CoE's "strength in numbers" truly catch up with o1? We can test this with practical examples.

Take some well-known complex logical reasoning problems, such as holiday scheduling questions and the comparison of 9.9 and 9.11, and pose them to GPT-4o, o1-preview, and 360AI Browser at the same time. It becomes apparent that 360's multi-model collaboration effectively combines the strengths of each model, achieving a "strength in numbers" effect. For instance, when asked, "What is my relationship to the son of my grandfather's brother's son's wife's sister?", both 360's multi-model collaboration and o1-preview gave correct answers, while GPT-4o was incorrect. The power of multi-model collaboration is once again validated.
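For reference, the 9.9 versus 9.11 question has an exact answer that is easy to check. The small Python sketch below compares the two readings side by side. The version-number reading is included only as one commonly suggested, unconfirmed guess at why models stumble; the article itself does not give a cause, and both function names are hypothetical.

```python
from decimal import Decimal


def compare_as_decimals(a: str, b: str) -> str:
    """Return the larger of two numbers written as decimal strings."""
    x, y = Decimal(a), Decimal(b)
    if x == y:
        return "equal"
    return a if x > y else b


def compare_as_versions(a: str, b: str) -> str:
    """Return the larger value when each string is read like a version number."""
    x = tuple(int(part) for part in a.split("."))
    y = tuple(int(part) for part in b.split("."))
    if x == y:
        return "equal"
    return a if x > y else b


decimal_winner = compare_as_decimals("9.9", "9.11")  # numeric reading: 9.90 vs 9.11
version_winner = compare_as_versions("9.9", "9.11")  # segment reading: (9, 9) vs (9, 11)
print(decimal_winner, version_winner)
```

Read as decimals, 9.9 is the larger value; read segment by segment, 9.11 comes out ahead, which is the wrong answer the article refers to.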

In summary, domestic AI's collaboration through the CoE architecture often outperforms 4o and competes favorably with o1. This is just the beginning, and as the CoE approach proves viable, future enhancements in chains of thought, slow thinking, and collaboration modes will likely elevate domestic hybrid models' capabilities to rival o1's.

So, let them be strong; the wind blows over the mountain ridge. While OpenAI o1 has indeed removed obstacles on the path to AGI, domestic AI has not merely stood idly by but has already woven its net and gathered on the CoE route.

Must domestic AI forever follow in OpenAI's footsteps?

Certainly not. The different scenarios, industrial endowments, and technological soil in China and the US have nurtured their respective advantages.

Admittedly, OpenAI has consistently been a pioneer and leader in new directions, but it is also evident that Sora and o1 both still look like "futures": they remain difficult to scale because of product maturity issues, high costs, and challenges in enterprise deployment. For example, when AI startups use o1 to solve practical business problems, they find that token consumption is enormous and costly, which makes long-term use in existing businesses unfeasible. Some startups even forgo the latest model versions to keep costs in balance.

In this regard, o1 also presents opportunities for domestic AI to build upon its strengths, mainly manifested in:

1. The value of base models is reaffirmed. Previously, leading general-purpose large model vendors entered a period of stagnation. o1 has rekindled the market's awareness of the critical value of base models' logical reasoning capabilities for businesses, a core aspect of this AI wave that cannot be overlooked. This is good news for vendors focusing on base models, enhancing industry and societal confidence and facilitating the continued advancement of domestic general-purpose large models.

2. The advantages of technological productization are amplified. Compared to Sora's video generation and 4o's voice interaction, o1's productization path is less clear, and how to recoup costs will be a significant challenge for OpenAI. In this regard, domestic AI, which places more emphasis on large model productization and application, may find faster routes to deploy o1-like models.

Take the productization of the CoE architecture as an example: the deployed product, 360AI Search, has achieved a commercial closed loop, with commercial revenue covering the corresponding inference costs. This is because the CoE model has always prioritized reducing API and token usage costs while accelerating inference speed.

3. Acceleration of AI innovation and the intelligent economy. Domestic AI's pragmatic approach, which prioritizes ROI over AGI, was once viewed as less technologically devout than OpenAI. However, the lofty goal of AGI relies on the AI-driven transformation of various industries, which is fundamental to preventing this AI wave from turning into another bubble. Consequently, discussing ROI becomes essential in the context of industry-wide intelligence, as businesses incur costs when introducing AI. Domestic AI has taken a more solid approach, with domestic large models striving to "improve efficiency and reduce costs" this year, lowering token usage and innovation costs for small and medium-sized AI enterprises and developers.

Building on this foundation, as CoE and o1-like models evolve, they will enable AI to penetrate industries and address specific business challenges with greater value. RL+CoT further lowers the threshold for prompt engineering, ushering in a new cycle of growth for China's intelligent economy.

In summary, there are no shortcuts on the path to AGI and the intelligent era; Chinese AI must measure its progress step by step. Over the past two years, three routes have proven that Chinese AI pioneers like 360 have already set out in groups on this new technological path.

Wherever you go, you leave a footprint; wherever you work hard, you will reap the rewards. From LLM to CoE, Chinese AI will never be absent from this wave of technological change.