Teaching AI to think slowly and reflectively: this time, 360 is ahead of OpenAI

09/19 2024

Written by: Daoge

"Does AI have the ability to think?" "What kind of thinking ability should AI possess?" These are difficult questions that have been frequently discussed and tackled by the technology industry.

Since OpenAI released its new model o1-preview, this puzzle has largely been solved:

One user "interrogated" o1-preview with Mensa test questions and was stunned when it scored an IQ of 120;

After trying o1-preview, the mathematician Terence Tao found that it could correctly identify Cramér's theorem as the tool needed for a vaguely worded problem;

An astrophysics researcher, using only 6 prompts, got o1-preview to produce within an hour a running version of code that had taken him 10 months of his doctoral work.

...

In other words, o1-preview has acquired the ability to think, and can even "think twice before it acts."

Reportedly, the primary difference between o1-preview and the GPT series models is that o1-preview deliberates before answering a user's question, producing high-quality output rather than a quick but invalid response. Put differently, it replaces the earlier "fast thinking," which prioritized quick responses, with "slow thinking" that mimics human thought processes.

However, this idea and method are neither original nor unique to OpenAI. As early as the ISC.AI2024 conference at the end of July, Zhou Hongyi, the founder of 360 Group, announced a plan to "use an agent-based framework to create a slow-thinking system, thereby enhancing the slow-thinking ability of large models."

01 Great minds think alike

As mentioned earlier, o1-preview is more powerful and intelligent essentially because it trades quick-response "fast thinking" for human-like "slow thinking."

Great minds think alike. Zhou Hongyi not only proposed this concept earlier than OpenAI but also emphasized similar ideas on multiple occasions thereafter.

Commenting on the launch of o1-preview in his latest short video, Zhou Hongyi said, "Unlike previous large models, which were trained on text, o1-preview is like a player playing chess against itself: it acquires its chain-of-thought ability through reinforcement learning."

In Zhou Hongyi's view, human thinking can be divided into "fast thinking" and "slow thinking." Fast thinking is intuitive, unconscious, and quick to respond, but not very accurate. GPT-like large models absorb vast amounts of knowledge and mainly learn fast-thinking abilities, which is why they can respond instantly but often give irrelevant or nonsensical answers. "Just like humans, the probability of speaking fluently and accurately without thinking is very low."

Slow thinking, on the other hand, is deliberate, conscious, and logical, and proceeds through detailed steps. It is like writing a complex article: one must first outline the structure, then gather materials, discuss, write, refine, and revise until the final draft is ready. "o1-preview possesses the qualities of human slow thinking, repeatedly pondering and deliberating before answering questions, possibly asking itself thousands of questions before finally providing an answer."

While o1-preview has made impressive progress with the support of "slow thinking," it is still imperfect, plagued by issues such as hallucinations, slow speed, and high costs, which limit its range of applications.

In contrast, 360 recognized the importance of "slow thinking" for AI early on and had already put it into production before o1-preview launched, building on its CoE (Collaboration of Experts) technical architecture and hybrid large model, which the company describes as an industry first and fully self-developed.

Reportedly, the CoE technical architecture that 360 officially released at the end of July this year already emphasized "slow thinking," driving multiple models to collaborate and work in parallel on multi-step reasoning.

Moreover, the CoE technical architecture offers a finer division of labor, better robustness, higher efficiency and interpretability, and stronger generalization, which speeds up reasoning and reduces the cost of API calls and tokens.

This time, AI companies in China and the United States are unusually aligned in their research and development thinking, and the Chinese company started earlier.

02 Gather the Dragon Balls to Summon Shenron

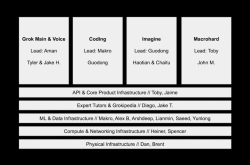

What distinguishes the CoE technical architecture from its competitors is that it does not connect to just one company's model. Instead, led by 360, it brings together a "joint fleet" of 16 mainstream domestic large model vendors, including Baidu, Tencent, Alibaba, Zhipu AI, MiniMax, and Moonshot AI.

At the same time, it connects to many smaller expert models, with parameter counts in the billions or even fewer, making the entire system more targeted, proactive, and intelligent.

This two-pronged approach lets the CoE technical architecture tailor its solution to each request and make the most of its resources: on one hand, it can "gather the Dragon Balls to summon Shenron," letting the strongest models tackle the toughest challenges; on the other hand, it can call on smaller, more specialized models to handle simple, shallow questions.

Currently, the CoE architecture serves as the underlying support and has been implemented in products such as 360AI Search and 360AI Browser.

The "In-depth Answer" mode in 360AI Search involves 7 to 15 large model calls per query. A typical query may include one intent recognition model call, one search term rewriting model call, five search calls, one webpage sorting call, one main answer generation call, and one follow-up question generation call.
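As a quick check on the arithmetic, the example breakdown above can be tallied in a few lines of Python. The stage names are illustrative labels chosen for this sketch, not identifiers from 360's system, and the counts are only the example figures quoted in the text.

```python
# Hypothetical breakdown of one "In-depth Answer" query, using the example
# counts quoted in the article. Stage names are illustrative labels only.
example_calls = {
    "intent_recognition": 1,
    "search_term_rewriting": 1,
    "search": 5,
    "webpage_sorting": 1,
    "main_answer_generation": 1,
    "follow_up_question_generation": 1,
}


def total_calls(calls: dict[str, int]) -> int:
    """Sum the number of calls across all stages of one query."""
    return sum(calls.values())


total = total_calls(example_calls)
# The article states that a query involves 7 to 15 calls.
in_stated_range = 7 <= total <= 15
print(total, in_stated_range)
```

The example adds up to 10 calls, which sits inside the stated range of 7 to 15.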

The resulting workflow consists of three steps: first, an intent classification model identifies the intent behind the user's question; second, a task routing model sorts the question into "simple tasks," "multi-step tasks," or "complex tasks" and schedules multiple models accordingly; and finally, an AI workflow is constructed so that multiple large models can work together.
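The three steps can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the keyword-based classifier, the intent-to-task mapping, the model names in `MODEL_POOL`, and the `fake_model` stub are hypothetical stand-ins, since the article does not describe 360's actual models or interfaces.

```python
from dataclasses import dataclass
from typing import Callable

# All names here are hypothetical; they only illustrate the three-step flow.
INTENT_TO_TASK = {
    "lookup": "simple",
    "comparison": "multi-step",
    "reasoning": "complex",
}
MODEL_POOL = {
    "simple": ["small-expert"],
    "multi-step": ["rewriter", "general-llm"],
    "complex": ["planner", "general-llm", "verifier"],
}


@dataclass
class Plan:
    intent: str
    task_type: str       # "simple", "multi-step", or "complex"
    models: list[str]    # models to call, in order


def classify_intent(question: str) -> str:
    """Step 1: toy keyword-based stand-in for an intent classifier."""
    q = question.lower()
    if any(word in q for word in ("prove", "derive", "why")):
        return "reasoning"
    if any(word in q for word in ("compare", "versus")):
        return "comparison"
    return "lookup"


def route_task(intent: str) -> Plan:
    """Step 2: map the intent to a task type and pick models for it."""
    task_type = INTENT_TO_TASK[intent]
    return Plan(intent, task_type, MODEL_POOL[task_type])


def run_workflow(question: str, plan: Plan,
                 call_model: Callable[[str, str], str]) -> str:
    """Step 3: chain the selected models, feeding each output to the next."""
    context = question
    for name in plan.models:
        context = call_model(name, context)
    return context


def fake_model(name: str, text: str) -> str:
    """Stub that records which model handled the text."""
    return f"{name}({text})"


def answer(question: str) -> tuple[Plan, str]:
    plan = route_task(classify_intent(question))
    return plan, run_workflow(question, plan, fake_model)


plan, output = answer("Why is the sky blue?")
print(plan.task_type, output)
```

In this sketch a harder question passes through more models, while a simple lookup is handled by a single small expert model, which mirrors the division of labor described above.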

In this way, 360AI Search not only accounts for the dynamic and complex nature of tasks but also adjusts its processing strategy and resource allocation in real time to suit each task, making it more flexible and efficient on complex work.

03 Stronger teamwork, greater combat effectiveness

Besides "multi-model collaboration," another highlight of 360AI Browser is China's first large model competition platform, Model Arena.

Model Arena can invoke 54 large models from 16 mainstream domestic large model vendors and offers features such as "team competition," "anonymous competition," and "random matchups," helping users find the best answer in the shortest time.

The "team competition" feature stands out: users can freely pick a team of three large models to compete against one or two others.

The benefits of this approach are obvious. Multiple large models compete head to head on the same question at the same time and can be compared quantitatively on dimensions such as speed, time consumption, and efficiency. After this side-by-side comparison, users can evaluate the results more intuitively and select the best solution.

In fact, while many domestic large models can match or even outperform GPT-4 on individual metrics, the gap becomes apparent when considering overall strength.

The idea behind the CoE technical architecture is to change this "lone wolf" approach and build a "team of elites" or "group battle" strategy for large models, drawing on their respective strengths to form a "super brain" to compete with o1-preview and GPT-4o.

At the same time, in an atmosphere of "learning from each other and striving for excellence," industry standards begin to emerge, improving compatibility between different models and raising the quality of the user experience.

As a result of this integrated innovation in the underlying architecture, 360's hybrid large model achieved a comprehensive score of 80.49 out of 100 across 12 specific evaluations, including translation, writing, subject exams, and code generation, significantly outperforming GPT-4o's score of 69.22. The lead was even wider in subcategories with more distinctly Chinese characteristics, such as "poetry appreciation" and "Ruozhiba" (trick questions in the style of the Baidu Tieba forum of that name).

Even when faced with o1-preview, 360's hybrid large model demonstrated its ability to compete without specific optimization.

In a test of 21 complex logical reasoning questions, its performance was comparable to that of OpenAI's o1-preview: it surpassed GPT-4o overall and on some questions even beat o1-preview.

The entire CoE process embodies the "slow thinking" of human thought, covering critical steps such as analysis, understanding, and judgment, and it is becoming increasingly "human-like."

As Zhou Hongyi put it, "The model knows when it doesn't understand something and then finds ways to 'query' or 'verify' the answer instead of relying solely on its stored knowledge."

Closing Remarks

In the AI race, "slow thinking" is undoubtedly one of the most significant breakthroughs in the development of artificial intelligence to date.

In the long run, "slow thinking" will matter even more in deciding who wins that race. "The competition will no longer be about how quickly an answer can be provided but about how complete and accurate that answer is. This will also change the landscape of AI services, and AI will ultimately have to reference the structure of the human brain to build its working model," said Zhou Hongyi.

With its forward-thinking technical insights and dedicated efforts, 360 has found an AI development path with unique Chinese characteristics. This path offers new ideas for China's AI progress and makes it possible for Chinese large model vendors to rival or even surpass OpenAI.