From Google to Cambricon: Two Pathways to Rival NVIDIA

11/17 2025

On October 29, NVIDIA's share price soared, making it the first company in the world to surpass a market capitalization of $5 trillion. Less than two and a half years earlier, on May 30, 2023, it had only just joined the exclusive trillion-dollar club.

NVIDIA's meteoric rise reaffirms the value of being a 'shovel seller' in a gold rush. While companies driving the current AI boom with large models have yet to turn a profit, NVIDIA has amassed over $100 billion in revenue in just two years.

Within the AI ecosystem, NVIDIA is the only company reaping substantial profits, a sign that the AI industry chain is out of balance. Yet companies have little choice but to depend on GPUs, which excel at massively parallel computation and are therefore well suited to large-model training and inference. With few competitors in the GPU industry, NVIDIA holds a de facto monopoly in AI acceleration chips, with a global market share exceeding 80% in 2024.

This context raises pertinent questions: How long can NVIDIA sustain its monopoly? When will a formidable challenger emerge to rival NVIDIA?

Currently, apart from its long-standing rival AMD, NVIDIA faces two types of challengers. One group consists of international giants, exemplified by Google, that are striving to break free of NVIDIA's GPU dominance. The other comprises Chinese firms, including Huawei, Cambricon, Moore Threads, Hygon Information, and Biren Technology, which U.S. restrictions on AI chip exports have compelled to develop their own AI acceleration chips.

These companies refuse to be 'strangled' by NVIDIA and are investing heavily in their own R&D or backing competing suppliers. AMD and Moore Threads follow the GPU route and challenge NVIDIA head-on. Google, Huawei, and others have chosen the ASIC route, which is more efficient than GPUs for dedicated AI workloads and holds significant potential despite numerous hurdles.

From both technical and commercial standpoints, it is undeniable that NVIDIA will find it challenging to maintain its monopoly advantage indefinitely. The emergence of challengers capable of rivaling NVIDIA in the industry is inevitable and merely a matter of time.

/ 01 /

International Giants and Domestic Contenders: Challenging NVIDIA

Currently, these challengers still lag significantly behind NVIDIA, but their potential should not be underestimated.

Among them, AMD is NVIDIA's longstanding rival. According to AMD's own figures, its latest mass-produced chip, the MI355X, outperforms NVIDIA's B200 in inference, trailing only NVIDIA's current flagship, the B300.

Overall, however, although the MI355X is built on TSMC's 3nm process while the B200 uses a 4nm-class process, it does not significantly outperform the B200. AMD also lags behind NVIDIA in its software ecosystem, interconnect, data transfer, and other areas.

Besides AMD, NVIDIA faces two categories of challengers, one international and one domestic. The first includes international giants such as Google.

Google, a veteran of the AI field, began developing TPUs (Tensor Processing Units) as early as 2013. These ASICs are designed to accelerate machine learning and deep learning workloads. The TPU was first unveiled publicly in May 2016, when Google disclosed that it had powered AlphaGo, and it has since evolved through seven generations.

The seventh-generation TPU, Ironwood, unveiled in April this year, rivals NVIDIA's B200 in per-chip FP8 computing power, memory capacity, and memory bandwidth. Its scalability, with pods of up to 9,216 chips, is considered the TPU's greatest strength. Anthropic, the developer of the Claude models, is currently one of Google's largest TPU customers.

However, Google does not currently sell TPUs as standalone products; it only rents out their computing power through Google Cloud. Its software ecosystem is also less mature, and its customer base is far smaller than NVIDIA's.

Beyond Google, nearly all large AI companies are accelerating work on their own AI chips. OpenAI and xAI, for instance, are buying NVIDIA chips at scale while also developing computing chips of their own. Meta has reportedly been working with Broadcom on custom chips.

The domestic landscape is different. Since 2022, the United States has restricted AI chip exports to China, and the restrictions have repeatedly escalated. NVIDIA's H20 can currently be sold in China, but Chinese regulators have raised concerns that it carries 'backdoor' security risks (which NVIDIA denies), leaving its future sales highly uncertain. NVIDIA's more powerful H100 and higher-end products remain barred from the Chinese market.

In this context, domestic companies are accelerating efforts to substitute for NVIDIA's computing power along several technical routes. Moore Threads, for example, like NVIDIA, focuses on full-function GPUs that support AI acceleration, graphics rendering, video decoding, physical simulation, and other functions.

Moore Threads is currently the only domestic company mass-producing full-function GPUs (capable of both graphics rendering and AI acceleration). In its prospectus, it stated that its latest S5000 acceleration card delivers 32 TFLOPS at FP32 precision, about 73% of the 44 TFLOPS of NVIDIA's H20.

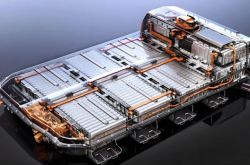

Hygon Information, Biren Technology, and MetaX specialize in GPGPUs, which omit graphics functions and focus on AI acceleration. Huawei HiSilicon and Cambricon take a non-GPU route, following the same ASIC path as Google: they design custom AI acceleration chips and market them as NPUs (neural processing units).

Huawei's currently mass-produced Ascend 910C reportedly achieves 60% of the inference performance of NVIDIA's H100. In August this year, Huawei Cloud CEO Zhang Ping'an stated that the efficiency of Huawei Cloud's computing service had reached three times that of NVIDIA's H20.

Cambricon is a pioneer of the domestic ASIC route. Its current Siyuan 590 (MLU590) chip is widely reported to reach 80% of the performance of NVIDIA's A100.

In 2024, NVIDIA ranked first in China's AI acceleration chip market with a 54.4% share, followed by Huawei HiSilicon at 21.4% and AMD at 15.3%. Together the three held 91.1%, leaving less than 9% for all other vendors.

By market share, Huawei HiSilicon leads among domestic AI acceleration chip makers, while other domestic companies have yet to achieve breakthroughs. Against the backdrop of great-power competition, China's domestic computing industry is developing rapidly and has become another important force seeking to replace NVIDIA.

Overall, domestic and international AI computing companies have formed two main camps to counterbalance NVIDIA. Driven by the urgent needs of the industry, the long-term battle to challenge NVIDIA has already begun.

/ 02 /

ASIC Technology: Potentially the Key to Counterbalancing NVIDIA

Currently, NVIDIA's competitors still lag significantly behind. However, from both technical and commercial perspectives, NVIDIA's monopoly status is unlikely to be sustained indefinitely.

On the technical side, the continued development of large models and the highly anticipated world models both require processing vast amounts of data, so demand for computing power keeps growing.

Looking ahead, unless a disruptive technology emerges, AI models will need to process data of ever greater scale and variety on the way to true artificial intelligence, so the AI industry's demand for computing power is unlikely to diminish.

Given this, replacing general-purpose GPUs with custom AI computing chips (i.e., ASICs) is more consistent with the iteration logic of the semiconductor industry: efficiency first.

The 'efficiency first' logic has a long history in the semiconductor industry. For a given fixed workload, the energy efficiency of current chip types generally ranks ASIC > FPGA (Field-Programmable Gate Array) > GPU > CPU, and in pursuit of efficiency, chips for most industrial applications have evolved along this sequence.

As is well known, people initially used CPUs for image rendering. Later, to improve efficiency, GPUs designed specifically for processing pixels emerged, delivering higher efficiency through greater specialization.

The same pattern played out earlier in Bitcoin mining. Initially, people could mine bitcoin on the CPUs of home computers. As mining difficulty rose, they turned to more efficient GPUs.

Next, to improve efficiency further, miners adopted FPGA-based rigs. Finally, because Bitcoin's mining algorithm (double SHA-256) is fixed and never changes, ASIC chips became the ultimate choice.
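To see why a fixed algorithm favors ASICs, it helps to look at what a miner actually computes. Below is a minimal Python sketch of Bitcoin-style proof of work: append a 4-byte nonce to a 76-byte header prefix, hash the 80-byte header with SHA-256 twice, and search for a nonce whose hash, read as a little-endian integer, falls below a target. The all-zero header prefix and the very easy target are hypothetical values chosen so the search finishes quickly; real headers carry a version, previous-block hash, Merkle root, timestamp, and difficulty bits, and real targets are vastly smaller.

```python
import hashlib
import struct


def double_sha256(data: bytes) -> bytes:
    """Bitcoin's proof-of-work hash: SHA-256 applied twice."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def mine(header_prefix: bytes, target: int, max_nonce: int = 2**32):
    """Search for a nonce whose header hash is below `target`.

    `header_prefix` stands in for the first 76 bytes of a block header;
    the 4-byte little-endian nonce completes the 80-byte header.
    Returns (nonce, hash_hex), or None if no nonce in range works.
    """
    if len(header_prefix) != 76:
        raise ValueError("header prefix must be 76 bytes")
    for nonce in range(max_nonce):
        digest = double_sha256(header_prefix + struct.pack("<I", nonce))
        # The hash is compared as a little-endian 256-bit integer
        if int.from_bytes(digest, "little") < target:
            # Block explorers conventionally display the hash byte-reversed
            return nonce, digest[::-1].hex()
    return None


if __name__ == "__main__":
    # Hypothetical inputs: a zeroed header prefix and an artificially easy
    # target (roughly 1 in 4,096 hashes qualifies).
    print(mine(bytes(76), target=1 << 244))
```

The inner loop never changes: it is the same two SHA-256 computations on 80 bytes, repeated billions of times. That is exactly the kind of workload that can be hard-wired into silicon, which is why mining hardware ended at ASICs.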

GPUs can run large amounts of parallel computation and offer greater versatility and flexibility. AI computing tasks, by contrast, are relatively uniform, dominated by a small set of operations such as large matrix multiplications. ASIC chips fully customized for AI training and inference therefore have a higher ceiling for computing efficiency and strong potential to replace GPUs. This is one of the reasons why companies like Google and Huawei have chosen the ASIC route.
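Much of what an AI accelerator does is matrix multiplication, and Google has described the matrix unit of its first TPU as a 256×256 systolic array: a grid of identical multiply-accumulate cells through which operands flow in lockstep. The Python sketch below is a toy, output-stationary model of that idea. Cell (i, j) keeps a running sum for C[i][j], and because inputs enter the grid skewed by one cycle per row and column, the cell performs its k-th multiply-accumulate at cycle t = i + j + k. It models only this timing schedule, not the registers that pass data between neighboring cells, and the function name is hypothetical.

```python
def systolic_matmul(A, B):
    """Toy output-stationary systolic array computing C = A x B.

    Returns (C, cycles). Cell (i, j) accumulates C[i][j]; with inputs skewed
    by one cycle per row/column, it sees A[i][k] and B[k][j] at cycle i + j + k.
    """
    n, k_dim, m = len(A), len(B), len(B[0])
    if any(len(row) != k_dim for row in A):
        raise ValueError("inner dimensions of A and B must match")
    C = [[0] * m for _ in range(n)]
    # The last MAC happens in cell (n-1, m-1) at cycle (n-1)+(m-1)+(k_dim-1)
    cycles = n + m + k_dim - 2
    for t in range(cycles):
        # In hardware, every cell acts in parallel during cycle t;
        # here we simply visit them one by one.
        for i in range(n):
            for j in range(m):
                k = t - i - j
                if 0 <= k < k_dim:
                    C[i][j] += A[i][k] * B[k][j]
    return C, cycles


if __name__ == "__main__":
    C, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(C, cycles)  # [[19, 22], [43, 50]] 4
```

Every cell does the same simple operation each cycle and talks only to its neighbors, so a chip can pack very many such cells with little control logic. That trade of flexibility for density is, roughly, where a dedicated ASIC's efficiency advantage over a general-purpose GPU comes from.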

Currently, the most prominent ASIC chip in the world is Google's TPU. On paper, its specifications already rival NVIDIA's B200, one tier below NVIDIA's flagship, and the market regards it as NVIDIA's strongest rival.

In late July last year, Apple published a paper revealing that it had used Google TPUs to train its AI models. NVIDIA's share price subsequently fell by more than 7%.

In China, the NPUs from Huawei HiSilicon and Cambricon have also shown some ability to substitute for NVIDIA's GPUs in specific areas.

The commercial logic is simpler. If, as expected, the AI industry's massive demand for computing power persists over the long run, AI giants will keep developing their own AI chips or backing new AI chip suppliers, for reasons of both cost and supply chain security.

NVIDIA, for its part, is continually adjusting its strategy to counter the risk of substitution. On the technical side, it is moving into ASICs: there have been reports that NVIDIA set up an ASIC department and planned to recruit more than 1,000 chip designers, software developers, and AI researchers.

Over the course of its history, NVIDIA also drew inspiration from Google's TPU when it introduced Tensor Cores, dedicated units for matrix operations. Since their debut in the Volta architecture, Tensor Cores have become more capable with each generation, through Ampere and Hopper to the latest Blackwell, delivering ever greater computing power.
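NVIDIA's Volta architecture whitepaper describes each Tensor Core as performing a 4×4 matrix multiply-accumulate, D = A × B + C, with FP16 inputs and FP16 or FP32 accumulation; larger matrix products are built from many such tile operations. The Python sketch below mimics that idea in software under stated assumptions: a fixed 4×4 tile, inputs rounded to FP16 and the running sum rounded to FP32 using the standard `struct` module's `'e'` and `'f'` formats. It is a numerical illustration, not a bit-exact model of any GPU, later architectures use different tile shapes and precisions, and all function names are hypothetical.

```python
import struct

TILE = 4  # Volta-style 4x4 tiles (assumption; newer GPUs use other shapes)


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def mma_tile(a, b, c):
    """One tile operation D = A x B + C: FP16 inputs, FP32 accumulation."""
    d = [[0.0] * TILE for _ in range(TILE)]
    for i in range(TILE):
        for j in range(TILE):
            acc = c[i][j]
            for k in range(TILE):
                # A product of two FP16 values is exact in double precision;
                # rounding the running sum approximates an FP32 accumulator.
                acc = to_fp32(acc + to_fp16(a[i][k]) * to_fp16(b[k][j]))
            d[i][j] = acc
    return d


def block(M, r, c):
    """Extract the TILE x TILE block of M whose top-left corner is (r, c)."""
    return [row[c:c + TILE] for row in M[r:r + TILE]]


def tiled_matmul(A, B):
    """C = A x B assembled entirely from TILE x TILE multiply-accumulates."""
    n, k_dim, m = len(A), len(B), len(B[0])
    if any(len(row) != k_dim for row in A):
        raise ValueError("inner dimensions of A and B must match")
    if n % TILE or k_dim % TILE or m % TILE:
        raise ValueError("dimensions must be multiples of TILE")
    C = [[0.0] * m for _ in range(n)]
    for i in range(0, n, TILE):
        for j in range(0, m, TILE):
            acc = [[0.0] * TILE for _ in range(TILE)]
            # Walk along the shared dimension, accumulating tile products
            for k in range(0, k_dim, TILE):
                acc = mma_tile(block(A, i, k), block(B, k, j), acc)
            for di in range(TILE):
                C[i + di][j:j + TILE] = acc[di]
    return C
```

In this model the "hardware" unit only ever executes one fixed operation on small tiles, and software decomposes large matrix products into those steps (on NVIDIA GPUs, libraries such as cuBLAS do this). It is a GPU-side instance of the same specialization logic that drives ASIC design.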

Commercially, NVIDIA has invested heavily in AI in recent years, backing not only giants such as OpenAI and xAI but also promising small and medium-sized companies. Through this broad portfolio, it aims to lock in downstream customers, bet on potential technical routes and directions, and strengthen its ecosystem against possible risks.

Whichever way one looks at it, however, the AI industry does not want a monopolistic NVIDIA, and the emergence of challengers capable of rivaling it may only be a matter of time.

Article by Dong Wuying