What is the true value of DingTalk's open AI ecosystem strategy? Many people haven't understood it yet

07/02 2024

Source: @Chief Digital Officer

Hello everyone, this is Chief Digital Officer, the leading digital media outlet followed by digital leaders.

Follow us, and we'll bring you a business case every day.

Today, we're going to talk about: What is the strategic significance of DingTalk's open AI large model ecosystem?

"Whoever wins Apple first, wins the victory."

Jim Fan, a senior research scientist at NVIDIA, commented on Apple's collaboration with OpenAI on social media.

Jim Fan's comment came after Apple announced at its most recent developer conference that a ChatGPT integration powered by GPT-4 would arrive on Apple's systems later this year.

Looking back at Apple's history, its products reached millions of households not only because of their distinctive design and the company's relentless pursuit of hardware quality, but also because Apple pioneered the "App Store". The App Store gave users a complete software ecosystem and made Apple an indispensable "digital hub" for them.

A similar business narrative is also playing out in the field of "AI large models + toB".

DingTalk, the largest intelligent collaborative office and application development platform in China with 700 million users, announced that it is opening up to all large model vendors in order to build China's most open AI ecosystem.

This was the latest strategic decision announced by DingTalk President Ye Jun at the "Make 2024 DingTalk Ecosystem Conference" on June 26th.

It is reported that, in addition to Alibaba's own Tongyi large model, which was integrated earlier, DingTalk has brought in six of the top AI large model vendors on the Chinese market in one fell swoop: MiniMax, Dark Side of the Moon, Zhipu AI, Orion Star, Zero One Universe, and BaiChuan Intelligence.

When using certain DingTalk functions, users can freely choose the model that best fits their own requirements.

On-site, Ye Jun demonstrated the power of DingTalk with AI capabilities:

In a DingTalk poker group, a user simply tells the AI assistant where and when to play cards. The AI assistant then automatically drafts a message and reminds everyone, creates a calendar entry, and can even cooperate with other AI assistants in the group to complete the tasks the user assigns.

Ye Jun said in his speech, "Model openness is a further step in DingTalk's open ecosystem strategy. As the industry moves from model innovation to application innovation, exploring large model application scenarios is DingTalk's responsibility. DingTalk has a large number of enterprise customers, with the advantages of data and scenarios overlapping, and both sides need each other. On the other hand, large enterprise customers on DingTalk also have demands for model openness."

Obviously, the capabilities demonstrated by today's AI large models have been recognized and accepted by various industries. How to use large models to change and reshape their own business scenarios is a common challenge faced by all digital transformation enterprises.

And now, the large model vendors who "make hammers" and DingTalk, which is deeply rooted in enterprise business scenarios and processes like a "nail," have come together for the first time.

It's like they've been pulled into the same poker group to solve customer problems together.

1. Large models struggle to land in toB

After two years of development, putting large models into practice has not been a smooth path.

During the roundtable discussion session in the afternoon of the DingTalk Ecosystem Conference, Luo Yihang, the founder of Silicon Valley Insider, who served as the host, posed two questions to the guests:

ToB or toC: which should large models choose for commercialization?

After burning money, how can large models make money?

Essentially, both are questions about large model commercialization, and each guest had their own view. But all of the guests strongly agreed on one thing: at this stage, toB is the best path for large models to achieve rapid commercialization.

But toB means needing to land on the enterprise side.

From a global perspective, overseas AI large model products have indeed blazed their own commercialization paths on the B side.

For example, OpenAI generates revenue by selling API access to its large models (this service is currently closed in China); Microsoft provides the 365 Copilot service for office scenarios through a subscription model; and Salesforce and SAP have also integrated AI technology into their own SaaS products.

On the surface, enterprises have a large number of ready-made business scenarios waiting to be optimized by large models, which creates essential demand for large model toB offerings. However, whereas overseas enterprises can access or procure standardized products directly, China's enterprise service market is made up of many fragmented and customized business scenarios, with extremely high barriers of industry know-how.

But general-purpose large models have neither industry knowledge nor knowledge of business scenarios.

That is because, whether it is the world's top vendor OpenAI or domestic AI large model upstarts like MiniMax, Dark Side of the Moon, Zhipu AI, Orion Star, Zero One Universe, and BaiChuan Intelligence, their ultimate goal is to achieve AGI through scaling laws.

This means that, from the start, these models were not designed to serve a specific industry and are not familiar with specific business scenarios or processes, which makes it difficult for them to land smoothly on the enterprise side.

Fu Sheng, the founder of Orion Star, said directly in his speech that although the generalization capabilities of large models have lowered the threshold for understanding many businesses, his company still had to do a great deal of business research before entering the agricultural and sideline products processing scenario.

Zhang Fan, COO of Zhipu AI, also expressed a similar view.

In his opinion, if only model capabilities are considered, industry know-how is not what hinders the implementation of large models on the industrial side. But once a model needs to be integrated into business scenarios, the processes, data, and business knowledge it requires increase significantly.

Liu Jinzhu, CEO of YHYD, also said in an interview that when facing industrial end-users, AI large models need to face a series of issues including process integration, data processing, permission management, and security governance, and each issue will hinder the implementation of large models.

In other words, if AI large model vendors are not familiar with specific industries and business scenarios, and bring only data scale, reasoning capabilities, and generalization capabilities, they will fall into the dilemma of "holding a hammer and looking for nails."

This is also the issue users complain about most when it comes to the commercialization of AI large models.

Looking at China's toB market as a whole, DingTalk undoubtedly has the most diverse scenarios, because it is inherently rooted in enterprise business scenarios.

As of the end of December 2023, DingTalk had 700 million users, 25 million enterprise organizations, and 120,000 software-paying enterprises.

At the AI level, DingTalk has already rebuilt its own products with AI, becoming the first nationally popular work application to fully open up its AI capabilities, which 700,000 enterprises now use.

"Every day, there are tens of millions or even hundreds of millions of expense approval processes running on DingTalk. In the past, financial personnel had to use online banking to make payments after the approval process was passed. Now, DingTalk has fully integrated with major domestic banks, allowing payments to be completed directly through DingTalk," Ye Jun said in his speech, describing a typical financial business scenario on DingTalk.

2. The two-way journey of hammer and nail

"I don't believe in Scaling law. The technical curve of large models has clearly slowed down, which means that large models will explode in application scenarios, and there will definitely be a flourishing future."

This is a recent assessment of AGI by Zhu Xiaohu, the managing partner of GSR Ventures, and his view is quite popular in the industry.

Zhu Xiaohu's view seems to run contrary to that of the large model vendors. Yet over the past year, as the large model industry has gone through explosive growth and rapid iteration and model capabilities have continued to improve, the view that "large-scale applications in real scenarios are the only way to verify the value of large models and lead to AGI" has become a consensus.

And DingTalk, as the largest intelligent collaborative office and application development platform in China, has a huge number of users and application scenarios across various industries.

Looking back at DingTalk's AI development journey: in April last year, DingTalk integrated the Tongyi large model and began rebuilding its products with AI, and it has so far AI-enabled more than 80 functions across more than 20 product lines. In August last year, DingTalk opened up AI PaaS to help ecosystem partners reshape their products with AI.

Now, DingTalk is opening its products and scenarios to all large model vendors, building the most open AI ecosystem, and exploring the path of large model applications together with partners.

According to Ye Jun, DingTalk and its large model ecosystem partners will cooperate and explore in three modes.

First, in the general office collaboration scenarios where DingTalk is strongest, the AI capabilities of DingTalk's IM, documents, audio-video, and other products are mainly supported by Alibaba's own Tongyi large model.

On this basis, DingTalk will combine the characteristics of other large models to explore the application of different model capabilities in products and scenarios. For example, DingTalk is working with Dark Side of the Moon to explore educational application scenarios based on the long-text understanding and output capabilities of large models.

Second, in response to the increasing demand for business digitization and process personalization, DingTalk has opened up the AI Assistant (AI Agent) development platform to large model ecosystem partners. When developers create AI assistants on DingTalk, in addition to the default Tongyi large model, they can also choose large models from different vendors to meet the specific needs of their enterprise business scenarios.

Third, some industry scenarios are highly customized, difficult to deliver, and require extensive integration with the systems in an enterprise's production environment. For these, DingTalk will work with large model vendors to customize intelligent solutions for customers, providing services such as model training and tuning, AI solution development, and custom AI application development, as well as private deployment of models.
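To make the second mode more concrete, here is a minimal sketch of what "default to Tongyi, but let the developer pick another vendor's model" could look like in code. It is only an illustration: the `Assistant` class, the `REGISTRY` dictionary, and the `vendor_a` / `vendor_b` keys are hypothetical names invented for this example and are not DingTalk's actual API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

# Hypothetical: a "model" is any function that maps a prompt to a reply.
ModelFn = Callable[[str], str]

DEFAULT_MODEL = "tongyi"  # the article describes Tongyi as the default model


def _stub(name: str) -> ModelFn:
    """Return a stand-in model that only tags the prompt with a vendor name."""
    def call(prompt: str) -> str:
        return f"[{name}] {prompt}"
    return call


# Illustrative registry keys; these are not real DingTalk identifiers.
REGISTRY: Dict[str, ModelFn] = {
    name: _stub(name) for name in ("tongyi", "vendor_a", "vendor_b")
}


@dataclass
class Assistant:
    name: str
    model: Optional[str] = None  # None means "use the platform default"

    def ask(self, prompt: str) -> str:
        key = self.model or DEFAULT_MODEL
        if key not in REGISTRY:
            raise KeyError(f"unknown model: {key}")
        return REGISTRY[key](prompt)
```

In this sketch, `Assistant("hr-helper").ask("hi")` is routed to the default model, while `Assistant("tutor", model="vendor_a").ask("hi")` is routed to the vendor the developer chose. The assistant code itself does not change when the model does.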

In addition to the above three scenarios, DingTalk is also embracing AI together with many ISVs in its own software ecosystem to jointly explore enterprise business scenarios.

On-site, Ye Jun released the functional upgrade of DingTalk version 7.6. Its "Integrated Suite" product is jointly developed by DingTalk and ISV vendors, with each side contributing its core capability modules and embedding them together to provide better services for enterprise users.

For example, SaaS After-sales Treasure, a customer service application, adopts the API inside mode to embed DingTalk AI Assistant capabilities into its own application, creating an "AI Customer Service Assistant" to provide functions and services such as natural language question answering and data analysis for customers.

According to Ye Jun, in the past year, DingTalk has jointly launched five categories of management suites with 22 ecosystem partners, including intelligent HR, intelligent contracts, intelligent finance, intelligent travel, and intelligent marketing.

3. The key strategy of the "Seven Dragon Balls" of large models

It's easy to see that large models need DingTalk to enter business scenarios, and that enterprise users in turn expect more AI capability from DingTalk.

Therefore, the cooperation between AI large model vendors and DingTalk is undoubtedly a two-way journey of hammer and nail.

What is harder for outsiders to see is that, behind this two-way journey, DingTalk has actually taken a very big step.

Last year, DingTalk released an extremely important strategic platform - AI PaaS.

This is an intelligent foundation open to ecosystem partners and customers, able to "connect the dots" between large models and the real needs of users across various industries.

DingTalk released this strategic platform after proposing its "PaaS First" strategy in 2022. From a technical perspective, AI PaaS is also what now allows DingTalk to gather the "Seven Dragon Balls" of AI large models.

From a cloud perspective, the role of the PaaS layer is that it abstracts away the details of the underlying hardware and operating system, allowing users to focus on their business logic without managing and controlling cloud infrastructure, thereby better addressing actual business needs.

And the value of AI PaaS lies in this as well. It allows enterprise users to call models based on AI PaaS without needing to understand the development and training of the underlying large models, allowing users to focus their energy on their own business scenarios.

With AI PaaS, enterprise users can dynamically load business data into the large model's scheduling engine, rather than injecting it into the base model through training. This reduces the cost of using large models and lets users benefit in real time from the intelligence, reliability, and security improvements that come with each iteration of the base model.
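The difference between loading business data dynamically and training it into the base model can be sketched in a few lines. This is an illustrative sketch only, not how DingTalk's scheduling engine is implemented: the function names, the keyword-matching rule, and the sample records below are all made up for the example.

```python
from typing import Callable, Dict, List

# Hypothetical: a "model" is any function that maps a prompt to a reply.
ModelFn = Callable[[str], str]

# Made-up business records, keyed by topic, purely for illustration.
RECORDS: Dict[str, str] = {
    "expense": "Expense claims above 5,000 yuan need director approval.",
    "leave": "Annual leave requests go to the team lead.",
}


def retrieve(records: Dict[str, str], question: str, limit: int = 2) -> List[str]:
    """Pick records whose key appears in the question (a deliberately naive match)."""
    q = question.lower()
    hits = [text for key, text in records.items() if key.lower() in q]
    return hits[:limit]


def build_prompt(records: Dict[str, str], question: str) -> str:
    """Attach the relevant business data to the request itself."""
    lines = ["Context:"]
    lines += [f"- {item}" for item in retrieve(records, question)]
    lines.append("Question: " + question)
    return "\n".join(lines)


def answer(model: ModelFn, records: Dict[str, str], question: str) -> str:
    """The base model stays untouched; only the prompt carries enterprise data."""
    return model(build_prompt(records, question))


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call; it simply returns the prompt it received."""
    return prompt
```

Because the enterprise data travels with each request, `echo_model` could be swapped for any vendor's model, or for a newer version of the same model, without retraining anything. That is the cost and upgrade argument the paragraph above makes.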

When DingTalk released the AI PaaS platform last year, Ye Jun revealed that its model training platform can give each enterprise a secure, controllable, dedicated platform for training industry-specific models. The platform can connect to different large models and, in combination with DingTalk's "Alchemy Furnace Engineering" application, solve the problem of integrating proprietary enterprise data with large models.

After a year, the strategic value of DingTalk AI PaaS has become even more prominent.

In the view of "Chief Digital Officer," AI PaaS is not only a key foundation for large model technical support but also the foundation for DingTalk's AI large model ecosystem construction.

This is an open strategy with enormous room for imagination. It not only satisfies the diverse needs of DingTalk's different enterprise users but also allows more AI large model vendors to connect to DingTalk, further building the AI large model ecosystem and creating a positive cycle.

4. The ecosystem is the only deciding factor

Supported by AI PaaS and integrating many AI large models to jointly serve enterprise users, DingTalk offers a useful reference for ecosystem construction in China's toB market.

Based on "Chief Digital Officer's" long-term coverage of DingTalk, since first proposing the "PaaS First, Partner First" ecosystem strategy in March 2022, DingTalk has been building an AI ecosystem around its own business scenario capabilities.

Now, ecosystem openness has become one of DingTalk's most important core strategies.

In terms of numbers, DingTalk currently has over 5,600 ecosystem partners, of which over 100 are AI ecosystem partners.

In addition to AI large model partners, these include partners in different fields such as AI Agent products, AI solutions, and AI plugins, and DingTalk AI is called over 10 million times daily.

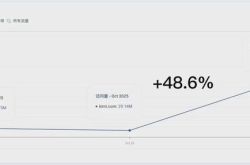

In January this year, DingTalk released AI Assistant. As of the end of May, the total number of AI Assistants created on DingTalk was about 500,000; in April this year, DingTalk officially launched the AI Assistant Market (AI Agent Store), covering categories such as enterprise services, industry applications, efficiency tools, tax and legal affairs, education and learning, and lifestyle entertainment. In just over a month, the number of AI Assistants listed has exceeded 700.

So many ecosystem partners choose DingTalk because they have genuinely made money on it.

Over the past year, the suite products that deeply integrate DingTalk with its ecosystem partners have developed rapidly. The suites build partners' functions into DingTalk's own products, providing customers with a unified and seamless user experience.

As of the end of May, DingTalk's suites involved a total of 22 ecosystem partners and had generated nearly 100 million yuan in revenue over the past year.

Among them, the "DingTalk Customer Management" suite, built in collaboration with Tanji, generated over 10 million yuan in revenue. A total of 11 partner suite products, including those from Moka, eQianbao, Yonyou Changjietong, Lanling, Polaris, and Honghuan, each generated more than one million yuan in revenue.

DingTalk has brought tangible benefits to its ecosystem partners, while also improving its software and service ecosystem.

More importantly, the prosperity of the ecosystem not only provides DingTalk with more opportunities to become an operating system level entry point for enterprise digital transformation, but also builds DingTalk's own business moat.

Just as Apple and Microsoft rely on their software ecosystems to make themselves unassailable, DingTalk is now following the same path in the digital field of Chinese enterprises.

I believe that other collaborative office or AI PaaS platform products in China will soon follow suit and accelerate the construction of their own AI ecosystems. This may become a key variable in the Chinese toB market in the future, and a deciding factor in the success of AI+toB.