If Shannon knew that Tencent had done this...

07/04 2024

In June, the AVS3P10 audio coding standard, which Tencent proposed and helped develop and maintain, was finalized.

What is startling is that industry insiders believe that, in a sense, this standard has broken through the Shannon limit under traditional conditions, thus proving that AI codecs can significantly improve coding efficiency over traditional codecs. It marks the prelude to AI changing the audio and video codec industry.

The introduction of the AVS3P10 standard means that each of us has the opportunity to enjoy a better communication experience in this fully digital era. It also signifies that China's AVS standard has moved a step ahead in the contest with international standards such as MPEG over who sets global audio and video standards.

What is worth pondering is how Tencent, remembered as a "product-oriented" company, came to enter underlying technology research and development, and what advantages and methodologies enabled it to produce world-class research results so quickly. Successful internet products drove the integration of technologies such as communications and artificial intelligence until that integration produced a qualitative change. What lessons does this hold for an internet industry that is generally more adept at creating products and applications?

— Introduction

01

Simple words, significant matter

Recently, Tencent issued a press release with simple content: the new-generation real-time voice coding industry standard AVS3P10 is about to be officially released.

This standard was proposed, promoted, and maintained by Tencent, taking Tencent's first neural network speech codec Penguins as the prototype. The key sentence is: "Penguins tightly integrates AI with traditional technology, making a large number of systematic innovations from algorithm research, engineering, and productization levels, breaking the performance limit of traditional Shannon's law."

Reading this, I couldn't sit still; I think any reader who has studied telecommunications engineering or informatics, or who has a basic understanding of the history of information science, would be unable to sit still either.

Why? Because Shannon's law holds a crucial position in this industry, or rather, in this era. It is the yyds (Chinese internet slang meaning roughly "eternal god") of information workers.

From early civilizations to today, humans have only experienced four eras - the hunting and gathering era, the agricultural era, the industrial era, and today's information era.

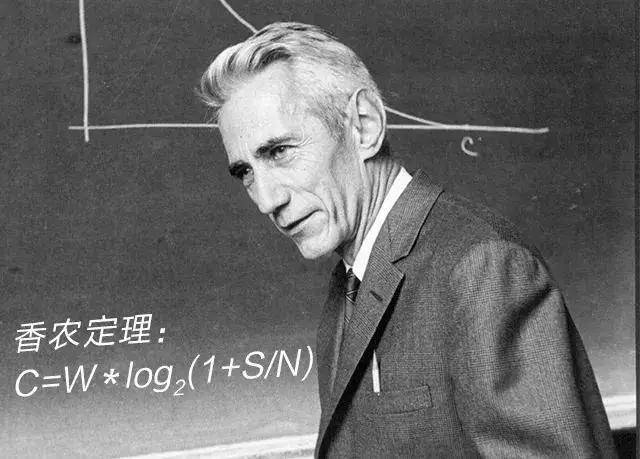

Claude Elwood Shannon, a scientific giant born into an ordinary family in Michigan, is a pioneer of the information age. It can be said that he single-handedly opened the door to a revolution in human communication methods.

In 1948, Shannon published his groundbreaking paper, "A Mathematical Theory of Communication," which, like a bolt of lightning piercing the sky, announced the arrival of information theory on the historical stage.

Some believe that Shannon's law should be included among the "five most important laws in human history," on par with Newton's laws of classical mechanics and Einstein's theory of relativity.

For example, the smallest unit of measurement for building the information world today - the bit - was proposed by Shannon. In other words, even if humanity enters the metaverse era in the future, the measurement standard for that universe would still be proposed by Shannon.

In 1948, Shannon's information entropy solved the problem of how to measure the amount of signal information in telegraphy, telephony, radio, etc.

But a specific question is how to further improve channel capacity in long-distance communication, that is, where the upper limit of information transmission rate lies. This "where" is the so-called "Shannon limit."

Shannon's brilliance lies in the fact that the Shannon limit is not a hypothesis because Shannon provided a specific channel capacity formula, known as the "Shannon formula," which forms the basis for almost all modern communication theories.

The concept of signal-to-noise ratio must be mentioned. Simply put, in a communication process, the stronger the signal and the lower the noise, the higher the communication quality, which is commonly referred to as a high signal-to-noise ratio.

It can be said that since Shannon's formula, the mainstream direction of human communication development has centered around expanding bandwidth and improving signal-to-noise ratio.

To some extent, one important reason mobile communication could develop from 1G to today's 5.5G is that, by increasing bandwidth, we can comfortably handle even signal-to-noise ratios below 0 dB.
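For reference, the Shannon formula for a channel with additive white Gaussian noise is C = B * log2(1 + S/N), where C is the capacity in bits per second, B is the bandwidth in hertz, and S/N is the linear signal-to-noise ratio. The sketch below is only an illustration: the bandwidth and SNR values are made-up examples, not measurements from any real network. It shows that capacity stays positive when the SNR is below 0 dB, and that a wider band can more than make up for a noisier channel.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Shannon capacity C = B * log2(1 + S/N) for an AWGN channel."""
    snr_linear = 10 ** (snr_db / 10)  # convert dB to a linear power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)


# Illustrative values only (assumptions, not measurements).
narrow_clean = shannon_capacity_bps(bandwidth_hz=1e6, snr_db=20)   # 1 MHz at 20 dB
wide_noisy = shannon_capacity_bps(bandwidth_hz=20e6, snr_db=-3)    # 20 MHz at -3 dB

print(f"1 MHz at 20 dB : {narrow_clean / 1e6:.2f} Mbit/s")
print(f"20 MHz at -3 dB: {wide_noisy / 1e6:.2f} Mbit/s")
```

With these example numbers, the wide but noisy channel carries more than the narrow, clean one, which is the sense in which bandwidth can compensate for a poor signal-to-noise ratio.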

In theory, bandwidth is king. However, laws are laws, and the real world we face is always much more complex than experiments.

For example, real-time audio communication such as online meetings and voice calls often has to continue in environments with poor network connectivity, such as elevators, basements, and tunnels. Similar situations occur on fast-moving vehicles (such as high-speed trains and cars) and in areas with poor signal coverage (such as rural and remote regions).

You can increase the signal in a weak signal area (such as adding another transmission tower), but how can you predict every weak signal point encountered by each person during each trip?

And for the research and development personnel of Tencent Meeting's Tianlai Lab, whose applications cover scenarios such as Tencent Meeting and QQ voice, these are problems they have to solve every day - and this is their motivation for challenging Shannon's limit.

With Shannon's formula as the foundation of communication theory, the main direction has been to keep innovating to improve signal-to-noise ratio and bandwidth utilization.

However, Xiao Wei, an expert researcher at Tencent Meeting's Tianlai Lab, the project leader of Penguins, and the Editor of the AVS3-P10 standard, said, "Since the reality is that we cannot guarantee sufficient bandwidth at all times, we will strengthen the encoder's capabilities and do the opposite - ensuring normal and high-quality information transmission even under very low bandwidth conditions, relying on a smaller bit rate. Of course, when bandwidth is sufficient, the audio quality can still compete with existing solutions."

Don't think this is simple. The distance between proposing an idea and realizing it is not something that can be crossed in one leap.

For example, more than 30 years ago, the International Telecommunication Union proposed that the acceptable delay for two people communicating from opposite ends of the earth is within 400 milliseconds.

But even with 6G on the horizon today, humans still cannot guarantee that the delay of any long-distance communication remains within 400 milliseconds.

The same applies here: industry standards can set a very high bar, but the actual bandwidth environment is always intricate and complex.

Coding technology is a meaningful direction. Its core significance is to package the original sound (which we can consider as the goods to be transported) in a specific form. If the packaging is done cleverly enough, the volume can be very small, allowing for more goods to be transported with the same transportation capacity. But if one blindly pursues compressing the volume, it may "damage" the goods, thus failing to obtain high-quality speech.

The challenge for Tencent Meeting's Tianlai Lab is that existing mainstream audio codec standards such as EVS and OPUS are already mature and widely used, but the evolution of technology has stagnated at these standards for a long time.

Xiao Wei said, "One of the backgrounds for our work was that when the bit rate dropped below 10 kbps, the transmission speech quality of any existing encoder deteriorated significantly, affecting user experience. This meant that we could not simply improve on others' technology but had to make changes and innovations at the underlying technology level."

This is the challenge that Tencent Meeting's Tianlai Lab posed to the Shannon limit, but this time they had an extra teammate - Tencent AI Lab.

When traditional methods are almost exhausted, is emerging AI technology the new gospel for audio and video encoding?

02

The amount of black technology is proportional to the amount of engineering transformation

First, let's talk about Tianlai Lab's goal. Their general goal is to significantly reduce the required bit rate while maintaining or even improving speech quality.

That is to say, since the current watershed is 10 kbps, the new coding technology needs to achieve a subjective experience of 4 points or even 4.5 points (out of 5) in terms of speech quality under lower bit rates (such as 6 kbps).

Here we need to introduce the concept of a codec, which generally refers to hardware or software that performs compression (encoding) and decompression (decoding) of video and audio. With the rise of AI, AI codec technology has emerged: codec innovation driven by AI methods.

Industry insiders point out that AI codecs already surpass traditional H.264 and H.265 in compression performance while taking only one-sixth of the development time of traditional methods. Traditional codecs, from H.261 in 1988 to H.266 in 2020, have iterated about once every ten years on average; by comparison, the development of AI codecs can be described as rapid.

"End-to-end optimization under the auspices of AI is a systematic project, a path we have not taken before," said Xiao Wei. "But we have a good value system that makes our goals clear. Because Tencent's value is to prioritize user experience, which actually sets our research and development priorities - first ensuring a significant improvement in experience, and then finding specific paths under this premise, giving us a sense of direction for all our explorations."

Traditional audio technology has actually approached Shannon's limit under traditional conditions. Xiao Wei introduced, "If the sampling rate of an original signal is 16,000 points per second, with each point represented by 16 bits, without any compression, it would be 256 kbps. Roughly estimating a 10-fold compression gives 24 kbps, which is the Shannon limit for traditional codec technology. In fact, this is also the case. Currently, based on traditional methods, a codec bit rate of around 20 kbps can ensure good quality."
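The figures in this quote can be checked with a few lines of arithmetic. This is a back-of-the-envelope sketch with illustrative variable names; the 6 kbps value is the low-bit-rate target mentioned earlier in this section. Note that an exact 10-fold reduction of 256 kbps is 25.6 kbps, so the 24 kbps in the quote is best read as a rough estimate.

```python
SAMPLE_RATE_HZ = 16_000   # samples per second, from the quote
BITS_PER_SAMPLE = 16      # bits per sample, from the quote

raw_kbps = SAMPLE_RATE_HZ * BITS_PER_SAMPLE / 1000   # uncompressed bit rate
tenfold_kbps = raw_kbps / 10                         # rough 10-fold compression

traditional_kbps = 20     # "around 20 kbps" for good quality with traditional codecs
target_kbps = 6           # low-bit-rate target mentioned earlier in the article

efficiency_gain = traditional_kbps / target_kbps     # how many times fewer bits
bitrate_saving = 1 - target_kbps / traditional_kbps  # fraction of bits saved
overall_ratio = raw_kbps / target_kbps               # compression relative to raw audio

print(f"raw: {raw_kbps:.0f} kbps, 10-fold: {tenfold_kbps:.1f} kbps")
print(f"20 -> 6 kbps: {efficiency_gain:.2f}x fewer bits, {bitrate_saving:.0%} saved")
print(f"6 kbps vs raw audio: about {overall_ratio:.0f}:1")
```

Going from 20 kbps to 6 kbps therefore means needing a little over three times fewer bits, or a 70% cut in bit rate.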

"Generally speaking, in the field of information technology, a 20% improvement in efficiency can be considered an iteration," said Xiao Wei. "But this time, it's equivalent to reducing 20 kbps to 6 kbps, with an optimization amplitude of over 300%, which can only be achieved with the empowerment of new forces like AI."

In fact, there has been debate about whether to do this. Some believe that the current user bandwidth level is already very high, and 24 kbps is actually very low, so the ROI of further improvement is very low.

"But we don't see it that way. Because we have real scenarios with hundreds of millions of users," said Shang Shidong, vice president of Tencent Cloud and director of Tencent Meeting's Tianlai Lab. "From our real observations, there are numerous cases where users encounter weak network environments, which leads to significant benefits even from simply improving technology from the perspective of reducing bit rates. Even more, new and unexpected application scenarios may be developed."

In fact, in the process of understanding the birth of Penguins, I found that the Tianlai Lab and Tencent AI Lab teams jointly solved at least four major issues before finally achieving today's results.

The first issue is the path problem.

"In fact, this demand was raised in 2020, initially proposing to ensure moderate speech quality under low bit rates," said Yang Shan from Tencent AI Lab. "But at the time, we did not have any established routes to refer to."

After repeated discussions, it was decided to introduce deep neural networks, conduct massive learning in advance to perform speech modeling, thereby "utilizing AI capabilities to capture the most core audio feature parameters during encoding, intelligently allocate bit rates based on importance, and then predict and reconstruct fine speech structures using deep learning networks, ultimately generating realistic audio waveforms."

If it's difficult to understand this paragraph, let's use a metaphor - traditional codecs are like packaging and shipping in a fixed form, and if some packages are lost midway, there is no way to recover. But with AI empowerment, it will intelligently decide the optimal packaging method based on the characteristics of the goods and the transportation capacity. And if it predicts the possibility of losing goods, it can automatically replenish them, ensuring that the received packages are processed in the most appropriate way, and losses will be supplemented in real-time, greatly improving the satisfaction of both the shipper and the receiver.
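To make "allocate bit rates based on importance" a little more concrete, here is a toy sketch. It is not the Penguins algorithm, whose details this article does not give: the parameter names, the importance weights, and the 20 ms frame length are all hypothetical, chosen only to show how a fixed per-frame bit budget might be split in proportion to importance.

```python
from typing import Dict


def allocate_bits(importance: Dict[str, float], budget_bits: int) -> Dict[str, int]:
    """Split a per-frame bit budget across parameters in proportion to importance.

    Uses the largest-remainder method so the integer shares sum exactly to the budget.
    """
    total = sum(importance.values())
    exact = {name: budget_bits * w / total for name, w in importance.items()}
    bits = {name: int(share) for name, share in exact.items()}  # floor each share
    leftover = budget_bits - sum(bits.values())
    # Hand the leftover bits to the parameters with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda n: exact[n] - bits[n], reverse=True)
    for name in by_remainder[:leftover]:
        bits[name] += 1
    return bits


BITRATE_BPS = 6_000   # the 6 kbps target mentioned in the article
FRAME_MS = 20         # hypothetical frame length (assumption)
budget = BITRATE_BPS * FRAME_MS // 1000   # bits available per frame

# Hypothetical feature parameters and importance weights (assumptions).
weights = {"spectral_envelope": 5.0, "pitch": 2.0, "energy": 1.5, "fine_detail": 0.5}
allocation = allocate_bits(weights, budget)
print(budget, allocation)
```

In a real neural codec the importance would be learned from data rather than written by hand; the point of the sketch is only that a small budget forces an explicit choice about which parameters get the bits.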

In the following year, Penguins began serving scenarios such as Tencent Meeting as a new generation of AI speech engines, receiving a lot of praise, which initially proved that Tencent Meeting had chosen the right path.

The second issue is endless optimization.

Why did the technology, which began to be tested in 2021, only become widely known in 2024? Besides the significant amount of time required for participating in AVS standards work, a specific issue is that this technology is constantly being optimized along with product upgrades.

"If you dig into the details of this work, it can be endless," said Yang Shan. "The human ear is a very sensitive organ, and hearing is subjective and sometimes even mystical. For example, after a certain degree of compression, some people can hear the background noise, while others cannot, so we have to optimize based on the standards of those with more sensitive hearing, which creates a lot of algorithmic and engineering issues."

At the same time, the requirements are constantly increasing. "The initial goal was to meet moderate call quality under low bit rates, which was later continuously improved to lower bit rates and higher quality," said Xiao Wei. "You can also understand this as us being successful, or still on the way."

"Sometimes optimization is interesting because different scenarios produce different needs, and we have to do a good job of tuning," said Liu Tiancheng, the technical leader of QQ audio and video. "Just for QQ voice communication, if it's private point-to-point communication, the requirement for speech restoration is very high, such as whispers, breathing sounds, etc., which need to be realistically restored. There is also a scenario of continuous communication for several hours or even an entire night, where we need to consider factors like power consumption, device heating, and power saving throughout the process. You can say that the amount of black technology is proportional to the amount of engineering transformation."

The third issue is that the model needs to be small enough.

Yang Shan said, "I can clearly state that this is not a large model or a small model distilled from a large model. Because what we are doing is an AI codec, and there are many codecs concentrated in a speech solution. You can understand that we are creating a very precise small model with strict limitations on volume, efficiency, and power consumption."

Moreover, since it is mainly for real-time speech scenarios, 99% of it occurs on smartphones. "This requires us to continuously reduce the demand for end-side computing power, achieving consistent experience across different devices and network environments. In a joking manner, it's like 'running at the speed of fire wheels even on low-end phones,' which places very high demands on technology."

In fact, the final Penguins model is only of the scale of hundreds of K. Yang Shan said, "This reflects the underlying technical capabilities of Tencent AI Lab's team. Many people mention models and think of algorithms, but our tuning granularity is much finer than algorithms, including the most basic operators and even lower-level functions. We optimize them all, which is why we can ultimately produce a world-class product - it's the confidence and mastery of underlying capabilities."

"In summary, we are solving a 'both-and' problem: high quality, low bit rate, low computing power (and actually low latency as well). Therefore, when designing the entire solution on the system side, we further tightly integrated traditional signal processing and information theory with the latest AI technology, forming a new methodology combining data-driven and domain knowledge. This is not a simple 1+1 process; rather, it is a closed-loop approach to the problem from multiple aspects such as top-level design of the solution, algorithmic details, and extreme engineering," explained Xiao Wei.

If the above three issues belong to technical issues, then the ultimate test faced by Penguins is whether, having achieved world-class innovation, it can gain world-class recognition and create value for users widely, which is the ultimate big issue.

So we will discuss this issue in the next section - the issue of standardization.

03

The road to becoming a world standard is not smooth

In June 2024, the AVS3P10 real-time voice coding standard officially completed its standardization work and entered the public disclosure stage.

We need to first understand what AVS is.

Simply put, AVS (Audio Video Coding Standard) is an audio and video coding standard with fully independent intellectual property rights in China. As a working organization, it was established 22 years ago in 2002.

Its advanced nature lies in being the world's first audio and video source coding standard aimed at 8K and 5G industrial applications, and it has been officially incorporated into the core specifications of the international Digital Video Broadcasting (DVB) organization.

Readers who are familiar with computers may recall that the audio and video files they play most often carry the file name suffix *.MPEG.

That's right, the main competitors of AVS are the MPEG-1, MPEG-2, MPEG-4 and other standards developed by the MPEG expert group jointly established by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC).

In terms of technical performance, there is a direct competitive relationship between AVS and MPEG standards, especially in terms of encoding efficiency, compression ratio, etc. The AVS standard not only maintains technical performance comparable to international standards, but also has the advantages of low complexity and low implementation costs.

But in terms of market coverage, the advantage of MPEG is its long history, so it is a de facto international standard.

Therefore, the competition between AVS and MPEG to some extent reflects the fierce competition in an important category of the digital industry - the audio and video technology market.

And Tencent participated in the development of a module in AVS3, the third-generation standard of AVS, which is the P10 real-time voice coding standard.

In fact, although Penguins serves billion-scale scenarios in China such as Tencent Meeting and QQ Voice, it is not the only deep-learning-based speech coding solution internationally; others include Microsoft's Satin and Google's Lyra and SoundStream. The focus of competition is likewise on low bit rate and high quality - for example, Microsoft's solution is also based on 6 kbps, while Google emphasizes performance better than Microsoft's.

In a sense, Penguins represents China's highest level in AI codecs, and by taking part in standards development it helps Chinese standards become more competitive internationally.

But this does not mean that AVS will "open a backdoor" for Tencent. On the contrary, Tencent not only has to actively propose and take part in drafting the standard; the candidate technologies it submits based on Penguins must also pass cross-validation by the AVS audio group before they can be adopted.

In fact, as the AVS3P10 real-time speech coding standard completed its standardization work in June 2024 and entered the public disclosure stage, some previously undisclosed information has gradually come out.

"It is worth mentioning that Tencent led the development of this standard, and the AVS working group rated the process as the fastest in development and the highest in delivery quality, with unanimous praise during testing," said Shang Shidong.

The AVS working group also pointed out that "AVS3P10 real-time voice coding, as a new generation of voice coding and decoding technology standard, is an important supplement to the AVS series standards. This standard is currently at the highest level in the industry, reflecting Tencent's strength in voice processing, artificial intelligence technology innovation, and user experience, and will bring users a better experience."

"At present, according to publicly available materials, our solution is the only one in the industry that reaches a subjective and objective quality score of 4 or even 4.5 points, even at 5.9 kbps, which is a fraction of a point," Xiao Wei said. "Compared horizontally with international standards, our quality advantage is very obvious at 10 kbps, which to some extent reflects the leading position of the Chinese standard over international standards developed during the same period."

At the same time, this lead holds in comparisons with both traditional signal-processing encoders and advanced AI codecs. Xiao Wei said excitedly, "From the current perspective, we represent the highest level in the industry: the world's first systematic introduction of AI capabilities to form a next-generation speech coding standard, one that truly achieves high quality at low bit rates in practical environments. That is why AVS reached this conclusion about us, and we are proud of it."

As for why they would turn their painstakingly crafted technology into a standard, Shang Shidong's view is that this reflects Tencent's openness and also serves the common progress of the industry.

"We are in a standardization organization, and exposing all the new technical frameworks and details is objectively equivalent to opening them up to the entire industry," said Shang Shidong. "This is also what Tencent has always advocated. Through friendly cooperation and openness, we can greatly advance the entire industry in the next generation of voice encoding and decoding technology, and help this industry provide better technology and products to all users."

Regarding the sharp question of "whether disclosing standards and details will actually weaken Tencent's competitiveness", Shang Shidong's view is: "Firstly, Tencent is a business-oriented company. It will not lose its competitiveness just because a single standard is disclosed, because our technologies are first proven in scenarios at a scale of billions, and only then are the mature solutions standardized. This closed loop is difficult to replicate."

In fact, the author also believes that for partners and the ecosystem, the best way to use Penguins technology in the shortest possible time is not to do reverse research and development with standards, but to cooperate with Tencent, connect more scenarios and create more value through ecological connections.

04

Debate between products and technology

In the introduction, we raised a question - why has Tencent, a company that is good at product development, achieved world-class underlying technological innovation? What are its path advantages and industry insights?

One view is that companies that produce products have more opportunities for technological innovation because they know where the real pain points lie. Because products are meant for users, these technical personnel are exposed to the problems of technology application in the real world. They have a better understanding of various special situations and pain points in the real environment than in a pure laboratory.

An internet mogul I know has made a well-known judgment: Chinese companies are more likely to achieve results in the AI era, because enterprises that build applications and products run into problems before pure research institutions do, and whoever meets the problems first can achieve technological breakthroughs faster and earlier in the course of solving them.

This statement partially explains why Tencent, which is good at making products, achieved a breakthrough in underlying technology earlier this time.

But we can go deeper because there has always been a debate in the digital industry about whether to prioritize technology or products.

One view is that technology should be developed first, and then explored from upstream to downstream to see what useful products can be produced.

Another viewpoint holds that the productization of technology is the prerequisite for its rapid evolution. It is the product that gives technology the opportunity to face real users, and productization that drives a four-step closed loop of "technology productization → product commercialization → user feedback → technology innovation", allowing products to drive technological development upstream from downstream.

For example, in the late 1960s, with the rapid development of computing and semiconductor technology, semiconductor component manufacturing technology shifted from discrete devices, small-scale, and medium-sized integrated circuits to large-scale integrated circuits.

As a milestone in this technological trend, the 4004 chip, the crystallization of large-scale integrated circuit technology, was born at Intel in 1971.

But we cannot assume that the 4004 was a product when it was introduced, because products are always aimed at specific purposes, and at that time no one in Silicon Valley, Intel executives included, knew what the 4004 could be used for. Intel founder Noyce believed its best use might be an electronic watch, some speculated about a kitchen mixer, and some believed it could be used to control a car's carburetor.

This to some extent indicates that, without productization, technology is like a soul without a body, able only to wander alone.

For example, it was IBM that truly turned large-scale integrated circuits from a technology into mature products and developed them into an industry.

Don't assume that big companies are technology-driven. IBM did not invent PC technology, but it successfully launched the PC as a product by integrating Intel's chips and Microsoft's MS-DOS operating system. The computer industry thus divided into two distinct camps, software and hardware, and generation after generation of product innovation has driven engineers to develop faster processors and more capable software. This rule still holds today.

The lesson of this history is that technology is sometimes 0 to 1, and products are 1 to 100. The 1 is indeed important, but without the 100, a bare 1 loses its meaning and has no opportunity to evolve; only when there is a 100 can people write more zeros after it and even change the entire world.

So it is the product that chooses and promotes technology, rather than technology choosing the product. If you only have technology, there is only a very small probability that it will be turned into a product and a greater possibility that it will be shelved, as with Japan's hydrogen-powered cars.

By first developing products and continuously iterating afterwards, a thriving industry can emerge and drive continuous technological progress. This can be said to be the mainstream of human technological evolution, such as China's new energy vehicle industry and AI industry.

To put it absolutely, so far in human society, all technologies have been born without knowing what they can be used for. It is products that provide opportunities for technology to be user oriented, allowing technology to develop downstream.

Looking back, the success of Penguins, from a technical perspective, is a breakthrough formed by AI's remarkable focus on a single point. However, from a product perspective, without products like Tencent Meeting and QQ Voice that have billions of users, as well as the real scenarios and demands generated by these products, no one would have realized the significance and urgency of transforming coding standards, let alone do such work. Our codec technology would have remained in the pre AI era, and we would not have enjoyed smoother and high-quality voice communication that can be conducted anytime and anywhere.

China is the second largest source of AI innovation in the world and the only single market with a scale of 1 billion users, which makes it possible for us to overtake on the bend in the post-internet era and the AI era. The premise, however, is that more companies that are good at making products have enough vision and ambition, and the breadth and awareness to start from product innovation without stopping at it.

In this situation, giant companies like Tencent take the initiative to research underlying technologies from a product perspective and adopt an open and friendly attitude towards sharing successful technologies. This is of great significance for the progress of the entire industry and the improvement of the experience of every netizen, and has also established a good empowering paradigm.

Meanwhile, this also shows that the notion of "first-class companies set standards, second-rate companies make products" is outdated. In the digital age, technology and products interact and iterate, and improving technology requires a large amount of feedback from products and users. This once again reminds us that the ability to create products deserves close attention. It is the key to the development and continuity of business civilization. Overemphasizing technology and neglecting products often leads to misallocated resources and lost market opportunities.