Can Alibaba Cloud and Others Pick Up What OpenAI Leaves Behind?

07/08 2024

It is July 8, just one day before the final deadline.

On June 25, OpenAI notified some developers that it would block API traffic from unsupported regions as of July 9. Unfortunately, OpenAI currently only opens its APIs to 161 countries and regions, and China is not included. This means that Chinese developers will no longer be able to use OpenAI's API services.

Some compare this "ban" to the earlier chip supply disruption and see in it the importance of developing domestic large models. Others look ahead, seeing both an opportunity for domestic large models to rise and the severe challenges they will face. The news has caused quite a stir, which is perhaps a measure of OpenAI's pull as the leader in large models.

However, as followers, domestic vendors have become quite formidable after two years of development. Shortly after the OpenAI ban was announced, these vendors sprang into action, attempting to capture the market share OpenAI leaves behind. So, can they really pick it up?

01

Customer Acquisition Race

What impact will the OpenAI ban have on the large model industry?

On one hand, some developers have a range of concerns; on the other, large model vendors are eager to compete for them.

Currently, there are two main channels for domestic use of OpenAI technology: one is to connect to the API provided by OpenAI officially, and the other is to connect to the OpenAI technology provided by Microsoft's intelligent cloud Azure. Although the first channel has been closed, the second channel remains open. In fact, even Microsoft employees have stated that the focus this year, including in China, is to promote OpenAI.

This means that the impact of the OpenAI ban on developers is not as significant as imagined. In fact, it is the actions of domestic vendors after the ban announcement that have had a greater impact on developers.

What happens when the most popular and heavily trafficked restaurant on a street is closed? The surrounding restaurants take advantage of the opportunity to aggressively promote and attract customers. The current large model sector is no different.

With the announcement of the OpenAI ban, large model vendors quickly sprang into action, launching their own migration solutions, with some even offering zero-cost migration services, essentially saying, "Just come."

Among them, Baidu Intelligent Cloud Qianfan moved first, offering a "0 yuan migration + service" package. Under it, the Wenxin flagship large model is offered free for the first time, and users migrating from OpenAI receive a usage package for a more advanced flagship model matched to the scale of their OpenAI usage, valid until 24:00 on July 25.

Among the major players, Alibaba Cloud is also not to be outdone, promising to provide "the most cost-effective alternative Chinese large model solution," with Tongyi Qianwen offering 22 million free tokens and exclusive migration services to Chinese developers. Tencent Cloud announced that it would gift 100 million Tencent Hunyuan large model tokens to newly migrated enterprise users and provide free exclusive migration tools and services.

In addition, Zhipu AI, 01.AI, Baichuan Intelligence, Moonshot AI, and others have joined the race to take over, offering "special relocation plans," "Yi API two-fold replacement plans," "zero-cost migration plans," and more. Among them, Moonshot AI stated, "Kimi's open platform interface is fully compatible with OpenAI, allowing for a seamless move in as little as five minutes."
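To make the "fully compatible" claim concrete, here is a minimal sketch of what such a migration might look like, assuming the vendor exposes an OpenAI-style `/chat/completions` endpoint. The vendor base URL, API keys, and model name below are hypothetical placeholders, not values taken from any vendor's documentation; the request is built but never sent.

```python
import json
import urllib.request

# OpenAI's public API base URL; the second URL is a hypothetical placeholder
# standing in for any vendor that advertises OpenAI compatibility.
OPENAI_BASE_URL = "https://api.openai.com/v1"
COMPATIBLE_BASE_URL = "https://api.example-vendor.cn/v1"  # assumption, not a real endpoint


def build_chat_request(base_url, api_key, model, prompt):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Only the base URL, key, and model name differ between the two requests;
# the message format and endpoint path stay the same.
before = build_chat_request(OPENAI_BASE_URL, "sk-old", "gpt-4", "Hello")
after = build_chat_request(COMPATIBLE_BASE_URL, "sk-new", "vendor-model", "Hello")
```

If a vendor's interface really is compatible in this sense, migration reduces to changing three configuration values, which is what makes a "five-minute" move plausible. Differences in model behavior, rate limits, and optional parameters would still need testing.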

These vendors, new and old, have reason to be confident. In the early stages of development, many domestic vendors built "shell-wrapped" products on top of OpenAI, passing its technology off as their own, and there were even rumors of big tech companies doing so. After two years of development, however, domestic vendors are now able to stand on their own.

As Zhou Hongyi said, "In the early stages of domestic large model training, OpenAI was used to generate some fine-tuned data, essentially 'distilling' the capabilities of OpenAI GPT-4 into domestic local large models. But this was in the early stages of large model development. Now, many training methods, data, and tools for large models have become very mature, and not many vendors are still doing this."

From an industry perspective, the OpenAI ban makes life difficult for the vendors that still "shell-wrap," but it benefits domestic vendors with independent research and development capabilities: first, it gives them a little more market share; second, it pushes them to keep strengthening their own research and development investment.

The free-for-all among hundreds of competitors that once played out in group buying, ride-sharing, and bike-sharing is now being replayed in the large model sector, and even more fiercely.

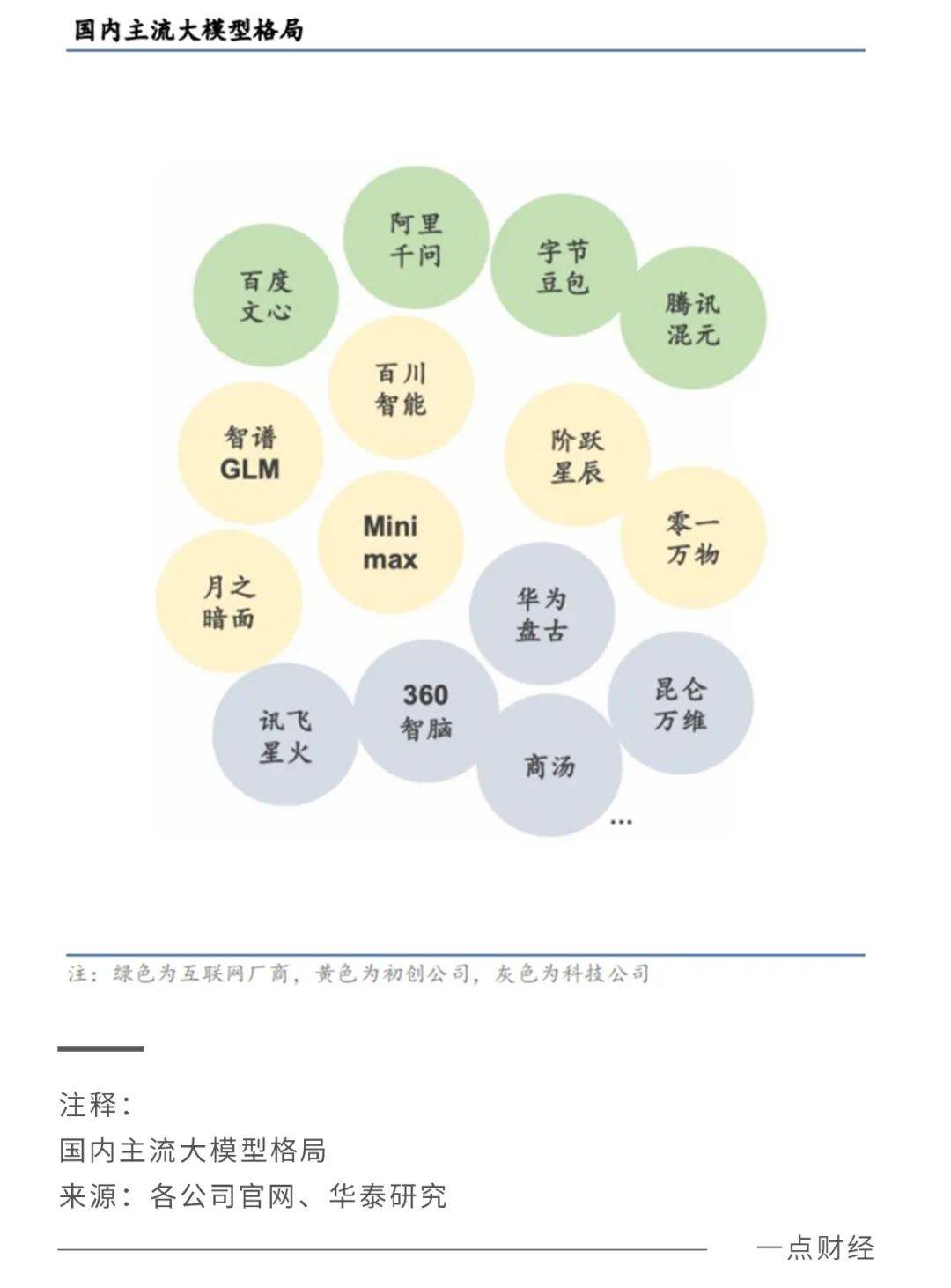

At the recent World Artificial Intelligence Conference and High-Level Conference on Global Governance of Artificial Intelligence (WAIC 2024), the number of large models exhibited grew from over 30 last year to over 100, including established players such as Alibaba, Huawei, Baidu, SenseTime, Tencent, and NetEase, as well as newer players such as Zhipu AI, Baichuan Intelligence, and StepFun.

"This WAIC is the most popular one I've attended," Xie Jian, co-founder of Baichuan Intelligence Technology, remarked at a forum.

The hot market is driving these vendors to iterate continuously. At the conference, vendors such as Huawei, Kuaishou, SenseTime, Tencent, NetEase, and Bilibili each played to their strengths, building on their core businesses, their video expertise, or their advantages in voice.

Small and medium-sized vendors also have their own competitive strategies. Some said they tested over 20 application scenarios in 2023 but have focused this year on a single one, the "enterprise marketing large model," digging deeper to address the customer acquisition costs that trouble enterprises most.

If 2023 was the birth year of large models, then 2024 is the application year of large models. Everyone is trying to pinpoint their strengths and quickly find their footing in industries and businesses. The customer acquisition race after the OpenAI ban is just another ordinary new day of competition.

02

The Battle for Commercialization

From group buying to food delivery, from ride-sharing to bike-sharing, every round of competition in China's internet industry has been shadowed by price wars. Cut prices to attract customers, grow market share, then raise prices to earn profits: this is almost the standard path of competition in every industry, and internet vendors have come to rely on it.

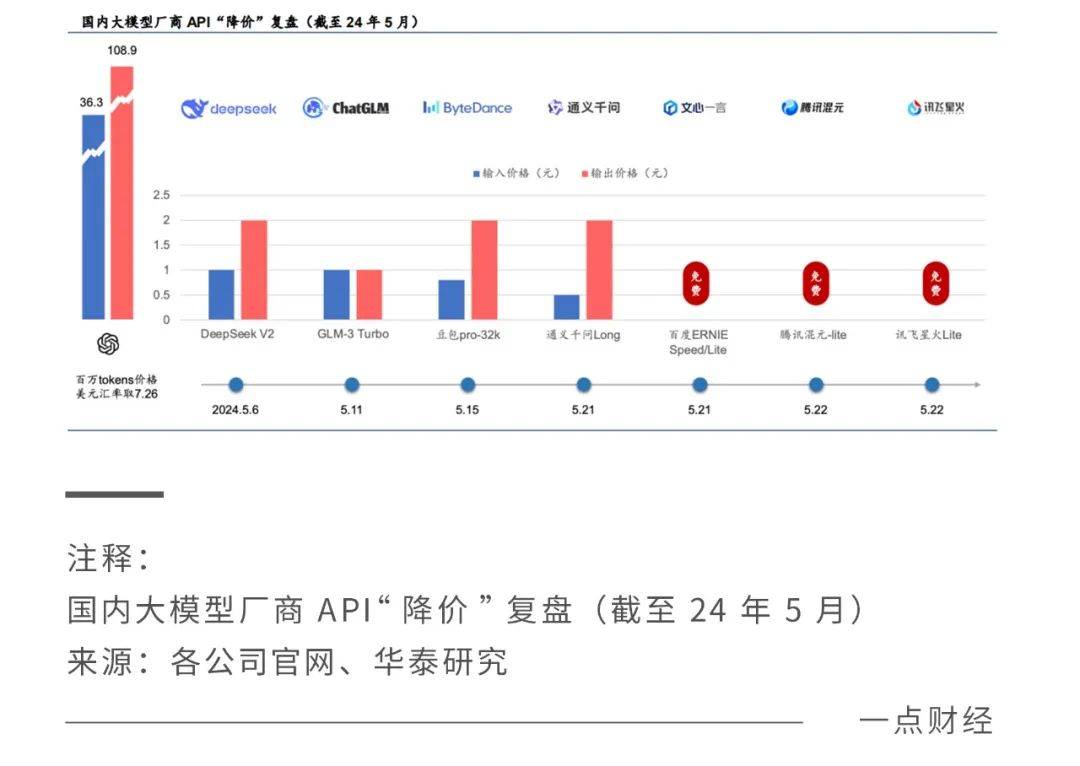

It seems to be the same with large models. The customer acquisition race after the OpenAI ban is actually just a microcosm of the price war in large models. Since May of this year, domestic vendors have launched a round of price reductions for large models, with self-developed models that can compete with global mainstream models such as GPT-4 reducing prices from several cents per unit to a few tenths of a cent.

Baidu announced that the Wenxin Large Model 4.0 Turbo (ERNIE 4.0 Turbo) is fully open to enterprise customers, with input and output prices of 0.03 yuan per 1,000 tokens and 0.06 yuan per 1,000 tokens, respectively.
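As a rough illustration of what these per-token prices mean in practice, the sketch below computes the cost of a single request at the quoted rates. The token counts in the example are hypothetical, and the calculation assumes simple linear billing with no free quota or tiered discounts.

```python
from decimal import Decimal

# Prices quoted in the article for ERNIE 4.0 Turbo, in yuan per 1,000 tokens.
INPUT_PRICE_PER_1K = Decimal("0.03")
OUTPUT_PRICE_PER_1K = Decimal("0.06")


def request_cost_yuan(input_tokens, output_tokens):
    """Cost of one request in yuan, assuming simple linear per-token billing."""
    total = (
        Decimal(input_tokens) * INPUT_PRICE_PER_1K
        + Decimal(output_tokens) * OUTPUT_PRICE_PER_1K
    )
    return total / 1000


# Hypothetical request: 2,000 input tokens and 500 output tokens.
cost = request_cost_yuan(2000, 500)
print(cost)  # 0.06 yuan for input + 0.03 yuan for output
```

At these rates, a million such requests would cost about 90,000 yuan, which gives a sense of why enterprise buyers weigh output quality more heavily than unit price.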

Of course, these shrewd large model vendors do not pin as much hope on price cuts as vendors in other industries did. Compared with more consumer-oriented businesses such as group buying, food delivery, and ride-sharing, large models are more business-oriented and require heavier investment, and their users are less sensitive to price.

There is a consensus that AI will drive a huge, cost-driven productivity revolution, yet businesses adopt AI less for its price than for the business value it can generate. In plain terms, businesses use AI to reduce costs and raise productivity, but they are less price-sensitive than ordinary consumers; they care more about whether it works well.

He Xiaodong, Dean of JD.com Exploration Research Institute, expressed the sentiments of many insiders: "The price war for large models will indeed help the ecosystem flourish in the short term, but in the long term, it is certainly not a sustainable strategy. The current prices may not even cover electricity costs."

It can be foreseen that the price war for large models will unfold with rationality and caution. Huatai Securities also holds this view in a research report: "We believe that domestic vendors will not blindly engage in a price war during this round of price reductions and will still consider factors such as costs."

After analyzing the price cuts announced by major model vendors as of May this year, Huatai Securities found that the models being discounted or offered free were usually not the vendors' most advanced ones, but Lite versions or models that were already cheap. At the same time, "those with larger price reductions (over 80%) are mostly domestic internet giants with their own cloud computing power infrastructure."

If the intention is not a price war, then what is the real purpose of these price reduction actions by domestic vendors?

Large models serve both consumers and businesses. OpenAI's ChatGPT sparked a wave of chatbot conversations among tech enthusiasts worldwide, and OpenAI also works with Apple and Microsoft. The former made the concept of large models explode in popularity, sent OpenAI's valuation soaring, and showed the world the vast prospects of large models; the latter grounded large models in industry and made them commercially viable.

Nowadays, many large model vendors have chatbots for consumers, which serve as a window to showcase their capabilities to ordinary users. However, most vendors, like OpenAI, prioritize commercialization over the development of model capabilities. According to foreign media reports, OpenAI is considering transforming its governance structure into a for-profit enterprise.

Especially for Chinese vendors, commercialization must be accelerated for two reasons:

First, the investments are significant. Large models require investments of tens of billions or even hundreds of billions of yuan, and no enterprise, however large, can keep investing for long without returns. Therefore, it is necessary to pursue basic research and applied research together, quickly turning technology into results and building a positive cycle between technology and the market.

Second, the winner takes all. The large model market is a winner-takes-all market, and competition in China is fierce. Slowing down on commercialization means spending more later to regain market share, and may even mean falling further behind.

Whether a large model grows into a super app or into a super model deeply integrated into industries, the prerequisite for its commercialization and widespread adoption is a broad base of users and customers.

Minsheng Securities pointed out in a research report that when a new application is launched, the market should observe metrics such as user scale and activity, downloads, and product iterations from a growth perspective, focusing on the application's development potential and de-emphasizing profitability indicators such as paid user scale and paid penetration rate.

Price reductions are always an effective way to stimulate consumption and increase adoption, and the large model sector is no exception. According to Alibaba Cloud CTO Zhou Jingren, after the price reductions, a large number of customers directly invoked large models on Alibaba Cloud. In the past two months, downloads of the Tongyi Qianwen open-source model doubled, exceeding 20 million, and the number of Alibaba Cloud Bailian service customers grew from 90,000 to 230,000, an increase of over 150%.
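The "over 150%" figure can be checked directly from the two customer counts quoted above. The helper name below is illustrative only.

```python
def percent_increase(before, after):
    """Relative growth from `before` to `after`, expressed as a percentage."""
    return (after - before) / before * 100


# Alibaba Cloud Bailian customer counts quoted in the article.
growth = percent_increase(90_000, 230_000)
print(f"{growth:.1f}%")  # 155.6%
```

The result, roughly 155.6%, is consistent with the article's "over 150%."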

Where there are users/customers, there is an ecosystem, and where there is an ecosystem, there is scale and growth. As API resource providers, AI open platforms have a flywheel effect: more API products, lower invocation prices – better AI capabilities and experiences – more developers join – forming a larger industrial ecosystem – feeding back to strengthen the AI platform – more products, lower prices...

Behind the price competition is a battle for commercialization and a scramble for a ticket to the future. Whoever can acquire more customers and land in more scenarios and industries will have the greater advantage.

03

Conclusion

While engaging in price competition and customer acquisition races, large model vendors cannot ignore another crucial battle: the technology battle.

Behind the price competition is the technology battle. Simply sacrificing profit to cut prices is a brute-force approach. A better approach is to optimize the components of the architecture so that training and inference themselves cost less.

The ultimate competition is the technology battle. The large model industry is talent-intensive and technology-intensive, and top-tier technology is almost a prerequisite for all competition. Making do with makeshift solutions or buying off-the-shelf components will not work here. Competitors will not wait for you, and users do not have the patience to wait.

This is only the initial stage of accelerated competition, but it is also a crucial stage. Or rather, every stage is crucial.