Zhipu AI releases AI assistant, helping humans knock on the door to AGI

11/01 2024

John McCarthy, the father of artificial intelligence, once said, "As soon as it works, no one calls it AI anymore." Today, this prophecy is gradually becoming a reality.

On October 25, Zhipu AI launched AutoGLM, an autonomous agent capable of simulating human operation of mobile phones to perform various tasks.

At the same time, Zhipu AI introduced GLM-4-Voice, an end-to-end emotional speech model that can understand emotion, express it in a highly human-like way, and produce speech at different speeds and in multiple languages.

Image source: Zhipu official website

The launch immediately stirred the capital market, and related concept stocks continued to strengthen: shares tied to AI video, AI education, AI companionship, and similar themes were warmly received by investors.

With the support of technological progress and capital enthusiasm, is the era of "personal AI assistants" finally arriving?

The concept of AI assistants is hot again, but why is this time different?

When talking about AI assistants, Siri cannot be overlooked: it was the first AI assistant most people encountered.

When Apple first introduced Siri, Steve Jobs said, "Siri belongs to artificial intelligence, not search." After Jobs' death, however, Siri came to position itself more as a search engine with voice functionality than as an AI assistant. That drift not only marked the end of Jobs' grand vision but also represented the failure of the mobile internet era's attempts at AI assistants.

As we enter the era of AI, ChatGPT's emergence reignited people's fantasies about AI assistants. In April 2023, AutoGPT was launched, claiming to perform tasks autonomously without any user intervention. At that time, Andrej Karpathy, former AI director at Tesla and co-founder of OpenAI, described AutoGPT as "the next frontier in prompt engineering." Some even predicted that AutoGPT would replace ChatGPT.

However, because the underlying model's reasoning capabilities were insufficient, AutoGPT ultimately could not recognize and operate screens on its own. Like countless other conversational large models, it stayed trapped in a question-and-answer bubble, lacking both the permission and the ability to operate autonomously, until the emergence of Zhipu AI's AutoGLM.

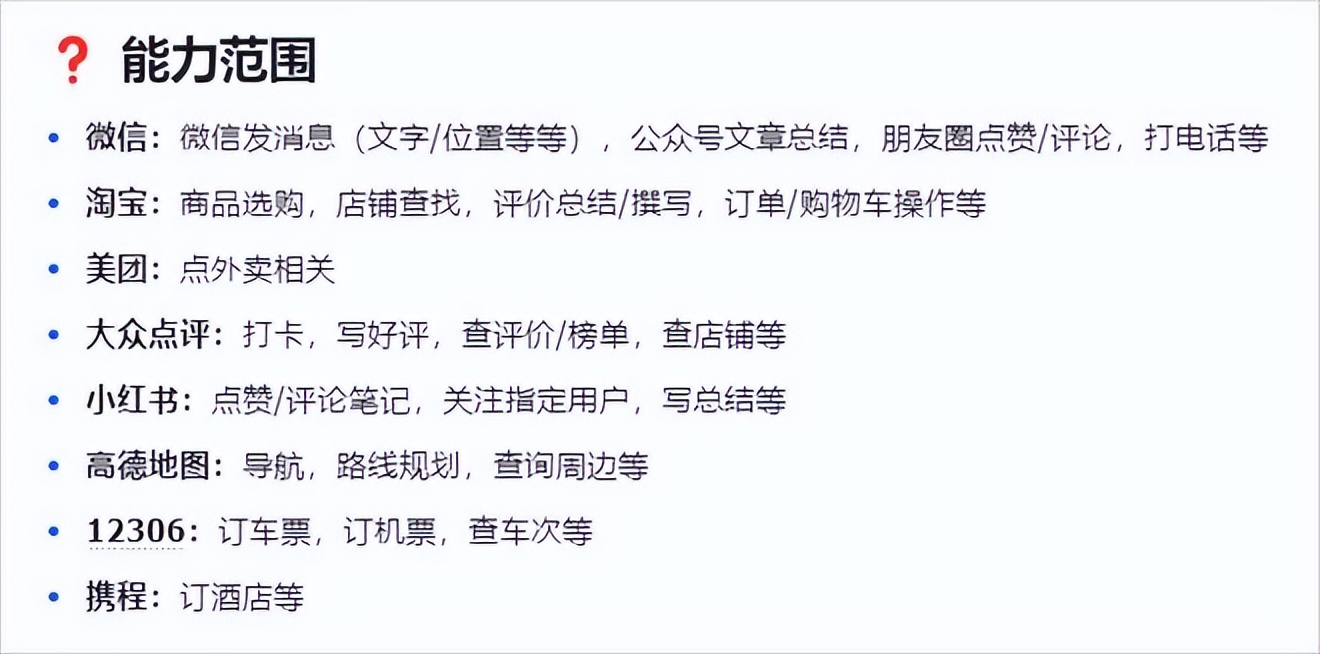

Based on footage shared by review bloggers, Zhipu's AutoGLM can accurately recognize and understand user instructions. It does not need users to demonstrate operations manually, and it is not limited to simple task scenarios or API calls. It can operate electronic devices on the user's behalf, automatically completing everyday tasks such as ordering takeout, editing comments, shopping on Taobao, liking posts on WeChat Moments, and summarizing articles into abstracts.

Image source: Digital Life Cazic

Moreover, unlike other language models and AI assistants, AutoGLM has a degree of self-correction ability. According to the Zhipu AI team, AutoGLM builds on WebRL, a self-evolving online curriculum reinforcement learning framework, to overcome challenges in web agent research and application such as scarce training tasks, sparse feedback signals, and policy distribution drift. As a result, it can keep improving and steadily raise its performance over successive iterations.

In Zhipu AI's view, AutoGLM could in theory eventually complete any task that humans perform on electronic devices. Given a simple voice command, it can understand the user's intent, automatically invoke tools, and operate a mobile phone with human-like logic to accomplish various tasks.

Zhipu AI refers to this as the "phone use" ability. In the company's telling, "phone use" opens up new room for imagination in mobile applications and lets AI technology truly benefit millions of households.

From this perspective, the emergence of AutoGLM is a milestone. It signifies that in the entire AI revolution, AI will no longer be confined within chat boxes but will truly take over devices in people's hands. The seemingly unattainable AGI is now within reach.

The more open, the smarter: How far are we from having a personal "Jarvis"?

In Marvel's "Iron Man," Tony Stark's AI assistant "Jarvis" seems omnipotent. By contrast, most AI assistants on the market so far have looked like little more than "more specialized" ChatGPTs for vertical fields, and most people still hesitate to entrust core work to them.

What limits their development?

The core reason lies in insufficient model capabilities. In the words of Zhang Peng, CEO of Zhipu AI, early language models represented by the first-generation GPT had "insignificant training advantages."

It was not until the emergence of powerful reasoning models such as OpenAI's o1 and Anthropic's Claude 3.5 that large-model applications moved from the era of simple dialogue generation to the era of agents executing complex multi-step tasks.

Earlier large models placed more emphasis on interaction, while offering some functions in specific domains such as images and video to achieve multimodal interaction. Today, large models focus more on understanding and integration, that is, on agent capabilities. This requires a model to combine independent thinking, tool invocation, and goal completion, adding workflows for planning, memory, and summarization on top of the original model. Only when these abilities improve across the board can AI assistants become more general-purpose and genuinely work their way into users' daily work and life.
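The workflow described above (planning, tool invocation, memory, summarization) can be sketched in a few lines. This is a toy illustration only, not Zhipu AI's implementation: the `Agent` class, the `open_app` and `tap` tools, and the hard-coded planner are all hypothetical stand-ins. A real agent would have a model generate the plan and would put real device actions behind the tools.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


# Hypothetical tools: stand-ins for real device actions.
def open_app(name: str) -> str:
    return f"opened {name}"


def tap(target: str) -> str:
    return f"tapped {target}"


TOOLS: dict[str, Callable[[str], str]] = {"open_app": open_app, "tap": tap}


@dataclass
class Agent:
    # Memory: a running log of what each step produced.
    memory: list[str] = field(default_factory=list)

    def plan(self, goal: str) -> list[tuple[str, str]]:
        # Stub planner (assumption): a real agent would ask a model
        # to break the goal into tool calls.
        if goal == "order coffee":
            return [
                ("open_app", "delivery app"),
                ("tap", "coffee"),
                ("tap", "checkout"),
            ]
        return []

    def run(self, goal: str) -> str:
        for tool_name, arg in self.plan(goal):
            result = TOOLS[tool_name](arg)  # tool invocation
            self.memory.append(result)      # remember the outcome
        return self.summarize(goal)

    def summarize(self, goal: str) -> str:
        # Summarization: report back what was done for this goal.
        if not self.memory:
            return f"{goal}: no steps executed"
        return f"{goal}: " + "; ".join(self.memory)


if __name__ == "__main__":
    print(Agent().run("order coffee"))
```

The point of the sketch is the division of labor: the planner decides what to do, the tools do it, and memory plus summarization let the agent report on a multi-step task rather than answer a single question.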

Meanwhile, according to Gartner, 21% of enterprises had already integrated AI assistants into production as of January 2024, and over 80% of enterprises are predicted to have adopted them by 2026. McKinsey predicts that by 2030 generative AI will contribute $7 trillion to the global economy, with China accounting for about one-third of the total benefit.

This shows that the demand for AI assistants remains robust, and the industry is actively deploying and exploring this field, striving to increase supply. Examples include OpenAI's Agent, Alibaba's Mobile Agent, Tencent's App Agent, ByteDance's Doubao, Honor's MagicOS 9.0 operating system, and Apple's Apple Intelligence.

From this perspective, as the penetration rate of AI assistants increases, AI will ultimately become a fundamental production factor accessible to everyone. At that time, all superstructures will be reconstructed, leading to radical changes in human collaboration, organization, and business models in work and life.

The "proxy war" among tech giants: How to overcome the biggest obstacle on the path to AGI?

In fact, it is not only Zhipu AI: tech giants across the AI industry have pivoted toward strengthening their AI assistant products.

As early as 2023, Microsoft began exploring the integration of Copilot with its office software. In October this year, Microsoft followed up with 10 autonomous AI assistants integrated into Dynamics 365, capable of automatically executing highly complex cross-platform business operations and helping enterprises save time and operating costs.

Google, Meta, and OpenAI have also recently been testing similar AI assistant products and are beginning to compete in this market. Why have tech giants eased off their competition over model parameters and API prices and instead accelerated their push into AI assistants?

The core reason relates to commercialization:

Currently, top-tier AI large models continue to burn money at a rate of tens of billions of dollars annually. OpenAI predicts that from 2023 to 2028, the company will incur losses of $44 billion. Such a huge investment yields only about $3.4 billion in annual revenue.

This year, many large-model companies have been on the brink of being absorbed or going under: Character.AI's founders and technology went to Google through a licensing and hiring deal; AI unicorn Inflection AI saw its founders and most of its staff move to Microsoft under a similar arrangement; Stability AI incurred a quarterly loss of over $30 million and was at one point rumored to be up for sale.

Previously, after returning from a Silicon Valley exchange, "Red Shirt Uncle" Zhou Hongyi exclaimed, "No one in Silicon Valley is selling large models anymore; everyone is selling products." When startup companies in Silicon Valley have started considering launching products for profit, it is evident how crucial profitability is for AI companies. After all, as the bubble gradually deflates, both investors and users will ultimately focus on whether their investment is worth it.

Regarding this commercialization issue, Zhipu AI has provided its own answer: "a full product matrix."

Since 2022, following the birth of GLM-130B, a pretrained model with 130 billion parameters, Zhipu AI has been building out its commercial strategy on top of this hundred-billion-parameter foundation model.

Today, from the underlying general-purpose model, to compatibility with Chinese-made computing chips, to multimodal generation and AI agents, Zhipu has established a closed loop of technology and commercialization across the whole AI industry chain, and with it the most comprehensive product matrix among Chinese large-model companies. This allows more users and enterprises to find suitable products and services on the Zhipu platform.

Since 2023, Zhipu AI has collaborated with over 2,000 enterprises, including leading enterprises in various fields such as consumption, manufacturing, gaming, healthcare, education, culture, and tourism, providing personalized applications for the consumer end while offering cost-reduction and efficiency-enhancement solutions for the business end.

Image source: Zhidongxi

Previously, a Boyan Research report indicated that by the end of 2022, the global AI assistant market had reached approximately $45 billion and was expected to exceed $120 billion by 2027, with a compound annual growth rate of 22%.
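As a quick sanity check on these figures, compounding $45 billion at 22% over the five years from 2022 to 2027 can be worked out directly. The sketch below assumes simple annual compounding from the end of 2022; the function names are illustrative.

```python
def project(value: float, rate: float, years: int) -> float:
    """Compound `value` at annual growth `rate` for `years` years."""
    return value * (1 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


# Figures from the report cited above, in billions of US dollars.
projected_2027 = project(45.0, 0.22, 5)
rate_to_120 = implied_cagr(45.0, 120.0, 5)

print(f"2027 market at 22% CAGR: ${projected_2027:.1f}B")
print(f"CAGR implied by reaching $120B: {rate_to_120:.1%}")
```

Compounding at 22% gives roughly $121.6 billion, which matches the report's "exceed $120 billion"; landing exactly on $120 billion would imply a rate of about 21.7%.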

From this perspective, facing such broad market prospects, Zhipu AI may have provided a pathway for all AI enterprises. The comprehensive promotion of AI assistants at the industrial level can generate revenue for enterprises while giving investors the motivation to continue investing, allowing the beautiful story of AI to continue.

Source: Hong Kong Stocks Research Community