Breaking Free from 'Monkey Driving': The Real-World Testing of Ideal VLA

08/13 2025

Thinking and Deciding

Author | Wang Lei

Editor | Qin Zhangyong

Many may not have noticed, but Ideal, a brand known for its extended-range vehicles, is undergoing a profound transformation in its brand essence.

The recent launch of the Ideal i8 marks the company's strategic move into the mainstream pure electric vehicle market, where it faces stiff competition from rival flagship models.

In addition to the product experience brought by the Ideal L series and innovations in pure electric technology, the i8 also relies on a key technological breakthrough: the VLA (Vision-Language-Action) Driver Large Model.

Compared to smart cockpits, assisted driving technology evolves rapidly, with frequent shifts in direction. A slight oversight can lead to a misstep. Last year, Tesla's end-to-end large model revolution ushered in the era of AI large models for intelligent driving.

However, as time passed, the shortcomings of end-to-end AI large models became increasingly apparent. Essentially, they rely on imitation learning, knowing how to perform a driving behavior but not why. In other words, they cannot handle scenarios they have not encountered before.

In contrast, the essence of the VLA Driver Large Model lies in reinforcement learning, which gives it the ability to think independently, much like a brain capable of reasoning.

On the eve of the Ideal i8's launch, the Super Electric Lab tried the Ideal VLA Driver Large Model in depth at Ideal Automobile's headquarters. In terms of decision logic, it can accomplish many actions that end-to-end large models cannot, with significantly improved safety and smoothness.

We also had an in-depth discussion with Dr. Lang Xianpeng, Senior Vice President of Autonomous Driving Research and Development at Ideal Automobile, and the core R&D team members of the VLA Driver Large Model, about the challenges and breakthroughs of VLA.

One clear impression is that Ideal's approach to assisted driving may be the deepest and fastest-implemented in the industry.

01 Overcoming the 'Monkey Driving' Dilemma

First, it's essential to understand what the Ideal VLA Driver Large Model is.

Currently, the best-known and most mainstream approach on the market is the end-to-end large model, which takes sensor input at one end and outputs driving actions at the other. The more 'data' you provide, the better the trained model performs.

'However, after reaching 10 million clips, we discovered a problem: increasing the amount of data alone is useless; valuable data becomes increasingly scarce,' said Lang Xianpeng, head of Ideal's assisted driving team.

The analogy is easy to understand. End-to-end + VLM is akin to 'Monkey Driving': it acts by copying what it has seen. When a monkey watches 10 million videos of humans driving, it can learn to imitate human actions, such as when to turn the steering wheel or apply the brakes.

Relying on this kind of 'imitation,' Ideal Automobile was able to increase the average takeover mileage for assisted driving from 12 kilometers to 120 kilometers within just seven months.
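To put that jump in perspective, here is a minimal arithmetic sketch. It uses only the two figures quoted above and assumes, purely for illustration, that the improvement compounded at a steady monthly rate; the article does not say how the gains were actually distributed over the seven months.

```python
# Figures quoted in the article: 12 km -> 120 km in seven months.
START_KM = 12.0
END_KM = 120.0
MONTHS = 7


def overall_factor(start_km: float, end_km: float) -> float:
    """How many times longer the average takeover mileage became."""
    return end_km / start_km


def monthly_growth_rate(start_km: float, end_km: float, months: int) -> float:
    """Constant monthly rate that would compound from start to end.

    Steady compounding is an assumption made for illustration only.
    """
    return (end_km / start_km) ** (1.0 / months) - 1.0


factor = overall_factor(START_KM, END_KM)
rate = monthly_growth_rate(START_KM, END_KM, MONTHS)
print(f"overall improvement: {factor:.0f}x")
print(f"implied steady monthly growth: {rate:.1%}")
```

Under that assumption, a tenfold gain in seven months corresponds to roughly 39% growth per month, which helps explain why a later slowdown was so noticeable.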

However, the problem is that the 'monkey' doesn't understand why it's performing these actions. It acts out of habit but lacks understanding. This leads to a slowdown in performance improvement when the amount of valuable data decreases.

The root cause is that current end-to-end imitation learning lacks deep logical thinking capabilities. The 'monkey' only knows rigid, fixed behaviors. For instance, when encountering an unfamiliar corner or a pedestrian darting out from under a dark bridge, the 'monkey's' brain shuts down, unable to handle driving behaviors that violate common sense.

This is where the VLA Driver Large Model comes in.

The transition from end-to-end + VLM to VLA essentially shifts from imitation learning to reinforcement learning, using generated data combined with a simulation environment to train the model. Through inputs from various sensors or navigation information, the model gains a specific perception of space, which is what the 'V' represents.

Then, it processes and interprets this perceived space, translating, compressing, and encoding it into a language that the large model can 'understand,' much as a human would. This is the 'L' in VLA.

The 'A' generates behavioral strategies based on the scene encoding of the 'L,' determining how the model should drive the car. The key here is the language component, which acts like a brain capable of thinking and reasoning.
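The V, L, and A stages described above can be pictured with a toy sketch. Everything here is hypothetical: the `Perception` and `Action` fields, the token format, the `SPEED_STEP_KMH` constant, and the hand-written rules in `decide` are illustrative stand-ins, not Ideal's model, which is a learned network rather than a set of if-statements.

```python
from dataclasses import dataclass
from typing import List, Optional

SPEED_STEP_KMH = 7.0  # hypothetical adjustment step, not a value from Ideal


@dataclass
class Perception:
    """'V': a toy snapshot of what the sensors report (hypothetical fields)."""
    speed_kmh: float
    speed_limit_kmh: float
    pedestrian_ahead: bool


@dataclass
class Action:
    """'A': a toy driving command (hypothetical fields)."""
    target_speed_kmh: float
    reason: str


def encode_scene(p: Perception) -> List[str]:
    """'L': compress the perceived scene into language-like tokens."""
    tokens = [f"speed={p.speed_kmh:.0f}", f"limit={p.speed_limit_kmh:.0f}"]
    if p.pedestrian_ahead:
        tokens.append("pedestrian_ahead")
    return tokens


def decide(tokens: List[str], instruction: Optional[str] = None) -> Action:
    """'A': turn the encoded scene plus an optional spoken instruction into an action."""
    facts = dict(t.split("=", 1) for t in tokens if "=" in t)
    speed = float(facts["speed"])
    limit = float(facts["limit"])
    if "pedestrian_ahead" in tokens:  # safety takes priority over instructions
        return Action(0.0, "yield to pedestrian")
    if instruction == "speed up":
        return Action(min(speed + SPEED_STEP_KMH, limit), "driver asked to speed up")
    if instruction == "slow down":
        return Action(max(speed - SPEED_STEP_KMH, 0.0), "driver asked to slow down")
    return Action(speed, "keep current speed")


scene = Perception(speed_kmh=63, speed_limit_kmh=80, pedestrian_ahead=False)
action = decide(encode_scene(scene), instruction="speed up")
print(action)
```

The point of the sketch is the data flow: because the scene passes through a language-like representation, a spoken instruction can enter the same decision step as the scene description.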

One notable result of this chain is the 'command-and-obey' capability: you can communicate with the car directly in natural language, telling it to speed up, turn left, turn right, and so on.

02 The Real-World Experience with VLA

The Super Electric Lab tried the Ideal i8's VLA Driver Model first-hand.

Although the test drive was short, it covered a rich set of scenarios. You can use voice commands to control the vehicle's driving behavior.

When driving normally, you can say, 'Ideal, speed up,' and the Ideal assistant in the car will respond, 'Received, I will increase the speed.'

You can clearly feel the vehicle accelerating, with the speed on the dashboard increasing from 63 km/h to 70 km/h.

You don't even need to specify how much to accelerate or decelerate; it chooses an appropriate amount based on the surrounding road conditions. For example, when you ask Ideal to slow down on a stretch of road under construction, it reduces the speed from 40 km/h to 35 km/h.

In addition, you can use voice commands to complete maneuvers such as pulling over, moving to a specified spot, and turning left or right.

When you need to temporarily park, you can call Ideal and issue a command to pull over. It will immediately respond, 'Okay, pulling over,' and then choose to slowly decelerate without abruptly braking due to the sudden command. You can see on the central control screen that it reduced the speed from 29 km/h to 0 over a distance of about 30 meters and completed the pull-over.
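A quick back-of-the-envelope check shows why this stop feels gentle. The sketch below takes the two figures read off the screen (29 km/h, about 30 meters) and assumes constant deceleration, which is a simplification; the real speed profile was not recorded.

```python
def kmh_to_ms(speed_kmh: float) -> float:
    """Convert km/h to m/s."""
    return speed_kmh / 3.6


def constant_deceleration(v0_kmh: float, distance_m: float):
    """Return (deceleration in m/s^2, stopping time in s).

    Assumes the car slows at a constant rate from v0 to a standstill,
    so v0^2 = 2 * a * d and t = 2 * d / v0.
    """
    v0 = kmh_to_ms(v0_kmh)
    decel = v0 ** 2 / (2.0 * distance_m)
    duration = 2.0 * distance_m / v0
    return decel, duration


decel, duration = constant_deceleration(29.0, 30.0)
print(f"average deceleration: {decel:.2f} m/s^2")
print(f"time to stop: {duration:.1f} s")
```

Under these assumptions the average deceleration is a little over 1 m/s², or roughly a tenth of gravitational acceleration, spread over about seven seconds.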

Moreover, it decelerates while slowly moving towards the roadside, aligning well with human driving habits. After parking, Ideal will say, 'Parking completed,' and then let you take over the vehicle.

If you feel that temporary parking in that spot is inconvenient, you can tell Ideal, 'Drive forward 20 meters,' and it will execute accordingly, moving exactly 20 meters forward. After stopping, it will also prompt that parking is completed and remind you to take over the vehicle.

If you have taken over the vehicle after pulling over and want to continue, you can issue the 'Continue driving' command. It responds with no perceptible delay, observes the road conditions, and quickly merges into the main lane.

It is not limited to temporary stops; it can also park at a specific location. You can describe a landmark ahead, and it will understand and park next to it. For instance, given the command 'Park next to the red tricycle ahead,' it fully comprehends the request. Similarly, if you need to pick someone up, you can simply use that person as the landmark.

Lane-changing operations on the road can also be directly controlled by voice commands. Simply tell Ideal to change lanes to the left or right, and it will quickly recognize and execute the command.

With the support of deep thinking capabilities, the VLA Driver not only possesses the 'command-and-obey' ability but also deep memory capabilities. For instance, when driving on a familiar road, Ideal may say, 'You used to drive at this speed on this road; I'll adjust to that speed now.'

It remembers your preferences and choices, unlike systems from other automakers that rigidly follow the speed limits in map data. For example, if a road has a speed limit of 60 km/h but you always drive at 75 km/h, the VLA large model will remember this on its own, even though it exceeds the speed limit on that road.

If you feel like you were driving too slowly on that road before, you can give it a new command.

When approaching an intersection with quite a few pedestrians who are not keeping to the zebra crossing markings, Ideal smoothly stops the car in front of the crossing and waits for them to pass. It even negotiates briefly with the pedestrians before choosing to yield.

When passing through the intersection, you can see that it avoids pedestrians and maintains a safe distance. The stopping and starting are also very smooth. After the pedestrians pass, it quickly moves through the intersection.

It also handles interactions with oncoming vehicles very well, first making a judgment and then quickly adjusting its direction to pass. After passing the oncoming vehicle, it quickly returns to its own lane, which is quite human-like.

U-turns are also handled smoothly. It uses a three-point turn, which feels stable and reassuring to the driver. When making large steering adjustments, it does not hesitate, completing the U-turn within a few seconds.

The Ideal VLA can do more than that. In the Ideal headquarters park, the driverless Ideal i8 VLA 'Shuttle Bus' can receive complex instructions such as 'From Area A to Starbucks, then to the charging station, and finally to the underground garage in Area C,' and navigate the entire route autonomously. On narrow roads, it passes with its rearview mirrors almost touching the wall. In the underground garage, it can follow text navigation and even park itself at the 5C supercharging station to wait for charging.

03 The Reason Behind Ideal's Quick Implementation of VLA

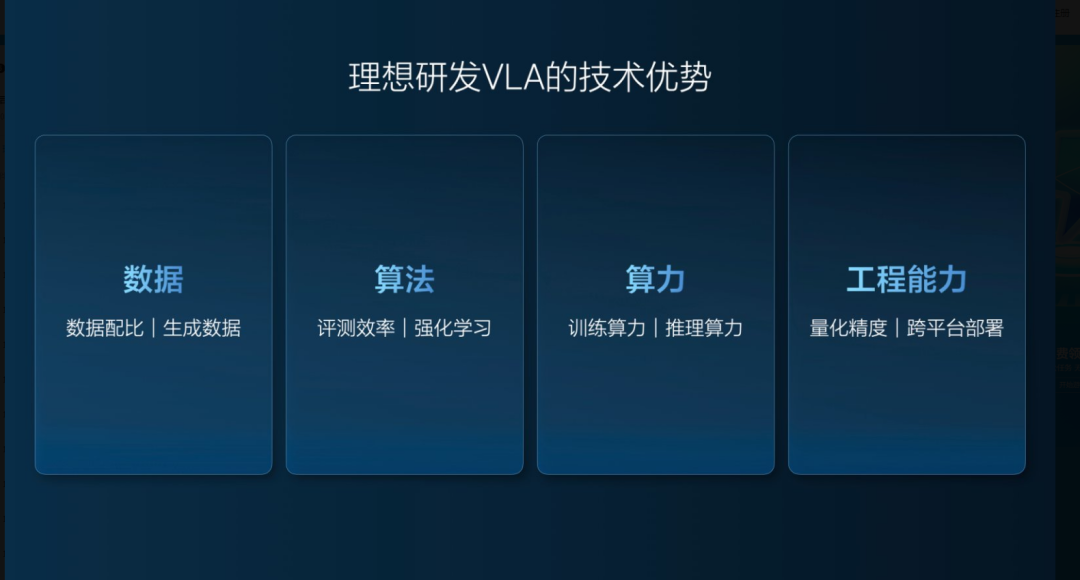

In Lang Xianpeng's view, this is not the result of luck but the culmination of accumulated strength in four dimensions: data, algorithms, computing power, and engineering.

He explained that while the data and algorithms of VLA may differ from the past, they still build upon previous foundations.

Without a complete closed loop of data collected from actual vehicles, there would be no data to train the world model. 'The reason Ideal Automobile can implement the VLA model is that we have 1.2 billion kilometers of data. Only by fully understanding these data can we better generate data,' said Lang Xianpeng.

Moreover, when traditional real-vehicle data can no longer provide more improvements, Ideal has introduced simulation data on a large scale. Think of it as an infinitely realistic driving simulator tailored for AI drivers. In this virtual world, AI no longer simply imitates but explores and learns through trial and error.
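The difference between imitating and learning by trial and error can be shown with a deliberately tiny sketch. It is not Ideal's training method: the three scenario names, the reward values in `simulator_reward`, and the learning settings are all hypothetical, and the learner is a one-step tabular value update (a contextual-bandit simplification of reinforcement learning) rather than a large model.

```python
import random

STATES = ["clear_road", "car_ahead", "pedestrian_under_bridge"]
ACTIONS = ["keep_speed", "brake"]


def simulator_reward(state: str, action: str) -> float:
    """Hypothetical simulator feedback: reward safe, efficient choices."""
    if state == "clear_road":
        return 1.0 if action == "keep_speed" else -1.0
    return 1.0 if action == "brake" else -10.0


# Imitation: copy logged human actions. The rare scenario was never recorded.
demonstrations = {"clear_road": "keep_speed", "car_ahead": "brake"}


def imitation_policy(state: str):
    """Return the logged action, or None when the scenario was never seen."""
    return demonstrations.get(state)


def train_by_trial_and_error(episodes=2000, alpha=0.5, epsilon=0.2, seed=0):
    """Learn action values by trying actions in the simulator."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(episodes):
        state = rng.choice(STATES)  # the simulator can generate any scenario
        if rng.random() < epsilon:  # explore occasionally
            action = rng.choice(ACTIONS)
        else:  # otherwise exploit the current estimate
            action = max(ACTIONS, key=lambda a: q[(state, a)])
        reward = simulator_reward(state, action)
        q[(state, action)] += alpha * (reward - q[(state, action)])
    return q


def learned_policy(q, state: str) -> str:
    """Pick the action with the highest learned value."""
    return max(ACTIONS, key=lambda a: q[(state, a)])


q_values = train_by_trial_and_error()
rare = "pedestrian_under_bridge"
print("imitation:", imitation_policy(rare))
print("trial and error:", learned_policy(q_values, rare))
```

The imitation policy has nothing to say about the scenario missing from its logs, while the simulator-trained policy has already made, and been penalized for, the wrong choice there.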

Lang Xianpeng expressed great confidence in the effectiveness of simulation data during the interview, stating, 'Our current simulation results are fully comparable to real-vehicle testing.'

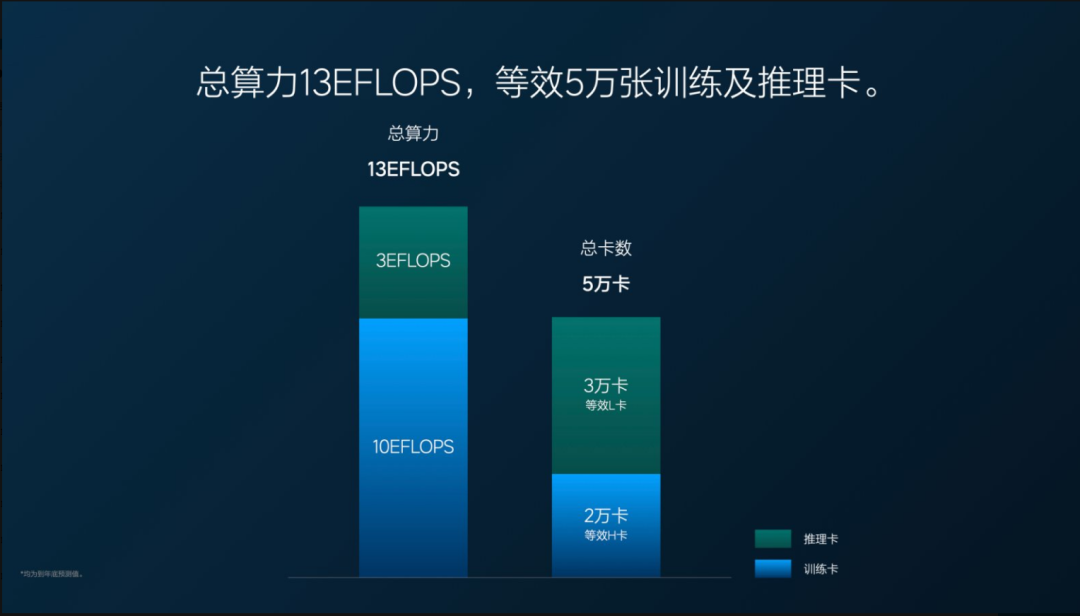

Another aspect is algorithms and computing power. Lang Xianpeng said that Ideal currently has a computing platform totaling 13 EFLOPS, with 10 EFLOPS allocated to training and 3 EFLOPS to inference. According to internal estimates at Ideal, its current inference resources are equivalent to 30,000 NVIDIA L20 inference cards.
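The quoted figures can be cross-checked with simple arithmetic. The sketch below uses only the numbers from the interview; the per-card value it derives is what the quote implies, not an official hardware specification, since the numeric precision behind the EFLOPS figures is not stated.

```python
# Figures quoted in the interview.
TRAINING_EFLOPS = 10
INFERENCE_EFLOPS = 3
EQUIVALENT_CARDS = 30_000

FLOPS_PER_EFLOPS = 1e18
FLOPS_PER_TFLOPS = 1e12

total_eflops = TRAINING_EFLOPS + INFERENCE_EFLOPS

# Throughput per card implied by "3 EFLOPS is equivalent to 30,000 cards".
per_card_tflops = (
    INFERENCE_EFLOPS * FLOPS_PER_EFLOPS / EQUIVALENT_CARDS / FLOPS_PER_TFLOPS
)

inference_share = INFERENCE_EFLOPS / total_eflops

print(f"total platform: {total_eflops} EFLOPS")
print(f"implied per-card throughput: {per_card_tflops:.0f} TFLOPS")
print(f"share devoted to inference: {inference_share:.0%}")
```

The split adds up to the stated 13 EFLOPS, and the equivalence implies about 100 TFLOPS per card, with a little under a quarter of the platform reserved for inference.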

Computing power is also the foundation that supports massive amounts of data. In VLA's reinforcement learning, the simulation training environment cannot be generated without inference cards, so large-scale training would be impossible.

If data, computing power, and algorithms are soft power, then what turns that soft power into a product is engineering capability. After all, no matter how well a model is trained, it is useless if it cannot be deployed to chips and vehicles.

Keep in mind that the current VLA large model is only the first generation. With time and iteration, it may not be long before assisted driving truly sheds its 'assisted' label and becomes the norm.