$1 Billion a Year! Apple Enlists Gemini as External Support: Will Siri's Experience Be Rejuvenated?

11/07 2025

Is Apple bringing in Gemini, one of the top-performing models, to tutor Siri?

In the current wave of large AI models, Apple is clearly a latecomer.

On November 4, Apple officially announced Traditional Chinese support for Apple Intelligence. Over the past two years, however, Siri's 'generative AI transformation' has become something of an industry joke. From the 'imminent upgrade' promised at WWDC24 to this year's promise of a 'launch next year,' Apple's plan to rebuild Siri around a large model has been delayed again and again.

Although Apple Intelligence has steadily rolled out features over the past year and expanded to more languages (including Simplified Chinese) and markets, its feature set and performance still leave much to be desired.

Just as many were losing patience with Siri, a new report added a twist. According to Bloomberg's Mark Gurman, Apple is in the final stages of negotiating a multi-year agreement with Google. Under the deal, Apple would pay approximately $1 billion a year to use Google's Gemini large model.

Image Source: Google

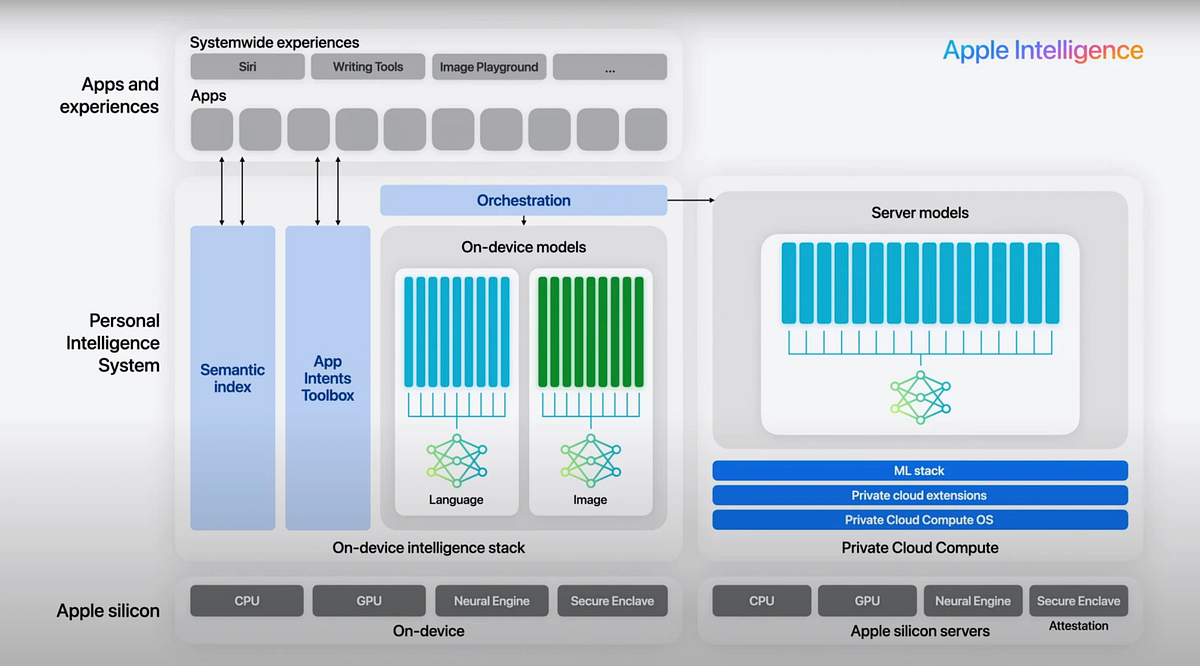

Unlike the earlier optional ChatGPT integration in certain features, the Gemini deal would mean that Apple, for the first time, connects an external large model directly to the cloud inference layer of Siri and Apple Intelligence. This would finally let Siri do what other mobile AI assistants have been doing for more than a year.

If the deal materializes, choosing Gemini would be a stopgap for Apple, but also an opportunity for its large-model team to catch up. Still, this is unlikely to be a simple technology licensing deal. $1 billion is not an exorbitant sum for Apple; the real question is what Apple is actually buying.

Can Gemini Revitalize Apple Intelligence?

Over the past year, Google has made a remarkable comeback in large models. Gemini 2.5 Pro brought strong reasoning and Flash brought cost-effectiveness. Veo 3 took the lead in video generation, and Nano Banana had a disruptive impact on AI image generation and editing. Together, these show that Google firmly belongs in the top tier of AI. Apple choosing Gemini, then, comes as no surprise.

What is surprising, however, is that according to reports from Bloomberg, Reuters, and other media outlets, the core of the cooperation between Apple and Google is not to add an optional answer source for Siri but to make Gemini the cloud foundation for Apple Intelligence.

Specifically, when users make complex requests on their iPhones, such as generating images or summarizing long documents, the system may call a 1.2 trillion-parameter Gemini model in the cloud and run inference on Apple's Private Cloud Compute (PCC) servers.

In essence, Gemini will no longer function as an external plugin but will serve as an embedded 'brain' at the foundational level. This transformation not only has the potential to completely address Siri's shortcomings but may also redefine the relationship between Apple and Google.

Image generated and edited by Gemini from Apple source material. Image Source: Leitech

For users, this could be a seamless upgrade. Invoking ChatGPT was more of an add-on that users had to actively select and confirm. If Gemini becomes the default cloud inference layer, the AI experience would become more integrated and unified. Voice generation, image summarization, and cross-app tasks alike, along with Siri itself, could all follow consistent logic, genuinely transforming the user experience.

More importantly, all of this would still take place within Apple's own Private Cloud Compute (PCC) environment, keeping user data under Apple's control. In other words, Apple aims to 'borrow the brain without handing over the heart,' preserving both its privacy commitments and the narrative built around them.

At the same time, after more than a year in users' hands, Apple Intelligence's in-house on-device model has proven adequate for everyday tasks. For more complex content generation and multimodal understanding, however, it remains limited by model size and training depth. Adding Gemini could quickly let Siri handle much more complex requests, giving Apple a better experience without waiting for its own cloud model to mature.

In a sense, this stops the bleeding, protects Apple's reputation, and buys its large-model team some breathing room for research and development.

For Google, of course, this is a major expansion opportunity. Even Samsung, which uses Gemini as the underlying model on its devices, has a far smaller installed base than Apple. It also has far fewer users able to experience the full AI feature set. Beyond iPhones, Apple has a vast base of iPad and Mac users as well. Compared with the reported $1 billion annual fee, a 'long-term seat' in Apple's ecosystem is the real prize.

This development, however, has no direct impact on Apple users in mainland China. According to earlier media reports, Apple plans to use Baidu's Wenxin (ERNIE) model in the Chinese market and may also work with Alibaba Cloud. Unfortunately, the timeline for bringing Apple Intelligence to China remains uncertain. Tim Cook's public line is still that Apple is 'working hard to enter the Chinese market.'

Nevertheless, if this 'Gemini Plan' comes to fruition, its significance will extend beyond Siri. Unlike the partial ChatGPT integration, it would be Apple's first real call for outside help on the generative AI battlefield. It would also signal that the iPhone's AI experience may finally take a genuine leap forward.

But if this step is taken too hastily, one has to ask: how much of Apple's intelligence will be 'homegrown' in the future?

Apple Intelligence Needs to Strengthen Itself

$1 billion is a trivial sum for both Google and Apple. For comparison, Google pays Apple about $20 billion a year to be the default search engine on the iPhone. For Apple, money has never been the issue. The real challenge is whether its in-house large models can keep pace in this AI arms race.

Over the past two years, Apple's AI efforts have moved at a 'steady but concerning' pace, and the Siri team has been reorganized several times. According to multiple reports from The Information and other outlets, Apple has seen shifts in direction and disagreements among management. One camp favored gradually improving the existing Siri, while another insisted on rebuilding it from the ground up around a large model. This tug-of-war, combined with the original team's limited development and execution capabilities, has slowed Siri's generative AI transformation.

In July of this year, Apple's machine learning research team published a technical report describing two in-house models. The first is an on-device model of roughly 3 billion parameters, optimized with KV-cache sharing and quantization-aware training. The second is a cloud model with hundreds of billions of parameters, revealed at the end of last year. It uses the now-mainstream Mixture of Experts (MoE) architecture and is designed to tightly integrate its multiple expert pathways with Apple's Private Cloud Compute (PCC) servers, improving inference efficiency and privacy.

PCC Architecture. Image Source: Apple

By comparison, Apple's on-device model has clearly progressed faster and handles basic tasks fairly well. Around WWDC25 this year, Apple also opened it to developers, for the first time making on-device compute and models available to third-party apps, and a growing number of apps now build on it. Apple's cloud model, however, still appears to be a long way from large-scale commercial deployment.

Against this backdrop, bringing in Gemini looks both realistic and urgent. It fills a crucial gap for Apple in complex reasoning and multimodal generation. Strategically, it also fits Apple's usual playbook. Apple first brings in the strongest external capability to stabilize the experience, then gradually replaces it with in-house technology.

As with maps, chips, and camera modules in the past, Apple has often started from mature outside technology and then built its own closed loop.

This time, however, the challenge is unprecedented. OpenAI, Anthropic, and Google are all iterating rapidly, with model scale and ecosystem feedback reinforcing each other in a virtuous cycle. Apple may excel at product definition and integrated experiences. It is unclear, though, how much of an edge it has in large-scale model training and in processing and using data.

In the short term, Gemini may help Siri and Apple Intelligence close the gap where weak AI capabilities hold back Apple's product experience. But will it let Apple seize the moment and catch up with the leading AI devices, or even the leading large models? Only time will tell.

In Conclusion

Ideally, Apple will use the time Gemini buys it to catch up in model development. But we must also acknowledge another possibility: Apple may never catch up with the top tier in cloud large models.

It's not about lacking money or computing power but that the pace of this race no longer aligns with Apple's rhythm. Apple excels at long-term accumulation and experience refinement, not high-frequency iteration and aggressive trial and error.

On the other hand, Apple might one day let go of its insistence on in-house cloud models. It could build its ecosystem and architecture around third-party cloud models and focus more on on-device models, which may not be a bad thing. On the device side, after all, Apple has the most complete chip architecture, the most mature energy-efficiency optimization, and the deepest user trust.

This may be the path for Apple-style AI: not racing for the frontier, but making intelligence reliable, secure, and sustainable.