Meta Expands AI + Smart Glasses Footprint Through Strategic Acquisitions

08/18 2025

By VR Gyro Pea

The AI sector, characterized by surging investment and financing activity, has become a key battleground for technology giants and capital. According to Crunchbase data, global venture funding totaled $114 billion in the first quarter of 2025, up 17% quarter-on-quarter from the $96 billion recorded in the fourth quarter of 2024 and up 54% year-on-year. This was the strongest quarterly performance since the second quarter of 2022.

A notable financing event in the first half came from Meta, which spent $14.3 billion to acquire a 49% stake in Scale AI. The company has since continued its aggressive expansion strategy, recruiting top talent and acquiring firms, creating ripples across the industry. These transactions are poised to significantly impact Meta's AI business and smart glasses product ecosystem.

AI Voice: A Pivotal Move in Meta's Ecosystem Strategy

Over the past two months, Meta's investment activity has focused primarily on AI voice. As large model capabilities advance, AI voice has become more tightly integrated with devices, spawning a wide variety of product forms: AI voice features now appear in AI toys, smart speakers, AI glasses, and more, showing great potential across many application scenarios.

Commenting on Meta's first-quarter 2025 results, CEO Mark Zuckerberg said, "We've made a solid start in an important year, with our community continuing to grow and our business performing exceptionally well. We've made good progress in AI glasses and Meta AI, which now has nearly 1 billion monthly active users."

Meta is betting on audio and video interaction to power the next generation of AI+XR experiences as it competes with rivals such as Google. Here is a snapshot of Meta's moves over the past two months:

Meta first "spent big" to poach Johan Schalkwyk, machine learning lead at AI voice startup Sesame AI, a move speculated to be aimed at improving the voice and personalization features of its Llama large models.

Meta then acquired a stake of approximately 3% in EssilorLuxottica, the world's largest eyewear manufacturer, worth around $3.5 billion at current market prices. The AI smart glasses the two companies co-developed now lead the industry, and the firms will continue to deepen their collaboration in smart glasses.

Meta then invested $16 million to establish an audio laboratory in the UK, focused on advancing audio technology for Meta AR and AI glasses such as Ray-Ban Meta and Oakley Meta. The new audio lab will concentrate on developing intelligent audio that adapts to users and their surroundings, training glasses to detect user focus, enhance desired sounds, and reduce background noise.

Just days later, reports emerged that Meta had completed the acquisition of AI voice startup PlayAI. Meta's memo noted that PlayAI's "work in creating natural-sounding voices and a platform that simplifies voice creation aligns well with our AI characters, Meta AI, wearable devices, and audio content creation efforts and roadmap."

In early August, foreign media revealed that Meta had discussed potential cooperation with AI video startup Pika, including possible acquisitions or technology licensing agreements. Meta had also explored acquisition opportunities with AI video generator Higgsfield, but those negotiations have since been halted.

Meta hopes to revitalize its AI division and overhaul its operating strategy by urgently throwing money at its shortcomings. It has even restructured the division from top to bottom into Meta Superintelligence Labs, dedicated to developing AI systems that surpass human capabilities, an effort Zuckerberg describes as "the dawn of a new era for humanity." The lab's research direction will directly shape the multimodal upgrade of Meta's XR products. It is led by Alexandr Wang, the former CEO of data labeling startup Scale AI.

Meta's AI investments are ultimately aimed at commercialization, and one of its most commercially successful AI products to date is the Ray-Ban Meta smart glasses.

From the user's perspective, functionality is what matters most, and voice interaction is the key to unlocking it. As AI assistants strive for "emotion understanding, human speech comprehension, and faster responses," AI voice is becoming the central interface between users and a device's decision-making. It is therefore logical for Meta to step up its investment in AI voice.

Technological Complementarity: Meta Tackles Smart Glasses' Audio-Video Interaction Challenges

AI Voice

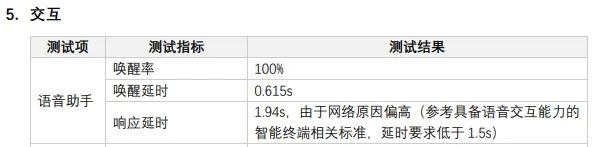

To further improve the experience of its AI smart glasses, Meta must tackle latency and speech synthesis quality, the two aspects of voice interaction that users most readily notice as "unnatural." In interactive voice calls, latency of up to roughly 150-200 milliseconds is barely perceptible to the human ear and keeps the conversation feeling natural; beyond that range, the experience noticeably degrades.

Ray-Ban Meta Wayfarer test report, source: China Academy of Information and Communications Technology (CAICT) Terminal Laboratory

Meta's acquisition of AI voice company PlayAI complements its own speech technology. PlayAI's platform supports more than 30 languages as well as local accents, offers over 200 realistic AI voices, and has cut speech synthesis latency to under 130 milliseconds. It is built on custom low-latency large language models trained on thousands of hours of human speech spanning styles such as podcasts, storytelling, narration, and business conversations. PlayAI's core technical advantage is that developers can build capable voice applications without having to train a model first.

While the financial terms of the acquisition have not been disclosed, insiders estimate the acquisition value to be close to $200 million.

PlayAI's Dialog Text-to-Speech Model

The PlayAI acquisition is a key part of Meta's battle for talent: it improves the reliability and naturalness of Meta AI's voice capabilities and addresses latency. More importantly, it fills a core capability gap in Meta's "AI+XR" strategy, strengthening immersion in its social platform Horizon Worlds, the interactive capabilities of its AI smart glasses, and audio content creation. Sound has clearly become the cornerstone of AI device interaction, and only when voice interaction is sufficiently "human-like" can it support a broader range of use cases.

AI Text-to-Video

Beyond voice interaction, video capability is also crucial to the user experience, and Meta intends to make strides in AI video as well. Foreign media reported that Meta is in advanced negotiations with Pika Labs; a potential deal could involve a partial equity transfer, technology integration, or even bringing Pika's core team into Meta.

Shortly after its founding, Pika went viral with an animation of "Musk flying into space in a spacesuit." It then closed several funding rounds in quick succession, raising more than $130 million in total at a valuation of nearly $500 million. Its core technology generates realistic short videos from text, supports stylized editing, and adds matching sound effects and lip-syncing, lowering the barrier to video creation.

Pika promotional video clip

Pika's user experience and community operations align closely with Meta's vision for AI content platforms, and Meta sees text-to-video as an important part of its superintelligence push.

If Pika's products were integrated into existing Meta projects, such as Creator Assistant and Style Reference (the two AI features launched in the Horizon Worlds desktop editor), they could further boost content creation efficiency and deepen users' sense of immersion. For example, a user could give a voice command to display-equipped AI or AR glasses and have them generate a real-time demonstration video tailored to the scene in front of them.

For Meta, whose business centers on entertainment and social networking, AI audio and video technology is key to dominating short video and virtual spaces. Through this series of acquisitions, Meta AI's multimodal understanding will become better grounded in real-life scenarios, strengthening Meta's technical moat in the AI+XR field.

Advancing Together: EssilorLuxottica Collaborates to Expand the Smart Glasses Ecosystem

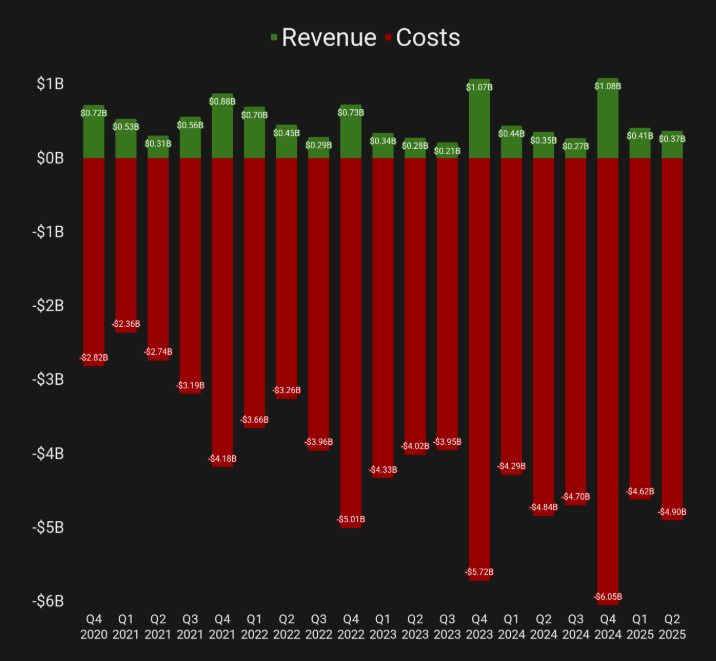

From its flurry of AI voice deals to its deepening tie-up with EssilorLuxottica, Meta is pushing its smart glasses business toward the center of AI interaction devices. In late July, Meta and its partner EssilorLuxottica each released their second-quarter 2025 results, which serve as important benchmarks for their deepening collaboration:

Meta's metaverse division, Reality Labs, reported second-quarter revenue of $370 million, up 5% year-on-year, driven mainly by Ray-Ban Meta smart glasses. The division spent $4.9 billion and posted an operating loss of $4.53 billion, narrower than the $4.8 billion the market had expected. EssilorLuxottica reported second-quarter revenue of 7.18 billion euros (approximately $8.36 billion), up 7.3%, with sales of Ray-Ban Meta AI glasses growing by more than 200% year-on-year in the first half.

As a traditional optical giant, EssilorLuxottica covers the "last mile" of bringing Meta's technology to market with its precision optics, global brand influence, and retail network. This helps the entire smart glasses category penetrate the consumer market and offers a model for the intelligent transformation of traditional manufacturing. The effect is already visible in both companies' recent financial results.

For Meta, Ray-Ban Meta is key evidence that the AI+XR path is viable. Combining PlayAI's AI voice capabilities, EssilorLuxottica's portfolio of brands, and Scale AI's data capabilities, Meta is building an AI-driven chain that runs from the underlying technology layer all the way to end-user devices. With voice as the core entry point for interaction, AI smart glasses are poised to move faster into vertical scenarios such as healthcare, education, and industry.

Bringing AI+XR products to market requires cross-industry collaboration that draws on traditional industries' scenario expertise and sales channels. Meta's current losses and heavy investment are, for now, "necessary costs." Meta's Chief Technology Officer Andrew Bosworth has previewed that Connect 2025, scheduled for the second half of the year, will feature "significant wearable device launches."

That preview likely points to the leaked Meta Celeste (codename Hypernova), which would be Meta's first AI glasses with a display, paired with an electromyography (EMG) wristband codenamed Ceres. If the core Ray-Ban Meta functions of "listening, speaking, and taking photos" gain the ability to "see," Meta will need to invest more heavily in the glasses' audio and video capabilities. Another possible second-half launch is the new Oakley Meta Sphaera smart glasses, developed with EssilorLuxottica.

During Meta's second-quarter 2025 earnings call, CEO Mark Zuckerberg mentioned, "I believe that in the future, if you're not equipped with AI or some kind of glasses that interact with AI, your cognitive disadvantage compared to those you work with or compete against may be quite apparent. So I think this is a very fundamental form factor. It has many different versions. Currently, we're creating some glasses that I think are fashionable, but we're not focusing on the display. I think there are many different things worth exploring on the display front."