ICONIQ 10,000-Word Report Analysis: Navigating Three Pivotal Turning Points to Avoid Being a Runner-Up

07/03 2025

As AI shifts from an "innovative buzzword" to a "mandatory question" for every enterprise, a common misconception has taken hold: that merely investing money and hiring engineers is enough to board this epochal train.

In reality, however, the players at the AI table have already quietly stratified.

Recently, a hefty 67-page report titled "2025 State of AI Report: The Builder's Playbook" swept through the venture capital world. Its impact stems not only from its meticulous data but also from the standing of its publisher, ICONIQ Capital.

So, who exactly is ICONIQ?

In Silicon Valley, ICONIQ is a famous yet deeply mysterious name. It first gained renown for managing the private wealth of tech titans such as Mark Zuckerberg, Sheryl Sandberg, and Jack Dorsey, earning it the label "the exclusive family office of Silicon Valley billionaires." This unique position gives it unparalleled access to the elite networks and visionary thinking at the core of the global tech industry.

Its growth equity arm, ICONIQ Growth, went on to build a star-studded portfolio that includes many companies that have defined their sectors, such as Snowflake, Databricks, Canva, Adyen, and GitLab, among others.

So when an institution with this kind of "far-sighted, high-standing" vantage point speaks out after in-depth research on 300 AI companies, its conclusions go beyond simple trend forecasting and offer the most authentic reading of the current AI landscape.

This report starkly reveals the "second stage" of the AI race: competition is no longer about "whether to do it" but "whether you are doing it right," and the battle is no longer about the resolve to invest but about the depth of understanding.

The report's data serves as a prism, reflecting three pivotal turning points forming in the AI field: the chasm between cost and ROI, the divergence in strategy and culture, and the myth of tools and efficiency. Countless enterprises are engaged in "futile efforts," hovering indecisively before these turning points, ultimately becoming mere runners-up in this era.

What Silicon Rabbit offers here is not a recap of the report but an in-depth analysis of how to get past these turning points.

I: The Abyss of Cost and ROI

The first turning point is the harsh reality on financial statements. As AI moves from the lab to the market, the cost structure spirals out of control, while the return on investment (ROI) remains ambiguous.

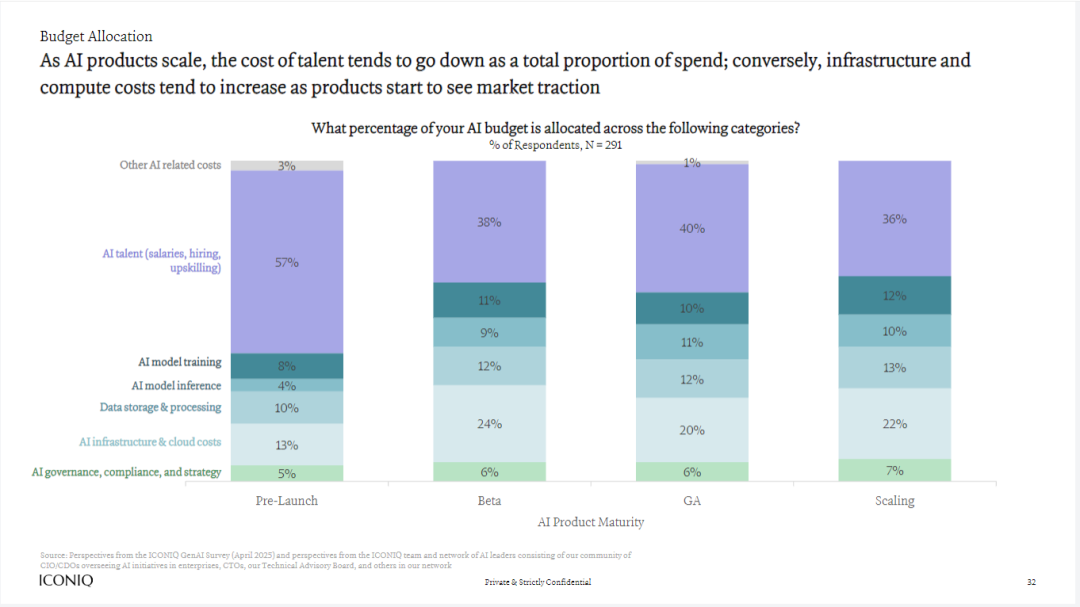

The report points out a fatal shift: During the initial product stage, AI talent costs dominate, accounting for up to 57%. However, once the product enters the "beta testing" and "scaling" stages, the proportion of AI infrastructure and cloud costs surges from 13% to 22%, and model inference costs skyrocket from 4% to 13%. Meanwhile, the proportion of talent costs is diluted to 36%.
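To make the shift concrete, here is a small sketch that takes the cost shares quoted above and computes the percentage-point change in each category, plus the combined share of infrastructure and inference. It uses only the figures cited in this article; the dictionary keys are shorthand labels of our own, and cost categories the article does not quote are simply left out.

```python
# Cost shares (% of AI product spend) as quoted from the ICONIQ report above.
# Categories not quoted in the article are intentionally omitted.
early_stage = {"talent": 57, "infra_cloud": 13, "inference": 4}
scaling_stage = {"talent": 36, "infra_cloud": 22, "inference": 13}


def share_shift(before: dict[str, int], after: dict[str, int]) -> dict[str, int]:
    """Return the percentage-point change for each cost category."""
    return {k: after[k] - before[k] for k in before}


shift = share_shift(early_stage, scaling_stage)
for category, delta in shift.items():
    print(f"{category:12s} {delta:+d} pp")

# Combined "compute" share: cloud/infrastructure plus model inference.
compute_before = early_stage["infra_cloud"] + early_stage["inference"]
compute_after = scaling_stage["infra_cloud"] + scaling_stage["inference"]
print(f"compute share: {compute_before}% -> {compute_after}%")
```

Run as-is, it shows talent falling 21 points while infrastructure and inference each gain 9, so the combined compute share roughly doubles from 17% to 35%.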

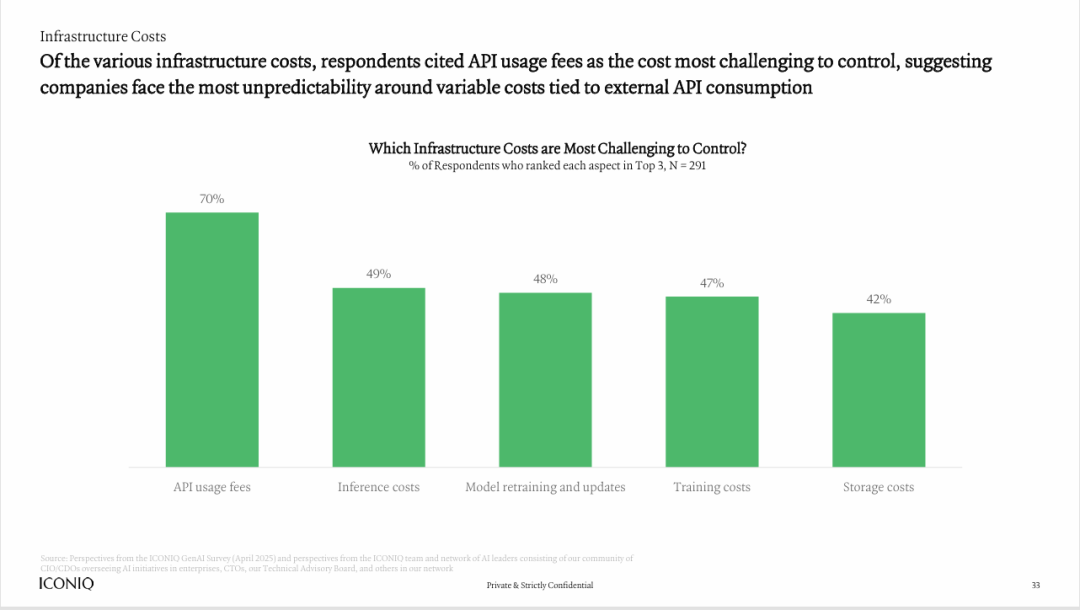

What does this mean? The more users you have, the more frequently your model is invoked, and the thinner your profits become. Even more alarming, 70% of companies believe that third-party API usage fees are the most unpredictable and hardest to control among all infrastructure costs.

[In-depth Interpretation]

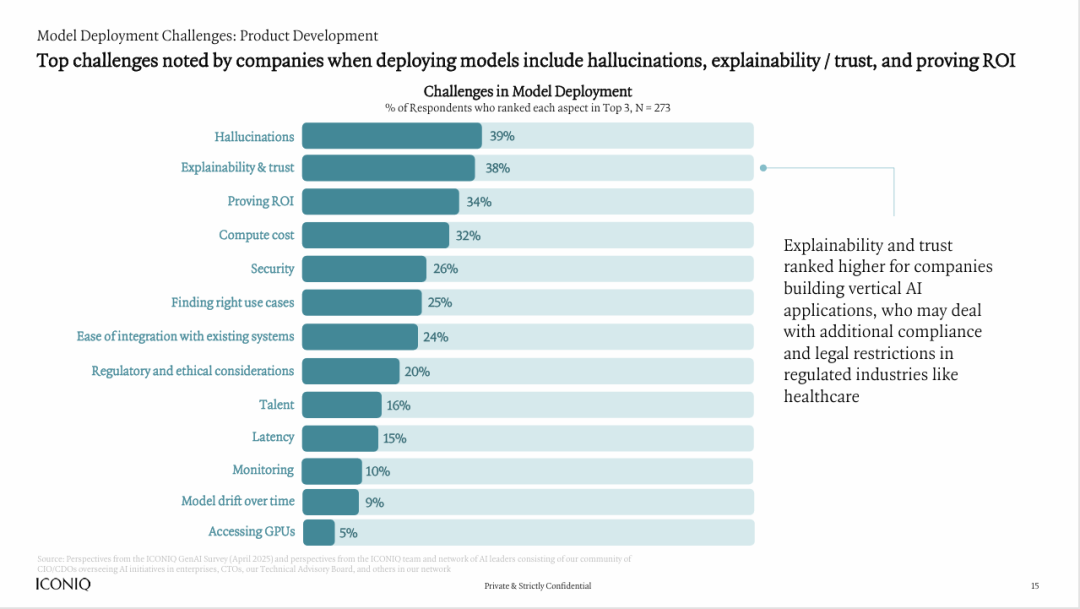

This exposes the first structural dilemma of today's AI business model: a mismatch between value creation and cost consumption. Most companies treat AI as a "feature enhancement" or a "defensive strategy," offering it for free or bundling it into premium plans to attract users, which keeps AI from becoming an independent profit center. High and volatile inference costs (especially those tied to third-party APIs) then penalize the companies whose products are most popular, creating a vicious cycle of "the more successful, the bigger the losses." As a result, proving ROI has become the third-largest challenge in model deployment, after "hallucination" and "explainability."

II: The Boundary Between Strategy and Culture

The second turning point is a clash at the level of organizational DNA. "AI-native" companies and "AI-empowered" companies are heading toward starkly different destinies.

The report clearly contrasts these two types of companies for the first time, revealing startling data differences:

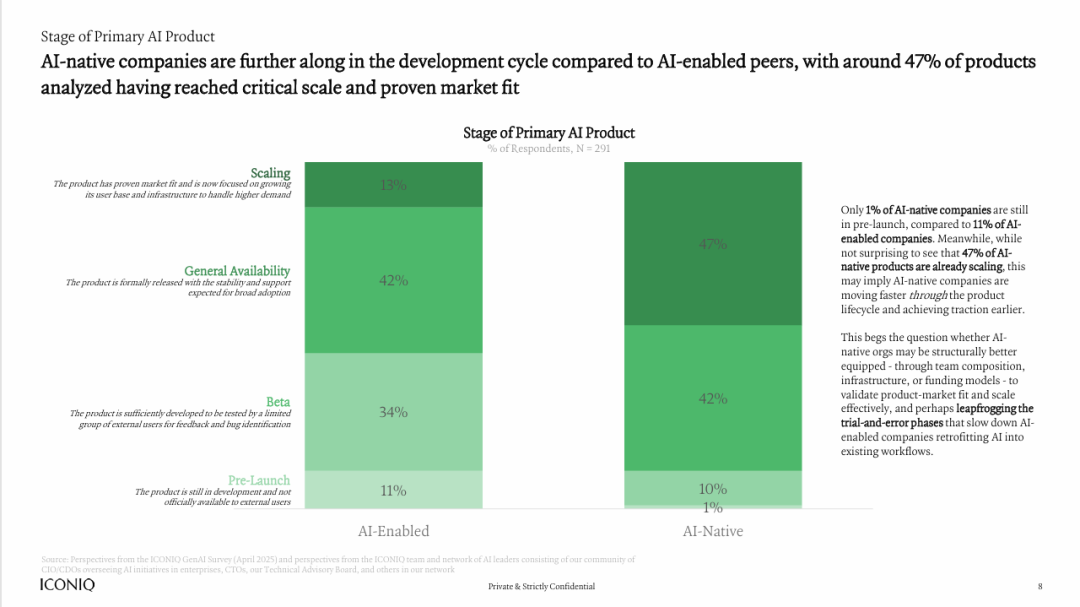

Growth Rate: 47% of AI-native companies' products have entered the mature "scaling" stage, while only 13% of AI-empowered companies have done so.

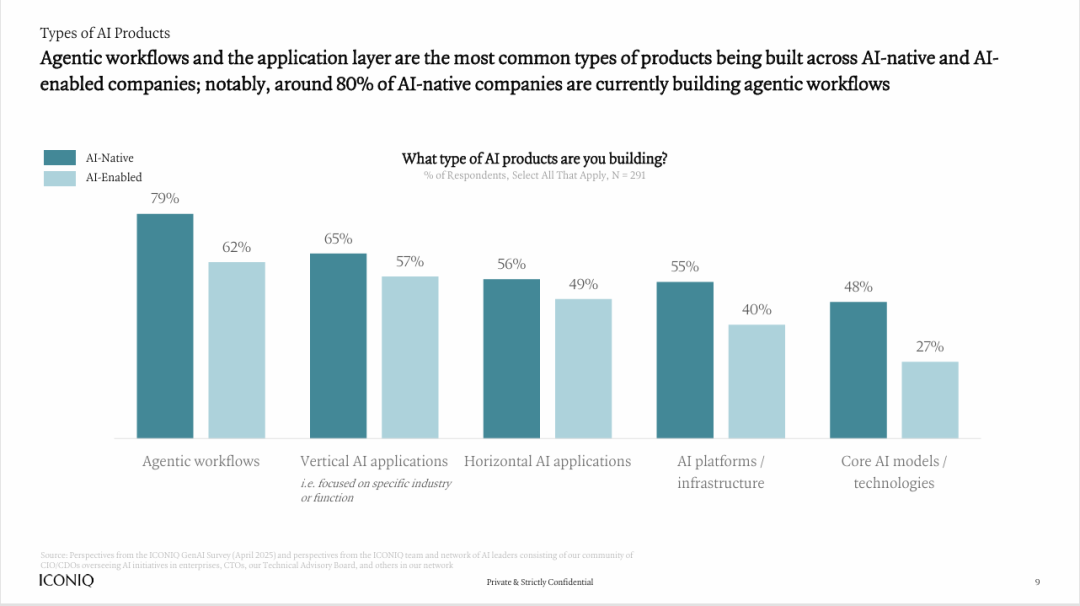

Product Focus: Nearly 80% of AI-native companies are building more complex "agentic workflows," not just functional AI applications.

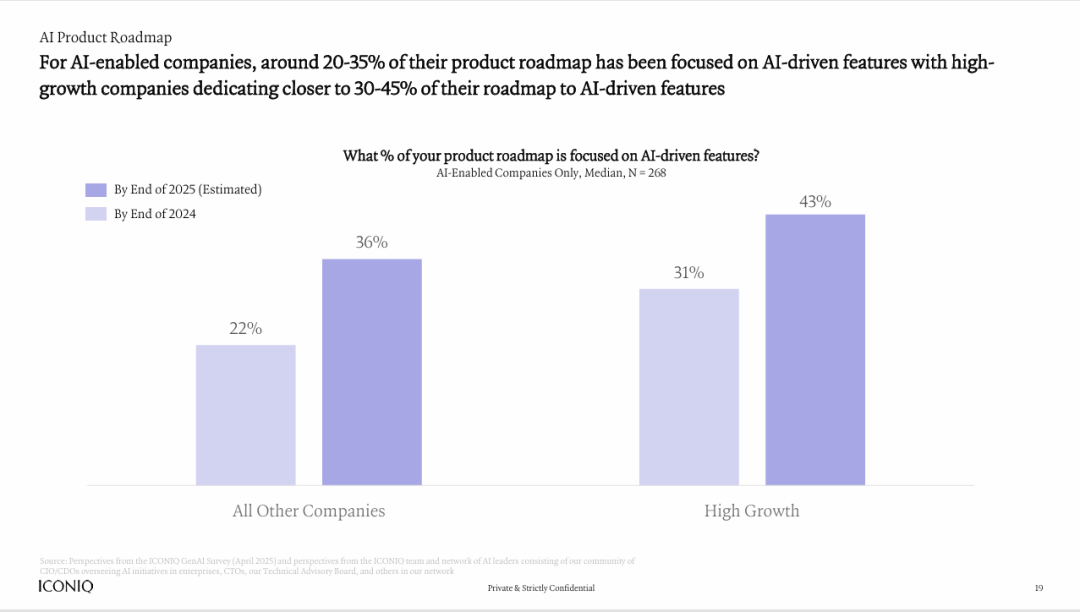

Strategic Commitment: High-growth companies expect AI-driven features to account for 43% of their product roadmaps by the end of 2025, compared with 36% for other companies.

[In-depth Interpretation]

This is not merely a technological gap but also a gap in strategy and culture. AI-native companies view AI as the core engine of their business and the cornerstone of their business model. Their organizational structure, talent composition, and decision-making processes are all tailored for this purpose, enabling them to swiftly "validate product-market fit (PMF) and cross the trial-and-error stage."

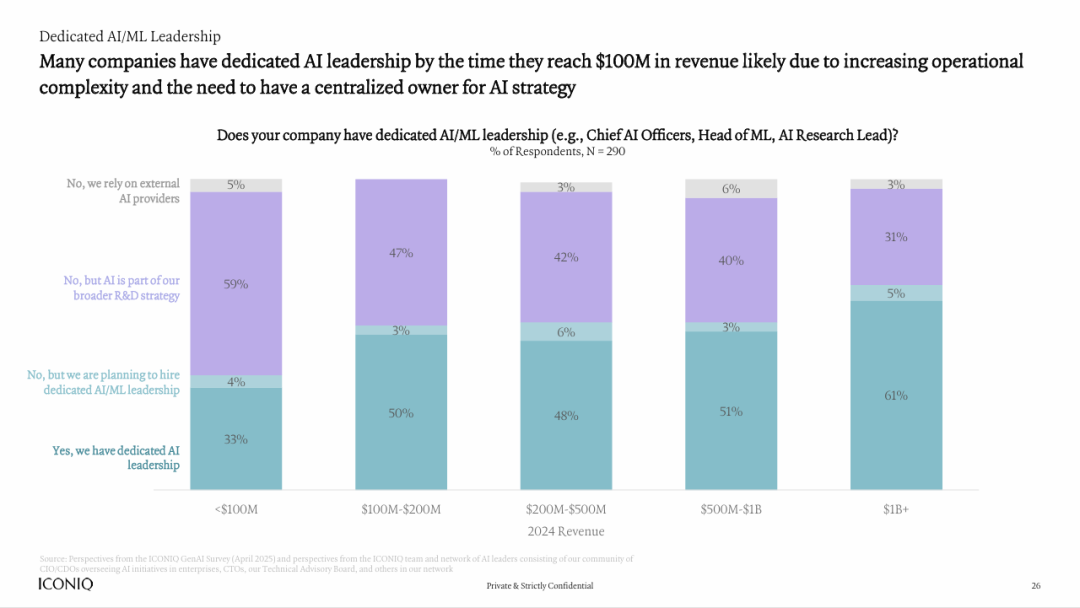

Many traditional "AI-empowered" enterprises, however, still see AI as an "advanced tool" to be "integrated" into existing processes. This mindset creates significant internal friction: Who leads? Where does the budget come from? How should existing workflows change? The report shows that a dedicated AI/ML leadership role becomes mainstream (over 50%) only once a company's revenue exceeds $100 million. Below that threshold, unclear strategy and undefined ownership cause many of these companies to miss valuable windows of opportunity.

III: The "Take-ism" Trap of Tools and Efficiency

The third turning point is reflected in the choice of technology stack. Between "using wheels" and "making wheels," leaders have discovered a delicate balance.

The report devotes a substantial portion to mapping the full AI product development toolchain, and it reveals a trend: "Take-ism" (adopting off-the-shelf tools wholesale) can get you started quickly, but to go far, you need your own judgment and trade-offs.

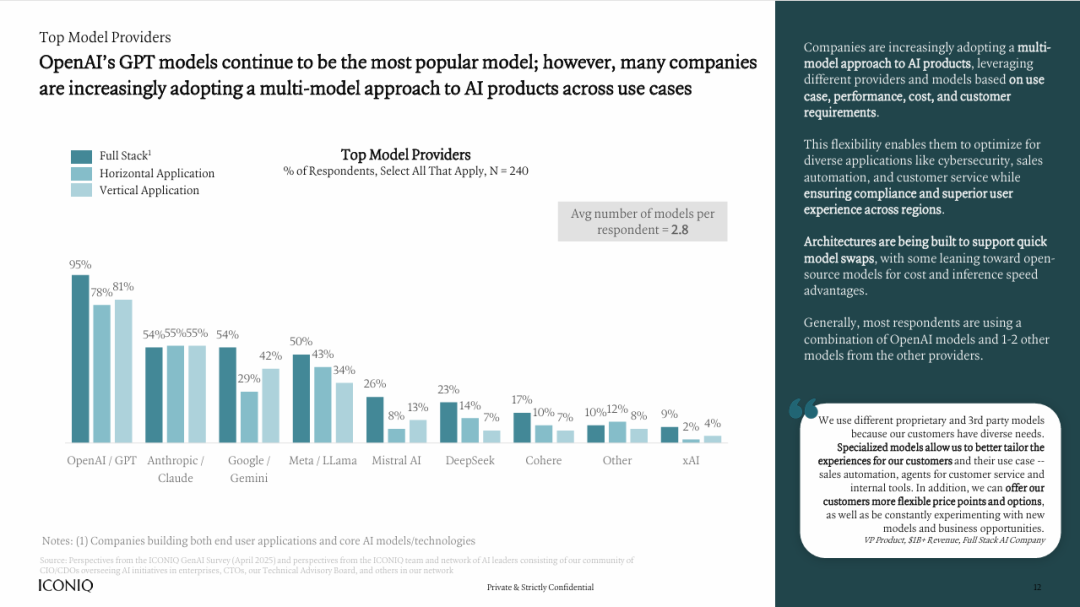

Model Selection: OpenAI remains the top choice, but a multi-model strategy has become the consensus, with teams using an average of 2.8 model providers. Teams now mix models from Google, Anthropic, Meta (Llama), Mistral, and others based on use case, cost, and performance.
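One simple way to picture "combining providers based on use case, cost, and performance" is a router that, for each request type, picks the cheapest model whose evaluation score clears a quality bar. The sketch below is purely illustrative: the model names, prices, and quality scores are hypothetical placeholders, not real providers or figures from the report, and a production router would also weigh latency, rate limits, and data-privacy constraints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str                   # hypothetical label, not a real model ID
    cost_per_1k_tokens: float   # hypothetical price in USD
    quality: dict[str, float]   # hypothetical eval scores per use case, 0-1


# Hypothetical catalog: one premium API model, one mid-tier, one open-weights model.
CATALOG = [
    ModelOption("provider_a_large", 0.010, {"code": 0.92, "summarize": 0.90, "classify": 0.95}),
    ModelOption("provider_b_medium", 0.003, {"code": 0.80, "summarize": 0.88, "classify": 0.93}),
    ModelOption("open_weights_small", 0.0005, {"code": 0.60, "summarize": 0.75, "classify": 0.90}),
]


def route(use_case: str, min_quality: float, catalog: list[ModelOption] = CATALOG) -> ModelOption:
    """Return the cheapest model whose score for use_case meets min_quality."""
    eligible = [m for m in catalog if m.quality.get(use_case, 0.0) >= min_quality]
    if not eligible:
        raise ValueError(f"no model meets {min_quality} for {use_case!r}")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


print(route("classify", 0.90).name)   # open_weights_small
print(route("summarize", 0.85).name)  # provider_b_medium
print(route("code", 0.90).name)       # provider_a_large
```

The design choice here is "quality floor first, then minimize cost": easy tasks fall through to cheaper models, and only demanding ones pay for the premium tier.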

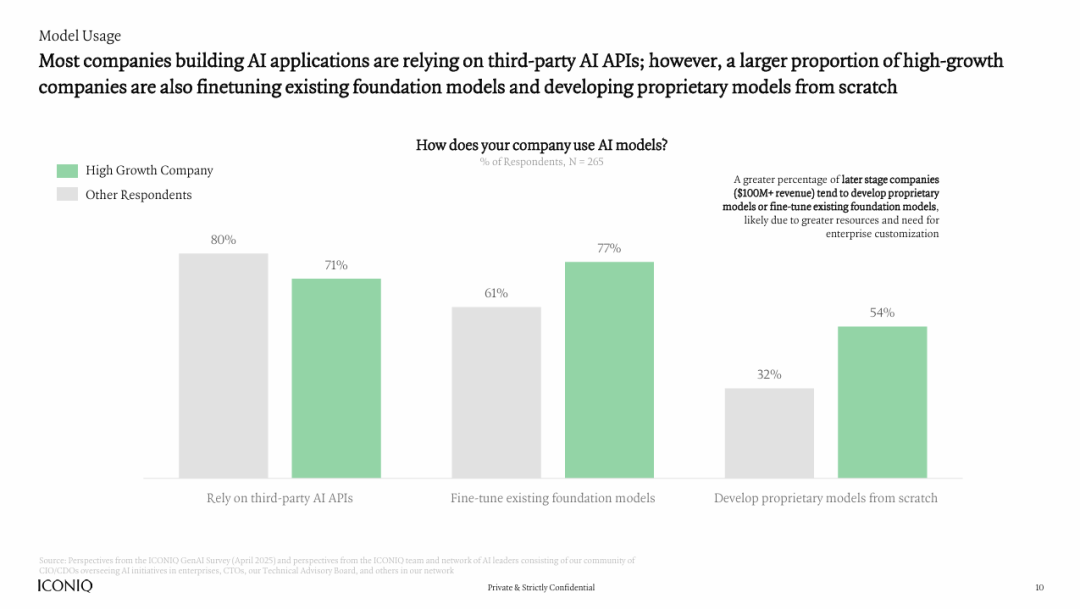

Model Usage: 80% of companies rely on third-party APIs, but among high-growth companies, 77% fine-tune existing models, and 54% even develop proprietary models from scratch, far surpassing other companies.

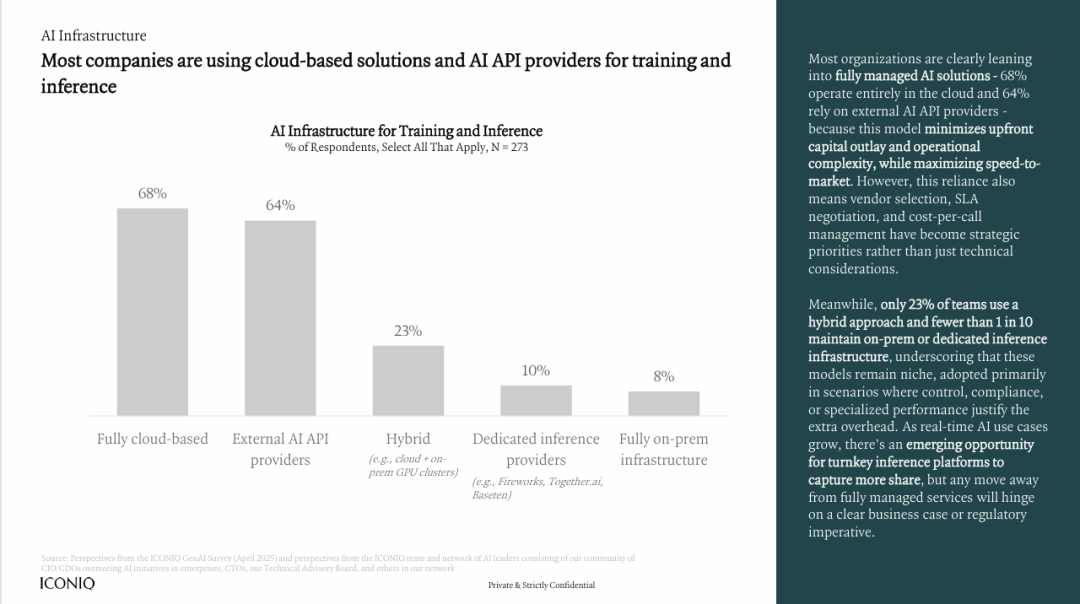

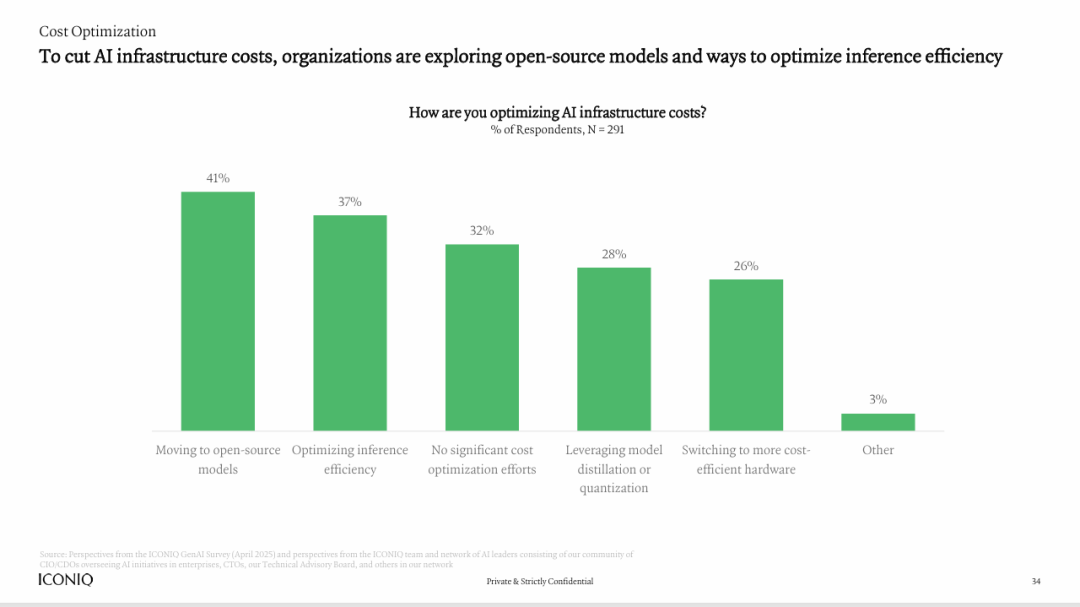

Infrastructure: 68% of companies prefer a "fully cloud-based" setup, but to control costs and meet compliance requirements, switching to open-source models (41%) and optimizing inference efficiency (37%) have become the preferred ways to cut costs and improve efficiency.

[In-depth Interpretation]

The lesson here is that the technological barrier in the AI era is shifting from "having models" to "using models wisely." Relying solely on external APIs leaves you at the mercy of others in terms of costs, customization, and data privacy. Leading "builders" have begun constructing their own moats: they possess superior model evaluation and selection capabilities, more efficient fine-tuning and inference optimization technologies, and more flexible multi-cloud/hybrid cloud deployment architectures. They know when to "buy" and when to "build," which is a core competitive advantage in itself.

Navigating these three pivotal turning points is the core proposition for all AI entrants. The ICONIQ report is akin to a detailed map, but a map cannot replace a guide. When your team faces specific decisions:

How to design a multi-model architecture that balances cost and performance?

How to establish a scientific ROI measurement system to guide AI investment?

How to draw inspiration from the organizational culture of AI-native companies to reshape internal collaboration processes?

The answers to these questions are not found between the lines of the report but in the minds of those who drew this map. This is precisely the core value of Silicon Rabbit. We don't merely deliver information but enable you to engage in direct dialogue with the people who create it, providing you with the most authentic and reliable micro-insights necessary for decision-making.