Ensuring the Accuracy of Autonomous Vehicle Perception

08/28 2025

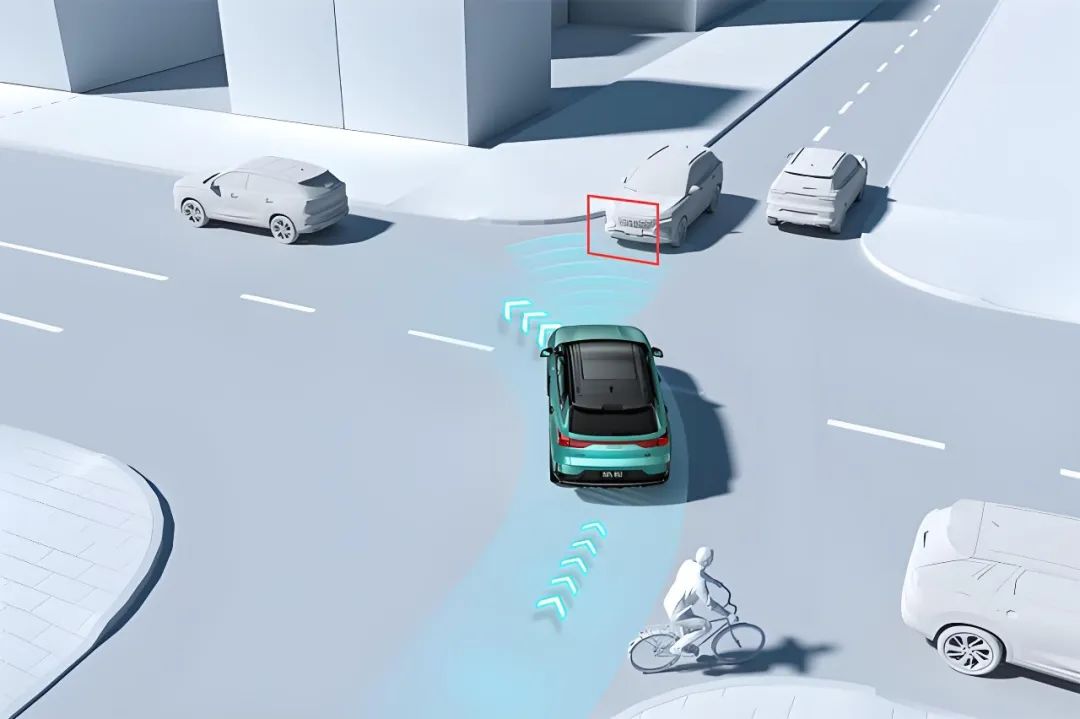

For autonomous vehicles to navigate independently, the paramount task is achieving precise perception of their surroundings—effectively "seeing" the road clearly. How can these vehicles attain precise and reliable perception amidst complex and rapidly evolving road environments?

Definition and Principle of Autonomous Driving Perception

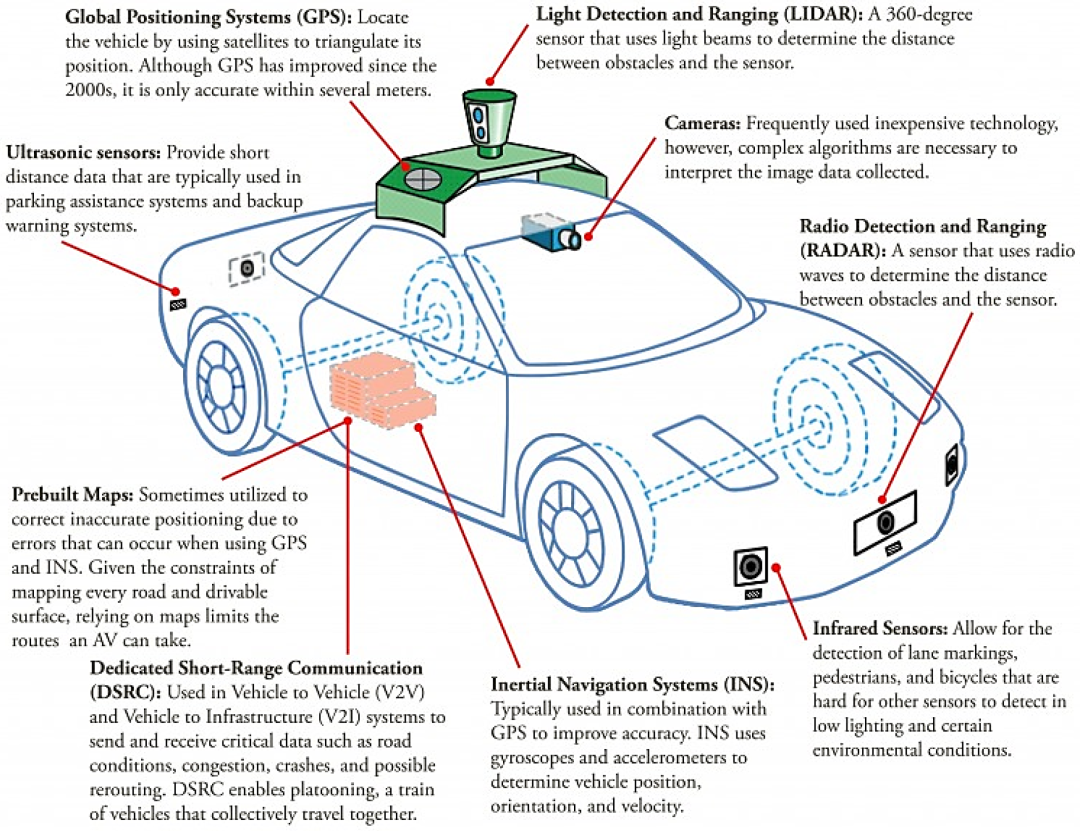

Before diving into the topic, let's understand what perception entails. An autonomous driving perception system is an engineering pipeline that translates the external world into machine-usable information. It relies primarily on sensors such as cameras (visible light, near-infrared), LiDAR, millimeter-wave radar, ultrasonic sensors, positioning systems (GNSS/INS), and onboard inertial measurement units (IMUs). Each sensor excels at different tasks: cameras offer high resolution and can identify colors and signs but struggle at night or under strong reflections; radar measures speed directly and is robust in harsh weather but has low resolution; LiDAR provides precise 3D point clouds that help reconstruct object shapes and distances, though its performance degrades in rain, snow, and fog. Precise perception therefore requires not a single type of sensor but a combination of complementary sensors, a "perception suite." Software and hardware then convert the raw sensor data into a semantic, structured environmental model (e.g., surrounding objects and their positions, speeds, and predicted trajectories) for use by the planning and control modules.

Why fuse data from different sensors? Consider human perception while driving. During the day, we rely heavily on vision, but on rainy nights or in dense fog our vision is impaired, and we compensate with touch, hearing, and experience. The same applies to autonomous vehicles. Every single sensor has "blind spots" and specific failure modes: cameras cannot see well under strong backlight, at night, or behind obstructions; radar struggles to resolve small objects and fine shapes; LiDAR returns become noisy in heavy rain and snow. Fusing multi-sensor data not only makes the information more complete but also enhances safety and robustness through redundancy. Fusion ranges from "early fusion" at the raw data level (e.g., projecting point clouds onto images for joint perception) to "late fusion" at the decision or trajectory level. Much recent research combines deep learning with traditional filtering methods to improve fusion effectiveness and real-time performance.
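The point-cloud-to-image projection mentioned as an example of early fusion can be sketched as follows. This is a minimal illustration, not any particular vendor's pipeline: it assumes a pinhole camera without lens distortion, and the names `project_lidar_to_image`, `T_cam_from_lidar` (a 4x4 extrinsic transform), and `K` (a 3x3 intrinsic matrix) as well as all numeric values are hypothetical.

```python
import numpy as np

def project_lidar_to_image(points_lidar, T_cam_from_lidar, K, image_size):
    """Project Nx3 LiDAR points into pixel coordinates (pinhole model, no distortion).

    Returns (pixels, depths, inside): pixels is Nx2, depths is the z coordinate
    in the camera frame, and inside marks points that land within the image.
    Points behind the camera keep the placeholder pixel (-1, -1).
    """
    points_lidar = np.asarray(points_lidar, dtype=float)
    n = points_lidar.shape[0]
    # Move points from the LiDAR frame to the camera frame (extrinsic parameters).
    homogeneous = np.hstack([points_lidar, np.ones((n, 1))])
    points_cam = (T_cam_from_lidar @ homogeneous.T).T[:, :3]
    depths = points_cam[:, 2]
    in_front = depths > 1e-6
    # Apply the intrinsic matrix and divide by depth to obtain pixel coordinates.
    pixels = np.full((n, 2), -1.0)
    uvw = (K @ points_cam[in_front].T).T
    pixels[in_front] = uvw[:, :2] / uvw[:, 2:3]
    width, height = image_size
    inside = (
        in_front
        & (pixels[:, 0] >= 0) & (pixels[:, 0] < width)
        & (pixels[:, 1] >= 0) & (pixels[:, 1] < height)
    )
    return pixels, depths, inside

# Assumed example values: 1280x720 image, focal length of 1000 px, and a LiDAR
# frame that already coincides with the camera frame (identity extrinsics).
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
T_cam_from_lidar = np.eye(4)
points = np.array([[0.0, 0.0, 10.0],   # straight ahead
                   [1.0, 0.0, 10.0],   # one meter to the side
                   [0.0, 0.0, -5.0]])  # behind the camera
pixels, depths, inside = project_lidar_to_image(points, T_cam_from_lidar, K, (1280, 720))
```

Once each point has a pixel location, a detector can attach image features or semantic labels to it, which is one common way to realize fusion at the raw data level.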

Given the perception system's importance, what are its workflow steps? Essentially, the perception system can be divided into several consecutive sub-tasks: sensor data acquisition, time synchronization and preprocessing, detection and segmentation, tracking and state estimation, scene understanding and prediction, and final output representation. Data acquisition requires suitable hardware interfaces, high bandwidth, and deterministic latency. Time synchronization aligns the sensors' timestamps; without it, the fused world model will exhibit "ghost" objects. Preprocessing includes denoising, distortion correction (camera lens distortion, LiDAR point cloud motion distortion), and coordinate transformation based on intrinsic and extrinsic parameters. Detection typically uses image-based deep networks or point-cloud-based models that output object bounding boxes and categories. The tracking module associates detection results across frames and estimates object speed and acceleration to stabilize subsequent predictions. The prediction module estimates the possible movements of other traffic participants over the next few seconds. Finally, this semantic information is packaged into map layers, obstacle lists, lane information, and so on. The entire process demands real-time performance, determinism, and interpretability (especially in safety scenarios). In practice these modules are interconnected and form a system with feedback loops: for example, perception confidence is passed to the planning layer, which decides whether to degrade functionality or request manual intervention.
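To make the time synchronization step concrete, here is a small sketch that pairs camera frames with the nearest LiDAR sweep by timestamp. It assumes both sensors are already stamped on a shared clock; the function name `match_nearest`, the 20 ms tolerance, and the sample timestamps are illustrative assumptions rather than values from any specific system.

```python
from bisect import bisect_left

def match_nearest(camera_stamps, lidar_stamps, max_offset_s=0.02):
    """Pair each camera timestamp with the nearest LiDAR timestamp.

    Both lists must be sorted ascending and expressed in seconds on a shared
    clock. Returns (camera_index, lidar_index) pairs; camera frames with no
    LiDAR sweep within max_offset_s are dropped instead of being fused with
    stale data.
    """
    pairs = []
    for i, t in enumerate(camera_stamps):
        j = bisect_left(lidar_stamps, t)
        # Only the neighbors on either side of the insertion point can be nearest.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(lidar_stamps)]
        if not candidates:
            continue
        best = min(candidates, key=lambda k: abs(lidar_stamps[k] - t))
        if abs(lidar_stamps[best] - t) <= max_offset_s:
            pairs.append((i, best))
    return pairs

# Assumed rates: camera at roughly 30 Hz, LiDAR at 10 Hz.
camera_stamps = [0.000, 0.033, 0.066, 0.100]
lidar_stamps = [0.000, 0.100]
pairs = match_nearest(camera_stamps, lidar_stamps)
```

Dropping unmatched frames is only one possible policy; a real system might instead interpolate or motion-compensate the slower sensor to the faster sensor's timestamps.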

How to Ensure the Accuracy of Perception?

Now that we understand the perception system's workflow, how do we ensure it is "accurate"? First, let's clarify what "accuracy" covers: localization accuracy (positioning of the vehicle itself), detection accuracy (few missed detections and few false alarms), ranging accuracy (error in the relative position between a target and the vehicle), speed/trajectory estimation accuracy, and semantic/category recognition accuracy.
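Detection accuracy in the sense above (missed detections versus false alarms) is commonly quantified with precision and recall after matching detections to ground truth by intersection over union (IoU). The following is a simplified sketch for 2D axis-aligned boxes; the 0.5 IoU threshold and the greedy matching are assumptions, and benchmark suites typically add confidence ranking and per-class evaluation on top of this.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    iy = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = ix * iy
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def precision_recall(detections, ground_truth, iou_threshold=0.5):
    """Greedy one-to-one matching; detections should be sorted by descending confidence."""
    unmatched = list(range(len(ground_truth)))
    true_positives = 0
    for det in detections:
        best, best_iou = None, iou_threshold
        for g in unmatched:
            value = iou(det, ground_truth[g])
            if value >= best_iou:
                best, best_iou = g, value
        if best is not None:
            unmatched.remove(best)  # each ground-truth object can be matched once
            true_positives += 1
    false_positives = len(detections) - true_positives  # false alarms
    false_negatives = len(unmatched)                     # missed detections
    precision = true_positives / (true_positives + false_positives) if detections else 0.0
    recall = true_positives / (true_positives + false_negatives) if ground_truth else 0.0
    return precision, recall

# Hypothetical frame: two real objects, one good detection and one false alarm.
ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(1, 1, 11, 11), (50, 50, 60, 60)]
precision, recall = precision_recall(detections, ground_truth)
```

In this made-up frame one of two detections is correct and one of two objects is found, so both precision and recall come out at 0.5.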

Hardware-level Guarantees involve redundancy and division of labor. Redundancy means deploying multiple sensors of the same or different types, laid out so that no single failure leaves the system blind. For example, forward long-range radar handles long-distance detection at high speed, lateral short-range radar improves blind spot detection, a roof-mounted LiDAR provides 360° point cloud coverage, and multiple cameras cover different viewing angles and enable stereo vision. Sensor selection and placement are not arbitrary; they are determined by field of view, range, resolution, and vehicle dynamics. Different vendors strike different balances between "full perception" and "simplified perception" depending on cost and business scenario. Robotaxi operators such as Waymo and Cruise typically use richer sensor suites for higher robustness.

Algorithm-level Guarantees include the design of the perception model, fusion strategies, and uncertainty modeling. Traditional filtering methods (Kalman filter, extended Kalman filter, particle filter, etc.) have long been used for target tracking and state estimation. They provide statistically principled estimates when the noise is modeled well; the Kalman filter, for instance, is optimal for linear systems with Gaussian noise. In recent years, deep learning has demonstrated powerful capabilities in object detection, semantic segmentation, and attention-based cross-modal fusion. Practical systems therefore often adopt a "hybrid" strategy: deep networks for detection, classification, and segmentation, and filters for tracking and state smoothing, with measurements weighted by their confidence during fusion. To cope with sensor latency, packet loss, and alignment errors, engineers use timestamp alignment, delay estimation, and delay compensation algorithms to reduce the impact of these errors. Recent research also builds "perceptual uncertainty" directly into network outputs (e.g., confidence scores and covariance matrices), so that the upper-level planning module can choose more conservative actions when uncertainty is high.
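As an illustration of the filtering half of this hybrid strategy, the sketch below implements a one-dimensional constant-velocity Kalman filter of the kind that could smooth a tracked object's range. The class name, the noise parameters, and the synthetic measurements are all assumptions chosen for the example; a production tracker would work in more dimensions and handle data association separately.

```python
import numpy as np

class ConstantVelocityKalman:
    """1D Kalman filter with state [position, velocity] and position-only measurements."""

    def __init__(self, position, velocity=0.0, position_var=1.0,
                 velocity_var=10.0, accel_std=1.0, meas_std=0.5):
        self.x = np.array([position, velocity], dtype=float)  # state estimate
        self.P = np.diag([position_var, velocity_var]).astype(float)  # state covariance
        self.accel_std = accel_std            # assumed unmodeled acceleration (m/s^2)
        self.R = np.array([[meas_std ** 2]])  # measurement noise covariance
        self.H = np.array([[1.0, 0.0]])       # only position is observed

    def predict(self, dt):
        F = np.array([[1.0, dt], [0.0, 1.0]])
        G = np.array([[0.5 * dt ** 2], [dt]])
        Q = G @ G.T * self.accel_std ** 2     # process noise from random acceleration
        self.x = F @ self.x
        self.P = F @ self.P @ F.T + Q

    def update(self, z):
        innovation = np.array([z]) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)  # Kalman gain
        self.x = self.x + K @ innovation
        self.P = (np.eye(2) - K @ self.H) @ self.P

# Synthetic, noise-free measurements of an object moving at 2 m/s, sampled at 10 Hz.
kf = ConstantVelocityKalman(position=0.0)
dt = 0.1
for k in range(1, 51):
    kf.predict(dt)
    kf.update(2.0 * dt * k)
```

The covariance `kf.P` is the kind of uncertainty estimate that can be handed to the planning layer alongside the state itself.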

Calibration and Synchronization are often overlooked but crucial. Each sensor has intrinsic parameters (e.g., camera focal length, distortion coefficients) and extrinsic parameters (the sensor's pose relative to the vehicle coordinate system). Multi-sensor fusion presupposes that these extrinsic parameters are accurate to the millimeter level; otherwise, fused point clouds and image projections will be offset from each other, leading to misjudgment or tracking failure. Calibration is divided into static calibration (performed in laboratories or parking lots using calibration boards) and online self-calibration (fine-tuning the extrinsic parameters while the vehicle is operating to compensate for drift caused by vibration or temperature). Time synchronization (unifying sensor sampling times) is equally important: even with precise spatial alignment, unsynchronized clocks cause the same object to appear at significantly different relative positions in different sensors in high-speed scenarios. Engineering practices include regular automatic calibration, startup self-tests, and fault-tolerant strategies for calibration drift.
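A quick back-of-the-envelope calculation shows why both effects matter. The two helper functions below are hypothetical, and the input numbers (a 30 m/s relative speed, a 50 ms clock skew, a 0.5° mounting error at 50 m range) are assumed purely for illustration.

```python
import math

def offset_from_time_skew(relative_speed_mps, time_skew_s):
    """Apparent position offset when two sensors observe a moving object at different times."""
    return relative_speed_mps * time_skew_s

def offset_from_angular_error(range_m, angle_error_deg):
    """Lateral offset at a given range caused by a rotational extrinsic error."""
    return range_m * math.tan(math.radians(angle_error_deg))

# 30 m/s relative speed with 50 ms of unsynchronized delay.
skew_offset_m = offset_from_time_skew(30.0, 0.05)

# 0.5 degree yaw error in a sensor mount, observed on an object 50 m away.
angular_offset_m = offset_from_angular_error(50.0, 0.5)
```

Under these assumed numbers the time skew alone displaces the object by about 1.5 m, and the small mounting error by roughly 0.44 m, either of which is enough to misplace an object relative to a lane boundary.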

Data and Validation Strategies are another vital dimension for ensuring accuracy. Autonomous driving perception training and testing heavily rely on large-scale, diverse, annotated datasets. Over the past decade, public datasets like KITTI, nuScenes, and Waymo Open Dataset have emerged, containing annotations under different cities, weather conditions, and sensor combinations, serving as the cornerstone for algorithm development and benchmarking. Besides offline datasets, the industry relies heavily on simulation (including high-fidelity physical rendering and sensor noise modeling) to supplement training samples for rare or dangerous scenarios. Validation is not just offline metric evaluation (e.g., mean average precision, positioning error, etc.) but more importantly, system-level testing, assessing perception performance under real vehicle conditions through closed-loop simulations, scenario replays in enclosed venues, and extensive real-world road testing. Only through multi-level, multi-scenario testing can we develop engineering-level confidence in a perception system's accuracy and robustness.

How to handle rare but dangerous scenarios in engineering implementation? Two common approaches exist: one is "expanding data coverage" through targeted collection and simulation synthesis of extreme weather, rare interactions, and occlusion scenarios; the other is "safe degradation strategies," where when perception confidence decreases or significant inconsistencies are detected (e.g., LiDAR detects an object but the camera does not confirm it, and the confidence is low), the system triggers more conservative planning strategies such as deceleration, pulling over, entering a restricted mode, or requesting manual intervention. Incorporating "system uncertainty" as input for active decision-making is a crucial design concept to ensure safety. Creating an autonomous driving system that is both bold and cautious is essentially the art of engineering trade-offs.
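The safe degradation idea can be sketched as a small decision function. The mode names and the confidence thresholds below are hypothetical placeholders; real systems derive such thresholds from safety analysis and validation data rather than fixed constants.

```python
from enum import Enum

class DrivingMode(Enum):
    NOMINAL = "nominal"
    CAUTIOUS = "cautious"          # e.g., decelerate, enter a restricted mode
    MINIMAL_RISK = "minimal_risk"  # e.g., pull over or request manual intervention

def select_mode(confidence, sensors_agree,
                cautious_below=0.7, minimal_risk_below=0.4):
    """Map perception confidence and cross-sensor consistency to a planning mode.

    confidence is assumed to be a score in [0, 1]; sensors_agree is False when,
    for example, LiDAR reports an object that the camera does not confirm.
    """
    if confidence < minimal_risk_below:
        return DrivingMode.MINIMAL_RISK
    if confidence < cautious_below or not sensors_agree:
        return DrivingMode.CAUTIOUS
    return DrivingMode.NOMINAL

mode_clear = select_mode(confidence=0.9, sensors_agree=True)
mode_conflict = select_mode(confidence=0.9, sensors_agree=False)
mode_degraded = select_mode(confidence=0.3, sensors_agree=True)
```

Treating a sensor disagreement as a reason for caution even when the headline confidence is high reflects the idea of using system uncertainty as an active input to decision-making.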

Moreover, the perception system's interpretability and traceability are vital, especially in accidents or anomalies. This requires designing a comprehensive logging system to record the confidence level of each perception module step, sensor status, calibration history, and anomaly alerts. This not only aids in fault root cause analysis but also provides data support for algorithm improvement. In strict validation scenarios, manufacturers save key intermediate representations of perception decisions (e.g., feature maps, point cloud projections, tracking history) for post-event review and regulatory compliance.
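One possible shape for such a log entry is a structured record written as one JSON line per module step. The field names and values below are assumptions made up for illustration, not a standard schema.

```python
import json
from dataclasses import dataclass, asdict, field

@dataclass
class PerceptionLogRecord:
    timestamp_s: float        # time of the perception cycle on the vehicle clock
    module: str               # e.g., "detection", "tracking", "fusion"
    confidence: float         # confidence reported by this module step
    sensor_status: dict       # per-sensor health flags
    calibration_version: str  # identifier of the calibration in use
    alerts: list = field(default_factory=list)  # anomaly alerts raised this cycle

def to_json_line(record):
    """Serialize a record to a single JSON line with stable key order."""
    return json.dumps(asdict(record), sort_keys=True)

record = PerceptionLogRecord(
    timestamp_s=1724832000.25,
    module="tracking",
    confidence=0.62,
    sensor_status={"front_camera": "ok", "roof_lidar": "degraded"},
    calibration_version="hypothetical-cal-0042",
    alerts=["lidar_camera_mismatch"],
)
line = to_json_line(record)
restored = json.loads(line)
```

Keeping the calibration identifier in every record makes it possible to tell, during post-event review, whether an anomaly coincided with a calibration change.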

Future Developments of perception systems are expected to include further progress in end-to-end deep learning for certain perception sub-tasks, with multi-modal networks that combine vision and point clouds improving detection accuracy. Sensor hardware is evolving as well: imaging radar, solid-state LiDAR, and higher-resolution millimeter-wave radar are being commercialized, offering more options. At the engineering level, the emphasis is on "coordinated optimization of software and hardware," in which sensor capabilities, data bandwidth, computing power, and algorithm design are considered together to balance cost, performance, and safety. At the operational level, large volumes of real-world fleet data are fed back for continuous model iteration and edge deployment updates, improving the system's long-term performance on the road.

Final Words

In summary, the autonomous driving perception system is not just a single "camera" or "LiDAR" but an engineering system comprising multiple sensors, complex algorithms, rigorous calibration, and system-level verification. To achieve "accuracy," it is essential to focus on hardware redundancy, algorithm fusion, uncertainty modeling, calibration and synchronization, and large-scale data validation. There are no shortcuts on the technical path. Only through repeated engineering verification, in-depth anomaly analysis, and rigorous design of system safety boundaries can the ability to "see the world" be turned into a usable, controllable, and verifiable autonomous driving product.
