Empowering Domestic AI with "FP8 Precision"

![]() 09/01 2025

09/01 2025

![]() 495

495

The burgeoning demand for computational power in training and inference of large AI models (LLMs), alongside the bottlenecks of power consumption, memory bandwidth, and computational efficiency faced by traditional precisions (such as FP16/BF16), underscores the need for innovation.

FP8 (8-bit floating-point number), supported by industry giants like NVIDIA, AMD, and Intel, is emerging as a standard feature in next-generation AI chips.

What strategic significance does FP8 hold for domestic AI chips? It represents not just technological catch-up but a pivotal opportunity to shape future AI computing standards and build an autonomous ecosystem.

01 The Inevitable Shift to the "8-bit Era" in AI Computing

Today, the insatiable thirst for computational power of large models and the "memory wall" dilemma are growing more acute. Model parameters have expanded exponentially, from hundreds of billions to trillions, with the "expansion speed" of large models described as "uncontrollable": from 340 million parameters in BERT to 175 billion in GPT-3, and now to models with over a trillion parameters, with the parameter scale increasing by approximately 240 times every two years. In contrast, GPU memory growth has been sluggish—the P100 in 2016 had only 12GB, and the H200 in 2023 has only 141GB, a tenfold increase in seven years.

This disparity between "frantic parameter growth and sluggish memory growth" makes training large models a "memory nightmare." For instance, training GPT-3 requires 650GB of memory just for model parameters, plus gradients, momentum, and other training states (about 1950GB), plus activation values from intermediate calculations (366GB), totaling over 2900GB. However, a single A100 GPU only has 80GB of memory, necessitating multi-GPU parallelism, which introduces new bottlenecks due to increased communication between GPUs.

The mismatch between "storage" and "computing" performance leads to high memory access latency and low efficiency due to the memory wall. The memory wall refers to the phenomenon where the limited capacity or transmission bandwidth of memory severely restricts CPU performance. Over the past 20 years, computing power has increased by 90,000 times, a remarkable improvement. While memory has evolved from DDR to GDDR6x, capable of being used in graphics cards, gaming terminals, and high-performance computing, and interface standards have upgraded from PCIe1.0a to NVLink3.0, communication bandwidth has only grown 30 times, a sluggish increase compared to computational power.

Under the von Neumann architecture, data transmission leads to significant power loss. Data must constantly be "read and written" between memory units and processing units, consuming a lot of transmission power. According to Intel research, when semiconductor processes reach 7nm, data transfer power consumption can reach 35pJ/bit, accounting for 63.7% of total power consumption. The power loss from data transmission is becoming increasingly severe, limiting chip development speed and efficiency, creating a "power wall" problem.

FP8's advantage lies in its perfect balance of efficiency and precision.

02 FP8: Beyond Simply "Reducing Bits" - Technical Connotation and Design Challenges

The FP8 format is becoming a key technology driving AI computing to the next stage with its unique comprehensive advantages. Its core value can be summarized as achieving an unprecedented balance between efficiency and precision.

First, FP8 brings extreme improvements in computational and storage efficiency. Compared to the widely used FP16, FP8 directly reduces memory usage by 50%, significantly alleviating memory bandwidth pressure, enabling the processing of larger models or higher-batch data under the same hardware conditions. This not only directly increases inference and training speeds but also significantly reduces system power consumption, crucial for AI applications deployed on edge devices or large-scale data centers. Especially in AI accelerators where memory bandwidth is often a bottleneck, the effective application of FP8 can unleash greater computational power potential.

Secondly, FP8 maintains acceptable numerical precision while compressing data. Compared to pure integer formats (such as INT8), FP8 retains the representation characteristics of floating-point numbers, with a larger dynamic range and more flexible precision allocation, making it better suited for operations sensitive to numerical ranges, such as gradient calculations and activation function outputs during training. This means that despite having fewer bits, FP8 can still maintain good model training stability and final precision, reducing performance loss due to quantization, and thus avoiding a significant decline in model quality while improving efficiency.

Moreover, FP8 is rapidly gaining industry-wide support. Starting with NVIDIA's Hopper architecture and H100 GPU, multiple chip vendors have already provided native support for FP8 in their latest hardware. At the software and framework level, mainstream deep learning frameworks (such as TensorFlow and PyTorch) and inference engines are actively integrating FP8 operator libraries and optimization toolchains. This comprehensive standardization from hardware to software, from training to inference, provides developers with a unified and efficient programming environment, further promoting the adoption of FP8 in various models.

FP8 is not simply a "bit reduction" based on existing formats but a system-level optimization tailored to the real needs of AI computing. It addresses the urgent need for efficient resource utilization in high-performance computing while maintaining model precision, rapidly becoming the new standard in AI training and inference.

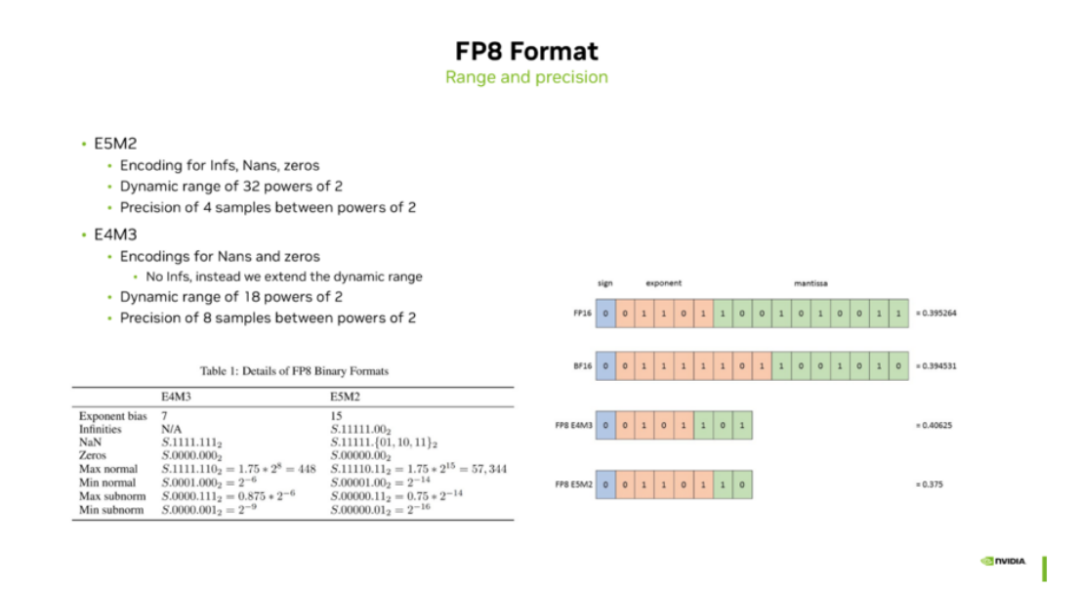

Two mainstream formats of FP8:

- E5M2 (5-bit exponent, 2-bit mantissa): Large dynamic range, suitable for saving forward propagation activations and weights.

- E4M3 (4-bit exponent, 3-bit mantissa): Higher precision, suitable for saving backpropagation gradients.

While the global tech community discusses GPT-5's breakthroughs, Chinese AI company DeepSeek announced the V3.1 model in the comments, noting that "UE8M0FP8 is designed for the upcoming next-generation domestic chip."

What are the core challenges for domestic chips to implement FP8? Firstly, efficiently supporting mixed operations of the two formats in computing units like ALUs and Tensor Cores. Secondly, ensuring compilers, operator libraries, drivers, etc., seamlessly map AI framework instructions to FP8 hardware; designing advanced quantization toolchains to minimize precision loss when converting models from high precision to FP8. Finally, compatibility with the existing ecosystem, supporting smooth migration and mixed-precision computing for formats like FP16/BF16.

03 Opportunities for Domestic AI Chips with FP8: Breakthrough and Surpassing

FP8, as a relatively new standard, presents a smaller gap between domestic and international levels compared to the CUDA ecosystem, offering a rare window of opportunity.

Using NVIDIA B100's FP8 computational power (14 petaFLOPS) as a benchmark, under the same process conditions, the DeepSeark architecture is expected to achieve a 20%–30% increase in effective computational power through collaborative optimization of algorithms and hardware. It's worth noting that this estimate is based on publicly available technical documentation, and actual performance will be subject to tape-out testing.

In terms of ecosystem construction, Huawei's Ascend 910B currently mainly supports FP16 and BF16 formats, lagging at least one generation behind in FP8 support. DeepSeek's "model-as-chip" strategy draws on Google TPU's success but still needs to address practical engineering challenges, such as adapting the PyTorch quantization toolchain.

From a supply chain security perspective, the FP8 format has relatively low precision requirements, reducing dependence on transistor density, which becomes a differentiated advantage under domestic process conditions.

Taking Huawei's Ascend series NPU as an example, the dedicated instruction set designed for FP8 increases throughput by 40% on typical ResNet models while reducing energy consumption per unit of computational power to one-third of the original. This breakthrough stems from two major innovations: optimizing metastable circuit design at the hardware level to solve low-bit gradient disappearance issues; and supporting mixed-precision training in the software framework, allowing different network layers to flexibly switch between FP8 and other formats. Notably, domestic chips like Cambricon's Thinker 590 have pioneered the integration of FP8 acceleration modules, marking the beginning of autonomous architectures leading precision innovation.

DeepSeek's strategic layout reveals a crucial logic: when NVIDIA's A100/H100 face shortages due to export controls, the deep ties between domestic chip vendors and downstream users form a unique competitive advantage. New products like Biren Technology's BR104 and MXIC's MXC500 use FP8 as a core selling point, with self-developed compiler toolchains enabling seamless integration from model conversion to deployment.

Currently, while international standard organizations like IEEE P754 are advancing FP8 standardization, industrial applications have clearly outpaced standard setting. AI platforms like Baidu PaddlePaddle and Zhipu AI have led in providing default support for the FP8 format, and in open-source ecosystems like PyTorch, automated mixed-precision libraries like AutoFP8 are constantly emerging. This bottom-up technology diffusion driven by practical applications has opened a crucial window of opportunity for Chinese enterprises to compete for global discourse power in AI basic software and hardware. If three key breakthroughs can be achieved within the next year and a half—deep integration of FP8 into mainstream frameworks, demonstration and validation of high-quality open-source models, and a domestic hardware adaptation rate exceeding 50%—China will have the capacity to lead an ecological transformation with FP8 as the consensus.

However, the full promotion of FP8 still faces practical obstacles. Some industry viewpoints question its stability under complex data distributions, and compatibility issues of operators between different platforms remain unresolved. Facing these challenges, enterprises like Moore Threads have proposed a "progressive upgrade" approach, using containerization technology to ensure compatibility of existing models and introducing a dynamic partitioning mechanism for intelligent selection of different precision strategies during inference. This progressive path not only alleviates the core pain points of high migration costs and risks but also garners broader support and a time window for FP8 to transition from the experimental stage to large-scale deployment.

DeepSeek's technical route proves that algorithm-hardware collaborative innovation may be more feasible than simply pursuing process advancements, which could be the first step towards the autonomy of Chinese AI computational power.